Yi-Hsuan Hsiao

Department of Electrical Engineering and Computer Science, Massachusetts Institute of Technology

Controlled Flight of an Insect-Scale Flapping-Wing Robot via Integrated Onboard Sensing and Computation

Feb 09, 2026

Abstract:Aerial insects can effortlessly navigate dense vegetation, whereas similarly sized aerial robots typically depend on offboard sensors and computation to maintain stable flight. This disparity restricts insect-scale robots to operation within motion capture environments, substantially limiting their applicability to tasks such as search-and-rescue and precision agriculture. In this work, we present a 1.29-gram aerial robot capable of hovering and tracking trajectories with solely onboard sensing and computation. The combination of a sensor suite, estimators, and a low-level controller achieved centimeter-scale positional flight accuracy. Additionally, we developed a hierarchical controller in which a human operator provides high-level commands to direct the robot's motion. In a 30-second flight experiment conducted outside a motion capture system, the robot avoided obstacles and ultimately landed on a sunflower. This level of sensing and computational autonomy represents a significant advancement for the aerial microrobotics community, further opening opportunities to explore onboard planning and power autonomy.

Hovering Flight of Soft-Actuated Insect-Scale Micro Aerial Vehicles using Deep Reinforcement Learning

Feb 17, 2025

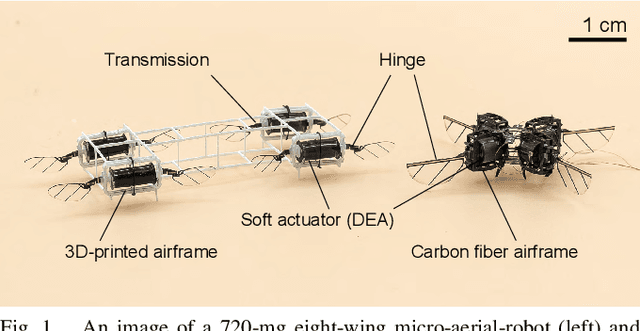

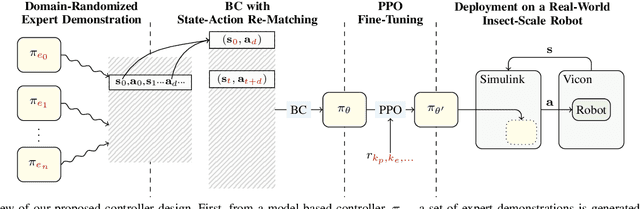

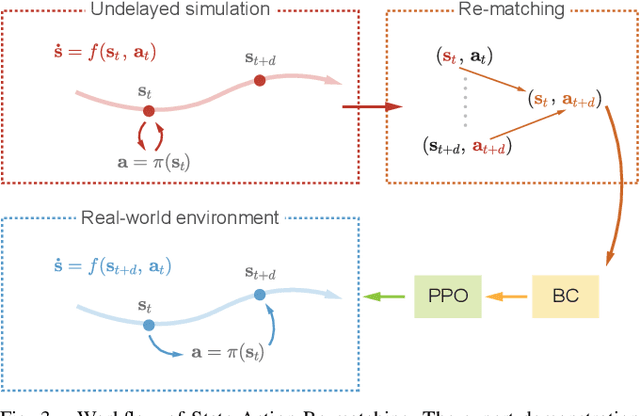

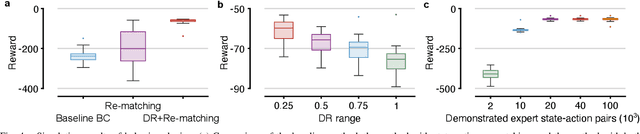

Abstract:Soft-actuated insect-scale micro aerial vehicles (IMAVs) pose unique challenges for designing robust and computationally efficient controllers. At the millimeter scale, fast robot dynamics ($\sim$ms), together with system delay, model uncertainty, and external disturbances, significantly affect flight performance. Here, we design a deep reinforcement learning (RL) controller that addresses system delay and uncertainties. To initialize this neural network (NN) controller, we propose a modified behavior cloning (BC) approach with state-action re-matching to account for delay and domain-randomized expert demonstration to tackle uncertainty. Then we apply proximal policy optimization (PPO) to fine-tune the policy during RL, enhancing performance and smoothing commands. In simulations, our modified BC substantially increases the mean reward compared to baseline BC, and RL with PPO improves flight quality and reduces command fluctuations. We deploy this controller on two different insect-scale aerial robots that weigh 720 mg and 850 mg, respectively. The robots demonstrate multiple successful zero-shot hovering flights, with the longest lasting 50 seconds and root-mean-square errors of 1.34 cm in lateral direction and 0.05 cm in altitude, marking the first end-to-end deep RL-based flight on soft-driven IMAVs.

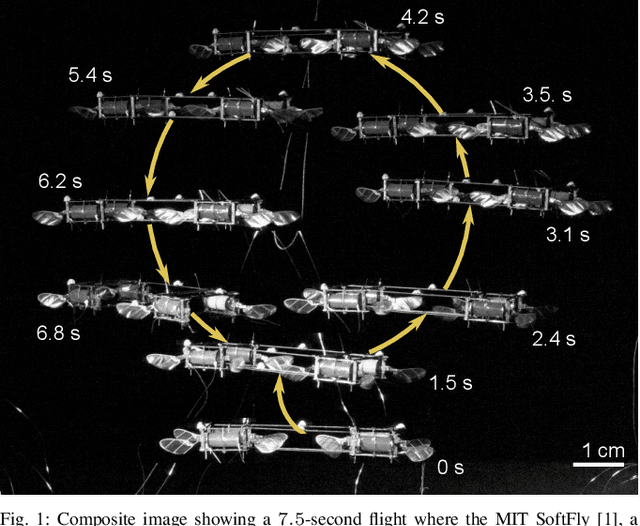

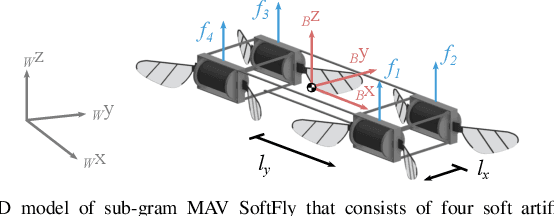

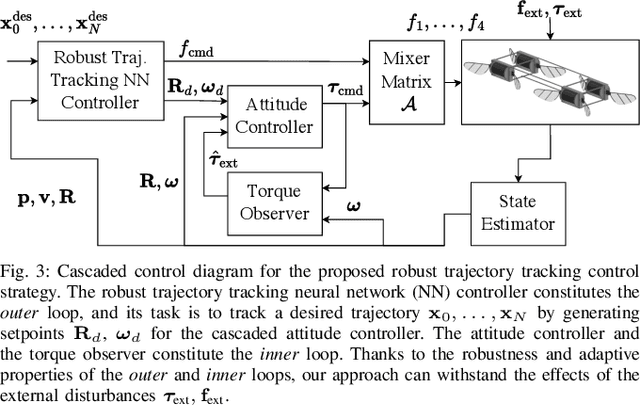

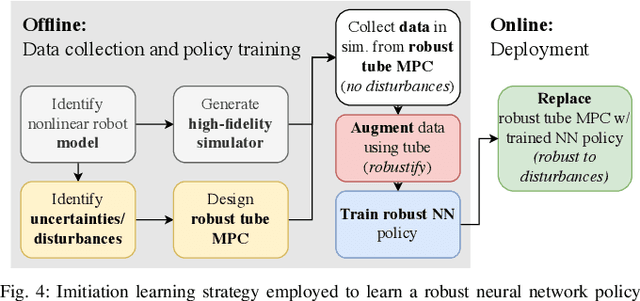

Robust, High-Rate Trajectory Tracking on Insect-Scale Soft-Actuated Aerial Robots with Deep-Learned Tube MPC

Sep 26, 2022

Abstract:Accurate and agile trajectory tracking in sub-gram Micro Aerial Vehicles (MAVs) is challenging, as the small scale of the robot induces large model uncertainties, demanding robust feedback controllers, while the fast dynamics and computational constraints prevent the deployment of computationally expensive strategies. In this work, we present an approach for agile and computationally efficient trajectory tracking on the MIT SoftFly, a sub-gram MAV (0.7 grams). Our strategy employs a cascaded control scheme, where an adaptive attitude controller is combined with a neural network policy trained to imitate a trajectory tracking robust tube model predictive controller (RTMPC). The neural network policy is obtained using our recent work, which enables the policy to preserve the robustness of RTMPC, but at a fraction of its computational cost. We experimentally evaluate our approach, achieving position Root Mean Square Errors lower than 1.8 cm even in the more challenging maneuvers, obtaining a 60% reduction in maximum position error compared to our previous work, and demonstrating robustness to large external disturbances.
