Ye Deng

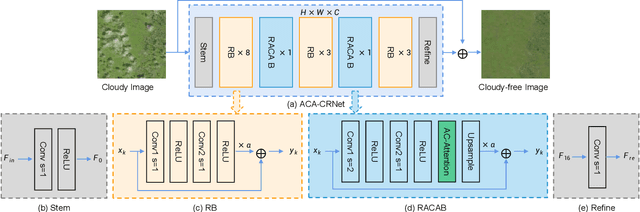

Attentive Contextual Attention for Cloud Removal

Nov 20, 2024

Abstract: Cloud cover can significantly hinder the use of remote sensing images for Earth observation, prompting urgent advancements in cloud removal technology. Recently, deep learning strategies have shown strong potential in restoring cloud-obscured areas. These methods use convolution to extract intricate local features and attention mechanisms to gather long-range information, improving the overall comprehension of the scene. However, a common drawback of these approaches is that the resulting images often suffer from blurriness, artifacts, and inconsistencies. This is partly because attention mechanisms apply weights to all features based on generalized similarity scores, which can inadvertently introduce noise and irrelevant details from cloud-covered areas. To overcome this limitation and better capture relevant distant context, we introduce a novel approach named Attentive Contextual Attention (AC-Attention). This method enhances conventional attention mechanisms by dynamically learning data-driven attentive selection scores, enabling it to filter out noise and irrelevant features effectively. By integrating the AC-Attention module into the DSen2-CR cloud removal framework, we significantly improve the model's ability to capture essential distant information, leading to more effective cloud removal. Our extensive evaluation on various datasets shows that our method outperforms existing ones in image reconstruction quality. Additionally, we conducted ablation studies by integrating AC-Attention into multiple existing methods and widely used network architectures. These studies demonstrate the effectiveness and adaptability of AC-Attention and reveal its ability to focus on relevant features, thereby improving the overall performance of the networks. The code is available at https://github.com/huangwenwenlili/ACA-CRNet.

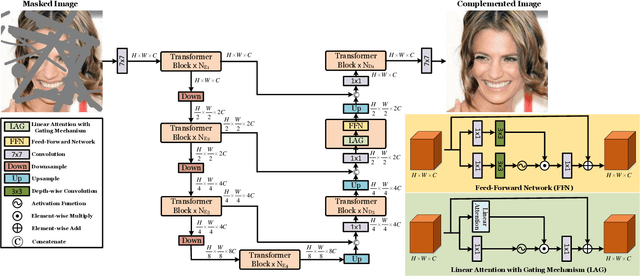

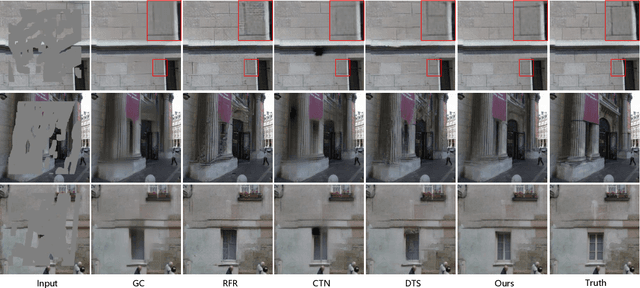

T-former: An Efficient Transformer for Image Inpainting

May 19, 2023

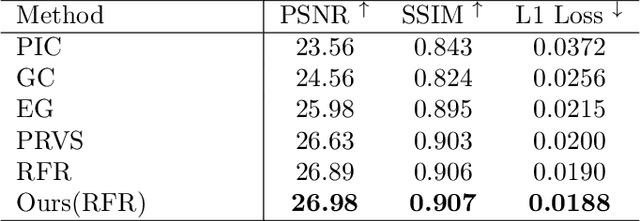

Abstract: Benefiting from powerful convolutional neural networks (CNNs), learning-based image inpainting methods have made significant breakthroughs over the years. However, some properties of CNNs (e.g., local prior, spatially shared parameters) limit their performance on broken images with diverse and complex forms. Recently, a class of attention-based network architectures, called transformers, has shown strong performance in natural language processing and high-level vision tasks. Compared with CNNs, attention operators are better at long-range modeling and have dynamic weights, but their computational complexity is quadratic in spatial resolution, which makes them less suitable for applications involving higher-resolution images, such as image inpainting. In this paper, we design a novel attention whose cost is linear in the resolution, derived from a Taylor expansion. Based on this attention, we design a network called T-former for image inpainting. Experiments on several benchmark datasets demonstrate that our proposed method achieves state-of-the-art accuracy while maintaining a relatively low number of parameters and computational complexity. The code can be found at https://github.com/dengyecode/T-former_image_inpainting.
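To illustrate how a Taylor expansion can make attention linear in the number of pixels, here is a minimal sketch. It is not taken from the T-former code and may differ from the paper's exact formulation. It assumes the similarity weight exp(q·k) is replaced by its first-order approximation 1 + q·k, with queries and keys scaled to unit length so the weights stay non-negative. The function names are hypothetical.

```python
import math

def normalize(v):
    # Scale a vector to unit length so that 1 + q.k stays in [0, 2].
    n = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / n for x in v]

def quadratic_taylor_attention(Q, K, V):
    # Reference form, O(N^2): weight_ij = 1 + q_i . k_j for every pair.
    dv = len(V[0])
    out = []
    for q in Q:
        w = [1.0 + sum(a * b for a, b in zip(q, k)) for k in K]
        z = sum(w)
        out.append([sum(wj * v[c] for wj, v in zip(w, V)) / z
                    for c in range(dv)])
    return out

def linear_taylor_attention(Q, K, V):
    # Same output in O(N): the sums over j do not depend on the query,
    # so sum_j v_j, sum_j k_j and K^T V are computed once and reused.
    d, dv, n = len(K[0]), len(V[0]), len(K)
    v_sum = [sum(v[c] for v in V) for c in range(dv)]
    k_sum = [sum(k[r] for k in K) for r in range(d)]
    ktv = [[sum(k[r] * v[c] for k, v in zip(K, V)) for c in range(dv)]
           for r in range(d)]
    out = []
    for q in Q:
        # Normalizer: sum_j (1 + q . k_j) = N + q . sum_j k_j
        z = n + sum(a * b for a, b in zip(q, k_sum))
        out.append([(v_sum[c] + sum(q[r] * ktv[r][c] for r in range(d))) / z
                    for c in range(dv)])
    return out
```

Both functions compute the same approximation. The second one simply reorders the sums, so its cost grows with N rather than N², which is what matters for high-resolution inputs.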

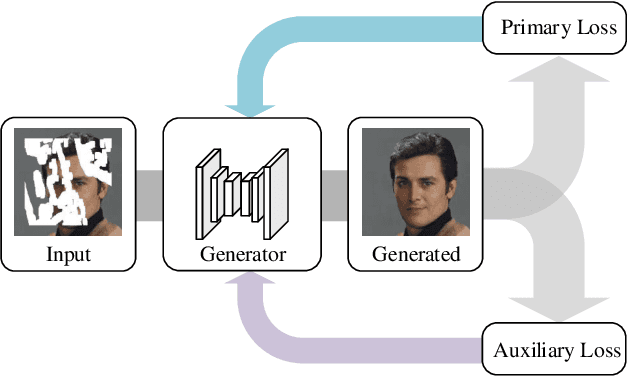

Auxiliary Loss Adaptation for Image Inpainting

Nov 22, 2021

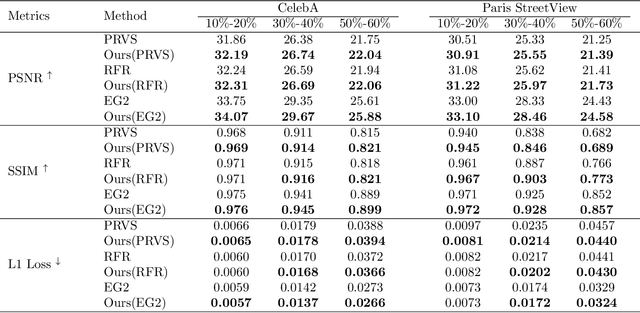

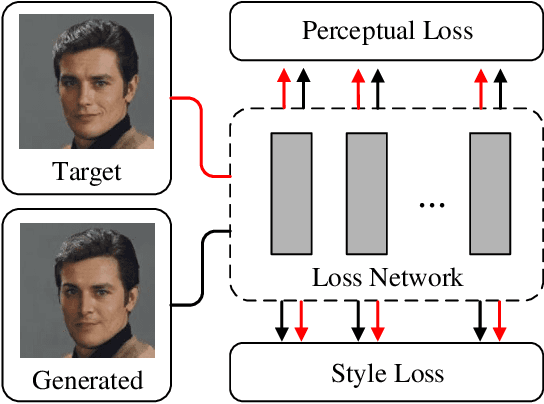

Abstract: Auxiliary losses commonly used in image inpainting lead to better reconstruction performance by incorporating prior knowledge of missing regions. However, fully exploiting the potential of auxiliary losses usually requires a lot of effort: improperly weighted auxiliary losses distract the model from the inpainting task, and the effectiveness of an auxiliary loss may vary during the training process. Hence, the design of auxiliary losses takes strong domain expertise. To mitigate this problem, we introduce the Auxiliary Loss Adaptation for Image Inpainting (ALA) algorithm, which dynamically adjusts the parameters of the auxiliary loss. Our method is based on the principle that the best auxiliary loss is the one that most improves the main loss after several steps of gradient descent. We examine two commonly used auxiliary losses in inpainting and use ALA to adapt their parameters. Experimental results show that ALA yields more competitive inpainting results than fixed auxiliary losses. In particular, by simply combining an auxiliary loss with ALA, existing inpainting methods can achieve improved performance without explicitly incorporating delicate network design or structural prior knowledge.

Distributed Generative Adversarial Net

Nov 19, 2019

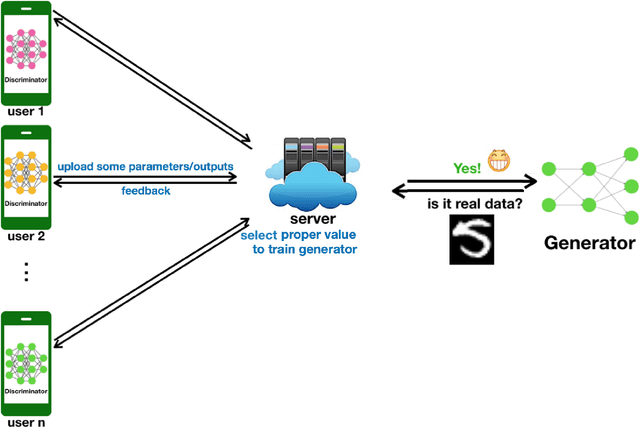

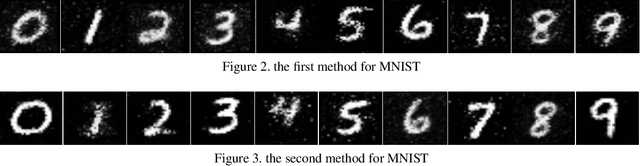

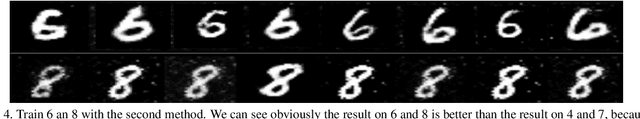

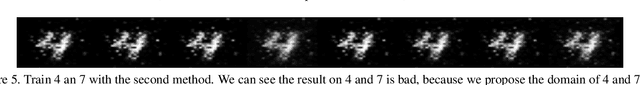

Abstract: Recently, the Generative Adversarial Network (GAN) has become a hot topic. Considering the application of GANs in multi-user environments, we propose Distributed-GAN. It enables multiple users to train with their own data locally and generates more diverse samples. Users do not need to share data with each other, which avoids privacy leakage. In recent years, commercial companies have launched cloud platforms based on artificial intelligence to provide models for users who lack computing power. We hope our work can inspire these companies to provide more powerful AI services.
