Yash Belhe

Repurposing Marigold for Zero-Shot Metric Depth Estimation via Defocus Blur Cues

May 23, 2025

Abstract: Recent monocular metric depth estimation (MMDE) methods have made notable progress towards zero-shot generalization. However, they still exhibit a significant performance drop on out-of-distribution datasets. We address this limitation by injecting defocus blur cues at inference time into Marigold, a pre-trained diffusion model for zero-shot, scale-invariant monocular depth estimation (MDE). Our method effectively turns Marigold into a metric depth predictor in a training-free manner. To incorporate defocus cues, we capture two images with a small and a large aperture from the same viewpoint. To recover metric depth, we then optimize the metric depth scaling parameters and the noise latents of Marigold at inference time using gradients from a loss function based on the defocus-blur image formation model. We compare our method against existing state-of-the-art zero-shot MMDE methods on a self-collected real dataset, showing quantitative and qualitative improvements.
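The abstract does not spell out its defocus-blur image formation model. As a rough illustration only, the textbook thin-lens model relates blur size to absolute depth as sketched below; the function name, parameters, and example values are hypothetical and are not taken from the paper.

```python
def blur_diameter(depth, focus_distance, focal_length, f_number):
    """Thin-lens circle-of-confusion diameter on the sensor.

    All lengths share one unit. This is the standard textbook model,
    assumed here for illustration; it is not the authors' loss.
    """
    aperture = focal_length / f_number  # aperture diameter
    return (aperture * abs(depth - focus_distance) / depth
            * focal_length / (focus_distance - focal_length))


# Same scene point at 4 m, lens focused at 2 m, 50 mm focal length (in mm).
small_aperture_blur = blur_diameter(4000.0, 2000.0, 50.0, 16.0)  # f/16
large_aperture_blur = blur_diameter(4000.0, 2000.0, 50.0, 2.0)   # f/2
```

Under this model the blur diameter depends on the absolute distance to the scene point, which is why a small-aperture and large-aperture pair can constrain metric scale in a way that a scale-invariant depth prediction alone cannot.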

A Differentiable Wave Optics Model for End-to-End Computational Imaging System Optimization

Dec 13, 2024

Abstract: End-to-end optimization, which simultaneously optimizes optics and algorithms, has emerged as a powerful data-driven method for computational imaging system design. This method achieves joint optimization through backpropagation by incorporating differentiable optics simulators to generate measurements and algorithms to extract information from measurements. However, due to high computational costs, it is challenging to model both aberration and diffraction in light transport for end-to-end optimization of compound optics. Therefore, most existing methods compromise physical accuracy by neglecting wave optics effects or off-axis aberrations, which raises concerns about the robustness of the resulting designs. In this paper, we propose a differentiable optics simulator that efficiently models both aberration and diffraction for compound optics. Using the simulator, we conduct end-to-end optimization on scene reconstruction and classification. Experimental results demonstrate that both lenses and algorithms adopt different configurations depending on whether wave optics is modeled. We also show that systems optimized without wave optics suffer from performance degradation when wave optics effects are introduced during testing. These findings underscore the importance of accurate wave optics modeling in optimizing imaging systems for robust, high-performance applications.

Volumetrically Consistent 3D Gaussian Rasterization

Dec 04, 2024

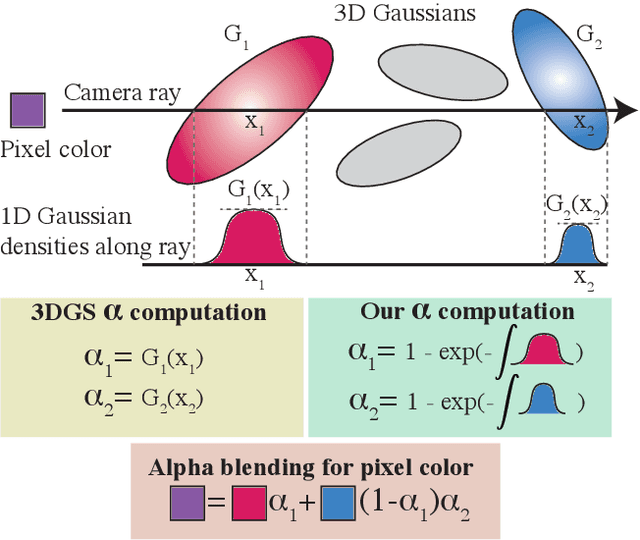

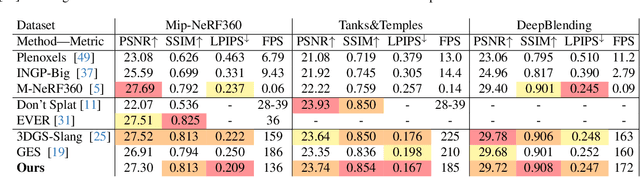

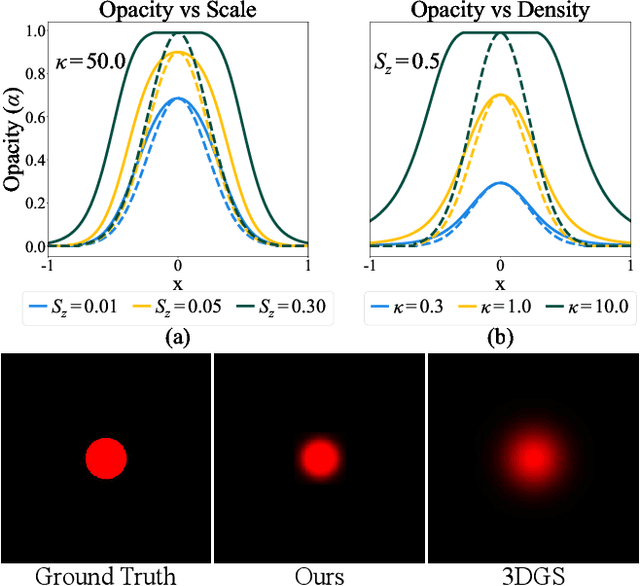

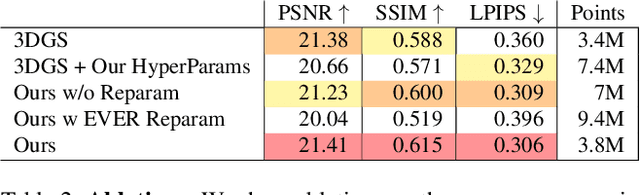

Abstract: Recently, 3D Gaussian Splatting (3DGS) has enabled photorealistic view synthesis at high inference speeds. However, its splatting-based rendering model makes several approximations to the rendering equation, reducing physical accuracy. We show that splatting and its approximations are unnecessary, even within a rasterizer; we instead volumetrically integrate 3D Gaussians directly to compute the transmittance across them analytically. We use this analytic transmittance to derive more physically-accurate alpha values than 3DGS, which can directly be used within their framework. The result is a method that more closely follows the volume rendering equation (similar to ray-tracing) while enjoying the speed benefits of rasterization. Our method represents opaque surfaces with higher accuracy and fewer points than 3DGS. This enables it to outperform 3DGS for view synthesis (measured in SSIM and LPIPS). Being volumetrically consistent also enables our method to work out of the box for tomography. We match the state-of-the-art 3DGS-based tomography method with fewer points.
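One plausible reading of "volumetrically integrate 3D Gaussians directly" is the closed-form line integral of a Gaussian density along a ray. The sketch below assumes an unbounded ray, a symmetric precision (inverse covariance) matrix, and a hypothetical `peak_density` parameter; the paper's exact formulation may differ.

```python
import math


def quad_form(u, P, v):
    """Return u^T P v for 3-vectors u, v and a 3x3 matrix P (nested lists)."""
    return sum(u[i] * P[i][j] * v[j] for i in range(3) for j in range(3))


def gaussian_line_integral(origin, direction, mean, precision, peak_density):
    """Integrate peak_density * exp(-0.5 (x-mean)^T P (x-mean)) along
    x = origin + t * direction for t in (-inf, inf).

    Completing the square in t gives a 1D Gaussian integral in closed form.
    Assumes `precision` is symmetric positive definite.
    """
    r = [origin[i] - mean[i] for i in range(3)]
    a = quad_form(direction, precision, direction)
    b = quad_form(direction, precision, r)
    c = quad_form(r, precision, r)
    return peak_density * math.sqrt(2.0 * math.pi / a) * math.exp(-0.5 * (c - b * b / a))


def alpha_from_gaussian(origin, direction, mean, precision, peak_density):
    """Opacity of one Gaussian along the ray: 1 - transmittance."""
    optical_depth = gaussian_line_integral(origin, direction, mean, precision, peak_density)
    return 1.0 - math.exp(-optical_depth)
```

Because the transmittance is `exp(-optical_depth)`, the resulting alpha always lies in [0, 1) and could in principle be dropped into an alpha-compositing rasterizer in place of a splatted opacity.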

A Construct-Optimize Approach to Sparse View Synthesis without Camera Pose

May 06, 2024

Abstract: Novel view synthesis from a sparse set of input images is a challenging problem of great practical interest, especially when camera poses are absent or inaccurate. Direct optimization of camera poses and usage of estimated depths in neural radiance field algorithms usually do not produce good results because of the coupling between poses and depths, and inaccuracies in monocular depth estimation. In this paper, we leverage the recent 3D Gaussian splatting method to develop a novel construct-and-optimize method for sparse view synthesis without camera poses. Specifically, we construct a solution progressively by using monocular depth and projecting pixels back into the 3D world. During construction, we optimize the solution by detecting 2D correspondences between training views and the corresponding rendered images. We develop a unified differentiable pipeline for camera registration and adjustment of both camera poses and depths, followed by back-projection. We also introduce a novel notion of an expected surface in Gaussian splatting, which is critical to our optimization. These steps enable a coarse solution, which can then be low-pass filtered and refined using standard optimization methods. We demonstrate results on the Tanks and Temples and Static Hikes datasets with as few as three widely-spaced views, showing significantly better quality than competing methods, including those with approximate camera pose information. Moreover, our results improve with more views and outperform previous InstantNGP and Gaussian Splatting algorithms even when using half the dataset.
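The step of "projecting pixels back into the 3D world" using monocular depth can be illustrated with a generic pinhole-camera sketch. The function names, the intrinsics `fx, fy, cx, cy`, and the camera-to-world convention below are assumptions for illustration, not details given in the abstract.

```python
def back_project(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) with z-depth `depth` to a 3D point in camera space,
    assuming an ideal pinhole camera with no lens distortion."""
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    return (x, y, depth)


def camera_to_world(point, rotation, translation):
    """Apply a camera-to-world pose: world = R @ point + t.

    `rotation` is a 3x3 nested list and `translation` a 3-vector.
    """
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# A pixel 500 px right of the principal point, at depth 2, with fx = fy = 500.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
camera_point = back_project(820.0, 240.0, 2.0, 500.0, 500.0, 320.0, 240.0)
world_point = camera_to_world(camera_point, identity, (1.0, 0.0, 0.0))
```

Since every back-projected point depends on both the depth value and the pose, errors in one propagate into the other, which is the coupling between poses and depths that the abstract identifies as the difficulty.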
