Yaohua Sun

Performance analysis of satellite-terrestrial integrated radio access networks based on stochastic geometry

Apr 15, 2024

Abstract: To enhance coverage and improve service continuity, the satellite-terrestrial integrated radio access network (STIRAN) is seen as an essential trend in the development of 6G. However, there is still a lack of theoretical analysis of its coverage performance. To fill this gap, we first establish a system model to characterize a typical scenario where low-earth-orbit (LEO) satellites and terrestrial base stations are both deployed. Then, stochastic geometry is utilized to analyze the downlink coverage probability under both shared-frequency and distinct-frequency settings. Specifically, we derive mathematical expressions for the distribution of the distance from the serving station to the typical user and for the association probability under the maximum bias power selection strategy (Max-BPR). Taking into account real-world satellite antenna beamforming patterns in the two system scenarios, we derive the downlink coverage probabilities in terms of parameters such as base station density and orbital inclination. Finally, the correctness of the theoretical derivations is verified through simulations, and the influence of network design parameters on the downlink coverage probability is analyzed.
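
The abstract above describes checking stochastic-geometry coverage expressions against simulation. As a rough illustration of that general workflow only, the sketch below estimates downlink coverage probability by Monte Carlo for a much simpler, terrestrial-only setting and compares it with the well-known closed form for that setting. Everything here is an assumption for illustration and is not the paper's STIRAN model: base stations form a homogeneous Poisson point process, the user connects to the nearest station, fading is Rayleigh, noise is ignored, and the path-loss exponent is 4. The function names and default parameters are hypothetical.

```python
import math
import random


def closed_form_coverage(threshold):
    """Known SIR coverage probability for the assumed toy model
    (nearest-BS association, Rayleigh fading, no noise, path-loss exponent 4)."""
    s = math.sqrt(threshold)
    return 1.0 / (1.0 + s * (math.pi / 2 - math.atan(1.0 / s)))


def simulate_sir_coverage(threshold, density=1.0, alpha=4.0,
                          radius=8.0, trials=3000, seed=0):
    """Monte Carlo estimate of P(SIR > threshold) at a user placed at the origin.

    For a homogeneous planar Poisson point process, the values
    density * pi * r_k**2 (r_k = distance of the k-th nearest point)
    are the arrival times of a unit-rate Poisson process, so ordered
    distances can be drawn from cumulative exponential samples.
    """
    rng = random.Random(seed)
    scale = density * math.pi
    max_t = scale * radius ** 2  # ignore interferers beyond `radius`
    covered = 0
    for _ in range(trials):
        # Nearest base station serves the user.
        t = rng.expovariate(1.0)
        r0 = math.sqrt(t / scale)
        signal = rng.expovariate(1.0) * r0 ** (-alpha)  # Rayleigh fading power
        # All farther base stations inside the window interfere.
        interference = 0.0
        t += rng.expovariate(1.0)
        while t < max_t:
            r = math.sqrt(t / scale)
            interference += rng.expovariate(1.0) * r ** (-alpha)
            t += rng.expovariate(1.0)
        if interference == 0.0 or signal / interference > threshold:
            covered += 1
    return covered / trials


if __name__ == "__main__":
    T = 1.0  # SIR threshold of 0 dB
    print("simulated  :", simulate_sir_coverage(T))
    print("closed form:", closed_form_coverage(T))
```

The paper's analysis additionally covers LEO satellites, biased association, and antenna beam patterns, none of which this toy model captures; the sketch only shows how a simulated estimate can be set against a derived expression.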

Joint Beam Scheduling and Beamforming Design for Cooperative Positioning in Multi-beam LEO Satellite Networks

Apr 01, 2024

Abstract: Cooperative positioning with multiple low earth orbit (LEO) satellites is promising in providing location-based services and enhancing satellite-terrestrial communication. However, positioning accuracy is greatly affected by inter-beam interference and satellite-terrestrial topology geometry. To select the best combination of satellites from visible ones and suppress inter-beam interference, this paper explores the utilization of flexible beam scheduling and beamforming of multi-beam LEO satellites that can adjust beam directions toward the same earth-fixed cell to send positioning signals simultaneously. By leveraging the Cramér-Rao lower bound (CRLB) to characterize user Time Difference of Arrival (TDOA) positioning accuracy, the concerned problem is formulated, aiming at optimizing user positioning accuracy under beam scheduling and beam transmission power constraints. To deal with the mixed-integer-nonconvex problem, we decompose it into an inner beamforming design problem and an outer beam scheduling problem. For the former, we first prove the monotonic relationship between user positioning accuracy and its perceived signal-to-interference-plus-noise ratio (SINR) to reformulate the problem, and then semidefinite relaxation (SDR) is adopted for beamforming design. For the outer problem, a heuristic low-complexity beam scheduling scheme is proposed, whose core idea is to schedule users with lower channel correlation to mitigate inter-beam interference while seeking a proper satellite-terrestrial topology geometry. Simulation results verify the superior positioning performance of our proposed positioning-oriented beamforming and beam scheduling scheme, and it is shown that average user positioning accuracy is improved by 17.1% and 55.9% when the beam transmission power is 20 dBW, compared to conventional beamforming and beam scheduling schemes, respectively.

Joint Network Function Placement and Routing Optimization in Dynamic Software-defined Satellite-Terrestrial Integrated Networks

Oct 21, 2023

Abstract: Software-defined satellite-terrestrial integrated networks (SDSTNs) are seen as a promising paradigm for achieving high resource flexibility and global communication coverage. However, low-latency service provisioning is still challenging due to the fast variation of network topology and the limited onboard resources of low earth orbit satellites. To address this issue, we study service provisioning in SDSTNs via joint optimization of virtual network function (VNF) placement and routing planning, with network dynamics characterized by a time-evolving graph. Aiming at minimizing average service latency, the corresponding problem is formulated as an integer nonlinear programming problem under resource, VNF deployment, and time-slotted flow constraints. Since exhaustive search is intractable, we transform the primary problem into an integer linear programming problem by introducing auxiliary variables and then propose a Benders decomposition based branch-and-cut (BDBC) algorithm. Towards practical use, a time expansion-based decoupled greedy (TEDG) algorithm is further designed with rigorous complexity analysis. Extensive experiments demonstrate the optimality of the BDBC algorithm and the low complexity of the TEDG algorithm. Meanwhile, it is indicated that they can improve the number of completed services within a configuration period by up to 58% and reduce the average service latency by up to 17% compared to baseline schemes.

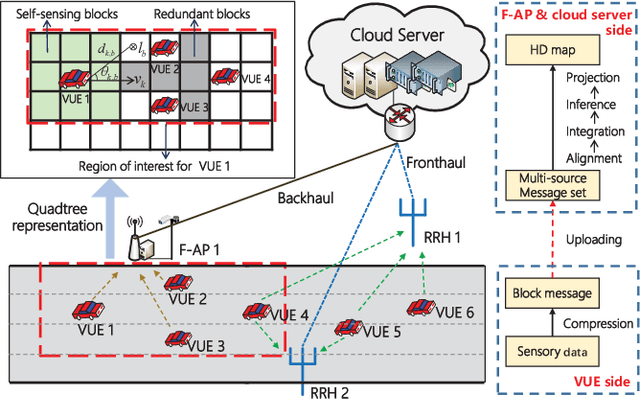

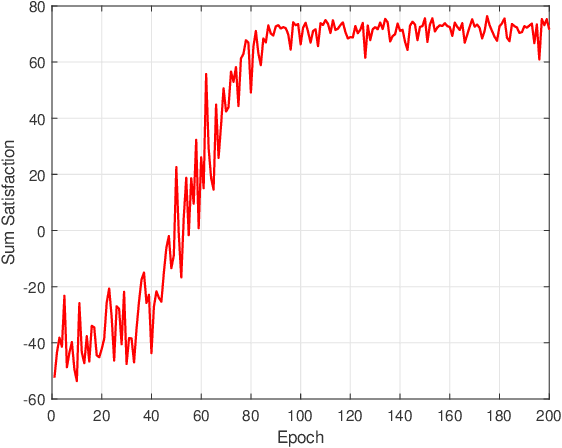

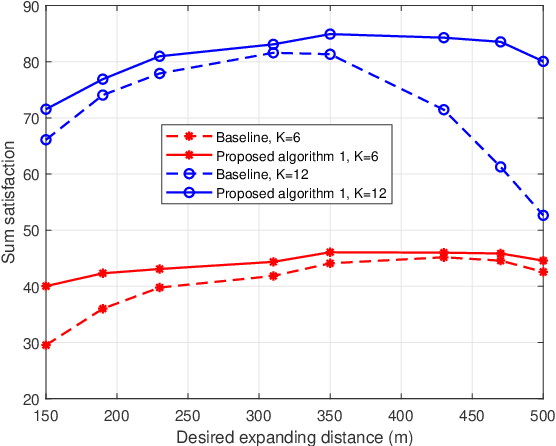

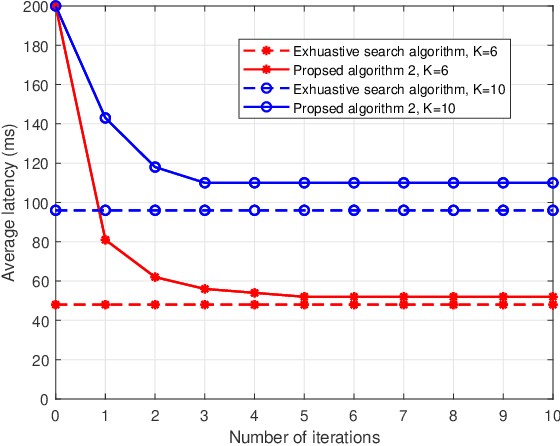

Joint Sensing, Communication, and Computation Resource Allocation for Cooperative Perception in Fog-Based Vehicular Networks

Dec 12, 2021

Abstract: To enlarge the perception range and improve the reliability of individual autonomous vehicles, cooperative perception has received much attention. However, considering the high volume of shared messages, limited bandwidth and computation resources in vehicular networks become bottlenecks. In this paper, we investigate how to balance the volume of shared messages and constrained resources in fog-based vehicular networks. To this end, we first characterize the sum satisfaction of cooperative perception, taking into account its spatial-temporal value and latency performance. Next, the sensing block message, communication resource block, and computation resource are jointly allocated to maximize the sum satisfaction of cooperative perception, while satisfying the maximum latency and sojourn time constraints of vehicles. Owing to its non-convexity, we decouple the original problem into two separate sub-problems and devise corresponding solutions. Simulation results demonstrate that our proposed scheme can effectively boost the sum satisfaction of cooperative perception compared with existing baselines.

Application of Machine Learning in Wireless Networks: Key Techniques and Open Issues

Sep 24, 2018

Abstract: As a key technique for enabling artificial intelligence, machine learning (ML) has been shown to be capable of solving complex problems without explicit programming. Motivated by its successful applications to many practical tasks like image recognition and recommendation systems, both industry and the research community have advocated the applications of ML in wireless communication. This paper comprehensively surveys the recent advances of the applications of ML in wireless communication, which are classified as: resource management in the MAC layer, networking and mobility management in the network layer, and localization in the application layer. The applications in resource management further include power control, spectrum management, backhaul management, cache management, beamformer design, and computation resource management, while ML-based networking focuses on the applications in base station (BS) clustering, BS switching control, user association, and routing. Each aspect is further categorized according to the adopted ML techniques. Additionally, given the extensiveness of the research area, challenges and unresolved issues are presented to facilitate future studies, where the topics of ML-based network slicing, infrastructure update to support ML-based paradigms, open data sets and platforms for researchers, theoretical guidance for ML implementation, and so on are discussed.
