Yangyang Xia

Incorporating Real-world Noisy Speech in Neural-network-based Speech Enhancement Systems

Sep 21, 2021

Abstract: Supervised speech enhancement relies on parallel databases of degraded speech signals and their clean reference signals during training. This setting prohibits the use of real-world degraded speech data that may better represent the scenarios where such systems are used. In this paper, we explore methods that enable supervised speech enhancement systems to train on real-world degraded speech data. Specifically, we propose a semi-supervised approach for speech enhancement in which we first train a modified vector-quantized variational autoencoder that solves a source separation task. We then use this trained autoencoder to further train an enhancement network using real-world noisy speech data by computing a triplet-based unsupervised loss function. Experiments show promising results for incorporating real-world data in training speech enhancement systems.

A Modulation-Domain Loss for Neural-Network-based Real-time Speech Enhancement

Feb 15, 2021

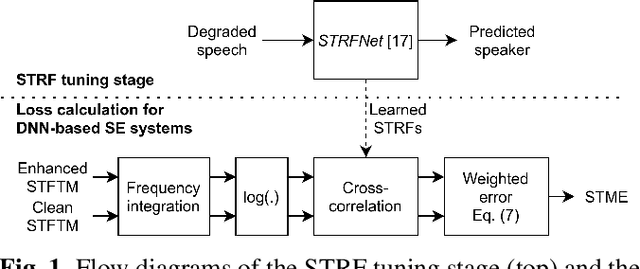

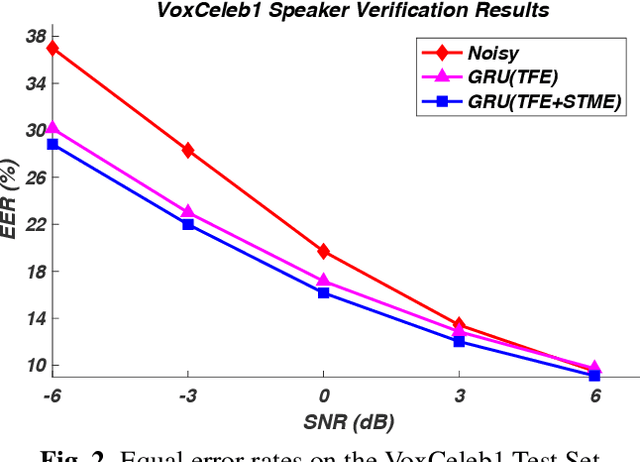

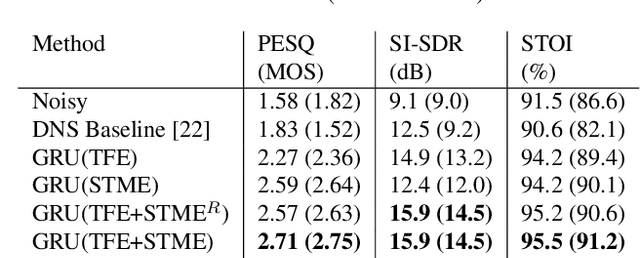

Abstract: We describe a modulation-domain loss function for deep-learning-based speech enhancement systems. Learnable spectro-temporal receptive fields (STRFs) were adapted to optimize for a speaker identification task. The learned STRFs were then used to calculate a weighted mean-squared error (MSE) in the modulation domain for training a speech enhancement system. Experiments showed that adding the modulation-domain MSE to the MSE in the spectro-temporal domain substantially improved the objective prediction of speech quality and intelligibility for real-time speech enhancement systems without incurring additional computation during inference.
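The combination of a spectro-temporal MSE with a modulation-domain MSE can be illustrated with a minimal sketch. This is not the paper's implementation: the function names (`conv2d_valid`, `modulation_mse`, `combined_loss`), the per-kernel `weights`, the mixing factor `alpha`, and the use of random kernels in place of STRFs learned on a speaker identification task are all assumptions made for illustration.

```python
import numpy as np


def conv2d_valid(x, kernel):
    """Cross-correlate a (freq, time) spectrogram with one 2-D kernel, 'valid' mode."""
    windows = np.lib.stride_tricks.sliding_window_view(x, kernel.shape)
    # windows has shape (freq_out, time_out, kernel_freq, kernel_time)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def modulation_mse(enhanced, clean, kernels, weights=None):
    """Weighted MSE between kernel-filtered spectrograms.

    The kernels stand in for learned STRFs; per-kernel weighting is an
    assumption, since the abstract does not say how the weights are defined.
    """
    if weights is None:
        weights = np.ones(len(kernels))
    total = 0.0
    for w, k in zip(weights, kernels):
        diff = conv2d_valid(enhanced, k) - conv2d_valid(clean, k)
        total += w * np.mean(diff ** 2)
    return total / np.sum(weights)


def combined_loss(enhanced, clean, kernels, alpha=1.0):
    """Spectro-temporal MSE plus alpha times the modulation-domain MSE."""
    spectral = np.mean((enhanced - clean) ** 2)
    return spectral + alpha * modulation_mse(enhanced, clean, kernels)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = rng.standard_normal((64, 100))         # hypothetical (freq, time) spectrogram
    enhanced = clean + 0.1 * rng.standard_normal((64, 100))
    kernels = rng.standard_normal((4, 9, 9))       # random stand-ins for learned STRFs
    print(combined_loss(enhanced, clean, kernels))
```

Because the kernels appear only in the loss, a network trained this way would need no extra filtering at inference time, which is consistent with the abstract's claim of no additional inference cost.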
