Xuewen Shi

Tag Recommendation by Word-Level Tag Sequence Modeling

Nov 30, 2019

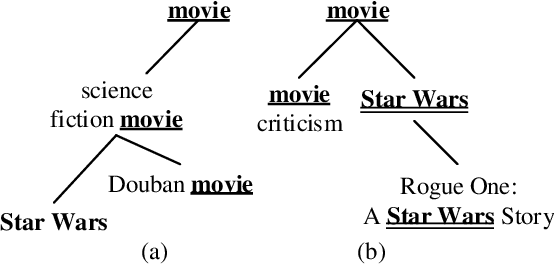

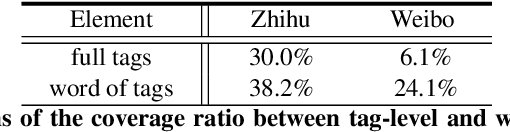

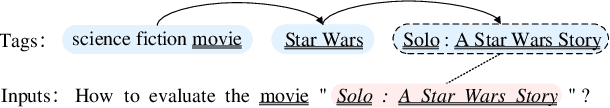

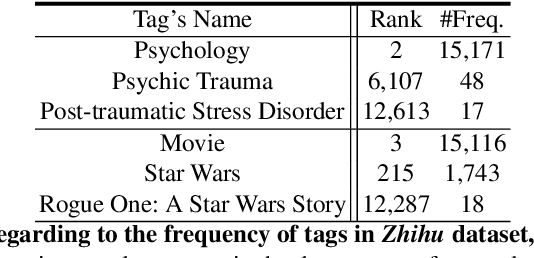

Abstract: In this paper, we transform tag recommendation into a word-based text generation problem and introduce a sequence-to-sequence model. The model inherits the advantages of an LSTM-based encoder for sequential modeling and an attention-based decoder with local positional encodings for learning relations globally. Experimental results on Zhihu datasets show that the proposed model outperforms other state-of-the-art methods based on text classification.

Neural Chinese Word Segmentation as Sequence to Sequence Translation

Nov 29, 2019

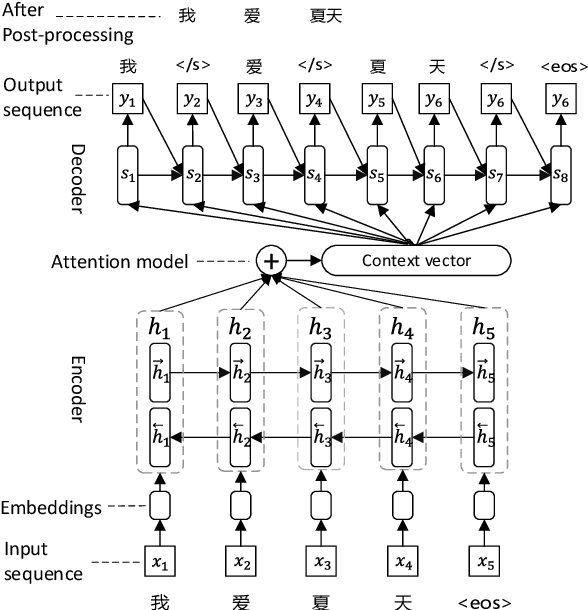

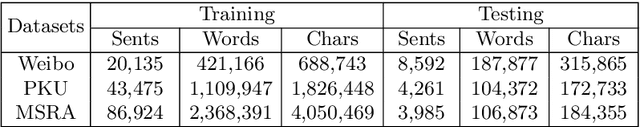

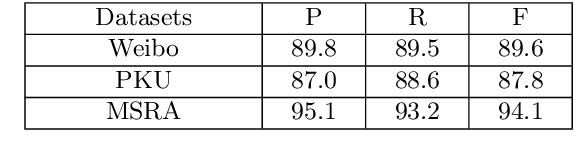

Abstract: Recently, Chinese word segmentation (CWS) methods using neural networks have made impressive progress. Most of them regard CWS as a sequence labeling problem and construct models based on local features rather than considering global information of the input sequence. In this paper, we cast CWS as a sequence translation problem and propose a novel sequence-to-sequence CWS model with an attention-based encoder-decoder framework. The model captures the global information from the input and directly outputs the segmented sequence. It can also tackle other NLP tasks jointly with CWS in an end-to-end manner. Experiments on the Weibo, PKU and MSRA benchmark datasets show that our approach achieves competitive performance compared with state-of-the-art methods. Meanwhile, we successfully applied our proposed model to jointly learning CWS and Chinese spelling correction, which demonstrates its applicability to multi-task fusion.
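
To make the "segmentation as translation" framing concrete, the sketch below shows one plausible way to build source/target pairs for such a model: the source is the raw character sequence, and the target is the same characters with a delimiter token between words. The `<sep>` token and the helper names `to_target` and `to_words` are assumptions for illustration only; the abstract does not specify the exact target format the authors use.

```python
# Hypothetical delimiter token; the abstract does not state the actual one.
SEP = "<sep>"


def to_target(words):
    """Turn a segmented sentence (list of words) into a target token
    sequence: one token per character, with SEP between words."""
    target = []
    for i, word in enumerate(words):
        if i > 0:
            target.append(SEP)
        target.extend(word)  # iterating a string yields its characters
    return target


def to_words(target):
    """Recover the word list from a (predicted) target token sequence."""
    words, current = [], []
    for token in target:
        if token == SEP:
            if current:
                words.append("".join(current))
                current = []
        else:
            current.append(token)
    if current:
        words.append("".join(current))
    return words


# Example training pair: the source is unsegmented, the target carries boundaries.
gold_words = ["我", "喜欢", "自然", "语言"]
source_tokens = list("".join(gold_words))
target_tokens = to_target(gold_words)
```

Because the decoder emits characters as well as boundaries, the same target format could in principle carry corrected characters, which is one way to read the abstract's claim about joint learning with spelling correction.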
