Xuepeng Shi

Semi-Supervised Object Detection with Object-wise Contrastive Learning and Regression Uncertainty

Dec 06, 2022

Abstract: Semi-supervised object detection (SSOD) aims to boost detection performance by leveraging extra unlabeled data. The teacher-student framework has been shown to be promising for SSOD, in which a teacher network generates pseudo-labels for unlabeled data to assist the training of a student network. Since the pseudo-labels are noisy, filtering them is crucial to exploiting the potential of such a framework. Unlike existing suboptimal methods, we propose a two-step pseudo-label filtering scheme for the classification and regression heads in a teacher-student framework. For the classification head, OCL (Object-wise Contrastive Learning) regularizes the object representation learning that utilizes unlabeled data to improve pseudo-label filtering by enhancing the discriminativeness of the classification score. This is designed to pull together objects in the same class and push away objects from different classes. For the regression head, we further propose RUPL (Regression-Uncertainty-guided Pseudo-Labeling) to learn the aleatoric uncertainty of object localization for label filtering. By jointly filtering the pseudo-labels for the classification and regression heads, the student network receives better guidance from the teacher network for the object detection task. Experimental results on the Pascal VOC and MS-COCO datasets demonstrate the superiority of our proposed method, with competitive performance compared to existing methods.
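The "pull together, push apart" behaviour described for OCL can be illustrated with a generic supervised contrastive loss over per-object embeddings. This is a minimal sketch and not the paper's formulation: the function name `object_contrastive_loss`, the cosine-similarity choice, and the `temperature` value are all assumptions made for illustration.

```python
import math


def object_contrastive_loss(features, labels, temperature=0.1):
    """Generic supervised contrastive loss (illustrative, not the paper's exact loss).

    features: list of per-object embedding vectors (lists of floats)
    labels:   list of class ids (ground-truth or pseudo-labels), one per object
    """
    # L2-normalise so that dot products are cosine similarities.
    normed = []
    for f in features:
        norm = math.sqrt(sum(x * x for x in f)) or 1.0
        normed.append([x / norm for x in f])

    total, count = 0.0, 0
    for i, (anchor, label) in enumerate(zip(normed, labels)):
        others = [j for j in range(len(normed)) if j != i]
        positives = [j for j in others if labels[j] == label]
        if not positives:
            continue  # an anchor with no same-class partner contributes nothing
        logits = {
            j: sum(a * b for a, b in zip(anchor, normed[j])) / temperature
            for j in others
        }
        # Numerically stable log-sum-exp over all other objects.
        m = max(logits.values())
        log_denom = m + math.log(sum(math.exp(v - m) for v in logits.values()))
        total += -sum(logits[j] - log_denom for j in positives) / len(positives)
        count += 1
    return total / count if count else 0.0


# Two tight clusters; labels that agree with the clusters give a low loss.
feats = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0], [0.1, 1.0]]
loss_aligned = object_contrastive_loss(feats, [0, 0, 1, 1])
loss_mixed = object_contrastive_loss(feats, [0, 1, 0, 1])
```

In this toy setup the loss is small when same-class objects already sit close together and large when they do not, which is the pressure a contrastive regularizer applies to the embedding space.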

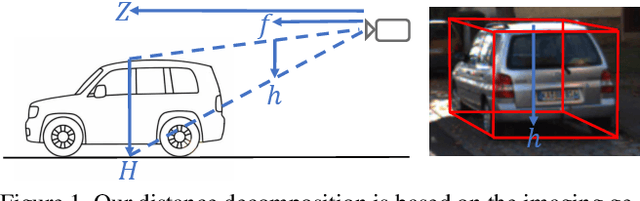

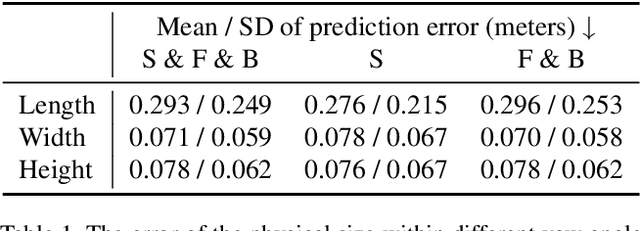

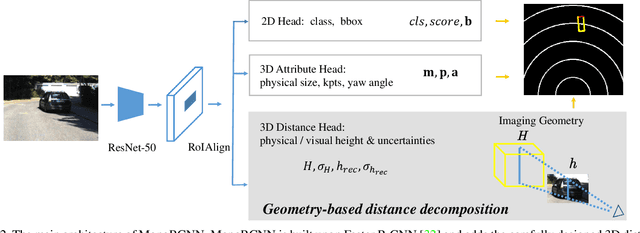

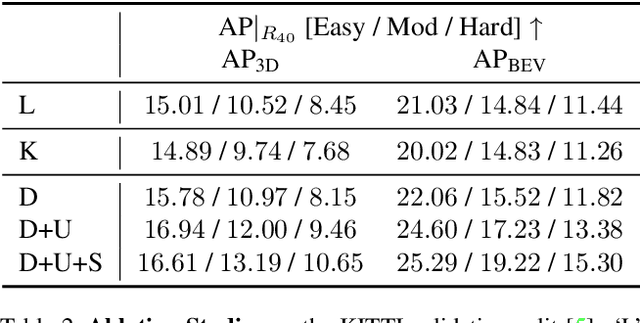

Geometry-based Distance Decomposition for Monocular 3D Object Detection

Apr 08, 2021

Abstract: Monocular 3D object detection is of great significance for autonomous driving but remains challenging. The core challenge is to predict the distance of objects in the absence of explicit depth information. Unlike most existing methods, which regress the distance as a single variable, we propose a novel geometry-based distance decomposition to recover the distance from its factors. The decomposition factors the distance of objects into the most representative and stable variables, i.e. the physical height and the projected visual height in the image plane. Moreover, the decomposition maintains the self-consistency between the two heights, leading to robust distance prediction even when both predicted heights are inaccurate. The decomposition also enables us to trace the cause of the distance uncertainty for different scenarios. Such decomposition makes the distance prediction interpretable, accurate, and robust. Our method directly predicts 3D bounding boxes from RGB images with a compact architecture, making the training and inference simple and efficient. The experimental results show that our method achieves state-of-the-art performance on the monocular 3D object detection and Bird's Eye View tasks on the KITTI dataset, and can generalize to images with different camera intrinsics.
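Under a standard pinhole camera model, the two heights named in the abstract determine distance as focal length times physical height divided by projected height. The sketch below shows only that geometric relation; the function name, parameter names, and example numbers are hypothetical, and the network that predicts the two heights is not modelled.

```python
def distance_from_heights(focal_px: float, physical_height_m: float,
                          visual_height_px: float) -> float:
    """Pinhole-camera distance Z = f * H / h (illustrative sketch)."""
    if visual_height_px <= 0:
        raise ValueError("projected visual height must be positive")
    return focal_px * physical_height_m / visual_height_px


# Hypothetical example: a 1.5 m tall object spanning 30 px, focal length 720 px.
z = distance_from_heights(720.0, 1.5, 30.0)

# If both heights are off by the same factor, the ratio (and the distance) is unchanged.
z_scaled = distance_from_heights(720.0, 1.5 * 1.1, 30.0 * 1.1)
```

One possible reading of the self-consistency claim is visible here: an error that scales both heights together cancels in the ratio. Whether this is exactly the mechanism the authors rely on is an assumption of this sketch.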

Real-Time Rotation-Invariant Face Detection with Progressive Calibration Networks

Apr 17, 2018

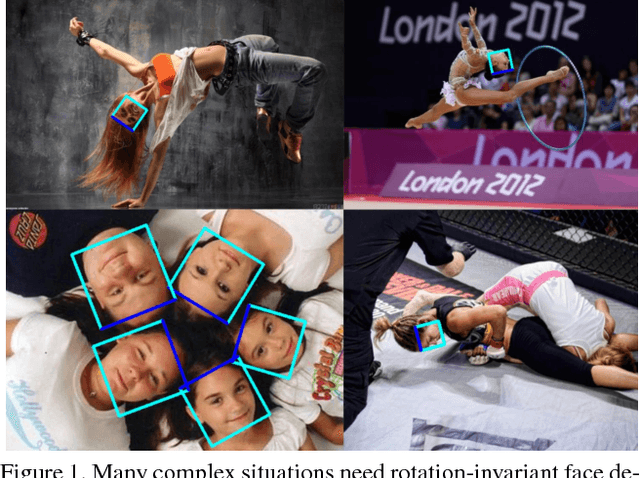

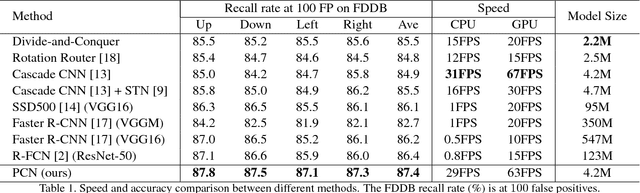

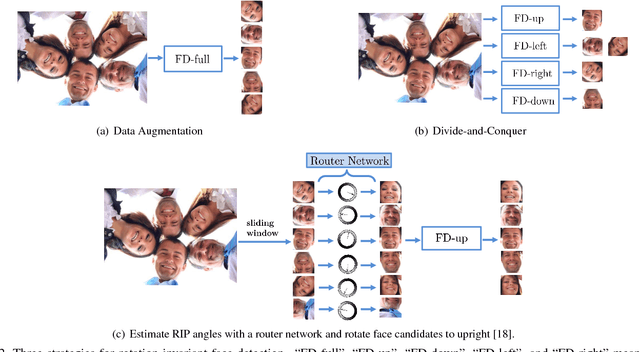

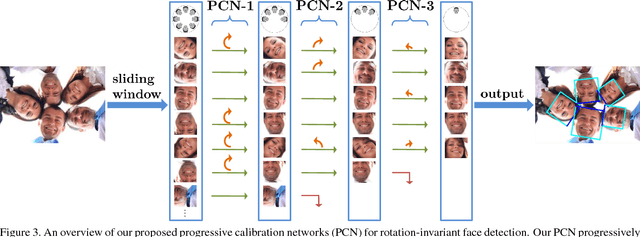

Abstract: Rotation-invariant face detection, i.e. detecting faces with arbitrary rotation-in-plane (RIP) angles, is widely required in unconstrained applications but remains a challenging task due to the large variations in face appearance. Most existing methods compromise on speed or accuracy to handle the large RIP variations. To address this problem more efficiently, we propose Progressive Calibration Networks (PCN) to perform rotation-invariant face detection in a coarse-to-fine manner. PCN consists of three stages, each of which not only distinguishes faces from non-faces, but also progressively calibrates the RIP orientation of each face candidate to upright. By dividing the calibration process into several progressive steps and only predicting coarse orientations in early stages, PCN can achieve precise and fast calibration. By performing binary classification of face vs. non-face with gradually decreasing RIP ranges, PCN can accurately detect faces with full $360^{\circ}$ RIP angles. Such designs lead to a real-time rotation-invariant face detector. The experiments on multi-oriented FDDB and a challenging subset of WIDER FACE containing rotated faces in the wild show that our PCN achieves quite promising performance.
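The coarse-to-fine calibration idea can be sketched as three successive angle corrections with shrinking ranges. The abstract does not spell out what each stage predicts, so the split used here (a 180° flip, then a ±90° turn, then a fine residual) is an assumption for illustration, and exact angle arithmetic stands in for the networks' predictions.

```python
def wrap(angle: float) -> float:
    """Map an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def progressive_calibration(angle: float) -> list:
    """Return three corrections that together bring `angle` to upright (0 degrees).

    Oracle decisions replace the network outputs; the stage split is assumed.
    """
    residual = wrap(angle)

    # Stage 1 (coarse): flip faces that point downwards.
    step1 = 180.0 if abs(residual) > 90.0 else 0.0
    residual = wrap(residual - step1)   # now within [-90, 90]

    # Stage 2 (coarse): quarter turn if still far from upright.
    if residual > 45.0:
        step2 = 90.0
    elif residual < -45.0:
        step2 = -90.0
    else:
        step2 = 0.0
    residual = wrap(residual - step2)   # now within [-45, 45]

    # Stage 3 (fine): the remaining small angle.
    return [step1, step2, residual]


steps = progressive_calibration(130.0)
```

Each early stage only makes a cheap coarse decision, so the last stage has to resolve at most a 45° residual, which mirrors the "gradually decreasing RIP ranges" described above.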
