Xinyuan Gao

Boosting Domain Incremental Learning: Selecting the Optimal Parameters is All You Need

May 29, 2025

Abstract: Deep neural networks (DNNs) often underperform in real-world, dynamic settings where data distributions change over time. Domain Incremental Learning (DIL) offers a solution by enabling continual model adaptation, with Parameter-Isolation DIL (PIDIL) emerging as a promising paradigm to reduce knowledge conflicts. However, existing PIDIL methods struggle with parameter selection accuracy, especially as the number of domains and corresponding classes grows. To address this, we propose SOYO, a lightweight framework that improves domain selection in PIDIL. SOYO introduces a Gaussian Mixture Compressor (GMC) and Domain Feature Resampler (DFR) to store and balance prior domain data efficiently, while a Multi-level Domain Feature Fusion Network (MDFN) enhances domain feature extraction. Our framework supports multiple Parameter-Efficient Fine-Tuning (PEFT) methods and is validated across tasks such as image classification, object detection, and speech enhancement. Experimental results on six benchmarks demonstrate SOYO's consistent superiority over existing baselines, showcasing its robustness and adaptability in complex, evolving environments. The code will be released at https://github.com/qwangcv/SOYO.
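As a rough illustration of the store-and-resample idea behind GMC and DFR, the sketch below summarizes each domain's features with a single diagonal Gaussian (a one-component stand-in for a mixture) and then draws the same number of pseudo-features for every stored domain. The names `compress` and `resample`, the toy data, and the one-component simplification are assumptions made for illustration; they are not taken from SOYO's implementation.

```python
import random
import statistics


def compress(features):
    """Summarize a list of feature vectors as per-dimension (means, stds)."""
    dims = list(zip(*features))  # transpose: one tuple per dimension
    means = [statistics.fmean(d) for d in dims]
    stds = [statistics.pstdev(d) for d in dims]
    return means, stds


def resample(stats, n, rng):
    """Draw n pseudo-features from the stored diagonal Gaussian."""
    means, stds = stats
    return [[rng.gauss(m, s) for m, s in zip(means, stds)] for _ in range(n)]


# Two hypothetical domains with different sample counts.
domain_a = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0], [6.0, 7.0]]
domain_b = [[10.0, 10.0], [12.0, 14.0]]

# Only the statistics are kept, not the raw features.
store = {"a": compress(domain_a), "b": compress(domain_b)}

# Balanced replay: the same number of pseudo-features for every stored domain.
rng = random.Random(0)
balanced = {name: resample(stats, 5, rng) for name, stats in store.items()}
```

Storing only means and standard deviations keeps the memory cost independent of how many samples each domain had, and drawing a fixed count per domain is one simple way to keep a domain selector from being dominated by the largest domain.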

Learn by Reasoning: Analogical Weight Generation for Few-Shot Class-Incremental Learning

Mar 27, 2025

Abstract: Few-Shot Class-Incremental Learning (FSCIL) enables models to learn new classes from limited data while retaining performance on previously learned classes. Traditional FSCIL methods often require fine-tuning parameters with limited new class data and suffer from a separation between learning new classes and utilizing old knowledge. Inspired by the analogical learning mechanisms of the human brain, we propose a novel analogical generative method. Our approach includes the Brain-Inspired Analogical Generator (BiAG), which derives new class weights from existing classes without parameter fine-tuning during incremental stages. BiAG consists of three components: Weight Self-Attention Module (WSA), Weight & Prototype Analogical Attention Module (WPAA), and Semantic Conversion Module (SCM). SCM uses Neural Collapse theory for semantic conversion, WSA supplements new class weights, and WPAA computes analogies to generate new class weights. Experiments on miniImageNet, CUB-200, and CIFAR-100 datasets demonstrate that our method achieves higher final and average accuracy compared to SOTA methods.

Diversity Covariance-Aware Prompt Learning for Vision-Language Models

Mar 03, 2025

Abstract: Prompt tuning can further enhance the performance of visual-language models across various downstream tasks (e.g., few-shot learning), enabling them to better adapt to specific applications and needs. In this paper, we present a Diversity Covariance-Aware framework that learns distributional information from the data to enhance the few-shot ability of the prompt model. First, we propose a covariance-aware method that models the covariance relationships between visual features and uses anisotropic Mahalanobis distance, instead of the suboptimal cosine distance, to measure the similarity between two modalities. We rigorously derive and prove the validity of this modeling process. Then, we propose the diversity-aware method, which learns multiple diverse soft prompts to capture different attributes of categories and aligns them independently with visual modalities. This method achieves multi-centered covariance modeling, leading to more diverse decision boundaries. Extensive experiments on 11 datasets in various tasks demonstrate the effectiveness of our method.
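To make the appeal of an anisotropic distance concrete, the sketch below compares the Mahalanobis distance with an isotropic one for two offsets of equal length. Euclidean distance is used here as a simple stand-in for any measure that ignores covariance. The class center, the diagonal covariance, and the function names are hypothetical, and this is not the paper's formulation.

```python
import math


def mahalanobis(x, mu, cov_inv):
    """Mahalanobis distance sqrt((x - mu)^T cov_inv (x - mu))."""
    d = [xi - mi for xi, mi in zip(x, mu)]
    # Row-by-row product cov_inv @ d, then dot with d.
    tmp = [sum(c * dj for c, dj in zip(row, d)) for row in cov_inv]
    return math.sqrt(sum(di * ti for di, ti in zip(d, tmp)))


def euclidean(x, mu):
    """Isotropic baseline: treats every direction the same."""
    return math.sqrt(sum((xi - mi) ** 2 for xi, mi in zip(x, mu)))


# Hypothetical class center with variance 4.0 along axis 0 and 0.25 along axis 1.
mu = [0.0, 0.0]
cov_inv = [[1 / 4.0, 0.0], [0.0, 1 / 0.25]]  # inverse of diag(4.0, 0.25)

along_spread = [2.0, 0.0]    # offset along the high-variance axis
against_spread = [0.0, 2.0]  # same-length offset along the low-variance axis

print(euclidean(along_spread, mu), euclidean(against_spread, mu))
print(mahalanobis(along_spread, mu, cov_inv), mahalanobis(against_spread, mu, cov_inv))
```

The first line prints `2.0 2.0` and the second prints `1.0 4.0`: both points are equally far from the center in the isotropic sense, but the Mahalanobis distance treats the offset along the low-variance axis as four times less typical of the class.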

Space Rotation with Basis Transformation for Training-free Test-Time Adaptation

Feb 27, 2025

Abstract: With the development of visual-language models (VLMs) in downstream task applications, test-time adaptation methods based on VLMs have attracted increasing attention for their ability to address distribution changes at test time. Although prior approaches have achieved some progress, they typically either demand substantial computational resources or are constrained by the limitations of the original feature space, rendering them less effective for test-time adaptation tasks. To address these challenges, we propose a training-free feature space rotation with basis transformation for test-time adaptation. By leveraging the inherent distinctions among classes, we reconstruct the original feature space and map it to a new representation, thereby enhancing the clarity of class differences and providing more effective guidance for the model during testing. Additionally, to better capture relevant information from various classes, we maintain a dynamic queue to store representative samples. Experimental results across multiple benchmarks demonstrate that our method outperforms state-of-the-art techniques in terms of both performance and efficiency.
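The abstract does not say how samples are judged representative. One plausible reading, sketched below, is a bounded per-class queue that keeps the test samples with the lowest prediction entropy. The entropy criterion, the `ClassQueue` name, and the capacity are all assumptions made for illustration.

```python
import heapq
import itertools
import math


def entropy(probs):
    """Shannon entropy of a probability vector (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


class ClassQueue:
    """Bounded per-class store that keeps the lowest-entropy samples."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._heap = []  # entries are (-entropy, tie_breaker, feature)
        self._counter = itertools.count()

    def push(self, feature, probs):
        item = (-entropy(probs), next(self._counter), feature)
        if len(self._heap) < self.capacity:
            heapq.heappush(self._heap, item)
        elif item[0] > self._heap[0][0]:
            # The new sample is more confident than the least confident stored one.
            heapq.heapreplace(self._heap, item)

    def features(self):
        """Stored features, most confident first."""
        return [f for _, _, f in sorted(self._heap, reverse=True)]


queue = ClassQueue(capacity=2)
queue.push("uncertain", [0.5, 0.5])
queue.push("confident", [0.99, 0.01])
queue.push("middling", [0.8, 0.2])  # evicts "uncertain"
```

Negating the entropy turns Python's min-heap into a structure whose root is always the least confident stored sample, so each update costs O(log capacity) and needs no gradient step, which fits a training-free setting.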

LOBG: Less Overfitting for Better Generalization in Vision-Language Model

Oct 14, 2024

Abstract: Existing prompt learning methods in Vision-Language Models (VLM) have effectively enhanced the transfer capability of VLM to downstream tasks, but they suffer from a significant decline in generalization due to severe overfitting. To address this issue, we propose a framework named LOBG for vision-language models. Specifically, we use CLIP to filter out fine-grained foreground information that might cause overfitting, thereby guiding prompts with basic visual concepts. To further mitigate overfitting, we developed a structural topology preservation (STP) loss at the feature level, which endows the feature space with overall plasticity, allowing effective reshaping of the feature space during optimization. Additionally, we employed hierarchical logit distillation (HLD) at the output level to constrain outputs, complementing STP at the output end. Extensive experimental results demonstrate that our method significantly improves generalization capability and alleviates overfitting compared to state-of-the-art approaches.

Beyond Prompt Learning: Continual Adapter for Efficient Rehearsal-Free Continual Learning

Jul 14, 2024

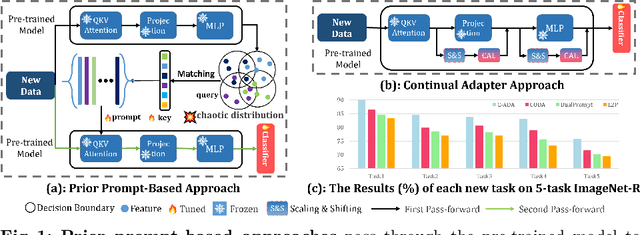

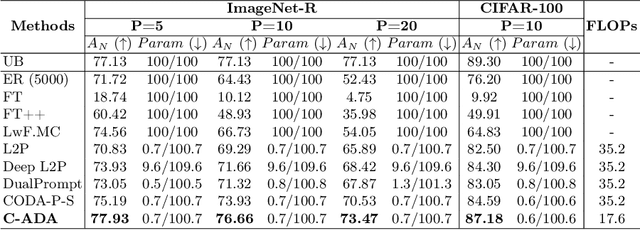

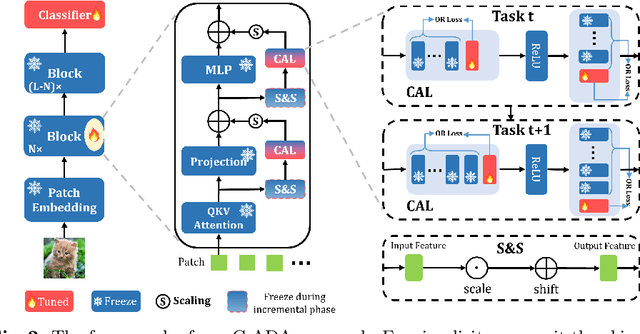

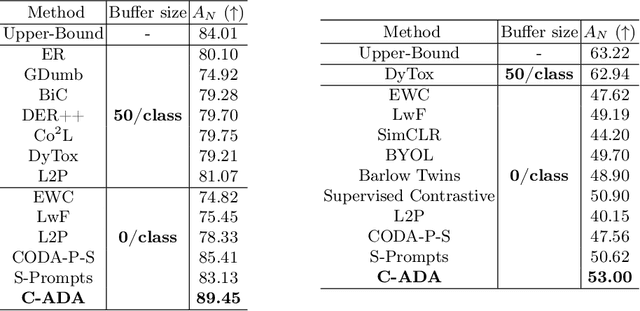

Abstract: Rehearsal-Free Continual Learning (RFCL) aims to continually learn new knowledge while preventing forgetting of old knowledge, without storing any old samples or prototypes. The latest methods leverage large-scale pre-trained models as the backbone and use key-query matching to generate trainable prompts to learn new knowledge. However, the domain gap between the pre-training dataset and the downstream datasets can easily lead to inaccuracies in key-query matching prompt selection when directly generating queries using the pre-trained model, which hampers learning new knowledge. Thus, in this paper, we propose a beyond-prompt-learning approach to the RFCL task, called Continual Adapter (C-ADA). It mainly comprises a parameter-extensible continual adapter layer (CAL) and a scaling and shifting (S&S) module in parallel with the pre-trained model. C-ADA flexibly extends specific weights in CAL to learn new knowledge for each task and freezes old weights to preserve prior knowledge, thereby avoiding matching errors and operational inefficiencies introduced by key-query matching. To reduce the gap, C-ADA employs an S&S module to transfer the feature space from pre-trained datasets to downstream datasets. Moreover, we propose an orthogonal loss to mitigate the interaction between old and new knowledge. Our approach achieves significantly improved performance and training speed, outperforming the current state-of-the-art (SOTA) method. Additionally, we conduct experiments on domain-incremental learning, surpassing the SOTA and demonstrating the generality of our approach in different settings.
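The abstract does not give the form of the orthogonal loss. A common choice, sketched below as an assumption, penalizes the squared dot product between every frozen old weight row and every new weight row, so the loss is zero exactly when the new rows are orthogonal to all old ones. The function name and the toy weights are hypothetical.

```python
def orthogonal_loss(old_rows, new_rows):
    """Sum of squared dot products between every old and new weight row."""
    total = 0.0
    for old in old_rows:
        for new in new_rows:
            dot = sum(o * n for o, n in zip(old, new))
            total += dot * dot
    return total


old_weights = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # frozen, from earlier tasks
orthogonal_new = [[0.0, 0.0, 2.0]]   # uses only the unused third direction
overlapping_new = [[3.0, 0.0, 1.0]]  # reuses the first old direction

print(orthogonal_loss(old_weights, orthogonal_new))
print(orthogonal_loss(old_weights, overlapping_new))
```

The first call prints `0.0` and the second prints `9.0`. Minimizing such a term alongside the task loss would push newly added weights toward directions that the frozen weights do not already occupy.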

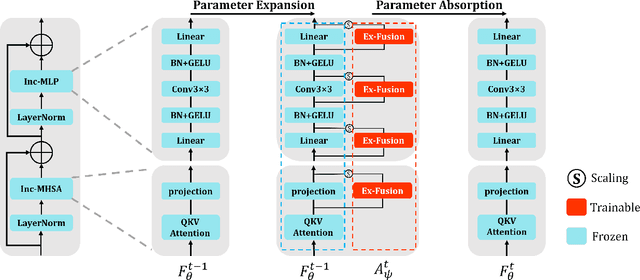

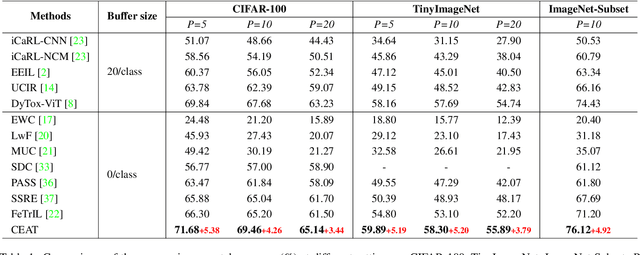

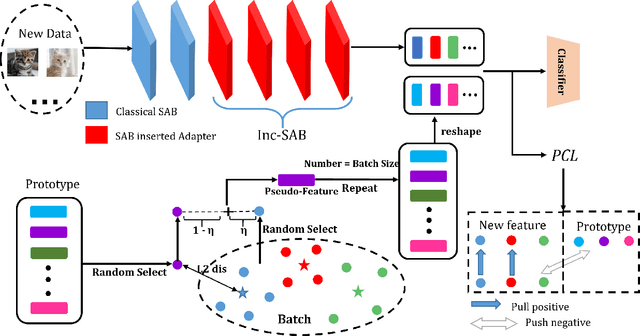

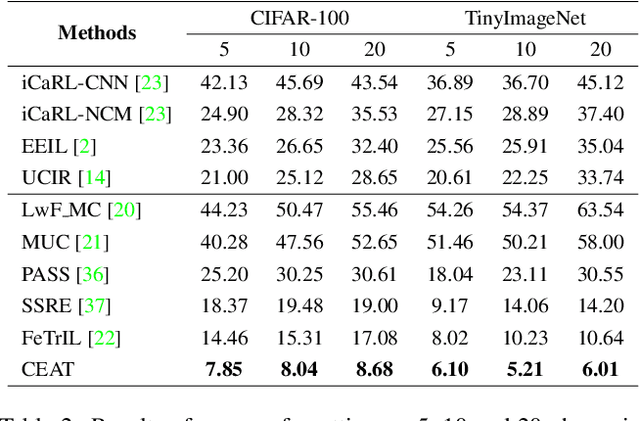

CEAT: Continual Expansion and Absorption Transformer for Non-Exemplar Class-Incremental Learning

Mar 12, 2024

Abstract: In real-world applications, dynamic scenarios require models to learn new tasks continuously without forgetting old knowledge. Experience-Replay methods store a subset of the old images for joint training. Under stricter privacy protection, storing the old images becomes infeasible, which leads to a more severe plasticity-stability dilemma and classifier bias. To meet these challenges, we propose a new architecture, named continual expansion and absorption transformer (CEAT). The model learns novel knowledge by extending the expanded-fusion layers in parallel with the frozen previous parameters. After the task ends, we losslessly absorb the extended parameters into the backbone to ensure that the number of parameters remains constant. To improve the learning ability of the model, we design a novel prototype contrastive loss to reduce the overlap between old and new classes in the feature space. In addition, to address the classifier bias towards the new classes, we propose a novel approach that generates pseudo-features to correct the classifier. We evaluate our method on three standard Non-Exemplar Class-Incremental Learning (NECIL) benchmarks. Extensive experiments demonstrate that our model improves significantly over previous works, achieving 5.38%, 5.20%, and 4.92% improvements on CIFAR-100, TinyImageNet, and ImageNet-Subset, respectively.
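The lossless absorption step can be illustrated under one simplifying assumption: if the parallel branch is a plain linear map with the same shape as the frozen layer, then summing the two outputs equals applying the sum of the two weight matrices. The sketch below checks this on toy values. The names and numbers are hypothetical, and CEAT's actual expanded-fusion layers may involve more than this.

```python
def matvec(matrix, vector):
    """Plain matrix-vector product on nested lists."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def absorb(frozen, extension):
    """Merge a parallel linear branch into the frozen weights by addition."""
    return [[f + e for f, e in zip(f_row, e_row)]
            for f_row, e_row in zip(frozen, extension)]


frozen = [[1.0, 2.0], [3.0, 4.0]]       # backbone weights from earlier tasks
extension = [[0.5, 0.0], [-1.0, 0.25]]  # hypothetical weights learned in parallel
x = [2.0, 4.0]

# During the task: both branches run side by side and their outputs are summed.
parallel_out = [a + b for a, b in zip(matvec(frozen, x), matvec(extension, x))]

# After the task: one merged matrix, same parameter count as the backbone alone.
merged = absorb(frozen, extension)
merged_out = matvec(merged, x)

print(parallel_out, merged_out)
```

Both outputs are `[11.0, 21.0]`, so the merged layer reproduces the two-branch computation while storing a single weight matrix.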
