Xinyu Lei

Mitigating Cross-client GANs-based Attack in Federated Learning

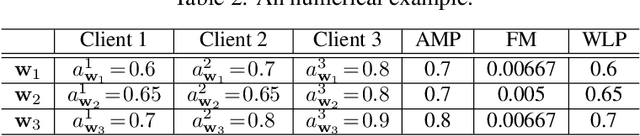

Jul 25, 2023

Abstract: Machine learning makes multimedia data (e.g., images) more attractive; however, multimedia data is usually distributed and privacy sensitive. Multiple distributed multimedia clients can resort to federated learning (FL) to jointly learn a global shared model without sharing their private samples with any third-party entities. In this paper, we show that FL suffers from the cross-client generative adversarial networks (GANs)-based (C-GANs) attack, in which a malicious client (i.e., adversary) can reconstruct samples with the same distribution as the training samples from other clients (i.e., victims). Since a benign client's data can be leaked to the adversary, this attack brings the risk of local data leakage for clients in many security-critical FL applications. Thus, we propose the Fed-EDKD (i.e., Federated Ensemble Data-free Knowledge Distillation) technique to improve the current popular FL schemes to resist the C-GANs attack. In Fed-EDKD, each client submits a local model to the server to obtain an ensemble global model. Then, to avoid model expansion, Fed-EDKD adopts data-free knowledge distillation techniques to transfer knowledge from the ensemble global model to a compressed model. In this way, Fed-EDKD reduces the adversary's control capability over the global model, so Fed-EDKD can effectively mitigate the C-GANs attack. Finally, the experimental results demonstrate that Fed-EDKD significantly mitigates the C-GANs attack while incurring only a slight accuracy degradation of FL.

Handling Data Heterogeneity in Federated Learning via Knowledge Fusion

Jul 23, 2022

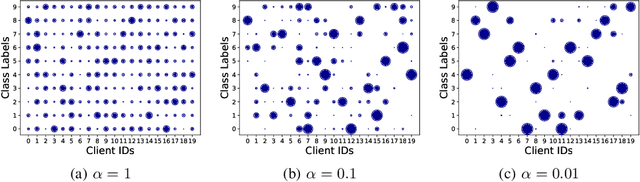

Abstract: Federated learning (FL) supports distributed training of a global machine learning model across multiple clients with the help of a central server. The local dataset held by each client is never exchanged in FL, so the local dataset privacy is protected. Although FL is increasingly popular, data heterogeneity across different clients leads to the client model drift issue and results in model performance degradation and poor model fairness. To address the issue, we design the Federated learning with global-local Knowledge Fusion (FedKF) scheme in this paper. The key idea in FedKF is to let the server return the global knowledge to be fused with the local knowledge in each training round so that the local model can be regularized towards the global optima. Thus, the client model drift issue can be mitigated. In FedKF, we first propose the active-inactive model aggregation technique that supports a precise global knowledge representation. Then, we propose a data-free knowledge distillation (KD) approach to facilitate the KD from the global model to the local model while the local model can still learn the local knowledge (embedded in the local dataset) simultaneously, thereby realizing the global-local knowledge fusion process. The theoretical analysis and intensive experiments demonstrate that FedKF simultaneously achieves high model performance, high fairness, and privacy preservation. The project source code will be released on GitHub after the paper review.

Federated Learning with Domain Generalization

Nov 20, 2021

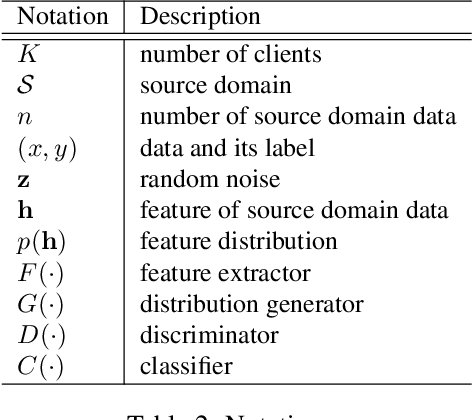

Abstract: Federated Learning (FL) enables a group of clients to jointly train a machine learning model with the help of a centralized server. Clients do not need to submit their local data to the server during training, and hence the local training data of clients is protected. In FL, distributed clients collect their local data independently, so the dataset of each client may naturally form a distinct source domain. In practice, the model trained over multiple source domains may have poor generalization performance on unseen target domains. To address this issue, we propose FedADG to equip federated learning with domain generalization capability. FedADG employs the federated adversarial learning approach to measure and align the distributions among different source domains via matching each distribution to a reference distribution. The reference distribution is adaptively generated (by accommodating all source domains) to minimize the domain shift distance during alignment. In FedADG, the alignment is fine-grained since each class is aligned independently. In this way, the learned feature representation is expected to be universal, so it can generalize well on the unseen domains. Extensive experiments on various datasets demonstrate that FedADG has better performance than most of the previous solutions, even when those solutions have the additional advantage of centralized data access. To support study reproducibility, the project code is available at https://github.com/wzml/FedADG
