Jingwen Shi

Handling Data Heterogeneity in Federated Learning via Knowledge Fusion

Jul 23, 2022

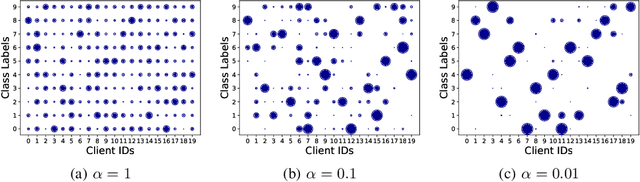

Abstract: Federated learning (FL) supports distributed training of a global machine learning model across multiple clients with the help of a central server. Because the local dataset held by each client is never exchanged in FL, local data privacy is protected. Although FL is increasingly popular, data heterogeneity across clients causes client model drift, which degrades model performance and harms model fairness. To address this issue, we design the Federated learning with global-local Knowledge Fusion (FedKF) scheme in this paper. The key idea in FedKF is to let the server return the global knowledge so that it can be fused with the local knowledge in each training round, regularizing the local model towards the global optima. Thus, the client model drift issue can be mitigated. In FedKF, we first propose an active-inactive model aggregation technique that supports a precise global knowledge representation. Then, we propose a data-free knowledge distillation (KD) approach that transfers knowledge from the global model to the local model while the local model still learns the local knowledge (embedded in the local dataset), thereby realizing the global-local knowledge fusion process. Theoretical analysis and extensive experiments demonstrate that FedKF simultaneously achieves high model performance, high fairness, and privacy preservation. The project source code will be released on GitHub after the paper review.
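
To make the idea of fusing global and local knowledge more concrete, the following is a minimal sketch of a generic distillation-regularized local objective: a cross-entropy term on a labeled local sample plus a temperature-scaled KL term that pulls the local model's predictions towards the global model's. It is not FedKF's actual loss, which the abstract does not specify. The function names, the `kd_weight` and `temperature` parameters, and the choice of KL divergence are all assumptions made for illustration. The distillation logits are passed in separately because, in a data-free setting, they would come from inputs other than the real local data.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, label):
    """Cross-entropy of one sample against its integer class label."""
    return -math.log(softmax(logits)[label])

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def fused_loss(local_logits, label, local_kd_logits, global_kd_logits,
               kd_weight=0.5, temperature=2.0):
    """Hypothetical fused objective (an assumption, not the paper's loss).

    local_logits:     local model output on a real local sample
    label:            ground-truth class of that sample
    local_kd_logits:  local model output on a distillation input
    global_kd_logits: global model output on the same distillation input
    """
    # Local knowledge: fit the client's own labeled data.
    local_term = cross_entropy(local_logits, label)
    # Global knowledge: match the global model's softened predictions.
    teacher = softmax(global_kd_logits, temperature)
    student = softmax(local_kd_logits, temperature)
    global_term = kl_divergence(teacher, student)
    return local_term + kd_weight * global_term
```

When the local and global models agree on the distillation input, the KL term vanishes and the objective reduces to plain local training. Any disagreement adds a penalty, which is one common way to regularize a drifting client model towards the global one.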
