Xingming Long

VOPE: Revisiting Hallucination of Vision-Language Models in Voluntary Imagination Task

Nov 17, 2025

Abstract: Most research on hallucinations in Large Vision-Language Models (LVLMs) focuses on factual description tasks that prohibit any output absent from the image. However, little attention has been paid to hallucinations in voluntary imagination tasks, e.g., story writing, where the models are expected to generate novel content beyond the given image. In these tasks, it is inappropriate to simply regard such imagined novel content as hallucinations. To address this limitation, we introduce Voluntary-imagined Object Presence Evaluation (VOPE), a novel method to assess LVLMs' hallucinations in voluntary imagination tasks via presence evaluation. Specifically, VOPE poses recheck-based questions to evaluate how an LVLM interprets the presence of the imagined objects in its own response. The consistency between the model's interpretation and the object's presence in the image is then used to determine whether the model hallucinates when generating the response. We apply VOPE to several mainstream LVLMs and hallucination mitigation methods, revealing two key findings: (1) most LVLMs hallucinate heavily during voluntary imagination, and their performance in presence evaluation is notably poor on imagined objects; (2) existing hallucination mitigation methods show limited effect in voluntary imagination tasks, making this an important direction for future research.
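
The consistency check described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the dictionary of yes/no recheck answers, and the example objects are all hypothetical, and the sketch assumes that each recheck question reduces to a boolean "the model says this object is in the image".

```python
def presence_consistency(mentioned_objects, model_says_in_image, objects_in_image):
    """Compare the model's recheck answers with the actual image content.

    mentioned_objects: objects that appear in the model's response (hypothetical input).
    model_says_in_image: dict mapping object -> bool, the model's recheck answer.
    objects_in_image: set of objects that are really present in the image.
    Returns a dict mapping object -> True if the answer matches reality.
    """
    results = {}
    for obj in mentioned_objects:
        actually_present = obj in objects_in_image
        claimed_present = model_says_in_image[obj]
        results[obj] = claimed_present == actually_present
    return results


def inconsistency_rate(results):
    """Fraction of mentioned objects whose recheck answer contradicts the image."""
    if not results:
        return 0.0
    return sum(not ok for ok in results.values()) / len(results)


# Hypothetical story-writing response that mentions three objects.
mentioned = ["dog", "castle", "dragon"]
in_image = {"dog"}
answers = {"dog": True, "castle": False, "dragon": True}

results = presence_consistency(mentioned, answers, in_image)
rate = inconsistency_rate(results)
print(results, rate)
```

In this toy case the imagined "castle" is not counted against the model, because the model correctly says it is absent from the image; only "dragon", which the model wrongly believes is in the image, is flagged.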

Semantic or Covariate? A Study on the Intractable Case of Out-of-Distribution Detection

Nov 18, 2024

Abstract: The primary goal of out-of-distribution (OOD) detection is to identify inputs with semantic shifts, i.e., if samples come from novel classes that are absent in the in-distribution (ID) dataset used for training, we should reject these OOD samples rather than misclassify them into existing ID classes. However, we find that the current definition of "semantic shift" is ambiguous, which renders certain OOD testing protocols intractable for post-hoc OOD detection methods based on a classifier trained on the ID dataset. In this paper, we offer a more precise definition of the Semantic Space and the Covariate Space for the ID distribution, allowing us to theoretically analyze which types of OOD distributions make the detection task intractable. To avoid the flaw in the existing OOD settings, we further define the "Tractable OOD" setting, which ensures the distinguishability of OOD and ID distributions for post-hoc OOD detection methods. Finally, we conduct several experiments to demonstrate the necessity of our definitions and validate the correctness of our theorems.

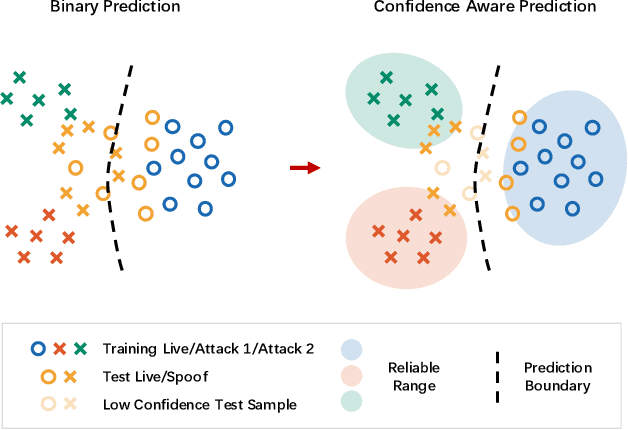

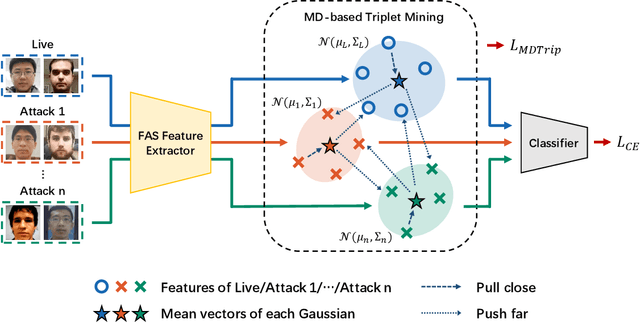

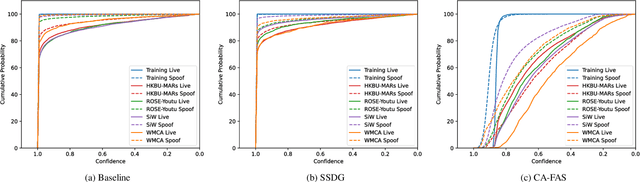

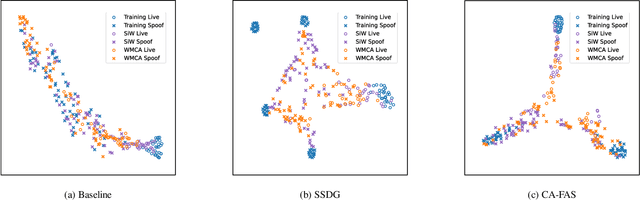

Confidence Aware Learning for Reliable Face Anti-spoofing

Nov 02, 2024

Abstract: Current Face Anti-spoofing (FAS) models tend to make overly confident predictions even when encountering unfamiliar scenarios or unknown presentation attacks, which poses serious risks. To solve this problem, we propose a Confidence Aware Face Anti-spoofing (CA-FAS) model, which is aware of its capability boundary and thus achieves reliable liveness detection within this boundary. To enable CA-FAS to "know what it doesn't know", we propose to estimate its confidence during the prediction of each sample. Specifically, we build Gaussian distributions for both the live faces and the known attacks. The prediction confidence for each sample is then assessed using the Mahalanobis distance between the sample and the Gaussians for the "known data". We further introduce Mahalanobis distance-based triplet mining to optimize the parameters of both the model and the constructed Gaussians as a whole. Extensive experiments show that the proposed CA-FAS can effectively recognize samples with low prediction confidence and thus achieve much more reliable performance than other FAS models by filtering out samples that are beyond its reliable range.
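
As a rough illustration of the confidence estimate described above, the following sketch fits one Gaussian per known class and rejects samples whose Mahalanobis distance to every Gaussian exceeds a threshold. It is a simplified stand-in, not the CA-FAS model: the 2-D synthetic features, the fixed threshold, the ridge term, and all function names are assumptions, and the triplet-mining training step is omitted entirely.

```python
import numpy as np


def fit_gaussian(features):
    """Estimate the mean and inverse covariance of an (n, d) feature array."""
    mean = features.mean(axis=0)
    cov = np.cov(features, rowvar=False)
    # Small ridge term keeps the covariance invertible (an assumption of this sketch).
    cov = cov + 1e-6 * np.eye(features.shape[1])
    return mean, np.linalg.inv(cov)


def mahalanobis(x, mean, cov_inv):
    """Mahalanobis distance between a sample and a Gaussian."""
    diff = x - mean
    return float(np.sqrt(diff @ cov_inv @ diff))


def predict_with_rejection(x, gaussians, threshold):
    """Return (label, distance); label is None when x is far from all known classes."""
    distances = {
        label: mahalanobis(x, mean, cov_inv)
        for label, (mean, cov_inv) in gaussians.items()
    }
    label = min(distances, key=distances.get)
    distance = distances[label]
    return (label if distance <= threshold else None), distance


# Synthetic stand-ins for features of live faces and known attacks.
rng = np.random.default_rng(0)
live = rng.normal(loc=0.0, scale=1.0, size=(500, 2))
attack = rng.normal(loc=6.0, scale=1.0, size=(500, 2))
gaussians = {"live": fit_gaussian(live), "attack": fit_gaussian(attack)}

near_live = np.array([0.1, -0.2])   # close to the "live" Gaussian
far_away = np.array([30.0, -30.0])  # far from everything seen in training

print(predict_with_rejection(near_live, gaussians, threshold=3.0))
print(predict_with_rejection(far_away, gaussians, threshold=3.0))
```

A sample near the "live" cluster is accepted with that label, while a sample far from both clusters gets no label, which mirrors the idea of filtering out inputs beyond the model's reliable range.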

Rethinking the Evaluation of Out-of-Distribution Detection: A Sorites Paradox

Jun 14, 2024

Abstract: Most existing out-of-distribution (OOD) detection benchmarks classify samples with novel labels as OOD data. However, some marginal OOD samples are semantically close to in-distribution (ID) samples, which makes deciding whether a sample is OOD an instance of the Sorites Paradox. To address this issue, we construct a benchmark named Incremental Shift OOD (IS-OOD), in which we divide the test samples into subsets with different degrees of semantic and covariate shift relative to the ID dataset. The data division is achieved through a shift measuring method based on our proposed Language Aligned Image feature Decomposition (LAID). Moreover, we construct a Synthetic Incremental Shift (Syn-IS) dataset that contains high-quality generated images with more diverse covariate contents to complement the IS-OOD benchmark. We evaluate current OOD detection methods on our benchmark and report three findings: (1) The performance of most OOD detection methods significantly improves as the semantic shift increases; (2) Some methods like GradNorm may have different OOD detection mechanisms as they rely less on semantic shifts to make decisions; (3) Excessive covariate shifts in the image are also likely to be considered as OOD by some methods. Our code and data are available at https://github.com/qqwsad5/IS-OOD.

Generalized Face Liveness Detection via De-spoofing Face Generator

Jan 17, 2024

Abstract: Previous Face Anti-spoofing (FAS) methods struggle to generalize to unseen domains. One of the major problems is that most existing FAS datasets are relatively small and lack data diversity. However, we find that numerous real faces can be easily obtained under various conditions, and these have been neglected by previous FAS works. In this paper, we propose an Anomalous cue Guided FAS (AG-FAS) method, which leverages real faces to improve model generalization via a De-spoofing Face Generator (DFG). Specifically, the DFG, trained only on real faces, learns what a real face should look like and can generate a "real" version of the face corresponding to any given input face. The difference between the generated "real" face and the input face provides an anomalous cue for the downstream FAS task. We then propose an Anomalous cue Guided FAS feature extraction Network (AG-Net) to further improve the FAS feature generalization via a cross-attention transformer. Extensive experiments on a total of nine public datasets show that our method achieves state-of-the-art results under cross-domain evaluations with unseen scenarios and unknown presentation attacks.

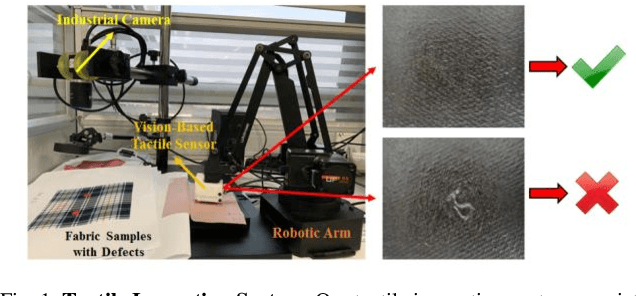

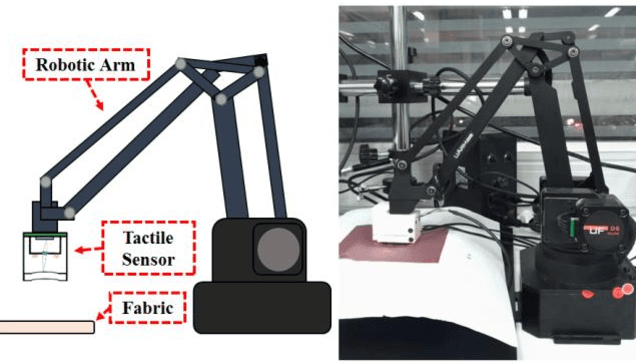

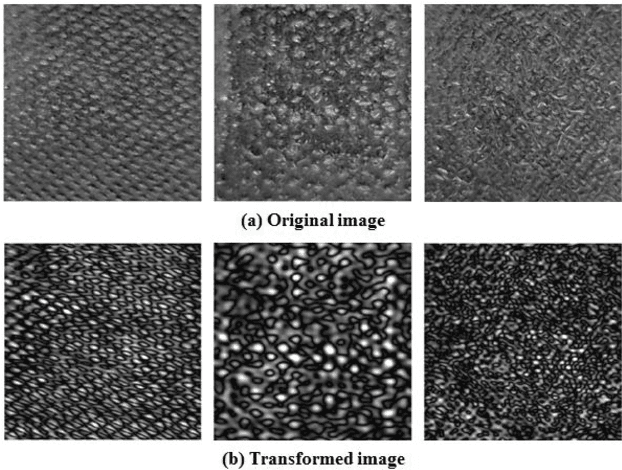

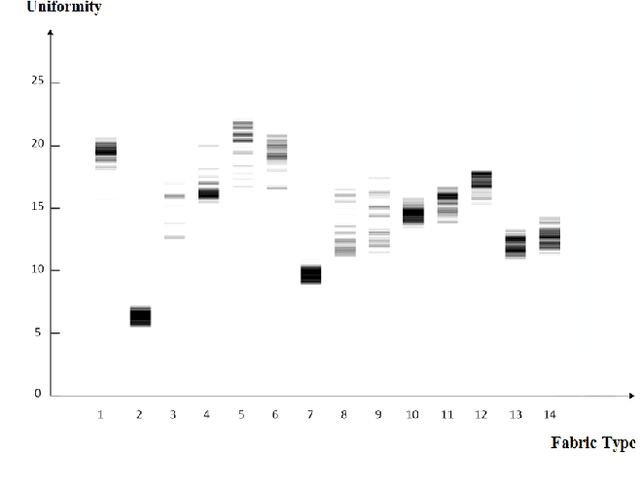

Fabric Defect Detection Using Vision-Based Tactile Sensor

Mar 02, 2020

Abstract: This paper introduces a tactile inspection system for fabric defect detection. Unlike existing visual inspection systems, the proposed system uses a vision-based tactile sensor. The tactile sensor, which mainly consists of a camera, four LEDs, and an elastic sensing layer, captures detailed information about the fabric surface structure and ignores color and pattern. Thus, the ambiguity between a defect and the image background caused by fabric color and pattern is avoided. To use the tactile sensor for fabric inspection, we employ intensity adjustment for image preprocessing, a Residual Network with ensemble learning for detecting defects, and uniformity measurement for selecting an ideal dataset for model training. An experiment is conducted to verify the performance of the proposed tactile system. The experimental results demonstrate the feasibility of the proposed system, which performs well in detecting structural defects for various types of fabrics. In addition, the system does not require external light sources, which removes the need to set up and tune a lighting environment.
