Xiaobo Zhu

Multi-perspective Feedback-attention Coupling Model for Continuous-time Dynamic Graphs

Dec 13, 2023

Abstract:Recently, representation learning over graph networks has gained popularity, with various models showing promising results. Despite this, several challenges persist: 1) most methods are designed for static or discrete-time dynamic graphs; 2) existing continuous-time dynamic graph algorithms focus on a single evolving perspective; and 3) many continuous-time dynamic graph approaches necessitate numerous temporal neighbors to capture long-term dependencies. In response, this paper introduces the Multi-Perspective Feedback-Attention Coupling (MPFA) model. MPFA incorporates information from both evolving and raw perspectives, efficiently learning the interleaved dynamics of observed processes. The evolving perspective employs temporal self-attention to distinguish continuously evolving temporal neighbors for information aggregation. Through dynamic updates, this perspective can capture long-term dependencies using a small number of temporal neighbors. Meanwhile, the raw perspective utilizes a feedback attention module with growth characteristic coefficients to aggregate raw neighborhood information. Experimental results on a self-organizing dataset and seven public datasets validate the efficacy and competitiveness of our proposed model.

Dynamic Link Prediction for New Nodes in Temporal Graph Networks

Oct 15, 2023

Abstract:Modelling temporal networks for dynamic link prediction of new nodes has many real-world applications, such as providing relevant item recommendations to new customers in recommender systems and suggesting appropriate posts to new users on social platforms. Unlike old nodes, new nodes have few historical links, which poses a challenge for the dynamic link prediction task. Most existing dynamic models treat all nodes equally and are not specialized for new nodes, resulting in suboptimal performances. In this paper, we consider dynamic link prediction of new nodes as a few-shot problem and propose a novel model based on the meta-learning principle to effectively mitigate this problem. Specifically, we develop a temporal encoder with a node-level span memory to obtain a new node embedding, and then we use a predictor to determine whether the new node generates a link. To overcome the few-shot challenge, we incorporate the encoder-predictor into the meta-learning paradigm, which can learn two types of implicit information during the formation of the temporal network through span adaptation and node adaptation. The acquired implicit information can serve as model initialisation and facilitate rapid adaptation to new nodes through a fine-tuning process on just a few links. Experiments on three publicly available datasets demonstrate the superior performance of our model compared to existing state-of-the-art methods.

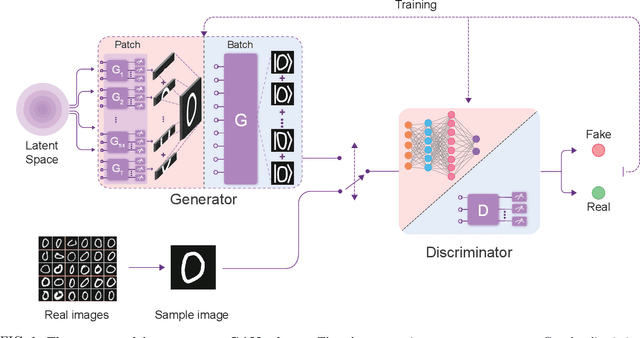

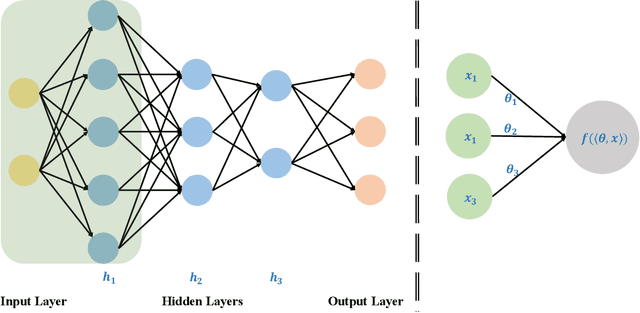

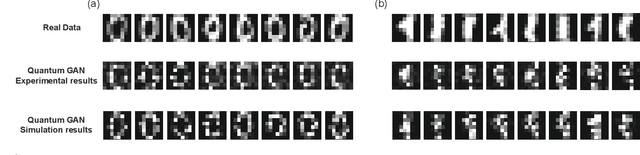

Experimental Quantum Generative Adversarial Networks for Image Generation

Oct 21, 2020

Abstract:Quantum machine learning is expected to be one of the first practical applications of near-term quantum devices. Pioneer theoretical works suggest that quantum generative adversarial networks (GANs) may exhibit a potential exponential advantage over classical GANs, thus attracting widespread attention. However, it remains elusive whether quantum GANs implemented on near-term quantum devices can actually solve real-world learning tasks. Here, we devise a flexible quantum GAN scheme to narrow this knowledge gap, which could accomplish image generation with arbitrarily high-dimensional features, and could also take advantage of quantum superposition to train multiple examples in parallel. For the first time, we experimentally achieve the learning and generation of real-world hand-written digit images on a superconducting quantum processor. Moreover, we utilize a gray-scale bar dataset to exhibit the competitive performance between quantum GANs and the classical GANs based on multilayer perceptron and convolutional neural network architectures, respectively, benchmarked by the Fréchet Distance score. Our work provides guidance for developing advanced quantum generative models on near-term quantum devices and opens up an avenue for exploring quantum advantages in various GAN-related learning tasks.
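The abstract names a Fréchet Distance score as its benchmark but does not spell out how it is computed. As a rough illustration only, the sketch below uses the common Gaussian form of the distance, d² = ‖μ₁ − μ₂‖² + Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^{1/2}), under the simplifying assumption that both covariances are diagonal, in which case the trace term reduces to a sum of squared differences of standard deviations. The function names `feature_stats` and `frechet_distance_diag`, the diagonal assumption, and the toy data are all hypothetical and are not taken from the paper.

```python
import math


def feature_stats(samples):
    """Per-dimension mean and population variance of a list of feature vectors."""
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one sample")
    columns = list(zip(*samples))  # one tuple per feature dimension
    means = [sum(col) / n for col in columns]
    variances = [
        sum((x - m) ** 2 for x in col) / n for col, m in zip(columns, means)
    ]
    return means, variances


def frechet_distance_diag(mu1, var1, mu2, var2):
    """Squared Frechet distance between two Gaussians with DIAGONAL covariances.

    Assumption (not from the paper): off-diagonal covariance terms are ignored,
    so Tr(S1 + S2 - 2 (S1 S2)^(1/2)) becomes sum_i (sqrt(v1_i) - sqrt(v2_i))^2.
    """
    if not (len(mu1) == len(var1) == len(mu2) == len(var2)):
        raise ValueError("all inputs must have the same dimension")
    mean_term = sum((a - b) ** 2 for a, b in zip(mu1, mu2))
    cov_term = sum(
        (math.sqrt(v1) - math.sqrt(v2)) ** 2 for v1, v2 in zip(var1, var2)
    )
    return mean_term + cov_term


if __name__ == "__main__":
    # Toy "real" and "generated" feature vectors (made-up numbers).
    real = [[0.0, 1.0], [2.0, 1.0], [1.0, 3.0]]
    generated = [[0.5, 1.5], [1.5, 0.5], [1.0, 2.0]]
    mu_r, var_r = feature_stats(real)
    mu_g, var_g = feature_stats(generated)
    print(frechet_distance_diag(mu_r, var_r, mu_g, var_g))
```

A lower value means the two sets of feature statistics are closer. A full-covariance version would need a matrix square root, which this sketch deliberately avoids.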
