Xiang Cui

EvilModel 2.0: Hiding Malware Inside of Neural Network Models

Sep 09, 2021

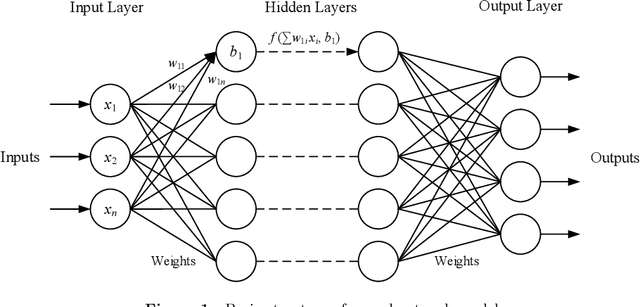

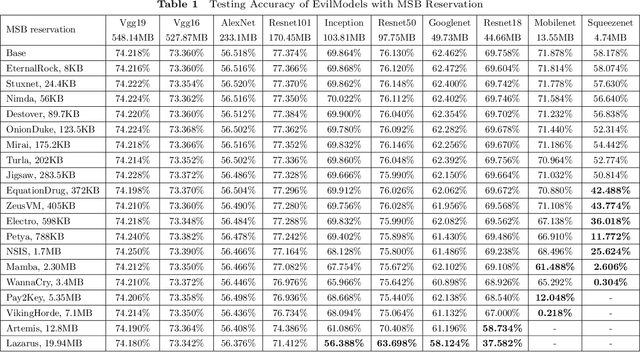

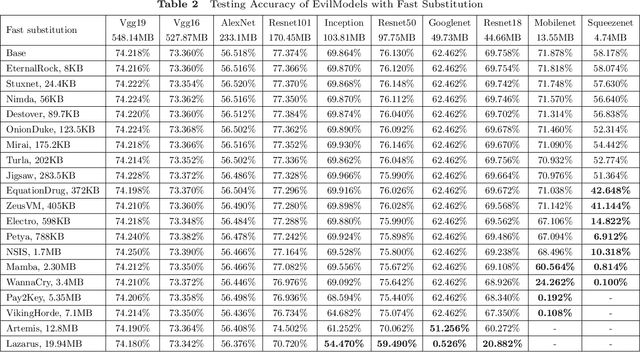

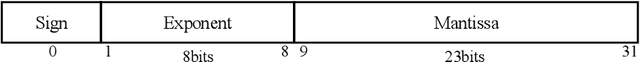

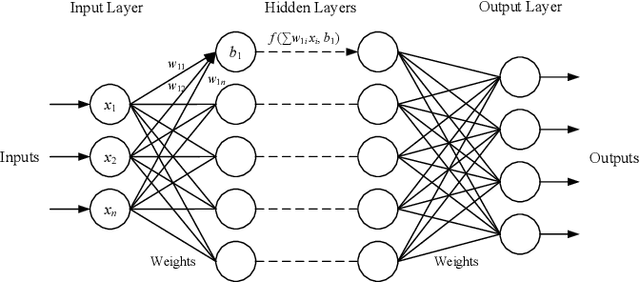

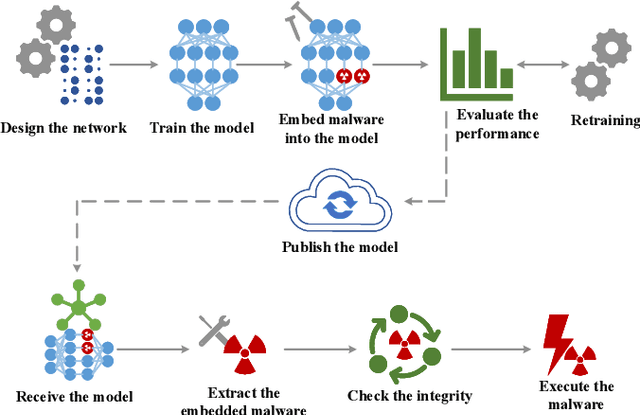

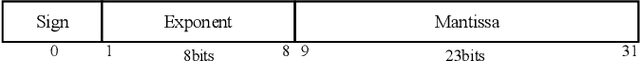

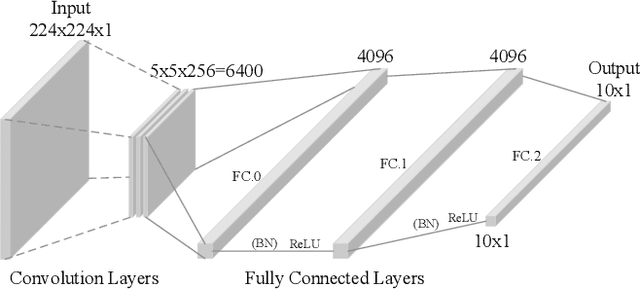

Abstract: While artificial intelligence (AI) is widely applied in various areas, it is also being used maliciously. It is necessary to study and predict AI-powered attacks in order to prevent them in advance. Turning neural network models into stegomalware is one such malicious use of AI: it exploits the features of neural network models to hide malware while maintaining the models' performance. However, existing methods have a low malware embedding rate and a high impact on model performance, which makes them impractical. Therefore, by analyzing the composition of neural network models, this paper proposes new methods to embed malware in models with high capacity and no degradation in service quality. We used 19 malware samples and 10 mainstream models to build 550 malware-embedded models and analyzed the models' performance on the ImageNet dataset. A new evaluation method that combines the embedding rate, the impact on model performance, and the embedding effort is proposed to evaluate the existing methods. This paper also designs a trigger and proposes an application scenario for attack tasks that combines EvilModel with WannaCry. It further studies the relationship between a neural network model's embedding capacity and its structure, layers, and size. With the widespread application of artificial intelligence, using neural networks for attacks is becoming a growing trend. We hope this work can provide a reference scenario for defending against neural network-assisted attacks.

EvilModel: Hiding Malware Inside of Neural Network Models

Aug 05, 2021

Abstract: Delivering malware covertly and evasively is critical to advanced malware campaigns. In this paper, we present a new method to covertly and evasively deliver malware through a neural network model. Neural network models are poorly explainable and have good generalization ability. By embedding malware in neurons, the malware can be delivered covertly, with minor or no impact on the performance of the neural network. Meanwhile, because the structure of the neural network model remains unchanged, it can pass the security scans of antivirus engines. Experiments show that 36.9 MB of malware can be embedded in a 178 MB AlexNet model with an accuracy loss within 1%, and that no suspicion is raised by the antivirus engines in VirusTotal, which verifies the feasibility of this method. With the widespread application of artificial intelligence, using neural networks for attacks is becoming a growing trend. We hope this work can provide a reference scenario for defending against neural network-assisted attacks.

AI-powered Covert Botnet Command and Control on OSNs

Sep 22, 2020

Abstract: Botnets are one of the major threats to computer security. In previous botnet command and control (C&C) scenarios using online social networks (OSNs), the methods for finding botmasters (e.g., IDs, links, DGAs) are hardcoded into bots. Once a bot is reverse engineered, the botmaster is exposed. Meanwhile, abnormal content produced by explicit commands may expose the botmaster and raise anomalies on OSNs. To overcome these deficiencies, we propose an AI-powered covert C&C channel. By leveraging neural networks, bots can find botmasters by their avatars, which are converted into feature vectors. Commands are embedded into normal content (e.g., tweets, comments) using text data augmentation and hash collision. An experiment on Twitter shows that the command-embedded content can be generated efficiently, and that bots can find the botmaster and obtain commands accurately. By demonstrating how AI may help enable covert communication on OSNs, this work provides a new perspective on botnet detection and confrontation.
