Xavier Timoneda

Multi-modal NeRF Self-Supervision for LiDAR Semantic Segmentation

Nov 05, 2024

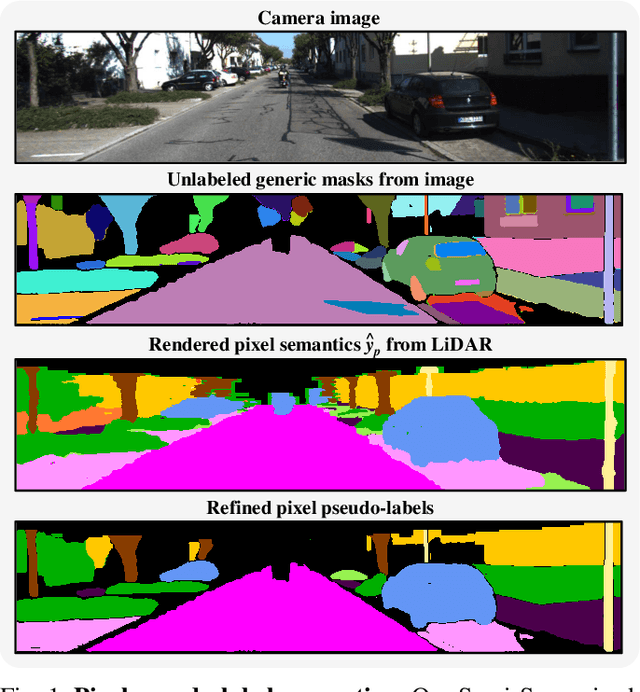

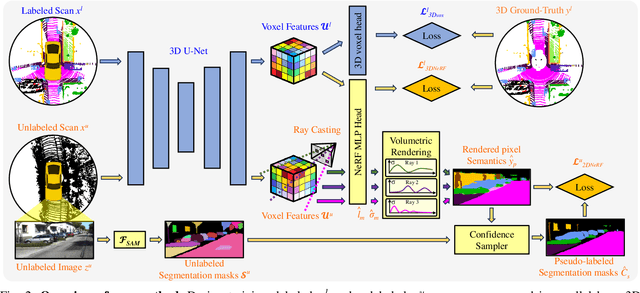

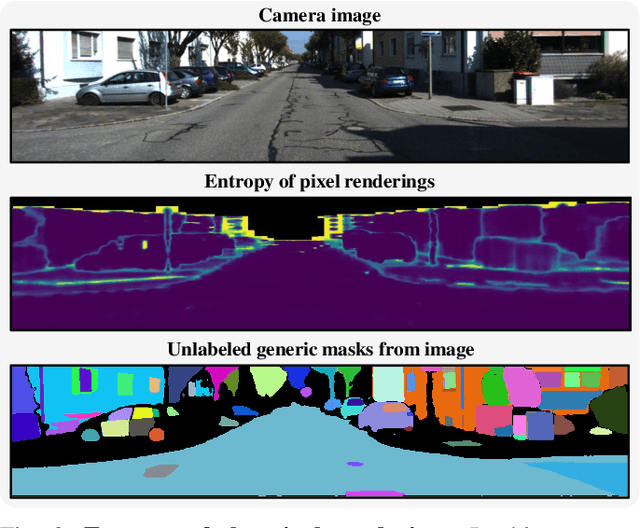

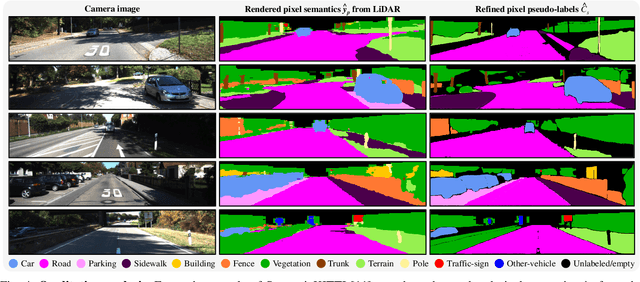

Abstract: LiDAR Semantic Segmentation is a fundamental task in autonomous driving perception that consists of associating each LiDAR point with a semantic label. Fully-supervised models have widely tackled this task, but they require labels for each scan, which either limits their domain or requires impractical amounts of expensive annotations. Camera images, which are generally recorded alongside LiDAR pointclouds, can be processed by the widely available 2D foundation models, which are generic and dataset-agnostic. However, distilling knowledge from 2D data to improve LiDAR perception raises domain adaptation challenges. For example, the classical perspective projection suffers from the parallax effect produced by the position shift between both sensors at their respective capture times. We propose a Semi-Supervised Learning setup to leverage unlabeled LiDAR pointclouds alongside distilled knowledge from the camera images. To self-supervise our model on the unlabeled scans, we add an auxiliary NeRF head and cast rays from the camera viewpoint over the unlabeled voxel features. The NeRF head predicts densities and semantic logits at each sampled ray location, which are used for rendering pixel semantics. Concurrently, we query the Segment-Anything (SAM) foundation model with the camera image to generate a set of unlabeled generic masks. We fuse the masks with the rendered pixel semantics from LiDAR to produce pseudo-labels that supervise the pixel predictions. During inference, we drop the NeRF head and run our model with only LiDAR. We show the effectiveness of our approach on three public LiDAR Semantic Segmentation benchmarks: nuScenes, SemanticKITTI and ScribbleKITTI.
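The rendering step mentioned in the abstract (densities and semantic logits at sampled ray locations combined into one pixel prediction) can be illustrated with the standard NeRF-style alpha compositing rule. The sketch below is a minimal pure-Python illustration, not the paper's implementation: the function names `render_ray_semantics` and `softmax`, the choice to composite logits before applying softmax, and the per-sample spacing `deltas` are all assumptions made for this example.

```python
import math


def render_ray_semantics(densities, logits, deltas):
    """Composite per-sample semantic logits along one camera ray.

    Hypothetical helper (not from the paper). Uses the usual volume
    rendering weights: alpha_i = 1 - exp(-sigma_i * delta_i),
    w_i = T_i * alpha_i, with T_i the transmittance accumulated so far.
    """
    num_classes = len(logits[0])
    rendered = [0.0] * num_classes
    weights = []
    transmittance = 1.0  # fraction of the ray not yet absorbed
    for sigma, sample_logits, delta in zip(densities, logits, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this sample
        weight = transmittance * alpha
        weights.append(weight)
        for c in range(num_classes):
            rendered[c] += weight * sample_logits[c]
        transmittance *= 1.0 - alpha  # light remaining after this sample
    return rendered, weights


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Toy ray: empty space first, then a dense surface of class 1,
# then an occluded sample of class 0 behind it.
densities = [0.0, 50.0, 50.0]
logits = [[5.0, 0.0], [0.0, 5.0], [5.0, 0.0]]
deltas = [1.0, 1.0, 1.0]

pixel_logits, weights = render_ray_semantics(densities, logits, deltas)
pixel_probs = softmax(pixel_logits)
```

In this toy ray the first dense sample receives almost all of the weight, so the occluded sample behind it barely affects the pixel prediction. That occlusion-aware behaviour is the general motivation for rendering along camera rays rather than projecting points directly, although how the paper handles the details may differ from this sketch.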

Reinforcement Learning for Scalable Logic Optimization with Graph Neural Networks

May 04, 2021

Abstract: Logic optimization is an NP-hard problem commonly approached through hand-engineered heuristics. We propose to combine graph convolutional networks with reinforcement learning and a novel, scalable node embedding method to learn which local transforms should be applied to the logic graph. We show that this method achieves a size reduction similar to that of ABC on smaller circuits and outperforms it by 1.5-1.75x on larger random graphs.

Radiation pattern prediction for Metasurfaces: A Neural Network based approach

Jul 15, 2020

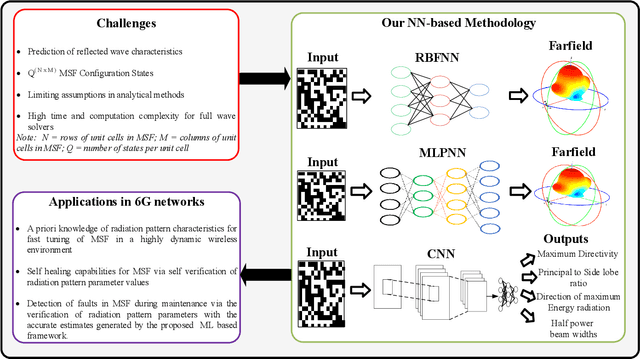

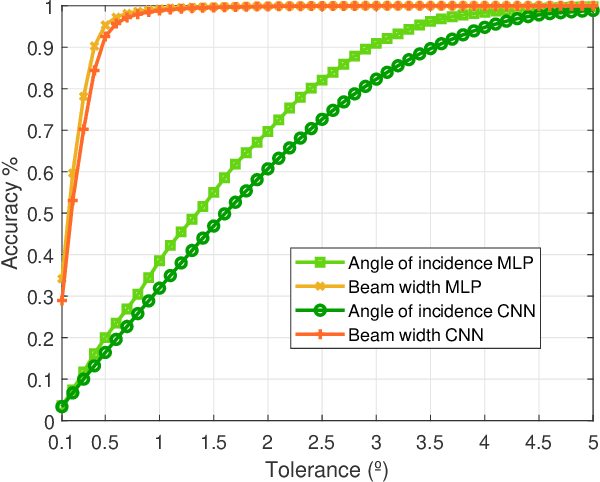

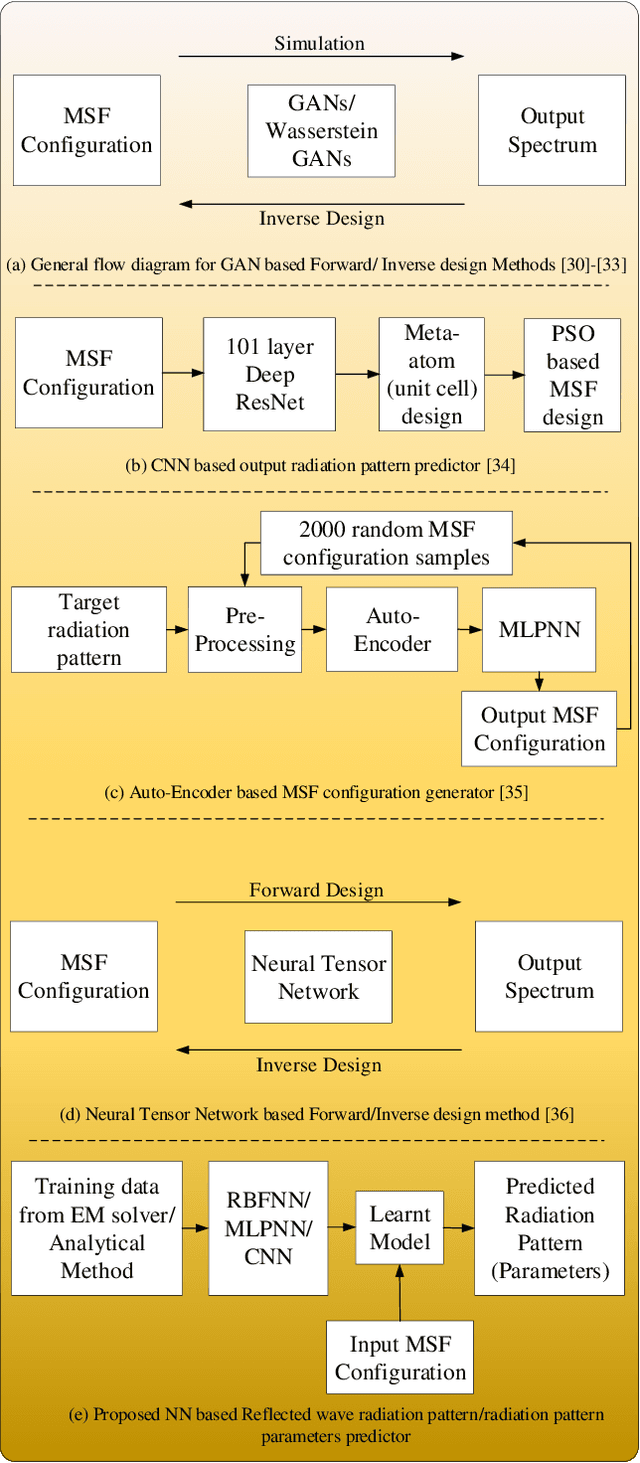

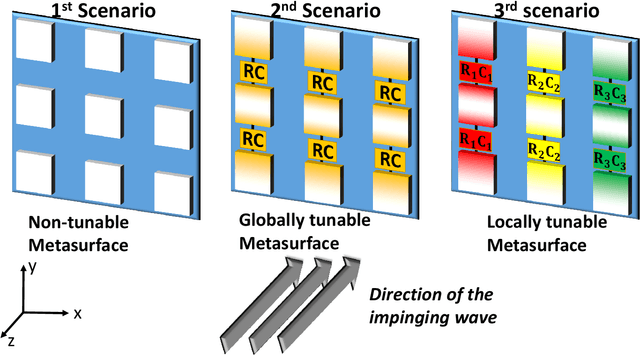

Abstract: As the current standardization for the 5G networks nears completion, work towards understanding the potential technologies for the 6G wireless networks is already underway. One of these potential technologies for the 6G networks is Reconfigurable Intelligent Surfaces (RISs). They offer unprecedented degrees of freedom towards engineering the wireless channel, i.e., the ability to modify the characteristics of the channel whenever and however required. Nevertheless, such properties demand that the response of the associated metasurface (MSF) is well understood under all possible operational conditions. While an understanding of the radiation pattern characteristics can be obtained through either analytical models or full-wave simulations, they suffer from inaccuracy under certain conditions and extremely high computational complexity, respectively. Hence, in this paper we propose a novel neural-network-based approach that enables a fast and accurate characterization of the MSF response. We analyze multiple scenarios and demonstrate the capabilities and utility of the proposed methodology. Concretely, we show that this method is able to learn and predict the parameters governing the reflected wave radiation pattern with the accuracy of a full-wave simulation (98.8%-99.8%) and the time and computational complexity of an analytical model. This result and methodology will be of specific importance for the design, fault tolerance and maintenance of the thousands of RISs that will be deployed in the 6G network environment.
