Albert Cabellos-Aparicio

Barcelona Neural Networking Center, Universitat Politècnica de Catalunya, Spain

Ordered Topological Deep Learning: a Network Modeling Case Study

Mar 20, 2025

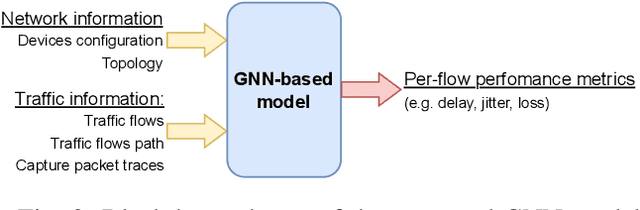

Abstract: Computer networks are the foundation of modern digital infrastructure, facilitating global communication and data exchange. As demand for reliable high-bandwidth connectivity grows, advanced network modeling techniques become increasingly essential to optimize performance and predict network behavior. Traditional modeling methods, such as packet-level simulators and queueing theory, have notable limitations: they are either computationally expensive or rely on restrictive assumptions that reduce accuracy. In this context, the deep learning-based RouteNet family of models has recently redefined network modeling by showing an unprecedented cost-performance trade-off. In this work, we revisit RouteNet's sophisticated design and uncover its hidden connection to Topological Deep Learning (TDL), an emerging field that models higher-order interactions beyond standard graph-based methods. We demonstrate that, although originally formulated as a heterogeneous Graph Neural Network, RouteNet serves as the first instantiation of a new form of TDL. More specifically, this paper presents OrdGCCN, a novel TDL framework that introduces the notion of ordered neighbors in arbitrary discrete topological spaces, and shows that RouteNet's architecture can be naturally described as an ordered topological neural network. To the best of our knowledge, this marks the first successful real-world application of state-of-the-art TDL principles (which we confirm through extensive testbed experiments), laying the foundation for the next generation of ordered TDL-driven applications.

RouteNet-Gauss: Hardware-Enhanced Network Modeling with Machine Learning

Jan 15, 2025

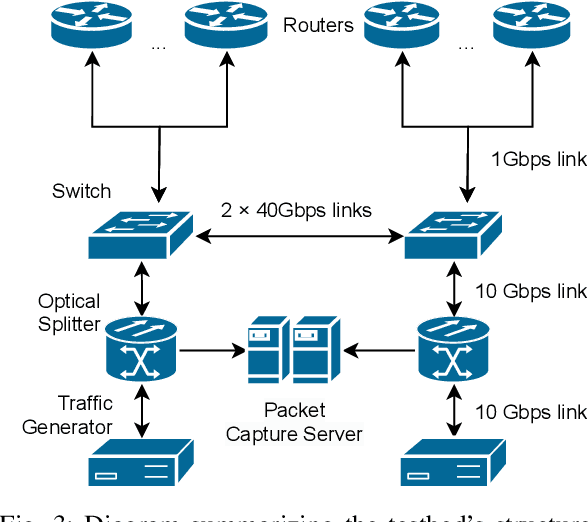

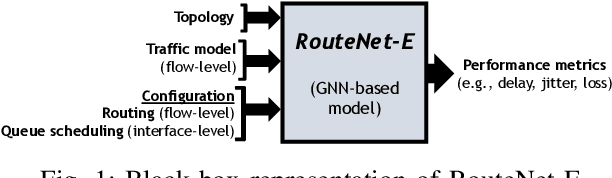

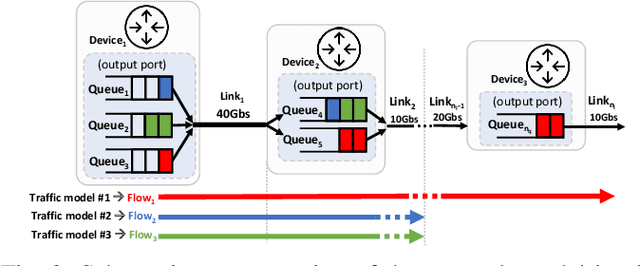

Abstract: Network simulation is pivotal in network modeling, assisting with tasks ranging from capacity planning to performance estimation. Traditional approaches such as Discrete Event Simulation (DES) face limitations in terms of computational cost and accuracy. This paper introduces RouteNet-Gauss, a novel integration of a testbed network with a Machine Learning (ML) model to address these challenges. By using the testbed as a hardware accelerator, RouteNet-Gauss generates training datasets rapidly and simulates network scenarios with high fidelity to real-world conditions. Experimental results show that RouteNet-Gauss reduces prediction errors by up to 95% and achieves a 488x speedup in inference time compared to state-of-the-art DES-based methods. RouteNet-Gauss's modular architecture is dynamically constructed based on the specific characteristics of the network scenario, such as topology and routing. This enables it to understand and generalize to different network configurations beyond those seen during training, including networks up to 10x larger. Additionally, it supports Temporal Aggregated Performance Estimation (TAPE), providing configurable temporal granularity and maintaining high accuracy in flow performance metrics. This approach shows promise in improving both simulation efficiency and accuracy, offering a valuable tool for network operators.

Detecting Contextual Network Anomalies with Graph Neural Networks

Dec 11, 2023

Abstract: Detecting anomalies in network traffic is a complex task due to the massive number of traffic flows in today's networks, as well as the highly dynamic nature of traffic over time. In this paper, we propose the use of Graph Neural Networks (GNN) for network traffic anomaly detection. We formulate the problem as contextual anomaly detection on network traffic measurements, and propose a custom GNN-based solution that detects traffic anomalies on origin-destination flows. In our evaluation, we use real-world data from Abilene (6 months), and compare against other widely used methods for the same task (PCA, EWMA, RNN). The results show that the anomalies detected by our solution are largely complementary to those captured by the baselines (at most 36.33% overlapping anomalies, for PCA). Moreover, we manually inspect the anomalies detected by our method, and find that a large portion of them can be visually validated by a network expert (64% with high confidence, 18% with mid confidence, 18% normal traffic). Lastly, we analyze the characteristics of the anomalies through two paradigmatic cases that are representative of the bulk of anomalies.
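EWMA, one of the baselines named in the abstract above, can be illustrated with a minimal sketch. This is a generic EWMA residual detector, not the authors' GNN and not necessarily their baseline configuration; the function name `ewma_anomalies`, the smoothing factor `alpha`, the threshold `k`, and the `warmup` length are all illustrative assumptions.

```python
from math import sqrt


def ewma_anomalies(series, alpha=0.3, k=3.0, warmup=5):
    """Return indices whose forecast error is more than k standard
    deviations away from the mean of the previously seen errors.

    alpha, k and warmup are hypothetical defaults chosen for illustration.
    """
    if not series:
        return []
    forecast = series[0]   # EWMA forecast for the next sample
    residuals = []         # forecast errors observed so far
    flagged = []
    for i, x in enumerate(series[1:], start=1):
        err = x - forecast
        if len(residuals) >= warmup:
            mean = sum(residuals) / len(residuals)
            var = sum((r - mean) ** 2 for r in residuals) / len(residuals)
            std = sqrt(var)
            if std > 0 and abs(err - mean) > k * std:
                flagged.append(i)
        residuals.append(err)
        # Standard EWMA update: blend the new sample into the forecast.
        forecast = alpha * x + (1 - alpha) * forecast
    return flagged


if __name__ == "__main__":
    traffic = [10, 11, 10, 11, 10, 11, 10, 11, 50, 10, 11]
    print(ewma_anomalies(traffic))
```

Such a detector looks at each flow in isolation, which is one plausible reason why a method that uses the context of other origin-destination flows would surface a different set of anomalies.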

Building a Graph-based Deep Learning network model from captured traffic traces

Oct 18, 2023

Abstract: Currently, state-of-the-art network models are based on or depend on Discrete Event Simulation (DES). While DES is highly accurate, it is also computationally costly and cumbersome to parallelize, making it impractical for simulating high-performance networks. Additionally, simulated scenarios fail to capture all of the complexities present in real network scenarios. While there exist network models based on Machine Learning (ML) techniques that aim to minimize these issues, these models are also trained with simulated data and hence vulnerable to the same pitfalls. Consequently, the Graph Neural Networking Challenge 2023 introduces a dataset of captured traffic traces that can be used to build an ML-based network model without these limitations. In this paper we propose a Graph Neural Network (GNN)-based solution specifically designed to better capture the complexities of real network scenarios. This is done through a novel encoding method to capture information from the sequence of captured packets, and an improved message passing algorithm to better represent the dependencies present in physical networks. We show that the proposed solution is able to learn and generalize to unseen captured network scenarios.

Topological Graph Signal Compression

Aug 21, 2023

Abstract: Recently emerged Topological Deep Learning (TDL) methods aim to extend current Graph Neural Networks (GNN) by naturally processing higher-order interactions, going beyond the pairwise relations and local neighborhoods defined by graph representations. In this paper we propose a novel TDL-based method for compressing signals over graphs, consisting of two main steps: first, disjoint sets of higher-order structures are inferred based on the original signal by clustering $N$ datapoints into $K\ll N$ collections; then, a topologically inspired message passing scheme obtains a compressed representation of the signal within those multi-element sets. Our results show that our framework improves on both standard GNN and feed-forward architectures in compressing temporal link-based signals from two real-world Internet Service Provider network datasets (from $30\%$ up to $90\%$ better reconstruction errors across all evaluation scenarios), suggesting that it better captures and exploits spatial and temporal correlations over the whole graph-based network structure.

GraphCC: A Practical Graph Learning-based Approach to Congestion Control in Datacenters

Aug 09, 2023

Abstract: Congestion Control (CC) plays a fundamental role in optimizing traffic in Data Center Networks (DCN). Currently, DCNs mainly implement two CC protocols: DCTCP and DCQCN. Both protocols, and their main variants, are based on Explicit Congestion Notification (ECN), where intermediate switches mark packets when they detect congestion. The ECN configuration is thus crucial to the performance of CC protocols. Nowadays, network experts set static ECN parameters carefully selected to optimize the average network performance. However, today's high-speed DCNs experience quick and abrupt changes that severely alter the network state (e.g., dynamic traffic workloads, incast events, failures). This leads to under-utilization and sub-optimal performance. This paper presents GraphCC, a novel Machine Learning-based framework for in-network CC optimization. Our distributed solution relies on a novel combination of Multi-agent Reinforcement Learning (MARL) and Graph Neural Networks (GNN), and it is compatible with widely deployed ECN-based CC protocols. GraphCC deploys distributed agents on switches that communicate with their neighbors to cooperate and optimize the global ECN configuration. In our evaluation, we test the performance of GraphCC under a wide variety of scenarios, focusing on the capability of this solution to adapt to new scenarios unseen during training (e.g., new traffic workloads, failures, upgrades). We compare GraphCC with ACC, a state-of-the-art MARL-based solution for ECN tuning, and observe that our proposed solution outperforms this baseline in all of the evaluation scenarios, showing improvements of up to $20\%$ in Flow Completion Time as well as significant reductions in buffer occupancy ($38.0\%$ to $85.7\%$).
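The switch-side ECN marking mentioned in the abstract above can be sketched as follows. This assumes a RED-style marking profile with a lower threshold, an upper threshold, and a maximum marking probability, which is the kind of static configuration an ECN tuning framework would adjust. The names `k_min`, `k_max`, `p_max` and all numeric values are illustrative assumptions, not settings from the paper.

```python
import random


def ecn_mark_probability(queue_len, k_min, k_max, p_max):
    """Probability of setting the ECN mark for a given queue occupancy.

    Below k_min nothing is marked, at or above k_max everything is marked,
    and in between the probability grows linearly from 0 to p_max.
    """
    if k_min > k_max:
        raise ValueError("k_min must not exceed k_max")
    if not 0.0 <= p_max <= 1.0:
        raise ValueError("p_max must be within [0, 1]")
    if queue_len <= k_min:
        return 0.0
    if queue_len >= k_max:
        return 1.0
    return p_max * (queue_len - k_min) / (k_max - k_min)


def should_mark(queue_len, k_min, k_max, p_max, rng=random):
    """Draw one marking decision for an arriving packet."""
    return rng.random() < ecn_mark_probability(queue_len, k_min, k_max, p_max)


if __name__ == "__main__":
    # Hypothetical thresholds (in KB) and marking probability.
    for occupancy in (10, 50, 100):
        print(occupancy, ecn_mark_probability(occupancy, 20, 80, 0.2))
```

Setting `k_min == k_max` reduces this profile to a single step threshold, so the same three parameters cover both a probabilistic and a threshold-style marking scheme.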

MAGNNETO: A Graph Neural Network-based Multi-Agent system for Traffic Engineering

Mar 31, 2023

Abstract: Current trends in networking propose the use of Machine Learning (ML) for a wide variety of network optimization tasks. As such, many efforts have been made to produce ML-based solutions for Traffic Engineering (TE), which is a fundamental problem in ISP networks. Nowadays, state-of-the-art TE optimizers rely on traditional optimization techniques, such as local search, constraint programming, or linear programming. In this paper, we present MAGNNETO, a distributed ML-based framework that leverages Multi-Agent Reinforcement Learning and Graph Neural Networks for distributed TE optimization. MAGNNETO deploys a set of agents across the network that learn and communicate in a distributed fashion via message exchanges between neighboring agents. In particular, we apply this framework to optimize link weights in OSPF, with the goal of minimizing network congestion. In our evaluation, we compare MAGNNETO against several state-of-the-art TE optimizers in more than 75 topologies (up to 153 nodes and 354 links), including realistic traffic loads. Our experimental results show that, thanks to its distributed nature, MAGNNETO achieves comparable performance to state-of-the-art TE optimizers with significantly lower execution times. Moreover, our ML-based solution demonstrates a strong generalization capability to successfully operate in new networks unseen during training.
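To make the objective in the abstract above concrete, the sketch below evaluates one candidate set of OSPF link weights by routing every demand on its shortest path and reporting the maximum link utilization. It is only the evaluation step that any weight optimizer needs, not MAGNNETO itself. It assumes single-path routing (no ECMP splitting), and the topology, capacities, and demands are made-up values.

```python
import heapq


def shortest_path(weights, src, dst):
    """Dijkstra over directed links; weights maps (u, v) -> positive weight."""
    adj = {}
    for (u, v), w in weights.items():
        adj.setdefault(u, []).append((v, w))
    dist = {src: 0}
    prev = {}
    heap = [(0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == dst:
            break
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry
        for v, w in adj.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if dst not in dist:
        raise ValueError(f"no path from {src} to {dst}")
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    path.reverse()
    return path


def max_link_utilization(weights, capacities, demands):
    """Route each demand on its shortest path and return max(load / capacity)."""
    load = {link: 0.0 for link in capacities}
    for (src, dst), volume in demands.items():
        path = shortest_path(weights, src, dst)
        for link in zip(path, path[1:]):
            load[link] += volume
    return max(load[link] / capacities[link] for link in capacities)


if __name__ == "__main__":
    # Hypothetical three-node topology with equal link capacities.
    capacities = {("A", "B"): 10.0, ("B", "C"): 10.0, ("A", "C"): 10.0}
    demands = {("A", "C"): 6.0, ("A", "B"): 5.0}
    detour = {("A", "B"): 1, ("B", "C"): 1, ("A", "C"): 3}
    direct = {("A", "B"): 1, ("B", "C"): 1, ("A", "C"): 1}
    print(max_link_utilization(detour, capacities, demands))
    print(max_link_utilization(direct, capacities, demands))
```

In this toy case, lowering the weight of the direct A-C link moves the A-to-C demand off the shared A-B link, which is the kind of change a link-weight optimizer searches for.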

Proximal Policy Optimization with Graph Neural Networks for Optimal Power Flow

Dec 23, 2022

Abstract: Optimal Power Flow (OPF) is a very traditional research area within the power systems field that seeks the optimal operation point of electric power plants, and which needs to be solved every few minutes in real-world scenarios. However, due to the nonconvexities that arise in power generation systems, there is not yet a fast, robust solution technique for the full Alternating Current Optimal Power Flow (ACOPF). In the last decades, power grids have evolved into a typical dynamic, non-linear and large-scale control system, known as the power system, so searching for better and faster ACOPF solutions is becoming crucial. The emergence of Graph Neural Networks (GNN) has allowed the natural use of Machine Learning (ML) algorithms on graph data, such as power networks. On the other hand, Deep Reinforcement Learning (DRL) is known for its powerful capability to solve complex decision-making problems. Although solutions that use these two methods separately are beginning to appear in the literature, none has yet combined the advantages of both. We propose a novel architecture based on the Proximal Policy Optimization algorithm with Graph Neural Networks to solve the Optimal Power Flow. The objective is to design an architecture that learns how to solve the optimization problem and that is at the same time able to generalize to unseen scenarios. We compare our solution with the DCOPF in terms of cost after having trained our DRL agent on the IEEE 30-bus system and then computing the OPF on that base network with topology changes.

RouteNet-Fermi: Network Modeling with Graph Neural Networks

Dec 22, 2022

Abstract: Network models are an essential building block of modern networks. For example, they are widely used in network planning and optimization. However, as networks increase in scale and complexity, some models present limitations, such as the assumption of Markovian traffic in queuing theory models, or the high computational cost of network simulators. Recent advances in machine learning, such as Graph Neural Networks (GNN), are enabling a new generation of network models that are data-driven and can learn complex non-linear behaviors. In this paper, we present RouteNet-Fermi, a custom GNN model that shares the same goals as queuing theory, while being considerably more accurate in the presence of realistic traffic models. The proposed model accurately predicts the delay, jitter, and loss in networks. We have tested RouteNet-Fermi in networks of increasing size (up to 300 nodes), including samples with mixed traffic profiles (e.g., with complex non-Markovian models) and arbitrary routing and queue scheduling configurations. Our experimental results show that RouteNet-Fermi achieves accuracy similar to that of computationally expensive packet-level simulators and that it scales accurately to large networks. For example, the model produces delay estimates with a mean relative error of 6.24% when applied to a test dataset with 1,000 samples, including network topologies one order of magnitude larger than those seen during training.
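The mean relative error quoted in the abstract above can be computed as in the following sketch. It assumes the common definition, the average of |predicted - actual| / |actual| over all samples; the paper may define the metric differently, and the delay values below are invented for illustration.

```python
def mean_relative_error(predicted, actual):
    """Average of |p - a| / |a| over paired samples.

    Assumes every actual value is non-zero; a zero would raise
    ZeroDivisionError.
    """
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(p - a) / abs(a) for p, a in zip(predicted, actual))
    return total / len(actual)


if __name__ == "__main__":
    # Hypothetical per-flow delays in milliseconds.
    predicted_delay = [1.1, 1.9, 3.3]
    measured_delay = [1.0, 2.0, 3.0]
    mre = mean_relative_error(predicted_delay, measured_delay)
    print(f"mean relative error: {mre:.2%}")
```

Because each error is divided by the true value, the metric weights a 1 ms miss on a 2 ms flow far more heavily than the same miss on a 100 ms flow, which makes it suitable for comparing accuracy across flows with very different delays.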

RouteNet-Erlang: A Graph Neural Network for Network Performance Evaluation

Feb 28, 2022

Abstract: Network modeling is a fundamental tool in network research, design, and operation. Arguably the most popular method for modeling is Queuing Theory (QT). Its main limitation is that it imposes strong assumptions on the packet arrival process, which typically do not hold in real networks. In the field of Deep Learning, Graph Neural Networks (GNN) have emerged as a new technique to build data-driven models that can learn complex and non-linear behavior. In this paper, we present RouteNet-Erlang, a pioneering GNN architecture designed to model computer networks. RouteNet-Erlang supports complex traffic models, multi-queue scheduling policies, and routing policies, and can provide accurate estimates in networks not seen in the training phase. We benchmark RouteNet-Erlang against a state-of-the-art QT model, and our results show that it outperforms QT in all the network scenarios.
