Wouter Jansen

Delay-Multiply-And-Sum Beamforming for Real-Time In-Air Acoustic Imaging

Nov 12, 2025

Abstract:In-air acoustic imaging systems demand beamforming techniques that offer a high dynamic range and spatial resolution while also remaining robust. Conventional Delay-and-Sum (DAS) beamforming fails to meet these quality demands due to high sidelobes, a wide main lobe and the resulting low contrast, whereas advanced adaptive methods are typically precluded by the computational cost and the single-snapshot constraint of real-time field operation. To overcome this trade-off, we propose and detail the implementation of higher-order non-linear beamforming methods using the Delay-Multiply-and-Sum technique, coupled with Coherence Factor weighting, specifically adapted for ultrasonic in-air microphone arrays. Our efficient implementation enables GPU-accelerated, real-time performance on embedded computing platforms. Through validation against the DAS baseline using simulated and real-world acoustic data, we demonstrate that the proposed method provides significant improvements in image contrast, establishing higher-order non-linear beamforming as a practical, high-performance solution for in-air acoustic imaging.
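As a rough illustration of the techniques named in this abstract, the sketch below contrasts Delay-and-Sum with the standard second-order Delay-Multiply-and-Sum formulation and a Coherence Factor weight. It is not the authors' implementation: the function names, the `(channels, samples)` layout, the real-valued signals, and the assumption that steering delays have already been applied are all illustrative choices, and the higher-order variants the paper proposes are not shown.

```python
import numpy as np

def das(aligned):
    """Delay-and-Sum: sum over channels of already-delayed signals.

    aligned: real array of shape (channels, samples).
    """
    return aligned.sum(axis=0)

def dmas(aligned):
    """Second-order Delay-Multiply-and-Sum over all channel pairs i < j.

    Each pair contributes sign(a_i * a_j) * sqrt(|a_i * a_j|). With the
    signed square root s_i = sign(a_i) * sqrt(|a_i|), the pairwise sum
    equals 0.5 * ((sum_i s_i)^2 - sum_i s_i^2), which avoids the
    explicit double loop.
    """
    s = np.sign(aligned) * np.sqrt(np.abs(aligned))
    return 0.5 * (s.sum(axis=0) ** 2 - (s ** 2).sum(axis=0))

def dmas_pairwise(aligned):
    """Brute-force reference for dmas(), kept only for checking."""
    n = aligned.shape[0]
    out = np.zeros(aligned.shape[1])
    for i in range(n - 1):
        for j in range(i + 1, n):
            prod = aligned[i] * aligned[j]
            out += np.sign(prod) * np.sqrt(np.abs(prod))
    return out

def coherence_factor(aligned, eps=1e-12):
    """Coherent energy divided by (channels * incoherent energy), in [0, 1]."""
    n = aligned.shape[0]
    coherent = np.abs(aligned.sum(axis=0)) ** 2
    incoherent = n * (np.abs(aligned) ** 2).sum(axis=0)
    return coherent / (incoherent + eps)

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Hypothetical data: 8 channels sharing one echo plus independent noise.
    echo = np.sin(2 * np.pi * 0.05 * np.arange(256))
    channels = echo + 0.5 * rng.standard_normal((8, 256))
    weighted = coherence_factor(channels) * dmas(channels)
    print(das(channels).shape, weighted.shape)
```

The closed form in `dmas` costs one pass over the channels instead of one per channel pair, which is the kind of restructuring that makes pairwise methods practical on a GPU; whether the paper uses this exact identity is not stated in the abstract.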

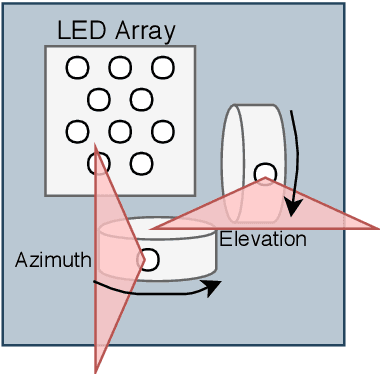

Stabilized Adaptive Steering for 3D Sonar Microphone Arrays with IMU Sensor Fusion

Jun 10, 2024

Abstract:This paper presents a novel software-based approach to stabilizing the acoustic images for in-air 3D sonars. Due to uneven terrain, traditional static beamforming techniques can be misaligned, causing inaccurate measurements and imaging artifacts. Furthermore, mechanical stabilization can be more costly and prone to failure. We propose using an adaptive conventional beamforming approach by fusing it with real-time IMU data to adjust the sonar array's steering matrix dynamically based on the elevation tilt angle caused by the uneven ground. Additionally, we propose gain compensation to offset emission energy loss due to the transducer's directivity pattern and validate our approach through various experiments, which show significant improvements in temporal consistency in the acoustic images. We implemented a GPU-accelerated software system that operates in real-time with an average execution time of 210 ms, meeting autonomous navigation requirements.
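The idea of adjusting a steering matrix from an IMU tilt reading can be sketched as follows. This is a minimal far-field example under stated assumptions, not the paper's method: the coordinate frame (x forward, y left, z up), the rotation about the y-axis, the speed of sound, and all function names are hypothetical, and gain compensation is left out.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature

def direction_vectors(azimuth, elevation):
    """Unit vectors for azimuth/elevation angles in radians.

    Assumed frame: x forward, y left, z up. Returns shape (..., 3).
    """
    ce = np.cos(elevation)
    return np.stack(
        [ce * np.cos(azimuth), ce * np.sin(azimuth), np.sin(elevation)],
        axis=-1,
    )

def pitch_rotation(tilt):
    """Rotation matrix for a rotation of `tilt` radians about the y-axis."""
    c, s = np.cos(tilt), np.sin(tilt)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def steering_delays(mic_positions, directions, tilt=0.0):
    """Far-field steering delays in seconds, shape (directions, mics).

    mic_positions: (M, 3) coordinates in the array's own frame.
    directions:    (D, 3) unit vectors in the level (world) frame.
    tilt:          array pitch reported by the IMU, in radians.

    World directions are mapped into the tilted array frame with the
    transposed rotation (directions @ R applies R^T to each row), so the
    image stays aligned with the level frame.
    """
    array_dirs = directions @ pitch_rotation(tilt)
    return -(array_dirs @ mic_positions.T) / SPEED_OF_SOUND

if __name__ == "__main__":
    # Hypothetical 2 x 2 microphone grid with 4 mm pitch in the y-z plane.
    mics = np.array([[0, y, z] for y in (0.0, 0.004) for z in (0.0, 0.004)])
    az = np.deg2rad(np.linspace(-45, 45, 5))
    dirs = direction_vectors(az, np.zeros_like(az))
    delays = steering_delays(mics, dirs, tilt=np.deg2rad(5.0))
    print(delays.shape)
```

Recomputing the delays each frame from the latest tilt reading keeps the output image in a level reference frame without any mechanical stabilization.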

Semantic Landmark Detection & Classification Using Neural Networks For 3D In-Air Sonar

May 30, 2024

Abstract:In challenging environments where traditional sensing modalities struggle, in-air sonar offers resilience to optical interference. Placing a priori known landmarks in these environments can eliminate accumulated errors in autonomous mobile systems such as Simultaneous Localization and Mapping (SLAM) and autonomous navigation. We present a novel approach using a convolutional neural network to detect and classify ten different reflector landmarks with varying radii using in-air 3D sonar. Additionally, the network predicts the orientation angle of the detected landmarks. The neural network is trained on cochleograms, representing echoes received by the sensor in a time-frequency domain. Experimental results in cluttered indoor settings show promising performance. The CNN achieves a 97.3% classification accuracy on the test dataset, accurately detecting both the presence and absence of landmarks. Moreover, the network predicts landmark orientation angles with an RMSE lower than 10 degrees, enhancing the utility in SLAM and autonomous navigation applications. This advancement improves the robustness and accuracy of autonomous systems in challenging environments.

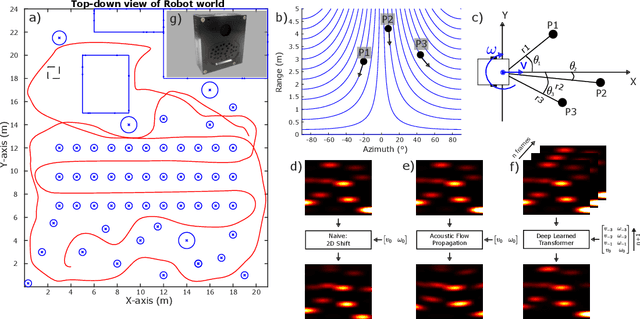

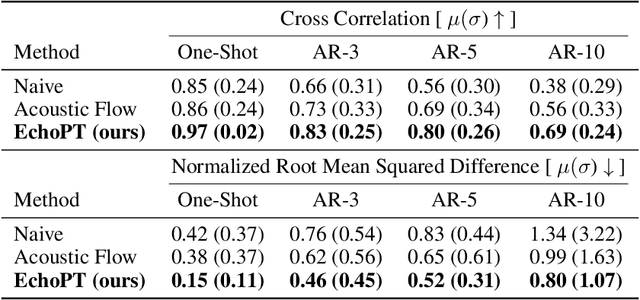

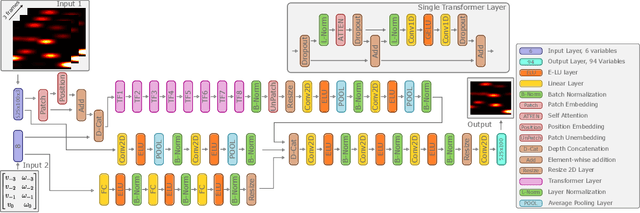

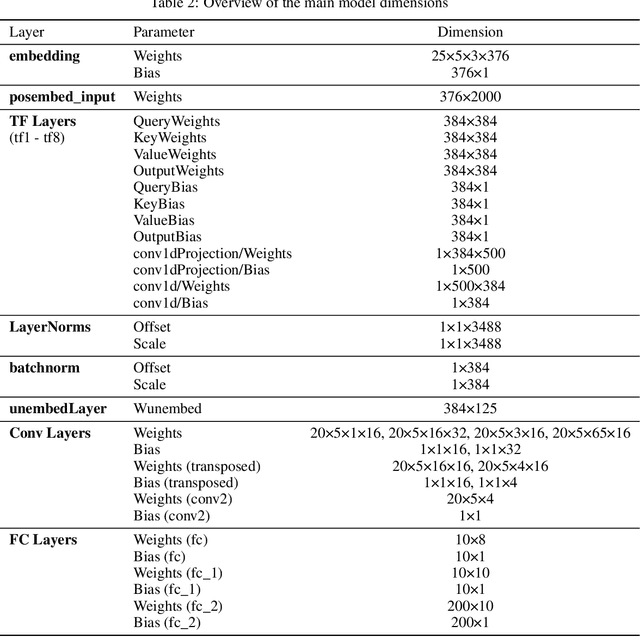

EchoPT: A Pretrained Transformer Architecture that Predicts 2D In-Air Sonar Images for Mobile Robotics

May 21, 2024

Abstract:The predictive brain hypothesis suggests that perception can be interpreted as the process of minimizing the error between predicted perception tokens generated by an internal world model and actual sensory input tokens. When implementing working examples of this hypothesis in the context of in-air sonar, significant difficulties arise due to the sparse nature of the reflection model that governs ultrasonic sensing. Despite these challenges, creating consistent world models using sonar data is crucial for implementing predictive processing of ultrasound data in robotics. In an effort to enable robust robot behavior using ultrasound as the sole exteroceptive sensor modality, this paper introduces EchoPT, a pretrained transformer architecture designed to predict 2D sonar images from previous sensory data and robot ego-motion information. We detail the transformer architecture that drives EchoPT and compare the performance of our model to several state-of-the-art techniques. In addition to presenting and evaluating our EchoPT model, we demonstrate the effectiveness of this predictive perception approach in two robotic tasks.

SonoTraceLab -- A Raytracing-Based Acoustic Modelling System for Simulating Echolocation Behavior of Bats

Mar 11, 2024

Abstract:Echolocation is the prime sensing modality for many species of bats, who show the intricate ability to perform a plethora of tasks in complex and unstructured environments. Understanding this exceptional feat of sensorimotor interaction is a key step toward building more robust and performant man-made sonar sensors. In order to better understand the underlying perception mechanisms, it is important to gain good insight into the nature of the reflected signals that the bat perceives. While ensonification experiments are an important way to better understand the nature of these signals, they are as time-consuming to perform as they are informative. In this paper we present SonoTraceLab, an open-source software package for simulating both technical and biological sonar systems in complex scenes. Using simulation approaches can drastically increase insights into the nature of biological echolocation systems, while reducing the time and material complexity of performing them.

Detecting and Classifying Bio-Inspired Artificial Landmarks Using In-Air 3D Sonar

Aug 11, 2023

Abstract:Various autonomous applications rely on recognizing specific known landmarks in their environment. For example, Simultaneous Localization And Mapping (SLAM) is an important technique that lays the foundation for many common tasks, such as navigation and long-term object tracking. This entails building a map on the go based on sensory inputs which are prone to accumulating errors. Recognizing landmarks in the environment plays a vital role in correcting these errors and further improving the accuracy of SLAM. The most popular choice of sensors for conducting SLAM today is optical sensors such as cameras or LiDAR sensors. These can use landmarks such as QR codes as a prerequisite. However, such sensors become unreliable in certain conditions, e.g., foggy, dusty, reflective, or glass-rich environments. Sonar has proven to be a viable alternative to manage such situations better. However, acoustic sensors also require a different type of landmark. In this paper, we put forward a method to detect the presence of bio-mimetic acoustic landmarks using support vector machines trained on the frequency bands of the reflected acoustic echoes using an embedded real-time imaging sonar.
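To make "trained on the frequency bands" more concrete, the sketch below computes normalized per-band energies from a single echo. It is only one plausible feature extraction, not the one described in the paper: the function name, the sample rate, and the band edges are hypothetical.

```python
import numpy as np

def band_energies(echo, sample_rate, band_edges):
    """Normalized spectral energy of `echo` in consecutive frequency bands.

    echo:        1-D real signal.
    sample_rate: sampling frequency in Hz.
    band_edges:  increasing frequencies in Hz; band k covers
                 [band_edges[k], band_edges[k + 1]).
    Returns an array with len(band_edges) - 1 entries that sums to 1
    whenever any energy falls inside the bands.
    """
    power = np.abs(np.fft.rfft(echo)) ** 2
    freqs = np.fft.rfftfreq(len(echo), d=1.0 / sample_rate)
    energies = np.array([
        power[(freqs >= lo) & (freqs < hi)].sum()
        for lo, hi in zip(band_edges[:-1], band_edges[1:])
    ])
    total = energies.sum()
    return energies / total if total > 0 else energies

if __name__ == "__main__":
    fs = 200_000  # Hz, hypothetical ultrasonic sample rate
    t = np.arange(2000) / fs
    echo = np.sin(2 * np.pi * 40_000 * t)  # synthetic 40 kHz tone
    print(band_energies(echo, fs, [20_000, 35_000, 50_000, 65_000]))
```

Feature vectors of this kind, one per echo, could then be passed to any support vector machine implementation, for example scikit-learn's `SVC`.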

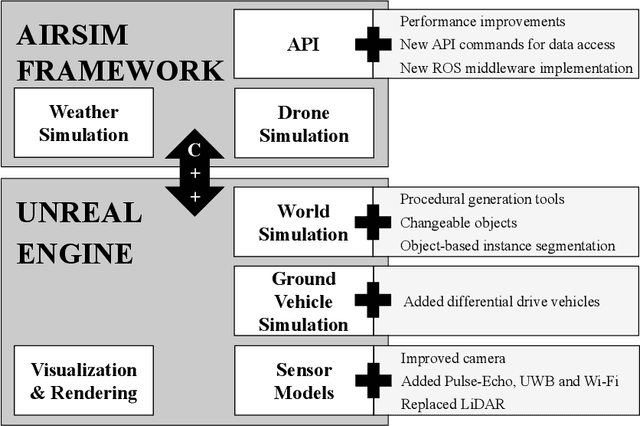

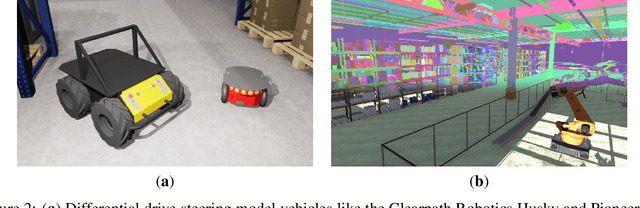

Cosys-AirSim: A Real-Time Simulation Framework Expanded for Complex Industrial Applications

Mar 28, 2023

Abstract:Within academia and industry, there has been a need for expansive simulation frameworks that include model-based simulation of sensors, mobile vehicles, and the environment around them. To this end, the modular, real-time, and open-source AirSim framework has been a popular community-built system that fulfills some of those needs. However, the framework required additional systems to serve some complex industrial applications, including designing and testing new sensor modalities, Simultaneous Localization And Mapping (SLAM), autonomous navigation algorithms, and transfer learning with machine learning models. In this work, we discuss the modifications and additions to our open-source version of the AirSim simulation framework, including new sensor modalities, vehicle types, and methods to procedurally generate realistic environments with changeable objects. Furthermore, we show the various applications and use cases the framework can serve.

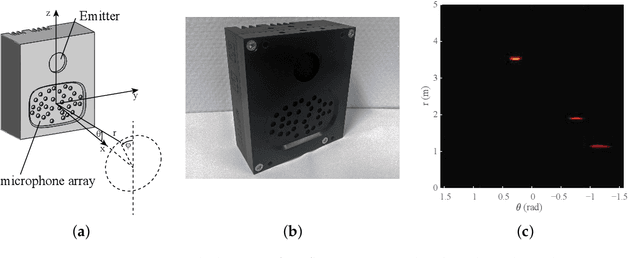

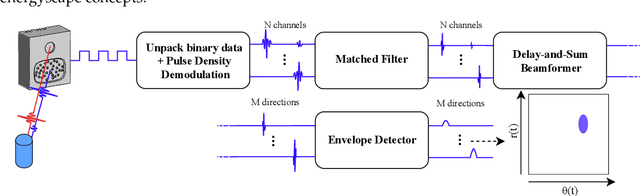

In-Air Imaging Sonar Sensor Network with Real-Time Processing Using GPUs

Aug 23, 2022

Abstract:For autonomous navigation and robotic applications, sensing the environment correctly is crucial. Many sensing modalities for this purpose exist. One such modality that has come into use in recent years is in-air imaging sonar. It is ideal in complex environments with rough conditions such as dust or fog. However, as with most sensing modalities, multiple such sensors are needed to capture the full 360-degree range around the mobile platform. Currently, the processing algorithms used to create this data are insufficient to do so for multiple sensors at a reasonably fast update rate. Furthermore, a flexible and robust framework is needed to easily implement multiple imaging sonar sensors into any setup and serve multiple application types for the data. In this paper we present a sensor network framework designed for this novel sensing modality. Furthermore, an implementation of the processing algorithm on a Graphics Processing Unit is proposed to potentially decrease the computing time to allow for real-time processing of one or more imaging sonar sensors at a sufficiently high update rate.

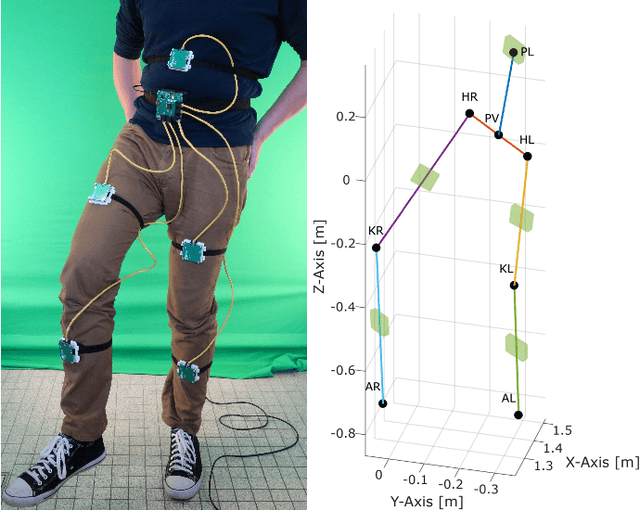

Automatic Calibration of a Six-Degrees-of-Freedom Pose Estimation System

Aug 23, 2022

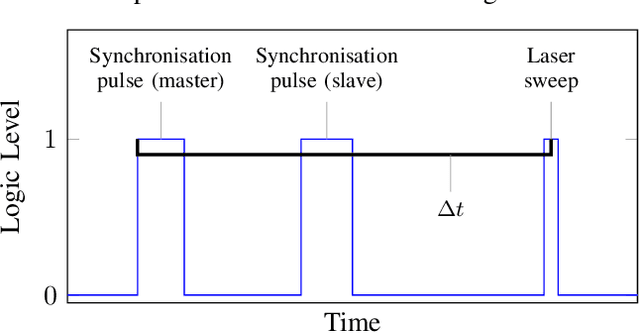

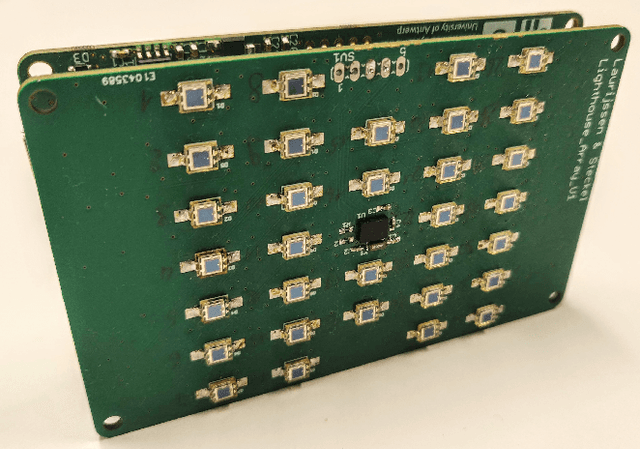

Abstract:Systems for estimating the six-degrees-of-freedom human body pose have been improving for over two decades. Technologies such as motion capture cameras, advanced gaming peripherals and more recently both deep learning techniques and virtual reality systems have shown impressive results. However, most systems that provide high accuracy and high precision are expensive and not easy to operate. Recently, research has been carried out to estimate the human body pose using the HTC Vive virtual reality system. This system shows accurate results while keeping the cost under 1000 USD. This system uses an optical approach. Two transmitter devices emit infrared pulses and laser planes, which are tracked by photodiodes on receiver hardware. A system using these transmitter devices combined with low-cost custom-made receiver hardware was developed previously but requires manual measurement of the position and orientation of the transmitter devices. These manual measurements can be time-consuming, prone to error and not possible in particular setups. We propose an algorithm to automatically calibrate the poses of the transmitter devices in any chosen environment with custom receiver/calibration hardware. Results show that the calibration works in a variety of setups while being more accurate than what manual measurements would allow. Furthermore, the calibration movement and speed have no noticeable influence on the precision of the results.

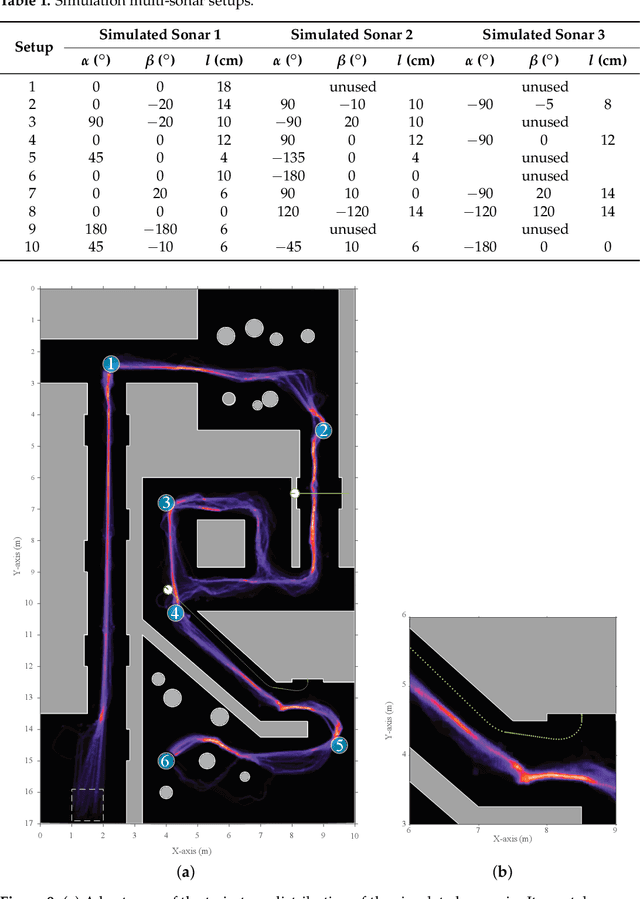

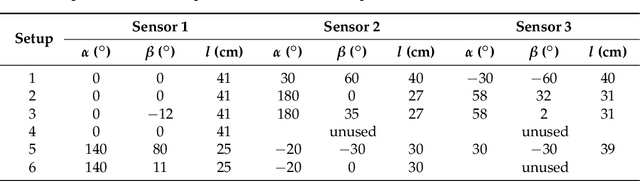

Real-Time Sonar Fusion for Layered Navigation Controller

Aug 23, 2022

Abstract:Navigation in varied and dynamic indoor environments remains a complex task for autonomous mobile platforms. Especially when conditions worsen, typical sensor modalities may fail to operate optimally and subsequently provide inapt input for safe navigation control. In this study, we present an approach for navigating a dynamic indoor environment with a mobile platform equipped with one or several sonar sensors, using a layered control system. These sensors can operate in conditions such as rain, fog, dust, or dirt. The different control layers, such as collision avoidance and corridor following behavior, are activated based on acoustic flow cues in the fusion of the sonar images. The novelty of this work is allowing these sensors to be freely positioned on the mobile platform and providing the framework for designing the optimal navigational outcome based on a zoning system around the mobile platform. Presented in this paper is the acoustic flow model used, as well as the design of the layered controller. Next to validation in simulation, an implementation is presented and validated in a real office environment using a real mobile platform with one, two, or three sonar sensors in real time with 2D navigation. Multiple sensor layouts were validated in both the simulation and real experiments to demonstrate that the modular approach for the controller and sensor fusion works optimally. The results of this work show stable and safe navigation of indoor environments with dynamic objects.
