Real-Time Sonar Fusion for Layered Navigation Controller

Aug 23, 2022

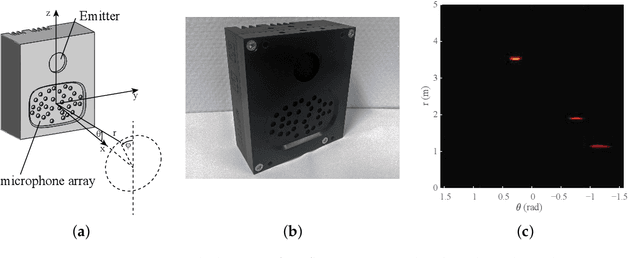

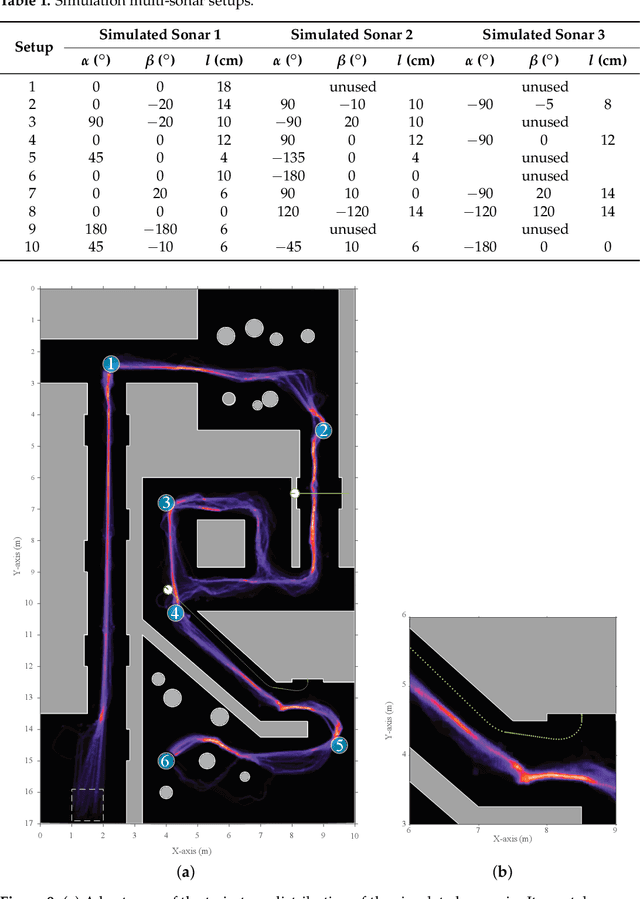

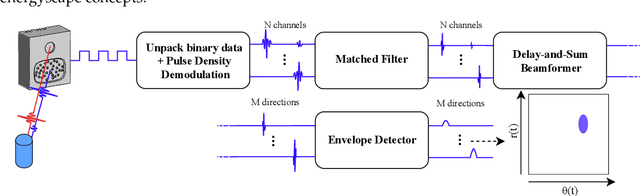

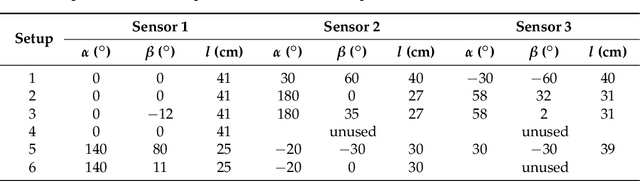

Navigation in varied and dynamic indoor environments remains a complex task for autonomous mobile platforms. When conditions worsen, typical sensor modalities may fail to operate optimally and consequently provide unsuitable input for safe navigation control. In this study, we present an approach for navigating dynamic indoor environments with a mobile platform carrying one or more sonar sensors, using a layered control system. These sensors can operate in conditions such as rain, fog, dust, or dirt. The different control layers, such as collision avoidance and corridor-following behavior, are activated based on acoustic flow cues in the fusion of the sonar images. The novelty of this work is that the sensors can be freely positioned on the mobile platform, and that it provides a framework for designing the desired navigational outcome based on a zoning system around the platform. This paper presents the acoustic flow model used, as well as the design of the layered controller. In addition to validation in simulation, an implementation is presented and validated in a real office environment using a real mobile platform with one, two, or three sonar sensors, in real time and with 2D navigation. Multiple sensor layouts were validated in both the simulation and the real experiments to demonstrate that the modular approach to the controller and sensor fusion holds up across sensor configurations. The results show stable and safe navigation of indoor environments with dynamic objects.
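To make the idea of zone-triggered control layers more concrete, the following is a minimal sketch of a priority-ordered layered controller. It is not the implementation from the paper: the abstract does not specify the acoustic flow model or the zone geometry, so the sketch swaps in plain 2D point detections in the robot frame, and every name, zone size, gain, and speed (`Zone`, `Command`, `fuse`, `control`, the rectangular zones, the 0.3 m/s cruise speed) is a hypothetical choice made for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Assumption: a detection is a point (x forward, y left) in metres, robot frame.
Point = Tuple[float, float]


@dataclass
class Zone:
    """Hypothetical axis-aligned rectangular zone around the platform."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, p: Point) -> bool:
        return self.x_min <= p[0] <= self.x_max and self.y_min <= p[1] <= self.y_max


@dataclass
class Command:
    linear: float   # m/s
    angular: float  # rad/s, positive turns left
    layer: str      # name of the layer that produced the command


def fuse(detections_per_sensor: List[List[Point]]) -> List[Point]:
    """Merge per-sensor detections that are already in the robot frame."""
    return [p for sensor in detections_per_sensor for p in sensor]


def collision_avoidance(points: List[Point], front: Zone) -> Optional[Command]:
    hits = [p for p in points if front.contains(p)]
    if not hits:
        return None  # layer stays inactive
    nearest = min(hits, key=lambda p: p[0] ** 2 + p[1] ** 2)
    # Stop and rotate away from the side of the nearest detection.
    turn = -0.8 if nearest[1] >= 0 else 0.8
    return Command(0.0, turn, "collision_avoidance")


def corridor_following(points: List[Point], left: Zone, right: Zone) -> Optional[Command]:
    left_dist = [p[1] for p in points if left.contains(p)]
    right_dist = [-p[1] for p in points if right.contains(p)]
    if not left_dist or not right_dist:
        return None  # needs a wall on both sides
    # Positive error: left wall is farther away, so steer left to re-centre.
    error = min(left_dist) - min(right_dist)
    return Command(0.3, 0.5 * error, "corridor_following")


def control(points: List[Point]) -> Command:
    """Return the command of the highest-priority layer that is active."""
    front = Zone(0.0, 0.5, -0.3, 0.3)
    left = Zone(-0.5, 1.0, 0.3, 1.5)
    right = Zone(-0.5, 1.0, -1.5, -0.3)
    layers = (
        lambda: collision_avoidance(points, front),
        lambda: corridor_following(points, left, right),
    )
    for layer in layers:
        cmd = layer()
        if cmd is not None:
            return cmd
    return Command(0.3, 0.0, "default")  # no cue in any zone: drive straight
```

Because `fuse` only concatenates detections in a shared robot frame, adding or moving a sensor in this sketch changes only the input list, not the layers. For example, `control(fuse([[(0.3, 0.1)], []]))` selects the collision-avoidance layer, while an empty detection list falls through to the default forward command.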
