Nico Huebel

EchoPT: A Pretrained Transformer Architecture that Predicts 2D In-Air Sonar Images for Mobile Robotics

May 21, 2024

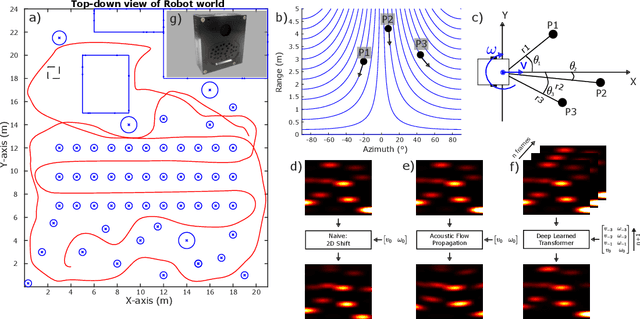

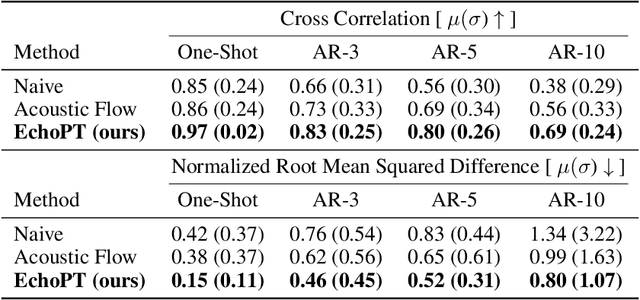

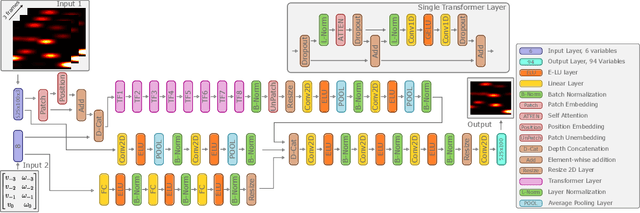

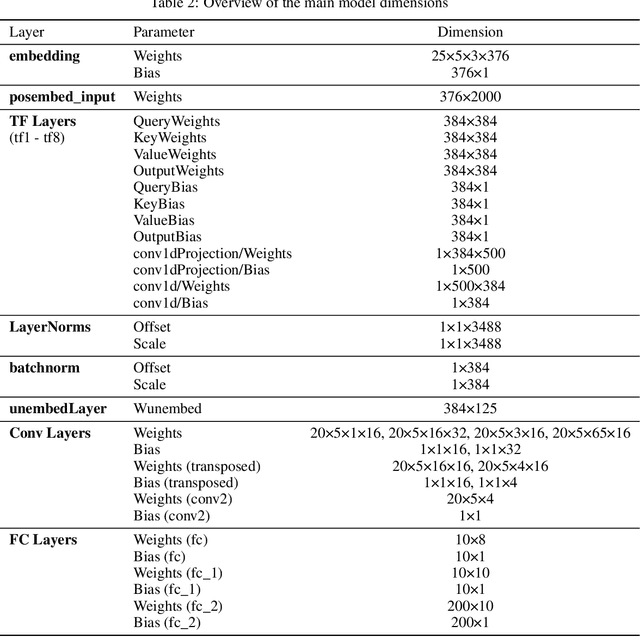

Abstract: The predictive brain hypothesis suggests that perception can be interpreted as the process of minimizing the error between predicted perception tokens generated by an internal world model and actual sensory input tokens. When implementing working examples of this hypothesis in the context of in-air sonar, significant difficulties arise due to the sparse nature of the reflection model that governs ultrasonic sensing. Despite these challenges, creating consistent world models using sonar data is crucial for implementing predictive processing of ultrasound data in robotics. To enable robust robot behavior using ultrasound as the sole exteroceptive sensor modality, this paper introduces EchoPT, a pretrained transformer architecture designed to predict 2D sonar images from previous sensory data and robot ego-motion information. We detail the transformer architecture that drives EchoPT and compare the performance of our model to several state-of-the-art techniques. In addition to presenting and evaluating our EchoPT model, we demonstrate the effectiveness of this predictive perception approach in two robotic tasks.

Cosys-AirSim: A Real-Time Simulation Framework Expanded for Complex Industrial Applications

Mar 28, 2023

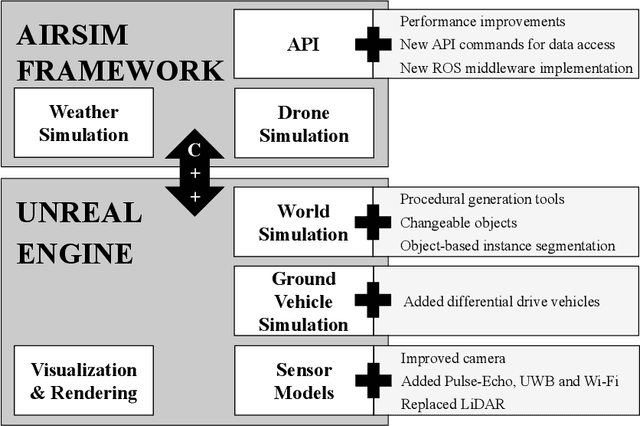

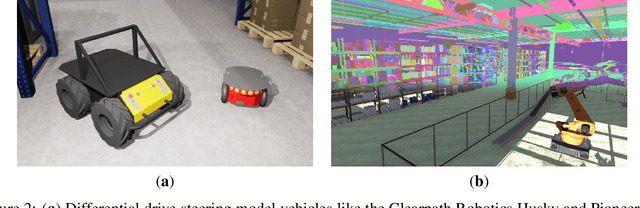

Abstract: Within academia and industry, there has been a need for expansive simulation frameworks that include model-based simulation of sensors, mobile vehicles, and the environment around them. To this end, the modular, real-time, and open-source AirSim framework has been a popular community-built system that fulfills some of those needs. However, the framework required additional systems to serve some complex industrial applications, including designing and testing new sensor modalities, Simultaneous Localization And Mapping (SLAM), autonomous navigation algorithms, and transfer learning with machine learning models. In this work, we discuss the modifications and additions to our open-source version of the AirSim simulation framework, including new sensor modalities, vehicle types, and methods to procedurally generate realistic environments with changeable objects. Furthermore, we show the various applications and use cases the framework can serve.

Physical LiDAR Simulation in Real-Time Engine

Aug 23, 2022

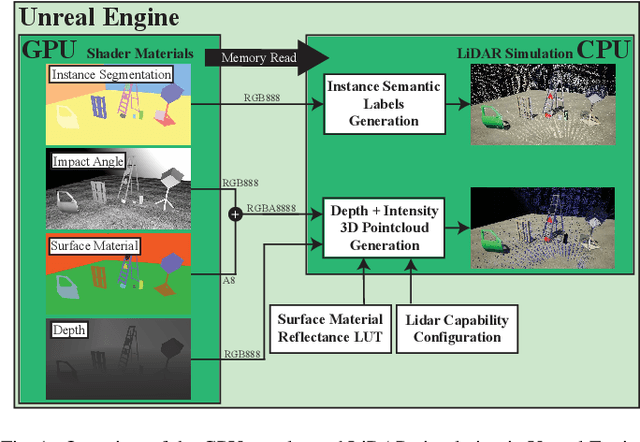

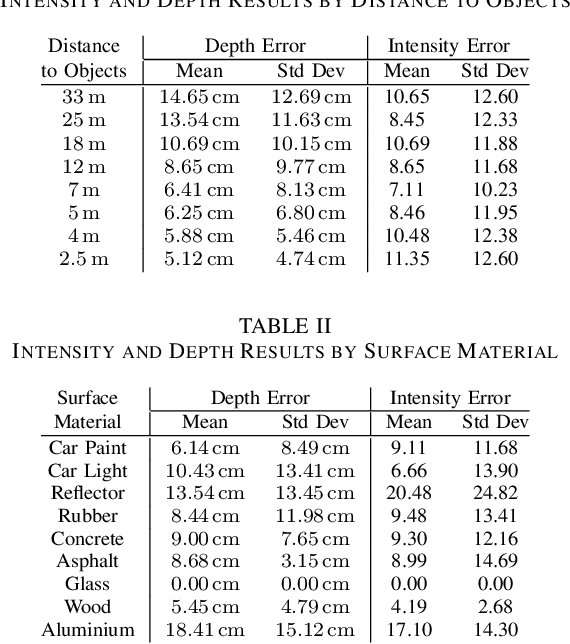

Abstract: Designing and validating sensor applications and algorithms in simulation is an important step in the modern development process. Furthermore, modern open-source multi-sensor simulation frameworks are moving towards the use of video-game engines such as the Unreal Engine. Simulating a sensor such as a LiDAR can prove difficult in such real-time software. In this paper we present a GPU-accelerated simulation of LiDAR based on its physical properties and interaction with the environment. We generate the depth and intensity data based on the properties of the sensor as well as the surface material and the incidence angle at which the light beams hit the surface. The simulation is validated against a real LiDAR sensor and shown to be accurate and precise, although highly dependent on the spectral data used for the material properties.
