EchoPT: A Pretrained Transformer Architecture that Predicts 2D In-Air Sonar Images for Mobile Robotics

Paper and Code

May 21, 2024

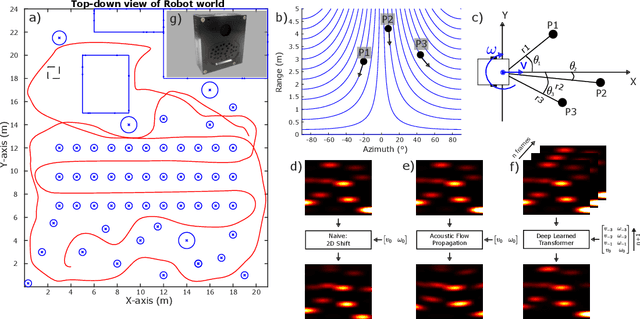

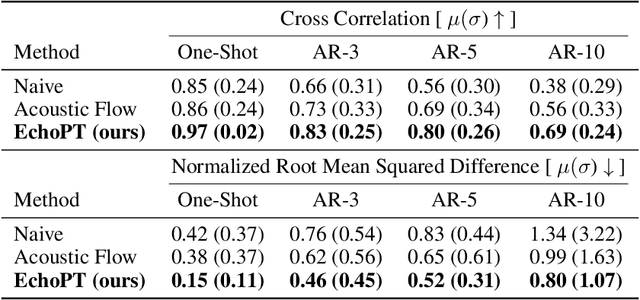

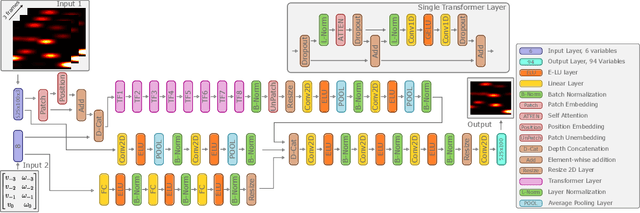

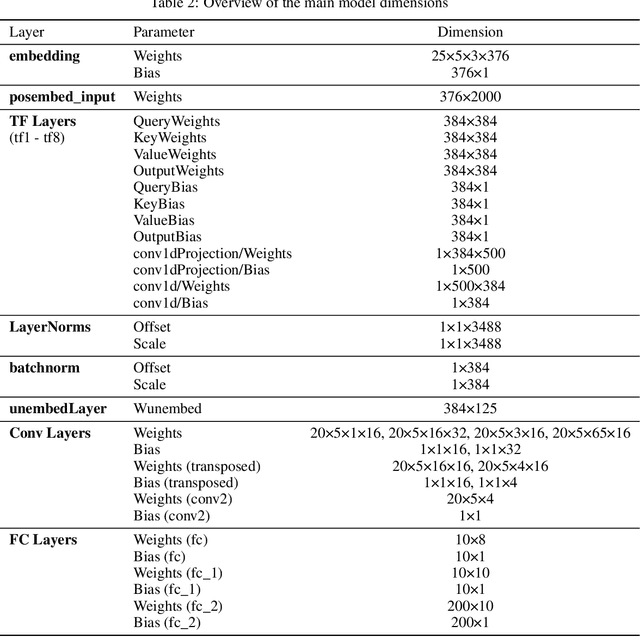

The predictive brain hypothesis suggests that perception can be interpreted as the process of minimizing the error between predicted perception tokens generated by an internal world model and actual sensory input tokens. When implementing working examples of this hypothesis in the context of in-air sonar, significant difficulties arise due to the sparse nature of the reflection model that governs ultrasonic sensing. Despite these challenges, creating consistent world models using sonar data is crucial for implementing predictive processing of ultrasound data in robotics. In an effort to enable robust robot behavior using ultrasound as the sole exteroceptive sensor modality, this paper introduces EchoPT, a pretrained transformer architecture designed to predict 2D sonar images from previous sensory data and robot ego-motion information. We detail the transformer architecture that drives EchoPT and compare the performance of our model to several state-of-the-art techniques. In addition to presenting and evaluating our EchoPT model, we demonstrate the effectiveness of this predictive perception approach in two robotic tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge