Wolfgang Ganglberger

Contactless Polysomnography: What Radio Waves Tell Us about Sleep

May 20, 2024

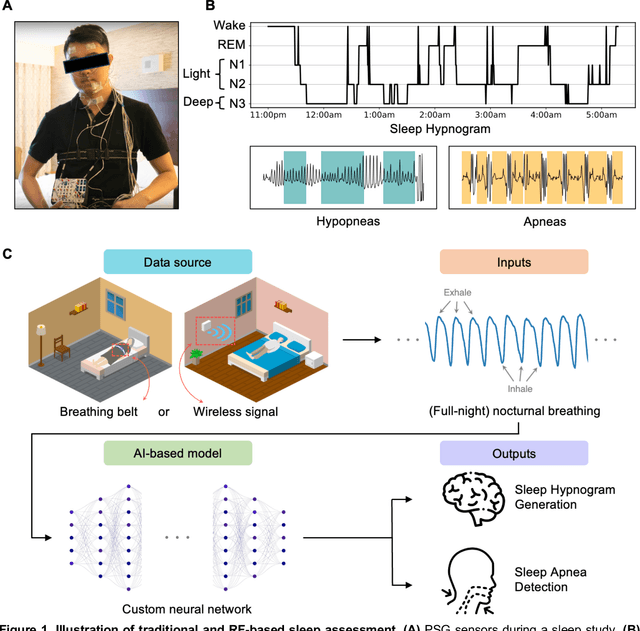

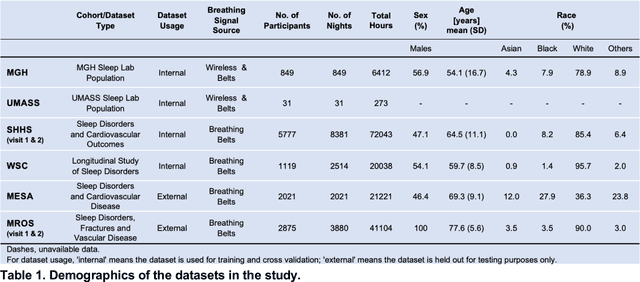

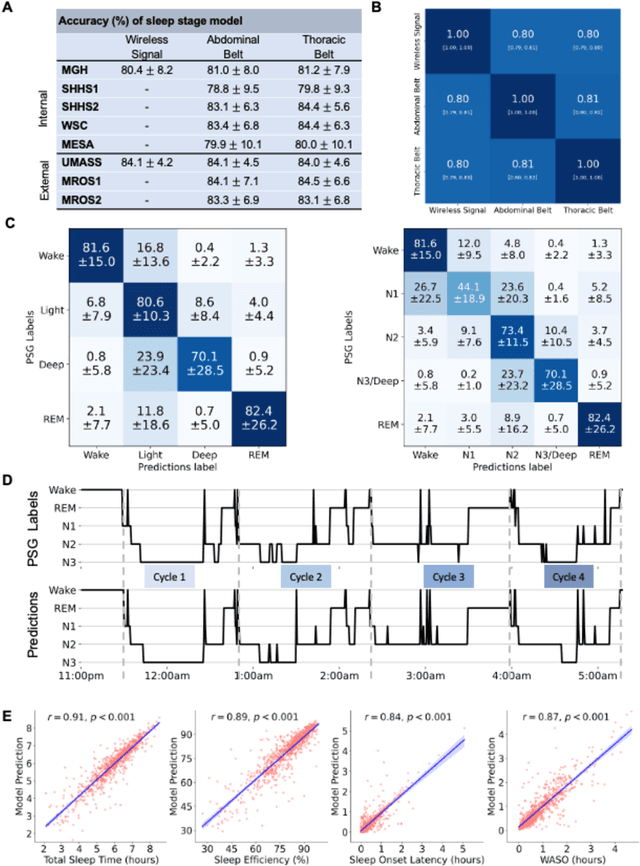

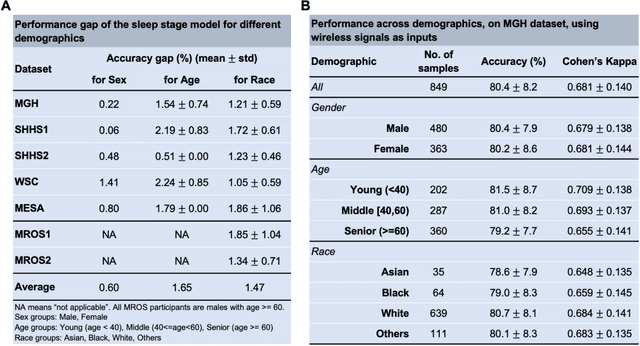

Abstract: The ability to assess sleep at home, capture sleep stages, and detect the occurrence of apnea without on-body sensors, simply by analyzing the radio waves bouncing off people's bodies while they sleep, is quite powerful. Such a capability would allow for longitudinal data collection in patients' homes, informing our understanding of sleep and its interaction with various diseases and their therapeutic responses, both in clinical trials and routine care. In this article, we develop an advanced machine learning algorithm for passively monitoring sleep and nocturnal breathing from radio waves reflected off people while asleep. Validation against the gold standard, polysomnography (n=849), demonstrates that the model captures the sleep hypnogram (with an accuracy of 81% for 30-second epochs categorized into Wake, Light Sleep, Deep Sleep, or REM), detects sleep apnea (AUROC = 0.88), and measures the patient's Apnea-Hypopnea Index (ICC = 0.95; 95% CI = [0.93, 0.97]). Notably, the model exhibits equitable performance across race, sex, and age. Moreover, the model uncovers informative interactions between sleep stages and a range of diseases including neurological, psychiatric, cardiovascular, and immunological disorders. These findings not only hold promise for clinical practice and interventional trials but also underscore the significance of sleep as a fundamental component in understanding and managing various diseases.
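As a rough illustration of what the epoch-level accuracy figure in this abstract measures, the sketch below compares a reference hypnogram with a predicted one, one label per 30-second epoch, and reports the fraction of epochs that agree. The function name `epoch_accuracy`, the stage labels, and the toy data are assumptions made for this example; they are not taken from the paper and do not reproduce its evaluation pipeline.

```python
from typing import Sequence

# Hypothetical stage labels for the four-class task described in the abstract.
STAGES = ("Wake", "Light", "Deep", "REM")


def epoch_accuracy(reference: Sequence[str], predicted: Sequence[str]) -> float:
    """Fraction of 30-second epochs where the predicted stage matches the reference."""
    if len(reference) != len(predicted):
        raise ValueError("hypnograms must cover the same number of epochs")
    if len(reference) == 0:
        raise ValueError("hypnograms must contain at least one epoch")
    unknown = (set(reference) | set(predicted)) - set(STAGES)
    if unknown:
        raise ValueError(f"unknown stage labels: {sorted(unknown)}")
    matches = sum(r == p for r, p in zip(reference, predicted))
    return matches / len(reference)


# Toy data: five epochs, one disagreement (Light scored as Deep).
reference = ["Wake", "Light", "Light", "Deep", "REM"]
predicted = ["Wake", "Light", "Deep", "Deep", "REM"]
accuracy = epoch_accuracy(reference, predicted)
```

Plain accuracy is sensitive to class imbalance (Light Sleep usually dominates a night), so chance-corrected agreement measures are often reported alongside it.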

Sleep Apnea and Respiratory Anomaly Detection from a Wearable Band and Oxygen Saturation

Feb 24, 2021

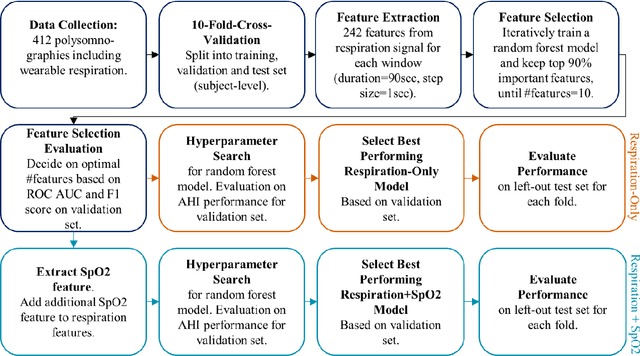

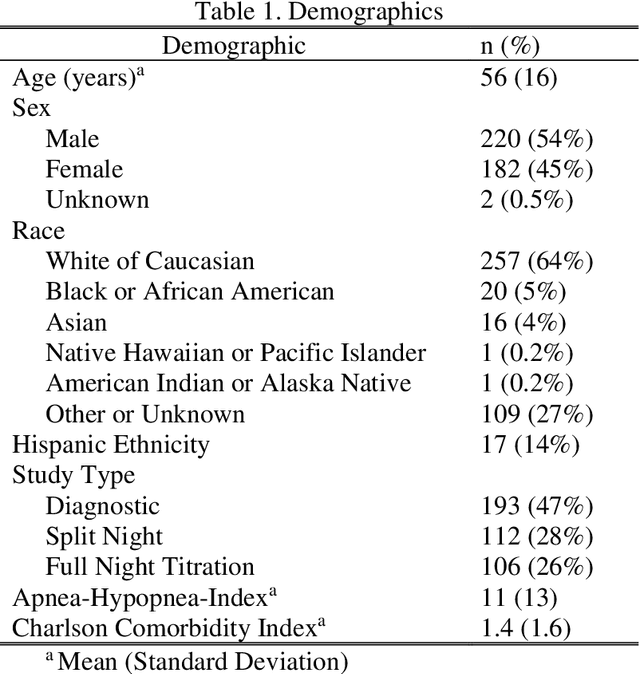

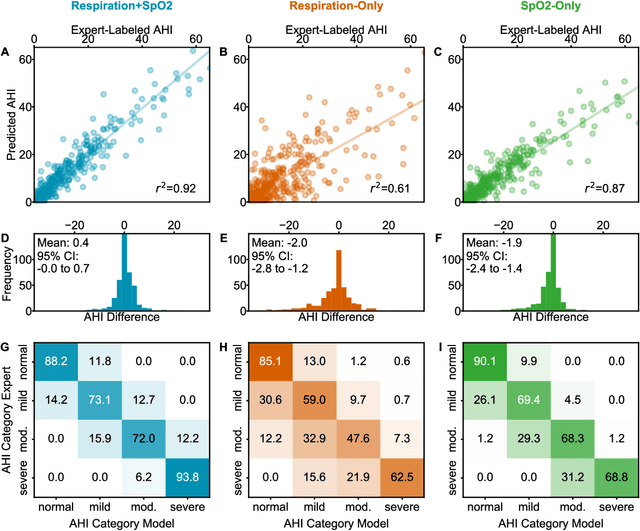

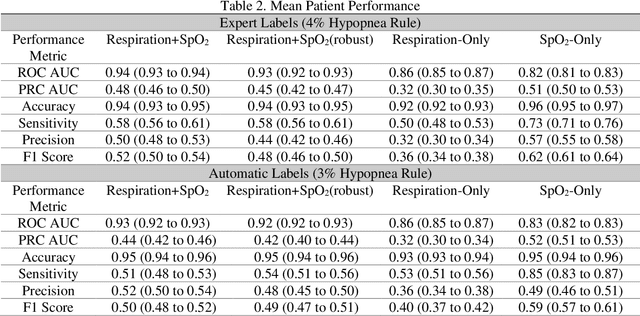

Abstract: Objective: Sleep-related respiratory abnormalities are typically detected using polysomnography. There is a need in general medicine and critical care for a more convenient method to automatically detect sleep apnea from a simple, easy-to-wear device. The objective is to automatically detect abnormal respiration and estimate the Apnea-Hypopnea Index (AHI) with a wearable respiratory device, compared to an SpO2 signal or polysomnography, using a large (n = 412) dataset serving as ground truth. Methods: Simultaneously recorded polysomnographic (PSG) and wearable respiratory effort data were used to train and evaluate models in a cross-validation fashion. Time domain and complexity features were extracted, important features were identified, and a random forest model was employed to detect events and predict AHI. Four models were trained: one using the respiratory features only, one using a feature from the SpO2 (%) signal only, and two additional models that use both the respiratory features and the SpO2 (%) feature, one of which allows a time lag of 30 seconds between the two signals. Results: Event-based classification resulted in areas under the receiver operating characteristic curves of 0.94, 0.86, 0.82, and areas under the precision-recall curves of 0.48, 0.32, 0.51 for the models using respiration and SpO2, respiration only, and SpO2 only, respectively. Correlation between expert-labelled and predicted AHI was 0.96, 0.78, and 0.93, respectively. Conclusions: A wearable respiratory effort signal with or without SpO2 predicted AHI accurately. Given the large dataset and rigorous testing design, we expect our models to generalize to evaluating respiration in a variety of environments, such as at home and in critical care.
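For readers unfamiliar with the Apnea-Hypopnea Index, it is the number of apneas and hypopneas per hour of sleep. The sketch below shows that arithmetic on a list of detected events. The `RespiratoryEvent` type, the `kind` labels, and the function name are hypothetical and chosen for this example; how the paper's random forest turns its detections into an AHI estimate is not described in the abstract and is not reproduced here.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class RespiratoryEvent:
    """One detected event (hypothetical structure for this sketch)."""
    start_s: float     # onset, in seconds from recording start
    duration_s: float  # event length in seconds
    kind: str          # e.g. "apnea", "hypopnea", or another label


def apnea_hypopnea_index(events: Iterable[RespiratoryEvent],
                         total_sleep_time_s: float) -> float:
    """Apneas plus hypopneas per hour of sleep."""
    if total_sleep_time_s <= 0:
        raise ValueError("total sleep time must be positive")
    # Only apneas and hypopneas count toward the index.
    n_events = sum(1 for e in events if e.kind in {"apnea", "hypopnea"})
    return n_events / (total_sleep_time_s / 3600.0)


# Toy data: three qualifying events and one arousal over two hours of sleep.
events = [
    RespiratoryEvent(600.0, 15.0, "apnea"),
    RespiratoryEvent(1800.0, 22.0, "hypopnea"),
    RespiratoryEvent(4000.0, 12.0, "apnea"),
    RespiratoryEvent(5000.0, 8.0, "arousal"),
]
ahi = apnea_hypopnea_index(events, total_sleep_time_s=2 * 3600.0)
```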

Automated Respiratory Event Detection Using Deep Neural Networks

Jan 12, 2021

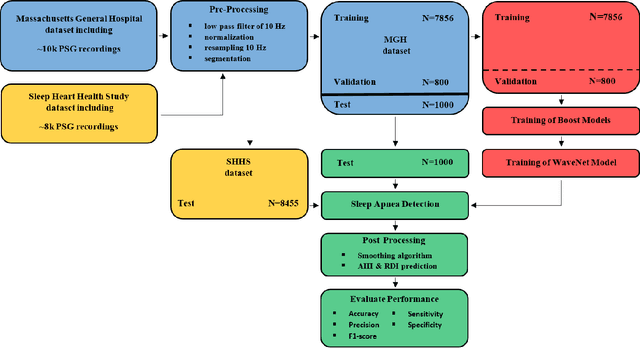

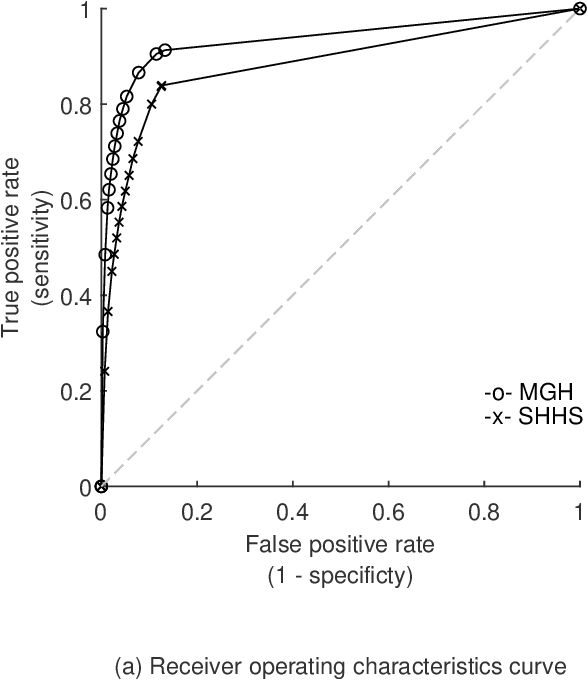

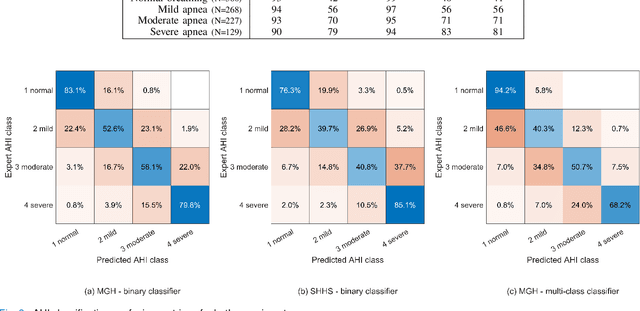

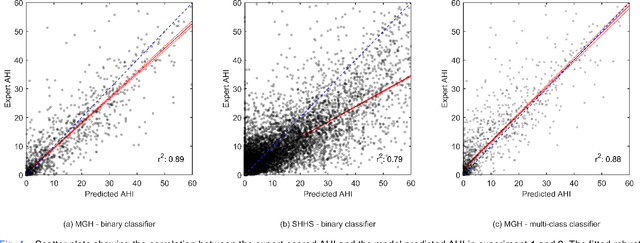

Abstract: The gold standard to assess respiration during sleep is polysomnography, a technique that is burdensome, expensive (both in analysis time and measurement costs), and difficult to repeat. Automation of respiratory analysis can improve test efficiency and enable accessible implementation opportunities worldwide. Using 9,656 polysomnography recordings from the Massachusetts General Hospital (MGH), we trained a neural network (WaveNet) based on a single respiratory effort belt to detect obstructive apnea, central apnea, hypopnea, and respiratory effort-related arousals. Performance evaluation included event-based and recording-based metrics, using an apnea-hypopnea index analysis. The model was further evaluated on a public dataset, the Sleep-Heart-Health-Study-1, containing 8,455 polysomnographic recordings. For binary apnea event detection in the MGH dataset, the neural network obtained an accuracy of 95%, an apnea-hypopnea index $r^2$ of 0.89, and areas under the receiver operating characteristic curve and the precision-recall curve of 0.93 and 0.74, respectively. For the multiclass task, performance varied by event type: 81% of all labeled central apneas were correctly classified, whereas this metric was 46% for obstructive apneas, 29% for respiratory effort-related arousals, and 16% for hypopneas. The majority of false predictions were misclassifications as another type of respiratory event. Our fully automated method can detect respiratory events and assess the apnea-hypopnea index with sufficient accuracy for clinical utilization. Differentiation of event types is more difficult and may reflect in part the complexity of human respiratory output and some degree of arbitrariness in the clinical thresholds and criteria used during manual annotation.
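The per-type percentages in this abstract (the share of labeled events of each type that were correctly classified) correspond to per-class recall. A minimal sketch of that computation follows, assuming one true label and one predicted label per event. The label strings, function name, and toy data are illustrative assumptions and are not drawn from the paper.

```python
from collections import Counter
from typing import Dict, Sequence


def per_class_recall(true_labels: Sequence[str],
                     predicted_labels: Sequence[str]) -> Dict[str, float]:
    """For each true class, the fraction of its events predicted as that class."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label sequences must have the same length")
    totals = Counter(true_labels)
    # Count only the events where prediction and annotation agree.
    hits = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    return {label: hits[label] / totals[label] for label in totals}


# Toy data: both central apneas are found, one of two obstructive apneas is
# mistaken for a hypopnea, and the single hypopnea is mistaken for an
# obstructive apnea.
true_labels = ["central", "central", "obstructive", "obstructive", "hypopnea"]
predicted_labels = ["central", "central", "obstructive", "hypopnea", "obstructive"]
recall = per_class_recall(true_labels, predicted_labels)
```

In the toy data every error is a confusion between two event types rather than a missed event, which is the pattern the abstract reports for most false predictions.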
