Wojciech Fedorko

TRIUMF

Zephyr quantum-assisted hierarchical Calo4pQVAE for particle-calorimeter interactions

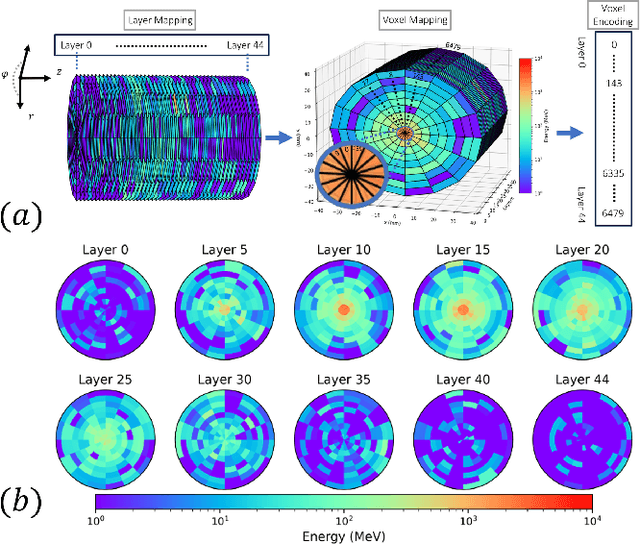

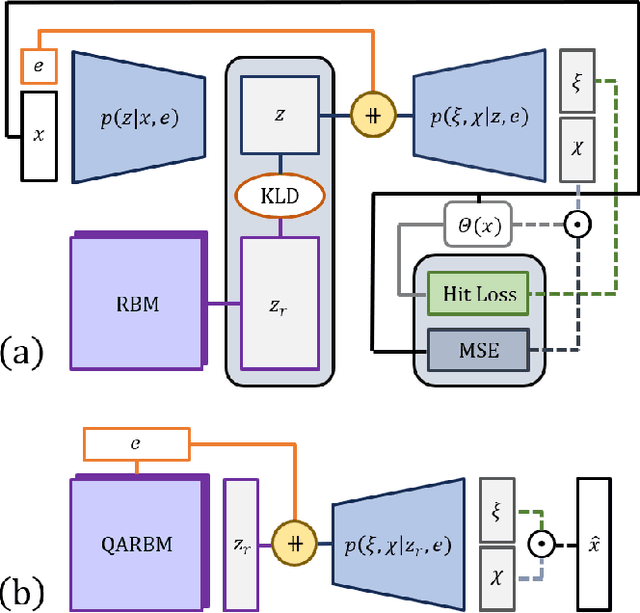

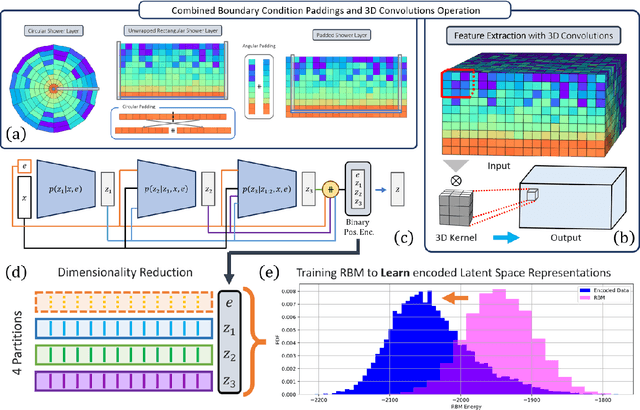

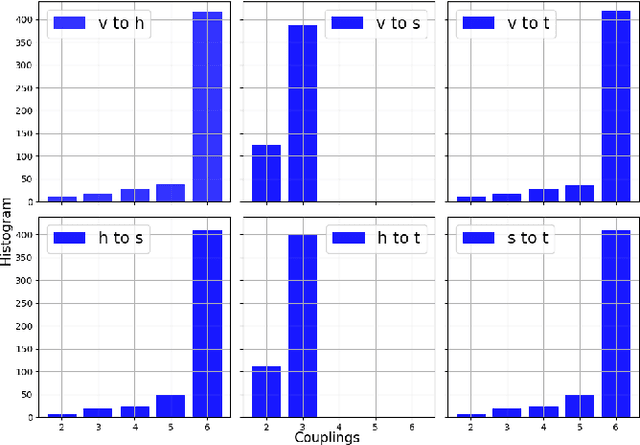

Dec 06, 2024

Abstract: With the approach of the High Luminosity Large Hadron Collider (HL-LHC) era, set to begin particle collisions by the end of this decade, it is evident that the computational demands of traditional collision simulation methods are becoming increasingly unsustainable. Existing approaches, which rely heavily on first-principles Monte Carlo simulations for modeling event showers in calorimeters, are projected to require millions of CPU-years annually, far exceeding current computational capacities. This bottleneck presents an exciting opportunity for advancements in computational physics by integrating deep generative models with quantum simulations. We propose a quantum-assisted hierarchical deep generative surrogate founded on a variational autoencoder (VAE) in combination with an energy-conditioned restricted Boltzmann machine (RBM) embedded in the model's latent space as a prior. By mapping the topology of D-Wave's Zephyr quantum annealer (QA) into the nodes and couplings of a 4-partite RBM, we leverage quantum simulation to accelerate our shower generation times significantly. To evaluate our framework, we use Dataset 2 of the CaloChallenge 2022. Through the integration of classical computation and quantum simulation, this hybrid framework paves the way for utilizing large-scale quantum simulations as priors in deep generative models.
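To make the idea of a 4-partite RBM concrete, here is a minimal classical sketch of block Gibbs sampling over four partitions of binary units. It is not the authors' implementation and does not involve a quantum annealer. The partition sizes, the random initialisation, and all function names (`init_rbm`, `local_field`, `gibbs_sweep`, `sample`) are assumptions made for illustration. The only structural property taken from the abstract is that couplings connect units in different partitions and never units within the same partition.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def init_rbm(sizes, rng, scale=0.1):
    """Hypothetical initialisation: small Gaussian biases and couplings.

    weights[(p, q)] with p < q is a sizes[p] x sizes[q] matrix coupling
    partition p to partition q. There are no intra-partition couplings.
    """
    biases = [[rng.gauss(0.0, scale) for _ in range(n)] for n in sizes]
    weights = {}
    for p in range(len(sizes)):
        for q in range(p + 1, len(sizes)):
            weights[(p, q)] = [
                [rng.gauss(0.0, scale) for _ in range(sizes[q])]
                for _ in range(sizes[p])
            ]
    return biases, weights


def local_field(p, i, state, biases, weights):
    """Bias of unit i in partition p plus input from all other partitions."""
    field = biases[p][i]
    for q in range(len(state)):
        if q == p:
            continue
        if p < q:
            row = weights[(p, q)][i]
            field += sum(w * z for w, z in zip(row, state[q]))
        else:
            w_qp = weights[(q, p)]
            field += sum(w_qp[j][i] * state[q][j] for j in range(len(state[q])))
    return field


def gibbs_sweep(state, biases, weights, rng):
    """Resample each partition in turn, conditioned on the other three.

    Units inside one partition are conditionally independent given the
    rest, so a whole partition can be updated as one block.
    """
    for p in range(len(state)):
        state[p] = [
            1 if rng.random() < sigmoid(local_field(p, i, state, biases, weights)) else 0
            for i in range(len(state[p]))
        ]
    return state


def sample(sizes, n_sweeps, seed=0):
    """Run n_sweeps block Gibbs sweeps from a random binary start state."""
    rng = random.Random(seed)
    biases, weights = init_rbm(sizes, rng)
    state = [[rng.randint(0, 1) for _ in range(n)] for n in sizes]
    for _ in range(n_sweeps):
        gibbs_sweep(state, biases, weights, rng)
    return state


if __name__ == "__main__":
    print(sample([4, 4, 4, 4], n_sweeps=10))
```

In the setting the abstract describes, the quantum annealer would take the place of this classical sampling loop; the sketch only illustrates the partition structure that such a mapping relies on.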

Conditioned quantum-assisted deep generative surrogate for particle-calorimeter interactions

Oct 30, 2024

Abstract: Particle collisions at accelerators such as the Large Hadron Collider (LHC), recorded and analyzed by experiments such as ATLAS and CMS, enable exquisite measurements of the Standard Model and searches for new phenomena. Simulations of collision events at these detectors have played a pivotal role in shaping the design of future experiments and analyzing ongoing ones. However, the quest for accuracy in LHC collisions comes at an imposing computational cost, with projections estimating the need for millions of CPU-years annually during the High Luminosity LHC (HL-LHC) run \cite{collaboration2022atlas}. Simulating a single LHC event with Geant4 currently takes around 1000 CPU seconds, with simulations of the calorimeter subdetectors in particular imposing substantial computational demands \cite{rousseau2023experimental}. To address this challenge, we propose a conditioned quantum-assisted deep generative model. Our model integrates a conditioned variational autoencoder (VAE) on the exterior with a conditioned Restricted Boltzmann Machine (RBM) in the latent space, providing enhanced expressiveness compared to conventional VAEs. The RBM nodes and connections are engineered to enable the use of qubits and couplers on D-Wave's Pegasus-structured Advantage quantum annealer (QA) for sampling. We introduce a novel method for conditioning the quantum-assisted RBM using flux biases. We further propose a novel adaptive mapping to estimate the effective inverse temperature in quantum annealers. The effectiveness of our framework is illustrated using Dataset 2 of the CaloChallenge \cite{calochallenge}.

CaloDVAE : Discrete Variational Autoencoders for Fast Calorimeter Shower Simulation

Oct 14, 2022

Abstract: Calorimeter simulation is the most computationally expensive part of Monte Carlo generation of samples necessary for analysis of experimental data at the Large Hadron Collider (LHC). The High-Luminosity upgrade of the LHC would require an even larger amount of such samples. We present a technique based on Discrete Variational Autoencoders (DVAEs) to simulate particle showers in Electromagnetic Calorimeters. We discuss how this work paves the way towards exploration of quantum annealing processors as sampling devices for generation of simulated High Energy Physics datasets.

Variational Autoencoders for Generative Modelling of Water Cherenkov Detectors

Nov 01, 2019

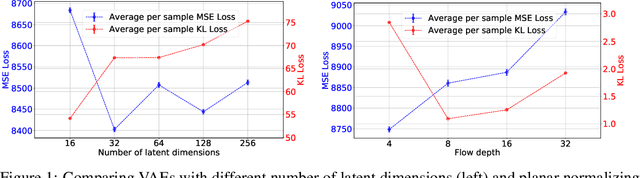

Abstract: Matter-antimatter asymmetry is one of the major unsolved problems in physics that can be probed through precision measurements of charge-parity symmetry violation at current and next-generation neutrino oscillation experiments. In this work, we demonstrate the capability of variational autoencoders and normalizing flows to approximate the generative distribution of simulated data for water Cherenkov detectors commonly used in these experiments. We study the performance of these methods and their applicability for semi-supervised learning and synthetic data generation.

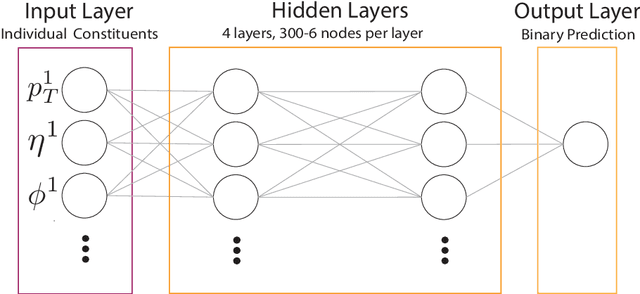

Long Short-Term Memory networks with jet constituents for boosted top tagging at the LHC

Nov 24, 2017

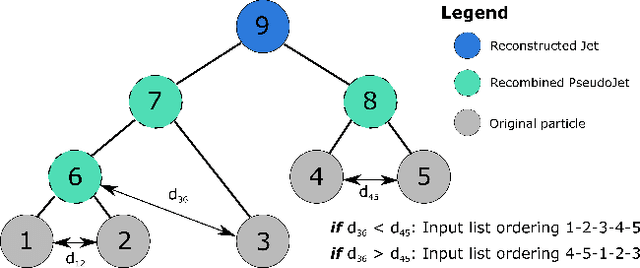

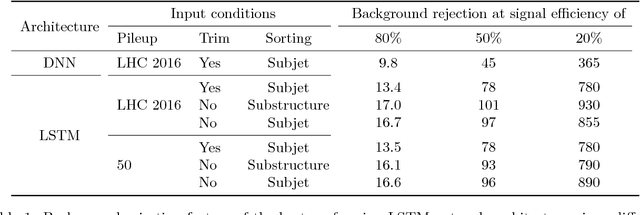

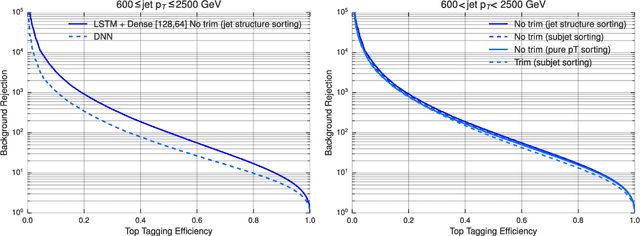

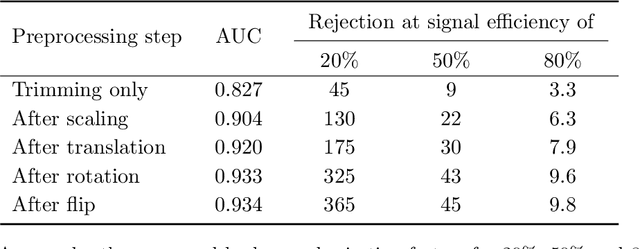

Abstract: Multivariate techniques based on engineered features have found wide adoption in the identification of jets resulting from hadronic top decays at the Large Hadron Collider (LHC). Recent Deep Learning developments in this area include the treatment of the calorimeter activation as an image or supplying a list of jet constituent momenta to a fully connected network. This latter approach lends itself well to the use of Recurrent Neural Networks. In this work, the applicability of architectures incorporating Long Short-Term Memory (LSTM) networks is explored. Several network architectures, methods of ordering of jet constituents, and input pre-processing are studied. The best performing LSTM network achieves a background rejection of 100 for 50% signal efficiency. This represents more than a factor of two improvement over a fully connected Deep Neural Network (DNN) trained on similar types of inputs.
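The figure of merit quoted in this abstract, background rejection at 50% signal efficiency, is conventionally computed as the inverse of the background efficiency at the score threshold that retains half of the signal. The sketch below shows that calculation on toy classifier scores. It assumes higher scores mean "more signal-like". The function name `background_rejection` and the toy numbers are illustrative and are not taken from the paper.

```python
import math


def background_rejection(signal_scores, background_scores, signal_efficiency=0.5):
    """Return 1 / (background efficiency) at a fixed signal efficiency.

    The threshold is the score of the lowest-ranked signal jet that must
    still pass in order to keep the requested fraction of signal. Jets
    pass the selection when their score is >= threshold. With tied
    scores, slightly more signal than requested may pass.
    """
    ranked = sorted(signal_scores, reverse=True)
    n_keep = max(1, math.ceil(signal_efficiency * len(ranked)))
    threshold = ranked[n_keep - 1]

    n_bkg_pass = sum(1 for s in background_scores if s >= threshold)
    if n_bkg_pass == 0:
        # No background survives in this sample: rejection is unbounded.
        return math.inf
    return len(background_scores) / n_bkg_pass


if __name__ == "__main__":
    # Toy scores, purely illustrative.
    signal = [0.9, 0.8, 0.7, 0.6]
    background = [0.85, 0.1, 0.2, 0.3, 0.05, 0.4, 0.15, 0.25, 0.35, 0.45]
    print(background_rejection(signal, background))  # prints 10.0
```

Under this definition, a rejection of 100 means that one background jet in a hundred passes the selection that keeps half of the signal jets.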

Jet Constituents for Deep Neural Network Based Top Quark Tagging

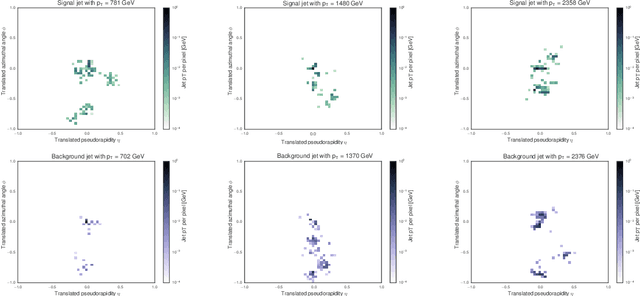

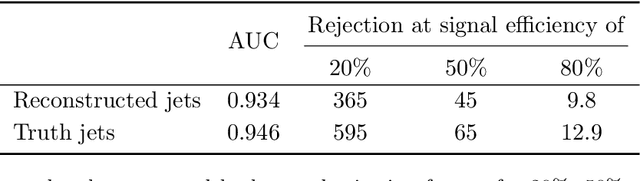

Aug 09, 2017

Abstract: Recent literature on deep neural networks for tagging of highly energetic jets resulting from top quark decays has focused on image-based techniques or multivariate approaches using high-level jet substructure variables. Here, a sequential approach to this task is taken by using an ordered sequence of jet constituents as training inputs. Unlike the majority of previous approaches, this strategy does not result in a loss of information during pixelisation or the calculation of high-level features. The jet classification method achieves a background rejection of 45 at a 50% efficiency operating point for reconstruction-level jets with a transverse momentum range of 600 to 2500 GeV and is insensitive to multiple proton-proton interactions at the levels expected throughout Run 2 of the LHC.
