William Korcari

CaloChallenge 2022: A Community Challenge for Fast Calorimeter Simulation

Oct 28, 2024

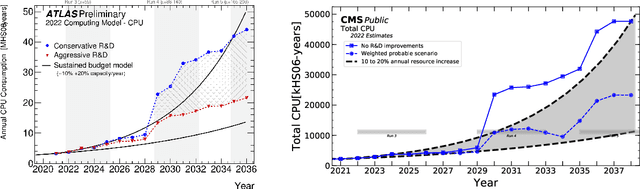

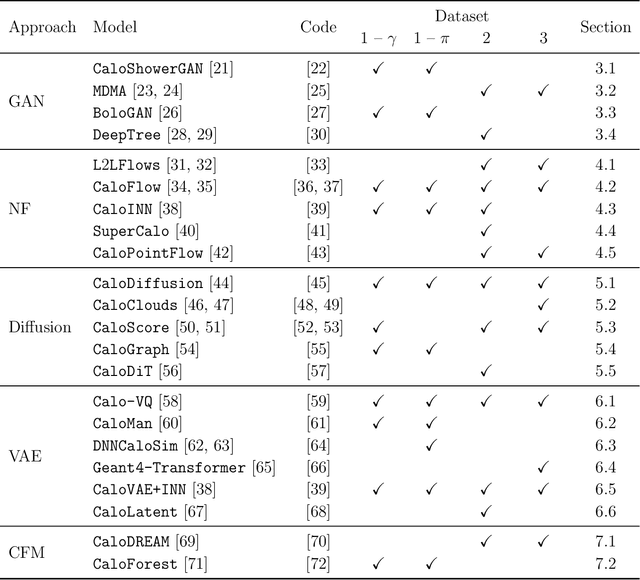

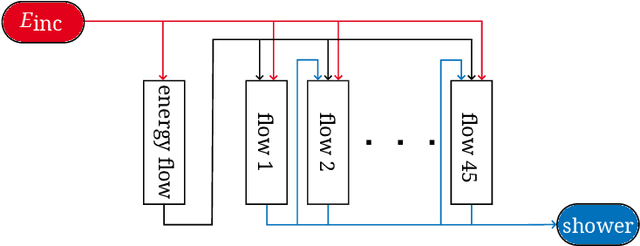

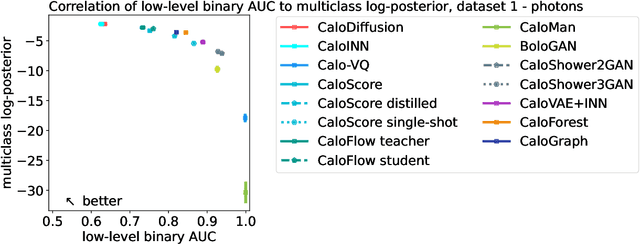

Abstract:We present the results of the "Fast Calorimeter Simulation Challenge 2022" - the CaloChallenge. We study state-of-the-art generative models on four calorimeter shower datasets of increasing dimensionality, ranging from a few hundred voxels to a few tens of thousand voxels. The 31 individual submissions span a wide range of current popular generative architectures, including Variational AutoEncoders (VAEs), Generative Adversarial Networks (GANs), Normalizing Flows, Diffusion models, and models based on Conditional Flow Matching. We compare all submissions in terms of quality of generated calorimeter showers, as well as shower generation time and model size. To assess the quality we use a broad range of different metrics including differences in 1-dimensional histograms of observables, KPD/FPD scores, AUCs of binary classifiers, and the log-posterior of a multiclass classifier. The results of the CaloChallenge provide the most complete and comprehensive survey of cutting-edge approaches to calorimeter fast simulation to date. In addition, our work provides a uniquely detailed perspective on the important problem of how to evaluate generative models. As such, the results presented here should be applicable for other domains that use generative AI and require fast and faithful generation of samples in a large phase space.

CaloClouds II: Ultra-Fast Geometry-Independent Highly-Granular Calorimeter Simulation

Sep 11, 2023Abstract:Fast simulation of the energy depositions in high-granular detectors is needed for future collider experiments with ever increasing luminosities. Generative machine learning (ML) models have been shown to speed up and augment the traditional simulation chain in physics analysis. However, the majority of previous efforts were limited to models relying on fixed, regular detector readout geometries. A major advancement is the recently introduced CaloClouds model, a geometry-independent diffusion model, which generates calorimeter showers as point clouds for the electromagnetic calorimeter of the envisioned International Large Detector (ILD). In this work, we introduce CaloClouds II which features a number of key improvements. This includes continuous time score-based modelling, which allows for a 25 step sampling with comparable fidelity to CaloClouds while yielding a $6\times$ speed-up over Geant4 on a single CPU ($5\times$ over CaloClouds). We further distill the diffusion model into a consistency model allowing for accurate sampling in a single step and resulting in a $46\times$ ($37\times$) speed-up. This constitutes the first application of consistency distillation for the generation of calorimeter showers.

CaloClouds: Fast Geometry-Independent Highly-Granular Calorimeter Simulation

May 08, 2023Abstract:Simulating showers of particles in highly-granular detectors is a key frontier in the application of machine learning to particle physics. Achieving high accuracy and speed with generative machine learning models would enable them to augment traditional simulations and alleviate a major computing constraint. This work achieves a major breakthrough in this task by, for the first time, directly generating a point cloud of a few thousand space points with energy depositions in the detector in 3D space without relying on a fixed-grid structure. This is made possible by two key innovations: i) using recent improvements in generative modeling we apply a diffusion model to generate ii) an initial even higher-resolution point cloud of up to 40,000 so-called Geant4 steps which is subsequently down-sampled to the desired number of up to 6,000 space points. We showcase the performance of this approach using the specific example of simulating photon showers in the planned electromagnetic calorimeter of the International Large Detector (ILD) and achieve overall good modeling of physically relevant distributions.

Shared Data and Algorithms for Deep Learning in Fundamental Physics

Jul 01, 2021

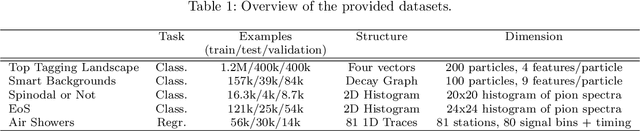

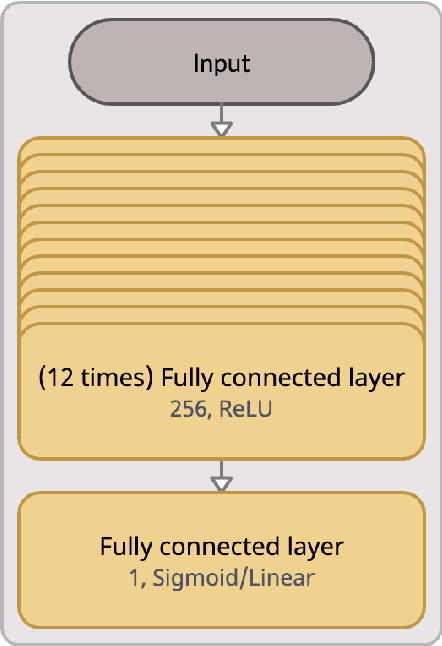

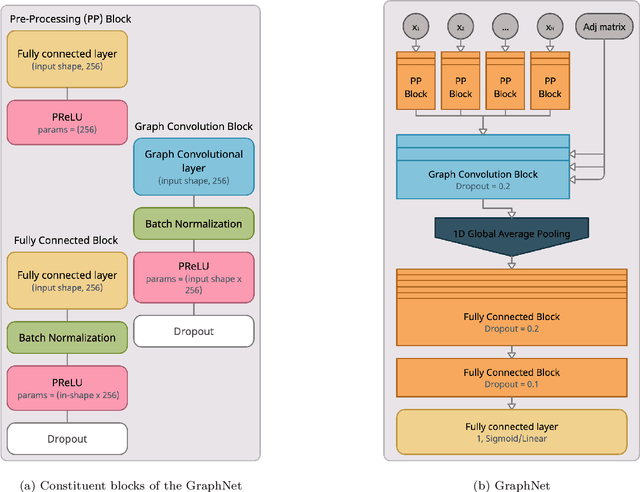

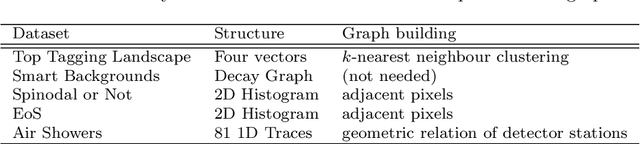

Abstract:We introduce a collection of datasets from fundamental physics research -- including particle physics, astroparticle physics, and hadron- and nuclear physics -- for supervised machine learning studies. These datasets, containing hadronic top quarks, cosmic-ray induced air showers, phase transitions in hadronic matter, and generator-level histories, are made public to simplify future work on cross-disciplinary machine learning and transfer learning in fundamental physics. Based on these data, we present a simple yet flexible graph-based neural network architecture that can easily be applied to a wide range of supervised learning tasks in these domains. We show that our approach reaches performance close to state-of-the-art dedicated methods on all datasets. To simplify adaptation for various problems, we provide easy-to-follow instructions on how graph-based representations of data structures, relevant for fundamental physics, can be constructed and provide code implementations for several of them. Implementations are also provided for our proposed method and all reference algorithms.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge