William Gropp

Infrastructure for Artificial Intelligence, Quantum and High Performance Computing

Dec 16, 2020

Abstract: High Performance Computing (HPC), Artificial Intelligence (AI)/Machine Learning (ML), and Quantum Computing (QC) and communications offer immense opportunities for innovation and impact on society. Researchers in these areas depend on access to computing infrastructure, but these resources are in short supply and are typically siloed in support of their research communities, making it more difficult to pursue convergent and interdisciplinary research. Such research increasingly depends on complex workflows that require different resources for each stage. This paper argues that a more holistic approach to computing infrastructure, one that recognizes both the convergence of some capabilities and the complementary capabilities from new computing approaches, from commercial cloud to Quantum Computing, is needed to support computer science research.

Enabling real-time multi-messenger astrophysics discoveries with deep learning

Nov 26, 2019

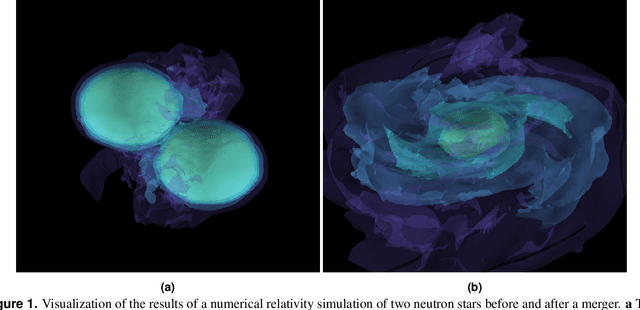

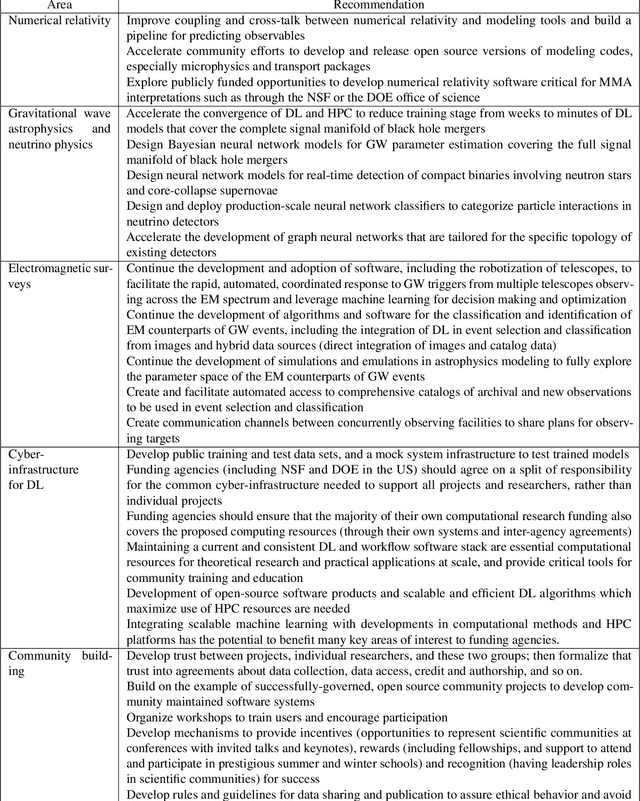

Abstract: Multi-messenger astrophysics is a fast-growing, interdisciplinary field that combines data, which vary in volume and speed of data processing, from many different instruments that probe the Universe using different cosmic messengers: electromagnetic waves, cosmic rays, gravitational waves and neutrinos. In this Expert Recommendation, we review the key challenges of real-time observations of gravitational wave sources and their electromagnetic and astroparticle counterparts, and make a number of recommendations to maximize their potential for scientific discovery. These recommendations refer to the design of scalable and computationally efficient machine learning algorithms; the cyber-infrastructure to numerically simulate astrophysical sources, and to process and interpret multi-messenger astrophysics data; the management of gravitational wave detections to trigger real-time alerts for electromagnetic and astroparticle follow-ups; a vision to harness future developments of machine learning and cyber-infrastructure resources to cope with the big-data requirements; and the need to build a community of experts to realize the goals of multi-messenger astrophysics.

* Invited Expert Recommendation for Nature Reviews Physics. The artwork produced by E. A. Huerta and Shawn Rosofsky for this article was used by Carl Conway to design the cover of the October 2019 issue of Nature Reviews Physics.

Deep Learning for Multi-Messenger Astrophysics: A Gateway for Discovery in the Big Data Era

Feb 01, 2019

Abstract: This report provides an overview of recent work that harnesses the Big Data Revolution and Large Scale Computing to address grand computational challenges in Multi-Messenger Astrophysics, with a particular emphasis on real-time discovery campaigns. Acknowledging the transdisciplinary nature of Multi-Messenger Astrophysics, this document has been prepared by members of the physics, astronomy, computer science, data science, software and cyberinfrastructure communities who attended the NSF-, DOE- and NVIDIA-funded "Deep Learning for Multi-Messenger Astrophysics: Real-time Discovery at Scale" workshop, hosted at the National Center for Supercomputing Applications, October 17-19, 2018. Highlights of this report include unanimous agreement that it is critical to accelerate the development and deployment of novel signal-processing algorithms that use the synergy between artificial intelligence (AI) and high performance computing to maximize the potential for scientific discovery with Multi-Messenger Astrophysics. We discuss key aspects to realize this endeavor, namely (i) the design and exploitation of scalable and computationally efficient AI algorithms for Multi-Messenger Astrophysics; (ii) cyberinfrastructure requirements to numerically simulate astrophysical sources, and to process and interpret Multi-Messenger Astrophysics data; (iii) management of gravitational wave detections and triggers to enable electromagnetic and astro-particle follow-ups; (iv) a vision to harness future developments of machine and deep learning and cyberinfrastructure resources to cope with the scale of discovery in the Big Data Era; and (v) the need to build a community that brings domain experts together with data scientists on equal footing to maximize and accelerate discovery in the nascent field of Multi-Messenger Astrophysics.

Learning with Analytical Models

Oct 28, 2018

Abstract: To understand and predict the performance of parallel and distributed programs, several analytical and machine learning approaches have been proposed, each having its advantages and disadvantages. In this paper, we propose and validate a hybrid approach exploiting both analytical and machine learning models. The hybrid model is able to learn and correct the analytical models to better match the actual performance. Furthermore, the proposed hybrid model improves the prediction accuracy in comparison to pure machine learning techniques while using small training datasets, thus making it suitable for hardware and workload changes.
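To make the idea of a learned correction to an analytical model concrete, the following is a minimal sketch and not the method from the paper. It assumes a hypothetical analytical runtime model (work divided evenly across `p` processes plus a logarithmic communication term) and fits a simple linear correction to a handful of measurements using ordinary least squares. The function names, the cost constants `t_flop` and `t_msg`, and the synthetic measurements are all illustrative assumptions.

```python
import math


def analytical_model(n, p, t_flop=1e-9, t_msg=1e-6):
    """Hypothetical analytical runtime: evenly divided work plus a log-depth communication term."""
    return t_flop * n / p + t_msg * math.log2(p)


def fit_correction(samples):
    """Least-squares fit of measured = c0 + c1 * analytical over (n, p, measured) samples."""
    xs = [analytical_model(n, p) for n, p, _ in samples]
    ys = [measured for _, _, measured in samples]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    c1 = sxy / sxx
    c0 = mean_y - c1 * mean_x
    return c0, c1


def hybrid_predict(n, p, coeffs):
    """Analytical prediction adjusted by the learned linear correction."""
    c0, c1 = coeffs
    return c0 + c1 * analytical_model(n, p)


def synthetic_measurement(n, p):
    """Stand-in for real timings (an assumption): the model is 30% optimistic and misses a fixed overhead."""
    return 1.3 * analytical_model(n, p) + 2e-4


# A deliberately small training set, as in the small-data setting the abstract describes.
configs = [(10**6, 2), (10**7, 4), (10**8, 8), (10**8, 16)]
train = [(n, p, synthetic_measurement(n, p)) for n, p in configs]
coeffs = fit_correction(train)

print("learned correction (c0, c1):", coeffs)
print("hybrid prediction for n=1e9, p=32:", hybrid_predict(10**9, 32, coeffs))
```

Because the analytical model already captures the overall shape of the scaling curve, the learned part in this sketch needs only two parameters, which is why a few measurements suffice. A real system would likely use a richer learner and real timing data.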
