Wenqing Yan

Navigate Biopsy with Ultrasound under Augmented Reality Device: Towards Higher System Performance

Feb 04, 2024

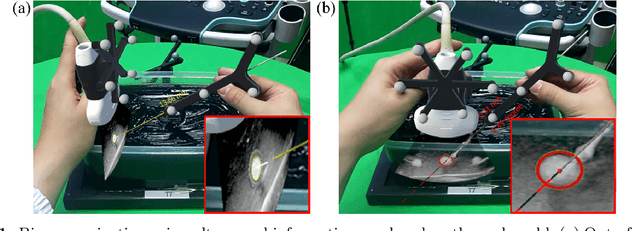

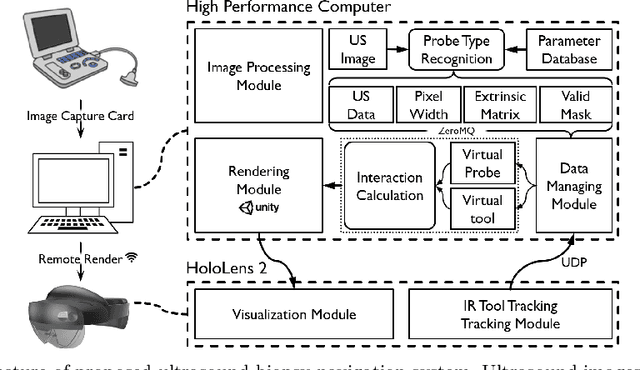

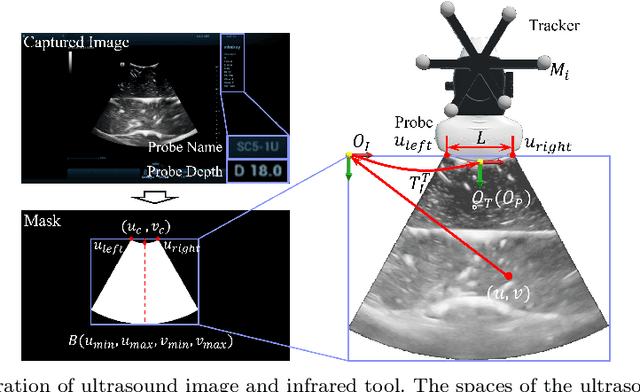

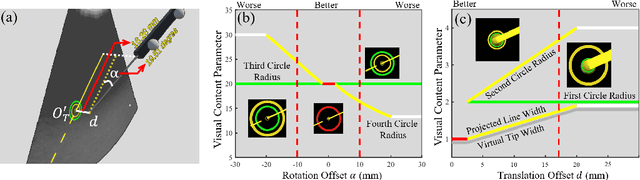

Abstract: Purpose: Biopsies play a crucial role in determining the classification and staging of tumors. Ultrasound is frequently used during the procedure to provide real-time anatomical information. With augmented reality (AR), surgeons can view ultrasound data and spatial navigation information overlaid on real tissue, which facilitates faster and more precise biopsy operations. Methods: We developed an AR biopsy navigation system with low display latency and high accuracy. Ultrasound data is first read by an image capture card and streamed to Unity over a network connection. In Unity, navigation information is rendered and transmitted to the HoloLens 2 device using holographic remoting. Retro-reflective tool tracking is implemented on the HoloLens 2, enabling simultaneous tracking of the ultrasound probe and the biopsy needle. Distinct navigation information is provided during in-plane and out-of-plane puncture. To evaluate the system, we conducted a study with ten participants, comparing puncture accuracy and biopsy time against traditional methods. Results: Our proposed framework enables ultrasound visualization in AR with only $16.22 \pm 11.45$ ms of additional latency. Navigation accuracy reached $1.23 \pm 0.68$ mm in the image plane and $0.95 \pm 0.70$ mm outside the image plane. With our system, the success rate reached $98\%$ in out-of-plane biopsy and $95\%$ in in-plane biopsy. Conclusion: This paper introduces an AR-based ultrasound biopsy navigation system characterized by high navigation accuracy and minimal latency. The system provides distinct visualization content for in-plane and out-of-plane operations according to their different characteristics. The use case study showed that our system can help young surgeons perform biopsies faster and more accurately.

EVD Surgical Guidance with Retro-Reflective Tool Tracking and Spatial Reconstruction using Head-Mounted Augmented Reality Device

Jul 03, 2023

Abstract: Augmented Reality (AR) has been used to facilitate surgical guidance during External Ventricular Drain (EVD) surgery, reducing the risk of misplacement in manual operations. The key challenge in this procedure is accurately estimating the spatial relationship between pre-operative images and the actual patient anatomy in the AR environment. This research proposes a novel framework that uses the Time of Flight (ToF) depth sensors integrated in commercially available AR Head Mounted Devices (HMD) for precise EVD surgical guidance. As previous studies have shown that ToF sensors exhibit depth errors, we first assessed their properties on AR-HMDs. Subsequently, a depth error model and a patient-specific parameter identification method are introduced to obtain accurate surface information. A tracking pipeline combining retro-reflective markers and point clouds is then proposed for accurate head tracking. The head surface is reconstructed from depth data for spatial registration, which avoids rigidly fixing tracking targets to the patient's skull. First, a depth value error of $7.580 \pm 1.488$ mm was revealed on human skin, indicating the significance of depth correction. Our results showed that the proposed depth correction method reduced the error by over $85\%$ on head phantoms made of different materials. Meanwhile, the head surface reconstructed with corrected depth data achieved sub-millimetre accuracy. An experiment on a sheep head revealed a reconstruction error of $0.79$ mm. Furthermore, a user study was conducted to assess performance in simulated EVD surgery, in which five surgeons performed nine k-wire injections on a head phantom with virtual guidance. The results of this study revealed a translational accuracy of $2.09 \pm 0.16$ mm and an orientational accuracy of $2.97 \pm 0.91$ degrees.
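
The abstract reports translational and orientational accuracy but does not say how they were computed. As a minimal sketch, assuming the common convention that translational error is the Euclidean distance between a planned and an achieved point (in mm) and orientational error is the angle between the planned and achieved trajectory directions, the two metrics could be computed as follows. The function names and the input values are hypothetical and are not taken from the paper.

```python
import math


def translational_error(planned_point, actual_point):
    """Euclidean distance between two 3D points (same unit as the inputs, e.g. mm)."""
    return math.dist(planned_point, actual_point)


def orientational_error_deg(planned_dir, actual_dir):
    """Angle in degrees between two 3D direction vectors (need not be unit length)."""
    dot = sum(p * a for p, a in zip(planned_dir, actual_dir))
    norm = math.hypot(*planned_dir) * math.hypot(*actual_dir)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


if __name__ == "__main__":
    # Hypothetical planned vs. achieved trajectory, for illustration only.
    print(translational_error((0.0, 0.0, 0.0), (1.0, 2.0, 2.0)))
    print(orientational_error_deg((0.0, 0.0, 1.0), (0.0, 0.05, 1.0)))
```

Averaging these per-trial values over all insertions would give summary figures in the same mean ± standard deviation form used in the abstract, under the stated assumptions.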
