Wenjian Tang

CLRNet: Cross Layer Refinement Network for Lane Detection

Mar 19, 2022

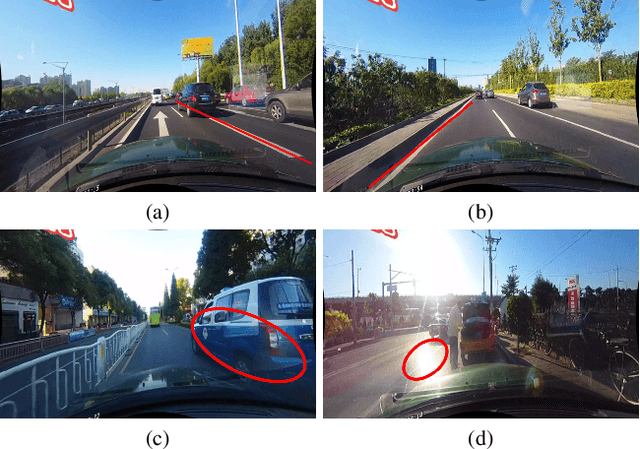

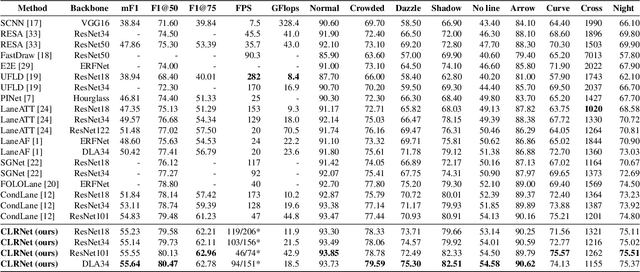

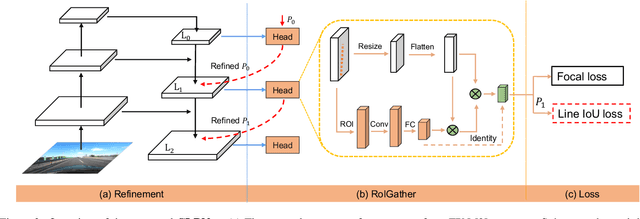

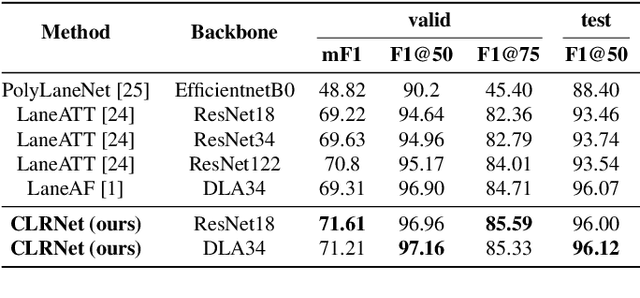

Abstract: Lanes are critical to the visual navigation system of an intelligent vehicle. A lane is by nature a traffic sign with high-level semantics, yet it has a specific local pattern that requires detailed low-level features to localize accurately. Using different feature levels is of great importance for accurate lane detection, but this remains under-explored. In this work, we present the Cross Layer Refinement Network (CLRNet), which aims to fully utilize both high-level and low-level features in lane detection. In particular, it first detects lanes with high-level semantic features and then refines them based on low-level features. In this way, we can exploit more contextual information to detect lanes while leveraging local detailed lane features to improve localization accuracy. We present ROIGather to gather global context, which further enhances the feature representation of lanes. In addition to our novel network design, we introduce the Line IoU loss, which regresses the lane line as a whole unit to improve localization accuracy. Experiments demonstrate that the proposed method greatly outperforms state-of-the-art lane detection approaches.
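The abstract does not spell out how the Line IoU loss is computed, so the following is only a minimal sketch of one plausible reading: each lane is sampled as x-coordinates at a shared set of image rows, every point is widened into a horizontal segment, and the overlap and union of those segments are summed over the whole lane before taking the ratio. The function names, the `radius` parameter, and its default value are hypothetical and not taken from the source.

```python
import numpy as np


def line_iou(pred_x, gt_x, radius=1.0):
    """IoU between two lanes sampled at the same image rows.

    pred_x, gt_x: x-coordinates of the predicted and ground-truth lane,
    one value per row. `radius` (hypothetical) is the half-width used to
    turn each point into a segment [x - radius, x + radius].
    """
    pred_x = np.asarray(pred_x, dtype=float)
    gt_x = np.asarray(gt_x, dtype=float)

    # Per-row overlap of the two segments. It is left negative when the
    # segments do not touch, so distant lanes are still ranked by distance.
    overlap = (np.minimum(pred_x + radius, gt_x + radius)
               - np.maximum(pred_x - radius, gt_x - radius))
    # Per-row extent covered by both segments together.
    union = (np.maximum(pred_x + radius, gt_x + radius)
             - np.minimum(pred_x - radius, gt_x - radius))

    # Sum over rows first, so the lane is scored as a whole unit
    # rather than point by point.
    return overlap.sum() / union.sum()


def line_iou_loss(pred_x, gt_x, radius=1.0):
    """Loss that is 0 for a perfect match and grows as the lanes separate."""
    return 1.0 - line_iou(pred_x, gt_x, radius)
```

Under these assumptions, identical lanes give a loss of 0, and the loss keeps increasing as the prediction drifts away from the ground truth, even after the segments stop overlapping.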

RESA: Recurrent Feature-Shift Aggregator for Lane Detection

Aug 31, 2020

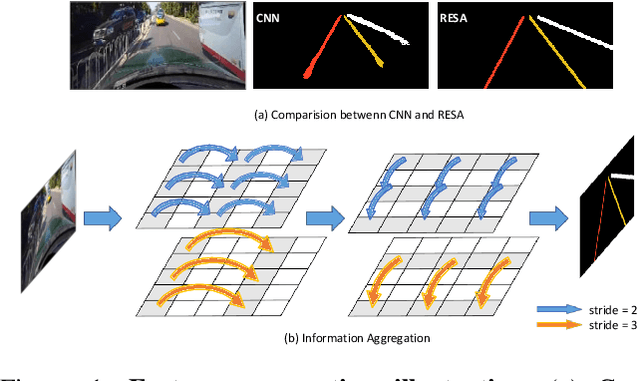

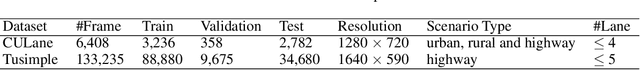

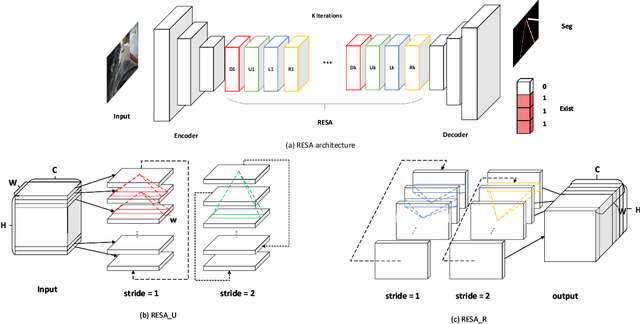

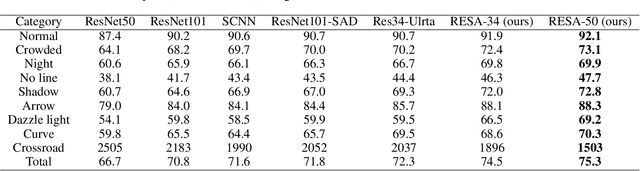

Abstract: Lane detection is one of the most important tasks in self-driving. Due to various complex scenarios (e.g., severe occlusion and ambiguous lanes) and the sparse supervisory signals inherent in lane annotations, lane detection remains challenging. As a result, it is difficult for an ordinary convolutional neural network (CNN) trained on general scenes to capture subtle lane features from raw images. In this paper, we present a novel module named REcurrent Feature-Shift Aggregator (RESA) to enrich lane features after preliminary feature extraction with an ordinary CNN. RESA takes advantage of the strong shape priors of lanes and captures spatial relationships of pixels across rows and columns. It shifts the sliced feature map recurrently in the vertical and horizontal directions, enabling each pixel to gather global information. With the help of slice-by-slice information propagation, RESA can infer lanes accurately in challenging scenarios with weak appearance cues. Moreover, we propose a Bilateral Up-Sampling Decoder, which combines coarse-grained and fine-detailed features in the up-sampling stage and can meticulously recover a low-resolution feature map into a pixel-wise prediction. Our method achieves state-of-the-art results on two popular lane detection benchmarks (CULane and Tusimple). The code will be made publicly available.
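The recurrent shifting described in the abstract can be illustrated with a small NumPy sketch. It is not the authors' implementation: a plain channel-mixing matrix stands in for whatever learned operator the module applies to each slice, the halving stride schedule is an assumption, and the names `shift_aggregate`, `num_iters`, and `mix` are hypothetical.

```python
import numpy as np


def shift_aggregate(features, axis, num_iters, mix=None):
    """Recurrently add a shifted copy of the feature map to itself.

    features:  array of shape (C, H, W).
    axis:      1 to pass information between rows, 2 between columns.
    num_iters: number of shift steps (hypothetical parameter).
    mix:       (C, C) channel-mixing matrix standing in for a learned
               operator; defaults to the identity.
    """
    x = np.asarray(features, dtype=float).copy()
    channels = x.shape[0]
    length = x.shape[axis]
    if mix is None:
        mix = np.eye(channels)

    for k in range(num_iters):
        # Assumed schedule: halve the stride each step (L/2, L/4, ..., 1).
        stride = max(length // 2 ** (k + 1), 1)
        # Slice i receives the slice `stride` positions further along,
        # wrapping around at the border.
        shifted = np.roll(x, -stride, axis=axis)
        # Mix channels, apply ReLU, and add the message to every slice at once.
        message = np.einsum("oc,chw->ohw", mix, shifted)
        x = x + np.maximum(message, 0.0)
    return x
```

With a feature map of height 8 and three iterations along `axis=1`, the strides are 4, 2, and 1, so a signal that starts in a single row reaches every row. Repeating the call with `axis=2` would spread information across columns in the same way.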
