Weisi Guo

Dialogue Telemetry: Turn-Level Instrumentation for Autonomous Information Gathering

Jan 14, 2026

Abstract:Autonomous systems conducting schema-grounded information-gathering dialogues face an instrumentation gap, lacking turn-level observables for monitoring acquisition efficiency and detecting when questioning becomes unproductive. We introduce Dialogue Telemetry (DT), a measurement framework that produces two model-agnostic signals after each question-answer exchange: (i) a Progress Estimator (PE) quantifying residual information potential per category (with a bits-based variant), and (ii) a Stalling Index (SI) detecting an observable failure signature characterized by repeated category probing with semantically similar, low-marginal-gain responses. SI flags this pattern without requiring causal diagnosis, supporting monitoring in settings where attributing degradation to specific causes may be impractical. We validate DT in controlled search-and-rescue (SAR)-inspired interviews using large language model (LLM)-based simulations, distinguishing efficient from stalled dialogue traces and illustrating downstream utility by integrating DT signals into a reinforcement learning (RL) policy. Across these settings, DT provides interpretable turn-level instrumentation that improves policy performance when stalling carries operational costs.
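The two signals described in this abstract can be illustrated with a toy sketch. This is not the paper's formulation: the function names, the turn fields (`category`, `answer`, `gain`), the bag-of-words similarity, and the product used to combine the three ingredients are all assumptions made for illustration. Shannon entropy stands in for a bits-based residual measure.

```python
from collections import Counter
from math import log2, sqrt


def residual_bits(belief):
    """Shannon entropy (in bits) of a belief over one category's values.

    Assumption: used here as a stand-in for a bits-based progress measure.
    """
    return -sum(p * log2(p) for p in belief if p > 0)


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[w] * b[w] for w in a)
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def stalling_index(turns, window=3):
    """Toy stalling score in [0, 1] over the last `window` turns.

    Each turn is a dict with hypothetical keys:
      category: schema category the question probed
      answer:   response text
      gain:     marginal information gain in [0, 1] (assumed given)
    """
    recent = turns[-window:]
    if len(recent) < 2:
        return 0.0
    # Repetition: share of recent turns probing the latest category.
    last_cat = recent[-1]["category"]
    repetition = sum(t["category"] == last_cat for t in recent) / len(recent)
    # Similarity: mean cosine similarity between consecutive answers.
    bags = [Counter(t["answer"].lower().split()) for t in recent]
    sims = [cosine(x, y) for x, y in zip(bags, bags[1:])]
    similarity = sum(sims) / len(sims)
    # Low gain: one minus the mean marginal gain.
    low_gain = 1.0 - sum(t["gain"] for t in recent) / len(recent)
    # Assumed combination: all three must be high for the score to be high.
    return repetition * similarity * low_gain
```

In this sketch, a trace that keeps probing one category and receives near-identical, uninformative answers scores close to 1, while a trace that moves across categories with informative answers scores close to 0.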

LUCIFER: Language Understanding and Context-Infused Framework for Exploration and Behavior Refinement

Jun 09, 2025

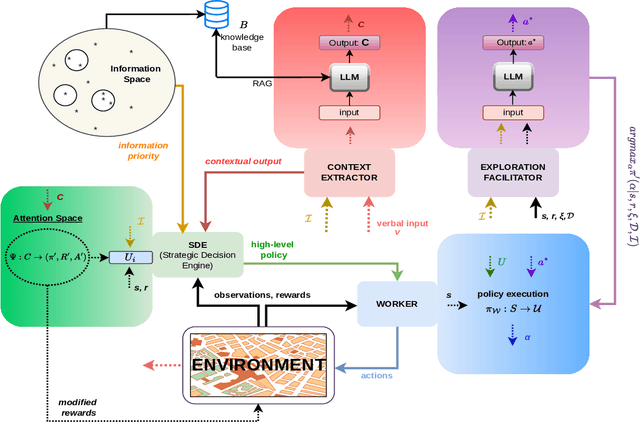

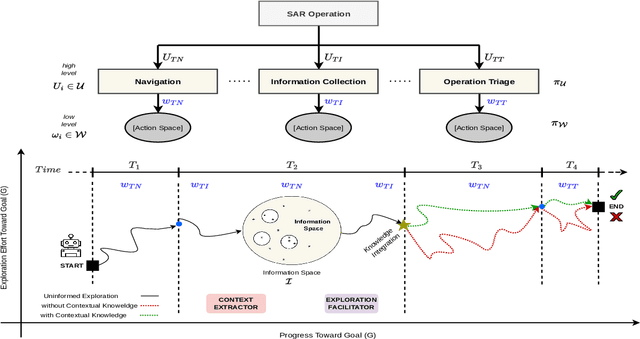

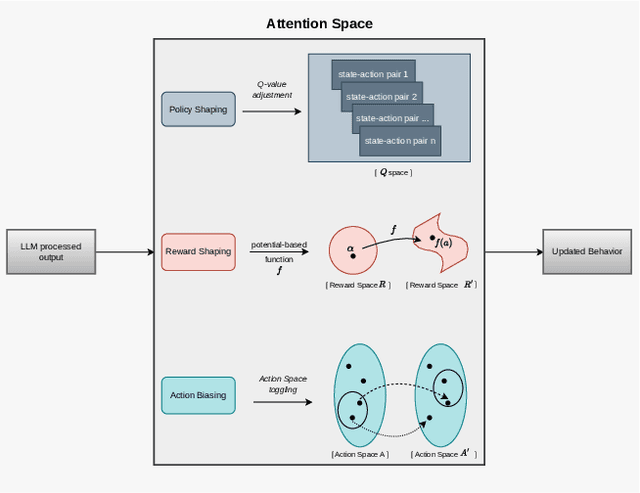

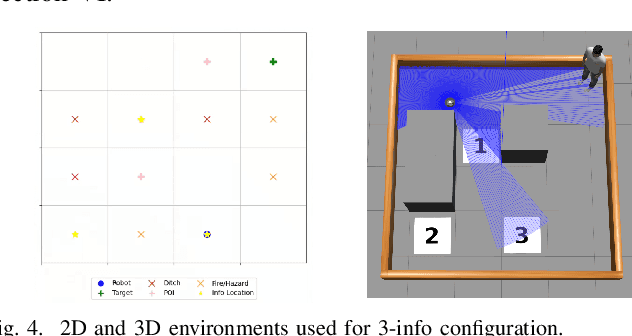

Abstract:In dynamic environments, the rapid obsolescence of pre-existing environmental knowledge creates a gap between an agent's internal model and the evolving reality of its operational context. This disparity between prior and updated environmental valuations fundamentally limits the effectiveness of autonomous decision-making. To bridge this gap, the contextual bias of human domain stakeholders, who naturally accumulate insights through direct, real-time observation, becomes indispensable. However, translating their nuanced, context-rich input into actionable intelligence for autonomous systems remains an open challenge. To address this, we propose LUCIFER (Language Understanding and Context-Infused Framework for Exploration and Behavior Refinement), a domain-agnostic framework that integrates a hierarchical decision-making architecture with reinforcement learning (RL) and large language models (LLMs) into a unified system. This architecture mirrors how humans decompose complex tasks, enabling a high-level planner to coordinate specialised sub-agents, each focused on distinct objectives and temporally interdependent actions. Unlike traditional applications where LLMs are limited to a single role, LUCIFER integrates them in two synergistic roles: as context extractors, structuring verbal stakeholder input into domain-aware representations that influence decision-making through an attention space mechanism aligning LLM-derived insights with the agent's learning process, and as zero-shot exploration facilitators guiding the agent's action selection process during exploration. We benchmark various LLMs in both roles and demonstrate that LUCIFER improves exploration efficiency and decision quality, outperforming flat, goal-conditioned policies. Our findings show the potential of context-driven decision-making, where autonomous systems leverage human contextual knowledge for operational success.

Learning What Matters Now: A Dual-Critic Context-Aware RL Framework for Priority-Driven Information Gain

Jun 07, 2025

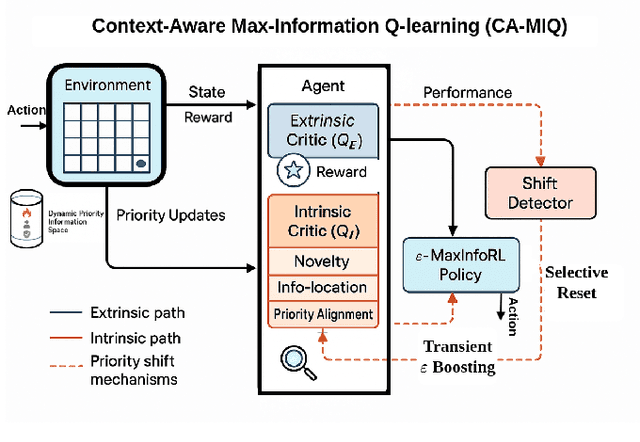

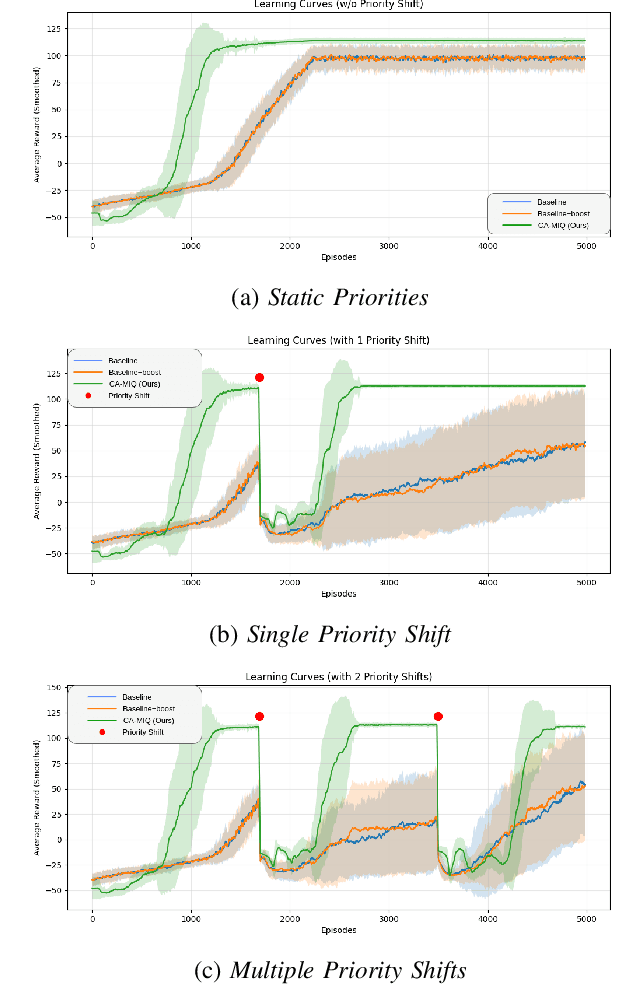

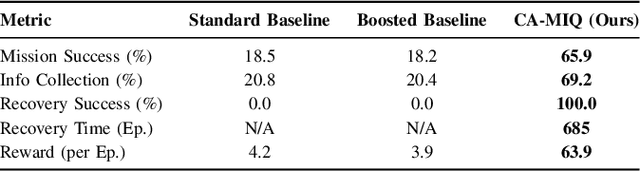

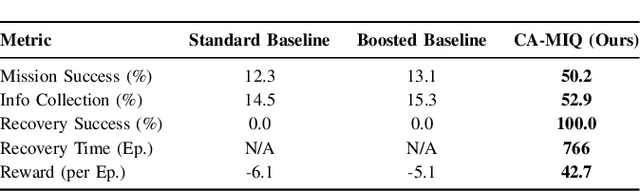

Abstract:Autonomous systems operating in high-stakes search-and-rescue (SAR) missions must continuously gather mission-critical information while flexibly adapting to shifting operational priorities. We propose CA-MIQ (Context-Aware Max-Information Q-learning), a lightweight dual-critic reinforcement learning (RL) framework that dynamically adjusts its exploration strategy whenever mission priorities change. CA-MIQ pairs a standard extrinsic critic for task reward with an intrinsic critic that fuses state-novelty, information-location awareness, and real-time priority alignment. A built-in shift detector triggers transient exploration boosts and selective critic resets, allowing the agent to re-focus after a priority revision. In a simulated SAR grid-world, where experiments specifically test adaptation to changes in the priority order of information types the agent is expected to focus on, CA-MIQ achieves nearly four times higher mission-success rates than baselines after a single priority shift and more than three times better performance in multiple-shift scenarios, achieving 100% recovery while baseline methods fail to adapt. These results highlight CA-MIQ's effectiveness in any discrete environment with piecewise-stationary information-value distributions.
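The dual-critic idea in this abstract can be sketched as a tabular agent. This is a minimal illustration under stated assumptions, not the CA-MIQ algorithm itself: the class name `DualCriticAgent`, the weighting `beta`, the epsilon-boost schedule, and the choice to reset only the intrinsic critic on a shift are all hypothetical, and the intrinsic reward is assumed to be supplied by the caller.

```python
import random
from collections import defaultdict


class DualCriticAgent:
    """Toy tabular agent with separate extrinsic and intrinsic Q-tables."""

    def __init__(self, actions, alpha=0.1, gamma=0.95, beta=0.5,
                 eps=0.05, eps_boost=0.5, eps_decay=0.99):
        self.actions = list(actions)
        self.alpha, self.gamma, self.beta = alpha, gamma, beta
        self.eps_base, self.eps = eps, eps
        self.eps_boost, self.eps_decay = eps_boost, eps_decay
        self.q_ext = defaultdict(float)  # critic for task reward
        self.q_int = defaultdict(float)  # critic for intrinsic reward
        self.priorities = None

    def observe_priorities(self, priorities):
        """Shift detector: react when the priority order changes."""
        priorities = tuple(priorities)
        shifted = self.priorities is not None and priorities != self.priorities
        if shifted:
            self.eps = self.eps_boost  # transient exploration boost
            self.q_int.clear()         # assumed selective reset: intrinsic critic only
        self.priorities = priorities
        return shifted

    def value(self, state, action):
        """Combined value used for greedy action selection."""
        return self.q_ext[(state, action)] + self.beta * self.q_int[(state, action)]

    def act(self, state):
        """Epsilon-greedy choice over the combined value."""
        if random.random() < self.eps:
            return random.choice(self.actions)
        return max(self.actions, key=lambda a: self.value(state, a))

    def update(self, state, action, r_ext, r_int, next_state):
        """One Q-learning step per critic, then decay epsilon toward its base."""
        for q, r in ((self.q_ext, r_ext), (self.q_int, r_int)):
            best_next = max(q[(next_state, b)] for b in self.actions)
            q[(state, action)] += self.alpha * (r + self.gamma * best_next - q[(state, action)])
        self.eps = max(self.eps_base, self.eps * self.eps_decay)
```

Keeping the two critics in separate tables is what makes a selective reset possible in this sketch: task knowledge in the extrinsic table survives a priority change while the priority-dependent intrinsic estimates are relearned.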

Parameter Estimation using Reinforcement Learning Causal Curiosity: Limits and Challenges

May 13, 2025

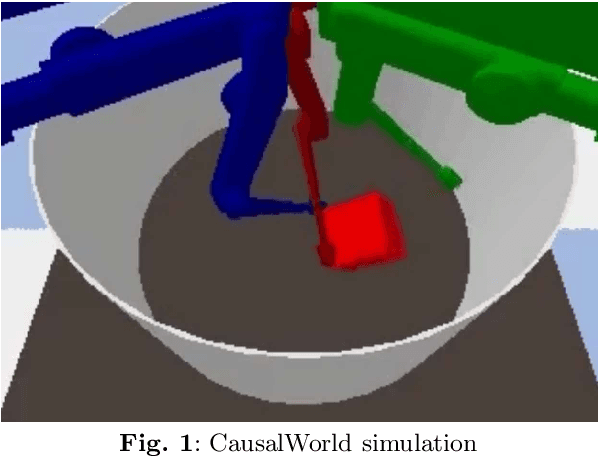

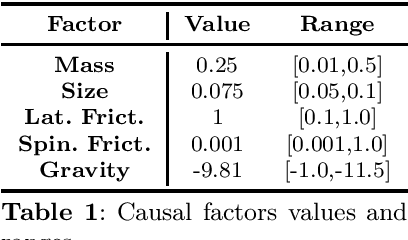

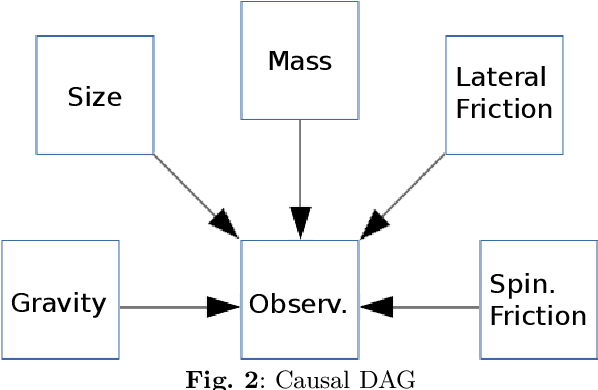

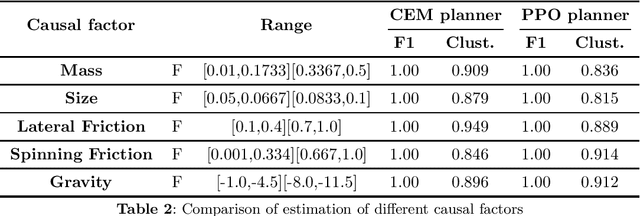

Abstract:Causal understanding is important in many disciplines of science and engineering, where we seek to understand how different factors in a system causally affect an experiment or situation, paving a pathway towards creating effective models or optimising existing ones. Example use cases are autonomous exploration and modelling of unknown environments, or assessing key variables when optimising large complex systems. In this paper, we analyse a Reinforcement Learning approach called Causal Curiosity, which aims to estimate as accurately and efficiently as possible, without directly measuring them, the values of factors that causally determine the dynamics of a system. Whilst the idea presents a pathway forward, measurement accuracy is the foundation of the methodology's effectiveness. Focusing on the robotic manipulator setting used by the current Causal Curiosity approach, we present for the first time a measurement accuracy analysis of the future potential and current limitations of this technique, together with an analysis of its sensitivity and its capability to disentangle confounding factors, which is crucial for causal analysis. As a result of our work, we put forward proposals for an improved and efficient design of Causal Curiosity methods to be applied to real-world complex scenarios.

Remote Rowhammer Attack using Adversarial Observations on Federated Learning Clients

May 09, 2025

Abstract:Federated Learning (FL) has the potential for simultaneous global learning amongst a large number of parallel agents, enabling emerging AI such as LLMs to be trained across demographically diverse data. Central to this being efficient is the ability for FL to perform sparse gradient updates and remote direct memory access at the central server. Most of the research in FL security focuses on protecting data privacy at the edge client or in the communication channels between the client and server. Client-facing attacks on the server are less well investigated, as the assumption is that a large collective of clients offers resilience. Here, we show that by attacking certain clients in a way that leads to high-frequency repetitive memory updates in the server, we can remotely initiate a rowhammer attack on the server memory. For the first time, we do not need backdoor access to the server, and a reinforcement learning (RL) attacker can learn how to maximize server repetitive memory updates by manipulating the client's sensor observation. The consequence of the remote rowhammer attack is that we are able to achieve bit flips, which can corrupt the server memory. We demonstrate the feasibility of our attack using a large-scale FL automatic speech recognition (ASR) system with sparse updates: our adversarial attacking agent can achieve around 70% repeated update rate (RUR) in the targeted server model, effectively inducing bit flips on server DRAM. The security implications are that the attack can cause disruptions to learning or may inadvertently cause elevated privilege. This paves the way for further research on practical mitigation strategies in FL and hardware design.

Building AI Service Repositories for On-Demand Service Orchestration in 6G AI-RAN

Apr 13, 2025

Abstract:Efficient orchestration of AI services in 6G AI-RAN requires well-structured, ready-to-deploy AI service repositories combined with orchestration methods adaptive to diverse runtime contexts across radio access, edge, and cloud layers. Current literature lacks comprehensive frameworks for constructing such repositories and generally overlooks key practical orchestration factors. This paper systematically identifies and categorizes critical attributes influencing AI service orchestration in 6G networks and introduces an open-source, LLM-assisted toolchain that automates service packaging, deployment, and runtime profiling. We validate the proposed toolchain through the Cranfield AI Service repository case study, demonstrating significant automation benefits, reduced manual coding efforts, and the necessity of infrastructure-specific profiling, paving the way for more practical orchestration frameworks.

Contextualized Autonomous Drone Navigation using LLMs Deployed in Edge-Cloud Computing

Apr 01, 2025

Abstract:Autonomous navigation is usually trained offline in diverse scenarios and fine-tuned online subject to real-world experiences. However, the real world is dynamic and changeable, and many environmental encounters and effects are not accounted for in real time because they are difficult to describe within offline training data, or even in online scenarios. Nevertheless, a human operator can describe these dynamic environmental encounters through natural language, adding semantic context. This research deploys Large Language Models (LLMs) to perform real-time contextual code adjustment for autonomous navigation. What has not been evaluated in the literature is which LLMs are appropriate and where these computationally heavy algorithms should sit in computation-communication edge-cloud computing architectures. In this paper, we evaluate how different LLMs can dynamically adjust the navigation map parameters (e.g., contour map shaping) and also derive navigation task instruction sets. We then evaluate which LLMs are most suitable and where they should sit in the edge-cloud of future 6G telecommunication architectures.

RAG-based User Profiling for Precision Planning in Mixed-precision Over-the-Air Federated Learning

Mar 19, 2025

Abstract:Mixed-precision computing, a widely applied technique in AI, offers a larger trade-off space between accuracy and efficiency. The recently proposed Mixed-Precision Over-the-Air Federated Learning (MP-OTA-FL) enables clients to operate at appropriate precision levels based on their heterogeneous hardware, taking advantage of the larger trade-off space while covering the quantization overheads in the mixed-precision modulation scheme for the OTA aggregation process. A key to further exploring the potential of the MP-OTA-FL framework is the optimization of client precision levels. The choice of precision level hinges on multifaceted factors including hardware capability, potential client contribution, and user satisfaction, some of which can be difficult to define or quantify. In this paper, we propose a RAG-based User Profiling for precision planning framework that integrates retrieval-augmented LLMs and dynamic client profiling to optimize satisfaction and contributions. This includes a hybrid interface for gathering device/user insights and a RAG database storing historical quantization decisions with feedback. Experiments show that our method boosts satisfaction, energy savings, and global model accuracy in MP-OTA-FL systems.

End-to-End Edge AI Service Provisioning Framework in 6G ORAN

Mar 15, 2025

Abstract:With the advent of 6G, Open Radio Access Network (O-RAN) architectures are evolving to support intelligent, adaptive, and automated network orchestration. This paper proposes a novel Edge AI and Network Service Orchestration framework that leverages Large Language Model (LLM) agents deployed as O-RAN rApps. The proposed LLM-agent-powered system enables interactive and intuitive orchestration by translating the user's use case description into deployable AI services and corresponding network configurations. The LLM agent automates multiple tasks, including AI model selection from repositories (e.g., Hugging Face), service deployment, network adaptation, and real-time monitoring via xApps. We implement a prototype using open-source O-RAN projects (OpenAirInterface and FlexRIC) to demonstrate the feasibility and functionality of our framework. Our demonstration showcases the end-to-end flow of AI service orchestration, from user interaction to network adaptation, ensuring Quality of Service (QoS) compliance. This work highlights the potential of integrating LLM-driven automation into 6G O-RAN ecosystems, paving the way for more accessible and efficient edge AI ecosystems.

Modelling Networked Dynamical System by Temporal Graph Neural ODE with Irregularly Partial Observed Time-series Data

Nov 29, 2024

Abstract:Modeling the evolution of a system with time-series data is a challenging and critical task in a wide range of fields, especially when the time-series data is irregularly sampled and partially observable. Some methods have been proposed to estimate the hidden dynamics between intervals, such as Neural ODE or an exponential decay dynamic function, and combine them with an RNN to estimate the evolution. However, it is difficult for these methods to capture the spatial and temporal dependencies existing within graph-structured time-series data and to take full advantage of the available relational information to impute missing data and predict future states. Besides, traditional RNN-based methods use a shared RNN cell to update the hidden state, which does not capture the impact of varying intervals and missing state information on the reliability of the hidden-state estimate. To solve this problem, we propose a method embedding Graph Neural ODE with a reliability and time-aware mechanism, which can capture the spatial and temporal dependencies in irregularly sampled and partially observable time-series data to reconstruct the dynamics. In addition, a loss function is designed that considers the reliability of the augmented data produced by the proposed method to make further predictions. The proposed method has been validated in experiments on different networked dynamical systems.
