Weijun Zhang

dots.llm1 Technical Report

Jun 06, 2025

Abstract: Mixture of Experts (MoE) models have emerged as a promising paradigm for scaling language models efficiently by activating only a subset of parameters for each input token. In this report, we present dots.llm1, a large-scale MoE model that activates 14B parameters out of a total of 142B parameters, delivering performance on par with state-of-the-art models while reducing training and inference costs. Leveraging our meticulously crafted and efficient data processing pipeline, dots.llm1 achieves performance comparable to Qwen2.5-72B after pretraining on 11.2T high-quality tokens and post-training to fully unlock its capabilities. Notably, no synthetic data is used during pretraining. To foster further research, we open-source intermediate training checkpoints at every one trillion tokens, providing valuable insights into the learning dynamics of large language models.

CFTrack: Enhancing Lightweight Visual Tracking through Contrastive Learning and Feature Matching

Feb 27, 2025

Abstract: Achieving both efficiency and strong discriminative ability in lightweight visual tracking is a challenge, especially on mobile and edge devices with limited computational resources. Conventional lightweight trackers often struggle with robustness under occlusion and interference, while deep trackers, when compressed to meet resource constraints, suffer from performance degradation. To address these issues, we introduce CFTrack, a lightweight tracker that integrates contrastive learning and feature matching to enhance discriminative feature representations. CFTrack dynamically assesses target similarity during prediction through a novel contrastive feature matching module optimized with an adaptive contrastive loss, thereby improving tracking accuracy. Extensive experiments on LaSOT, OTB100, and UAV123 show that CFTrack surpasses many state-of-the-art lightweight trackers, operating at 136 frames per second on the NVIDIA Jetson NX platform. Results on the HOOT dataset further demonstrate CFTrack's strong discriminative ability under heavy occlusion.

Multi-query Vehicle Re-identification: Viewpoint-conditioned Network, Unified Dataset and New Metric

May 25, 2023

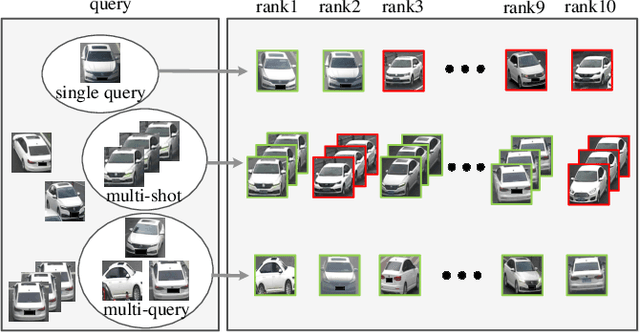

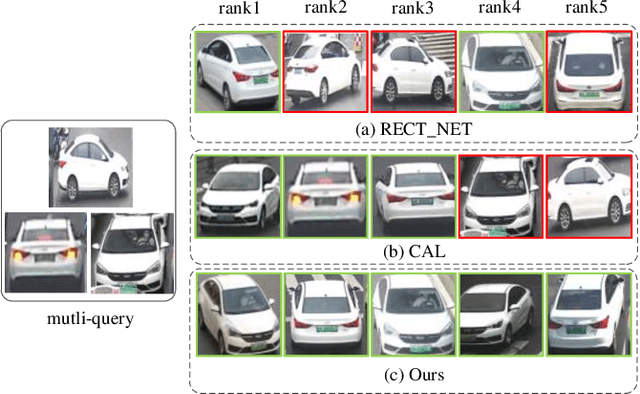

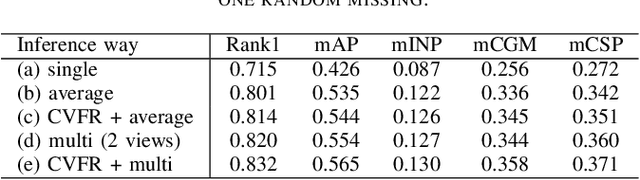

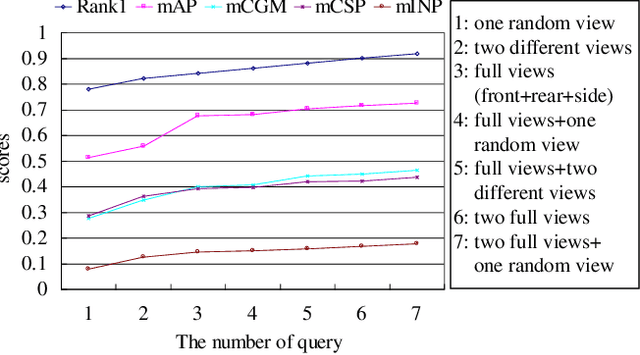

Abstract: Existing vehicle re-identification (Re-ID) methods mainly rely on a single query, which carries limited information for vehicle representation and thus significantly hinders the performance of vehicle Re-ID in complicated surveillance networks. In this paper, we propose a more realistic and easily accessible task, called multi-query vehicle Re-ID, which leverages multiple queries to overcome the viewpoint limitation of a single query. Based on this task, we make three major contributions. First, we design a novel viewpoint-conditioned network (VCNet), which adaptively combines the complementary information from different vehicle viewpoints, for multi-query vehicle Re-ID. Moreover, to deal with the problem of missing vehicle viewpoints, we propose a cross-view feature recovery module, which recovers the features of the missing viewpoints by learning the correlation between the features of available and missing viewpoints. Second, we create a unified benchmark dataset, captured by 6142 cameras from a real-life transportation surveillance system, with comprehensive viewpoints and a large number of crossed scenes for each vehicle, for multi-query vehicle Re-ID evaluation. Finally, we design a new evaluation metric, called mean cross-scene precision (mCSP), which measures the ability of cross-scene recognition by suppressing the positive samples with similar viewpoints from the same camera. Comprehensive experiments validate the superiority of the proposed method over other methods, as well as the effectiveness of the designed metric in the evaluation of multi-query vehicle Re-ID.
