Volkmar Sterzing

Learning Control Policies for Variable Objectives from Offline Data

Aug 11, 2023

Abstract: Offline reinforcement learning provides a viable approach to obtaining advanced control strategies for dynamical systems, in particular when direct interaction with the environment is not available. In this paper, we introduce a conceptual extension for model-based policy search methods, called variable objective policy (VOP). With this approach, policies are trained to generalize efficiently over a variety of objectives, which parameterize the reward function. We demonstrate that by altering the objectives passed as input to the policy, users gain the freedom to adjust its behavior or re-balance optimization targets at runtime, without the need to collect additional observation batches or to re-train.

A Benchmark Environment Motivated by Industrial Control Problems

Feb 06, 2018

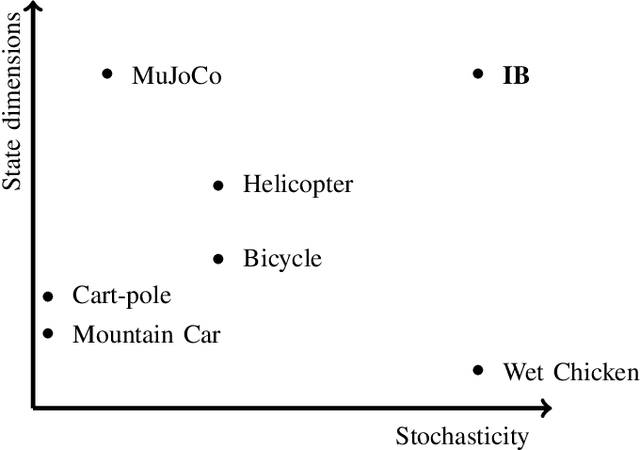

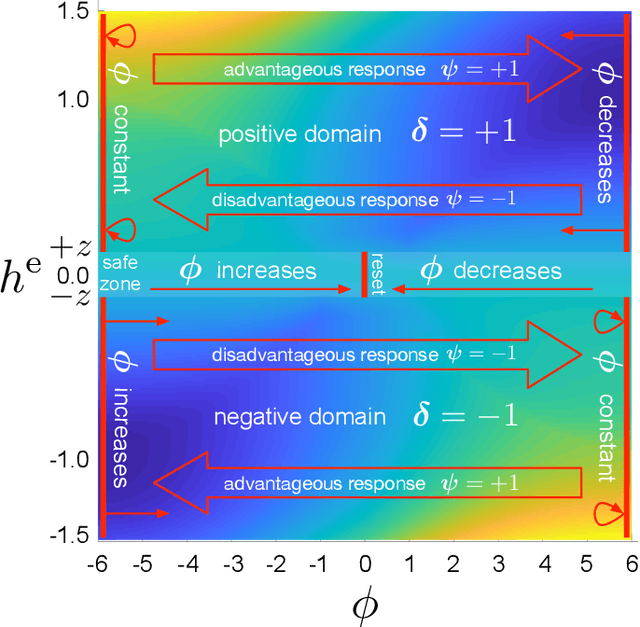

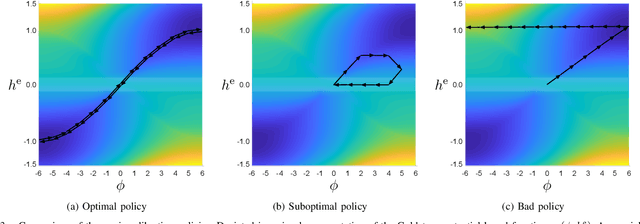

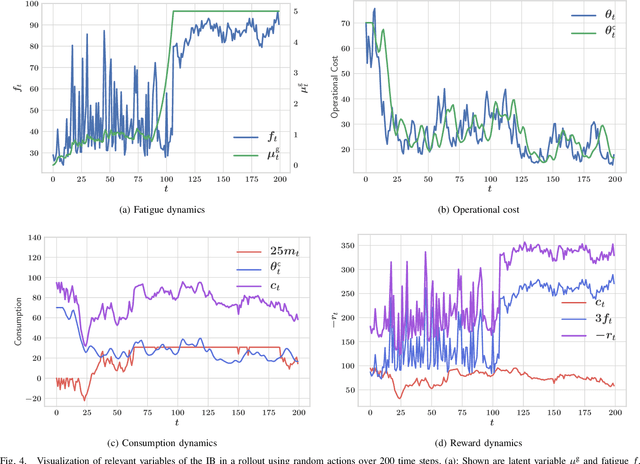

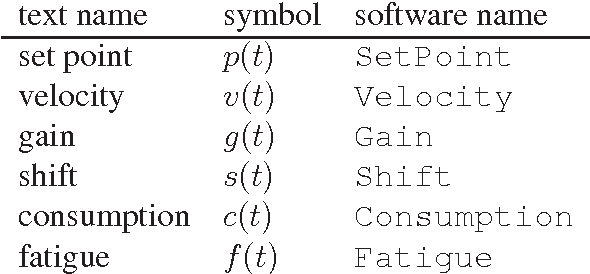

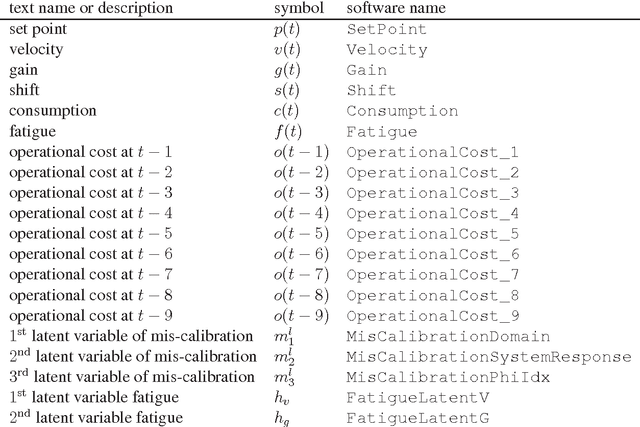

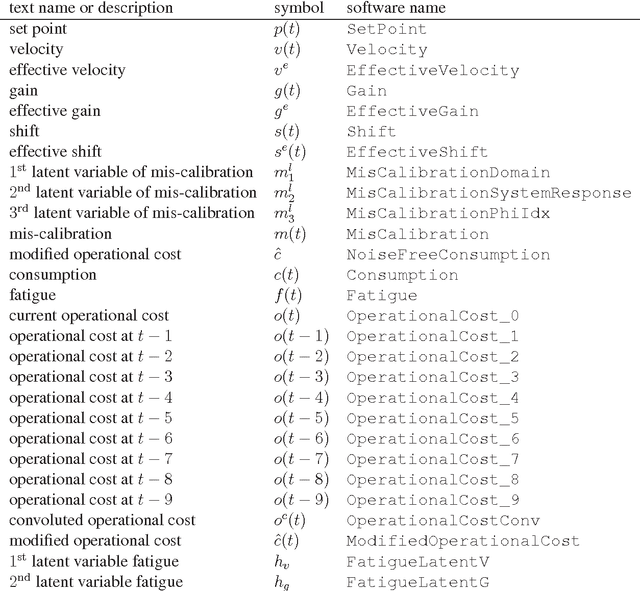

Abstract: In the research area of reinforcement learning (RL), novel and promising methods are frequently developed and introduced to the RL community. However, although many researchers are keen to apply their methods to real-world problems, implementing such methods in real industry environments is often a frustrating and tedious process. Generally, academic research groups have only limited access to real industrial data and applications. For this reason, new methods are usually developed, evaluated, and compared using artificial software benchmarks. On the one hand, these benchmarks are designed to provide interpretable RL training scenarios and detailed insight into the learning process of the method at hand. On the other hand, they usually do not share much similarity with industrial real-world applications. For this reason, we used our industry experience to design a benchmark which bridges the gap between freely available, documented, and motivated artificial benchmarks and properties of real industrial problems. The resulting industrial benchmark (IB) has been made publicly available to the RL community by publishing its Java and Python code, including an OpenAI Gym wrapper, on GitHub. In this paper, we motivate and describe in detail the IB's dynamics and identify prototypic experimental settings that capture common situations in real-world industry control problems.

Introduction to the "Industrial Benchmark"

Sep 28, 2017

Abstract: A novel reinforcement learning benchmark, called Industrial Benchmark, is introduced. The Industrial Benchmark aims at being realistic in the sense that it includes a variety of aspects that we found to be vital in industrial applications. It is not designed to be an approximation of any real system, but to pose the same hardness and complexity.

Batch Reinforcement Learning on the Industrial Benchmark: First Experiences

Jul 27, 2017

Abstract: The Particle Swarm Optimization Policy (PSO-P) has been recently introduced and proven to produce remarkable results when interacting with academic reinforcement learning benchmarks in an off-policy, batch-based setting. To further investigate its properties and feasibility for real-world applications, this paper investigates PSO-P on the so-called Industrial Benchmark (IB), a novel reinforcement learning (RL) benchmark that aims at being realistic by including a variety of aspects found in industrial applications, like continuous state and action spaces, a high-dimensional, partially observable state space, delayed effects, and complex stochasticity. The experimental results of PSO-P on IB are compared to results of closed-form control policies derived from the model-based Recurrent Control Neural Network (RCNN) and the model-free Neural Fitted Q-Iteration (NFQ). Experiments show that PSO-P is not only of interest for academic benchmarks, but also for real-world industrial applications, since it also yielded the best-performing policy in our IB setting. Compared to other well-established RL techniques, PSO-P produced outstanding results in performance and robustness, requiring only a relatively low amount of effort in finding adequate parameters or making complex design decisions.
