Volker Wulfmeyer

University of Hohenheim

Inductive biases in deep learning models for weather prediction

Apr 06, 2023

Abstract: Deep learning has recently gained immense popularity in the Earth sciences as it enables us to formulate purely data-driven models of complex Earth system processes. Deep learning-based weather prediction (DLWP) models have made significant progress in the last few years, achieving forecast skills comparable to established numerical weather prediction (NWP) models at comparatively lower computational cost. In order to train accurate, reliable, and tractable DLWP models with several millions of parameters, the model design needs to incorporate suitable inductive biases that encode structural assumptions about the data and modelled processes. When chosen appropriately, these biases enable faster learning and better generalisation to unseen data. Although inductive biases play a crucial role in successful DLWP models, they are often not stated explicitly, and how they contribute to model performance remains unclear. Here, we review and analyse the inductive biases of six state-of-the-art DLWP models, involving a deeper look at five key design elements: input data, forecasting objective, loss components, layered design of the deep learning architectures, and optimisation methods. We show how the design choices made in each of the five design elements relate to structural assumptions. Given recent developments in the broader DL community, we anticipate that the future of DLWP will likely see a wider use of foundation models -- large models pre-trained on big databases with self-supervised learning -- combined with explicit physics-informed inductive biases that allow the models to provide competitive forecasts even at the more challenging subseasonal-to-seasonal scales.

Hidden Latent State Inference in a Spatio-Temporal Generative Model

Sep 21, 2020

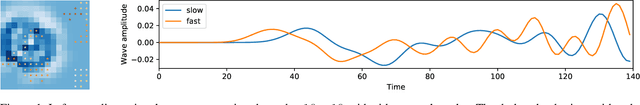

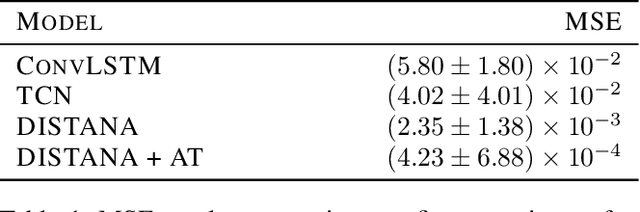

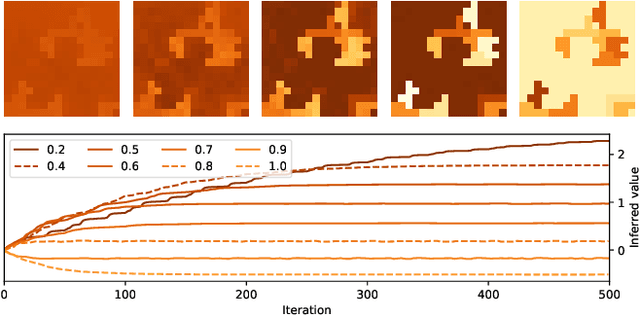

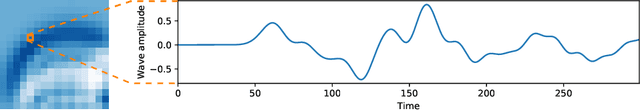

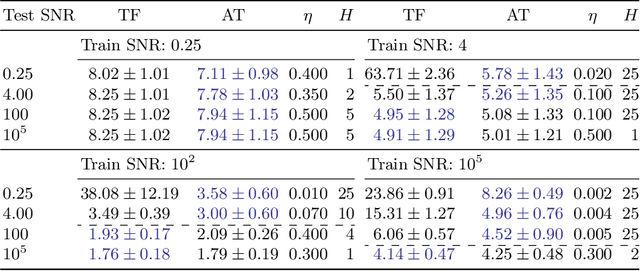

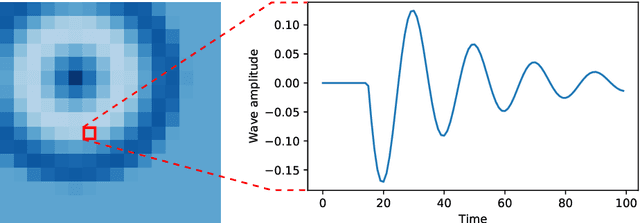

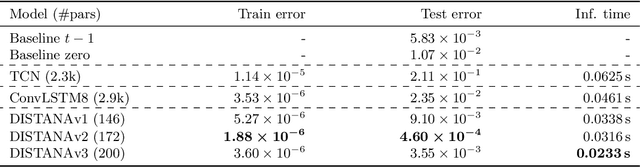

Abstract: Knowledge of the hidden factors that determine particular system dynamics is crucial for both explaining them and pursuing goal-directed, interventional actions. The inference of these factors without supervision given time series data remains an open challenge. Here, we focus on spatio-temporal processes, including wave propagations and weather dynamics, and assume that universal causes (e.g. physics) apply throughout space and time. We apply a novel DIstributed, Spatio-Temporal graph Artificial Neural network Architecture, DISTANA, which learns a generative model in such domains. DISTANA requires fewer parameters and yields more accurate predictions than temporal convolutional neural networks and other related approaches on a 2D circular wave prediction task. We show that DISTANA, when combined with a retrospective latent state inference principle called active tuning, can reliably derive hidden local causal factors. In a current weather prediction benchmark, DISTANA infers our planet's land-sea mask solely by observing temperature dynamics and uses the self-inferred information to improve its own prediction of temperature. We are convinced that the retrospective inference of latent states in generative RNN architectures will play an essential role in future research on causal inference and explainable systems.

Inferring, Predicting, and Denoising Causal Wave Dynamics

Sep 19, 2020

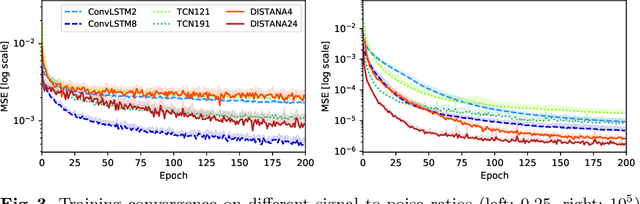

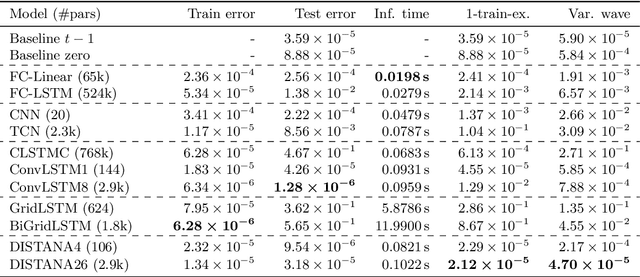

Abstract: The novel DISTributed Artificial neural Network Architecture (DISTANA) is a generative, recurrent graph convolution neural network. It implements a grid or mesh of locally parameterizable, laterally connected network modules. DISTANA is specifically designed to identify the causality behind spatially distributed, non-linear dynamical processes. We show that DISTANA is very well-suited to denoising data streams, given that re-occurring patterns are observed, significantly outperforming alternative approaches, such as temporal convolution networks and ConvLSTMs, on a complex spatial wave propagation benchmark. It produces stable and accurate closed-loop predictions even over hundreds of time steps. Moreover, it is able to effectively filter noise -- an ability that can be improved further by applying denoising autoencoder principles or by actively tuning latent neural state activities retrospectively. Results confirm that DISTANA is ready to model real-world spatio-temporal dynamics such as brain imaging, supply networks, water flow, or soil and weather data patterns.

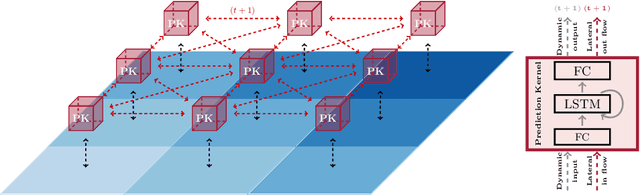

A Distributed Neural Network Architecture for Robust Non-Linear Spatio-Temporal Prediction

Dec 23, 2019

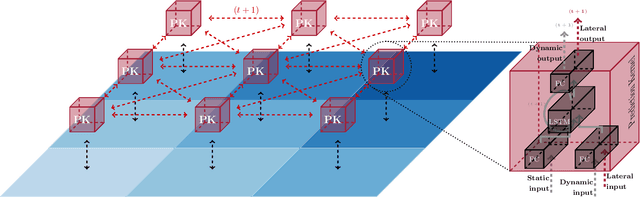

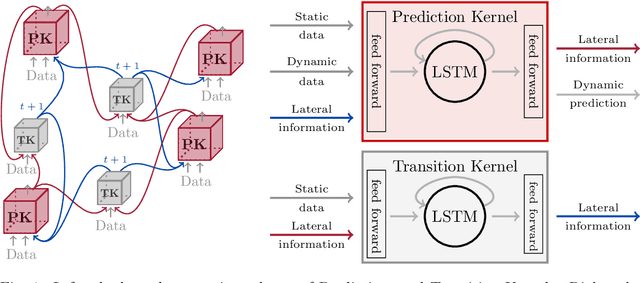

Abstract: We introduce a distributed spatio-temporal artificial neural network architecture (DISTANA). It encodes mesh nodes using recurrent, neural prediction kernels (PKs), while neural transition kernels (TKs) transfer information between neighboring PKs, together modeling and predicting spatio-temporal time series dynamics. As a consequence, DISTANA assumes that generally applicable causes, which may be locally modified, generate the observed data. DISTANA learns in a parallel, spatially distributed manner, scales to large problem spaces, is capable of approximating complex dynamics, and is particularly robust to overfitting when compared to other competitive ANN models. Moreover, it is applicable to heterogeneously structured meshes.
