Vishnu Pandi Chellapandi

Avoid Forgetting by Preserving Global Knowledge Gradients in Federated Learning with Non-IID Data

May 26, 2025

Abstract: The inevitable presence of data heterogeneity has made federated learning very challenging. There are numerous methods to deal with this issue, such as local regularization, better model fusion techniques, and data sharing. Though effective, they lack a deep understanding of how data heterogeneity can affect the global decision boundary. In this paper, we bridge this gap by performing an experimental analysis of the learned decision boundary using a toy example. Our observations are surprising: (1) we find that the existing methods suffer from forgetting and clients forget the global decision boundary and only learn the perfect local one, and (2) this happens regardless of the initial weights, and clients forget the global decision boundary even starting from pre-trained optimal weights. In this paper, we present FedProj, a federated learning framework that robustly learns the global decision boundary and avoids its forgetting during local training. To achieve better ensemble knowledge fusion, we design a novel server-side ensemble knowledge transfer loss to further calibrate the learned global decision boundary. To alleviate the issue of learned global decision boundary forgetting, we further propose leveraging an episodic memory of average ensemble logits on a public unlabeled dataset to regulate the gradient updates at each step of local training. Experimental results demonstrate that FedProj outperforms state-of-the-art methods by a large margin.
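The abstract does not spell out how the episodic memory regulates each gradient step. One common way to do this in the continual-learning literature is an A-GEM-style projection, sketched below as an assumption rather than as FedProj's actual update rule. Here `local_grad` stands for the gradient of the client's local loss and `memory_grad` for the gradient of a hypothetical loss computed against the stored ensemble logits on the public data; both names are illustrative.

```python
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equally sized vectors."""
    return sum(x * y for x, y in zip(a, b))


def project_gradient(local_grad: Sequence[float],
                     memory_grad: Sequence[float]) -> List[float]:
    """A-GEM-style projection (an assumed mechanism, not taken from the paper).

    If the local gradient conflicts with the memory gradient (negative inner
    product), remove the conflicting component so the step no longer works
    against the memory gradient. Otherwise return the local gradient unchanged.
    """
    inner = dot(local_grad, memory_grad)
    if inner >= 0.0:
        return list(local_grad)
    # inner < 0 implies memory_grad is non-zero, so the division is safe.
    scale = inner / dot(memory_grad, memory_grad)
    return [g - scale * m for g, m in zip(local_grad, memory_grad)]
```

After projection, the inner product between the returned gradient and `memory_grad` is never negative, which is the property that keeps a local step from undoing what the memory encodes.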

FedNMUT -- Federated Noisy Model Update Tracking Convergence Analysis

Mar 24, 2024

Abstract: A novel Decentralized Noisy Model Update Tracking Federated Learning algorithm (FedNMUT) is proposed that is tailored to function efficiently in the presence of noisy communication channels that reflect imperfect information exchange. This algorithm uses gradient tracking to minimize the impact of data heterogeneity while minimizing communication overhead. The proposed algorithm incorporates noise into its parameters to mimic the conditions of noisy communication channels, thereby enabling consensus among clients through a communication graph topology in such challenging environments. FedNMUT prioritizes parameter sharing and noise incorporation to increase the resilience of decentralized learning systems against noisy communications. We provide theoretical results for smooth non-convex objective functions and show that the algorithm reaches an $\epsilon$-stationary solution at the rate of $\mathcal{O}\left(\frac{1}{\sqrt{T}}\right)$, where $T$ is the total number of communication rounds. Additionally, empirical validation shows that FedNMUT outperforms existing state-of-the-art methods and conventional parameter-mixing approaches under imperfect information sharing. These results indicate that the proposed algorithm can counteract the negative effects of communication noise in a decentralized learning framework.
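As a reading aid, the stated rate can be unpacked under one common convention for stationarity in non-convex optimization. The symbols below are assumptions not given in the abstract: $f$ is the global objective, $\bar{x}_t$ is the average of the clients' models at round $t$, and $C$ is a constant independent of $T$. The paper may measure stationarity differently (for example with the minimum over rounds, or the unsquared norm).

```latex
\frac{1}{T}\sum_{t=0}^{T-1} \mathbb{E}\left\|\nabla f(\bar{x}_t)\right\|^2
\;\le\; \frac{C}{\sqrt{T}}
\qquad\Longrightarrow\qquad
\frac{1}{T}\sum_{t=0}^{T-1} \mathbb{E}\left\|\nabla f(\bar{x}_t)\right\|^2 \le \epsilon
\quad\text{whenever}\quad T \ge \frac{C^2}{\epsilon^2}.
```

Under this convention, halving the target $\epsilon$ requires roughly four times as many communication rounds.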

FedMFS: Federated Multimodal Fusion Learning with Selective Modality Communication

Oct 10, 2023

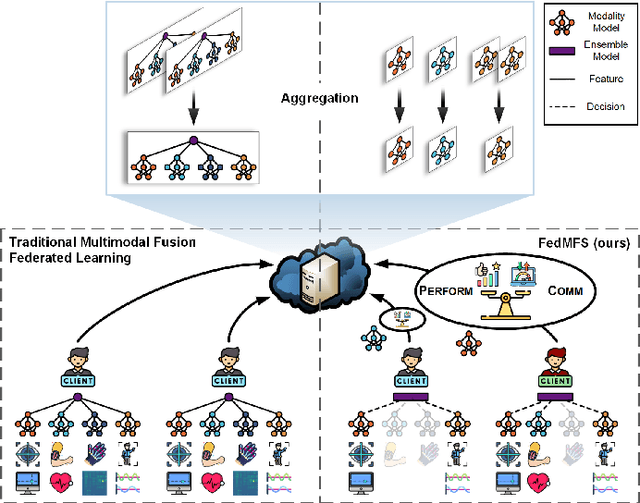

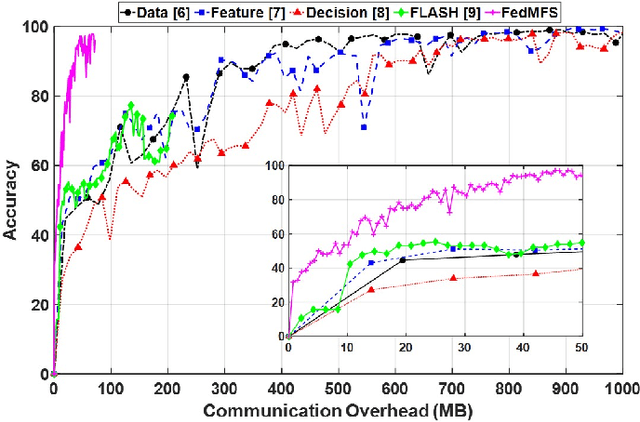

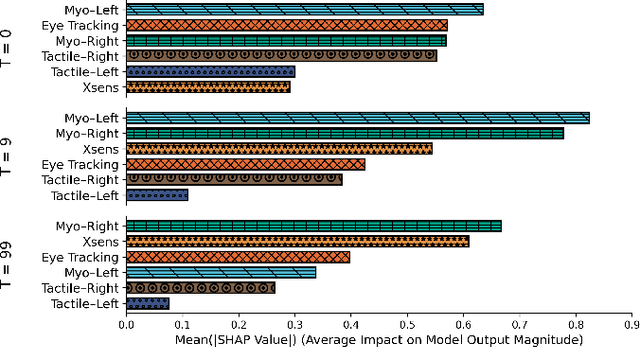

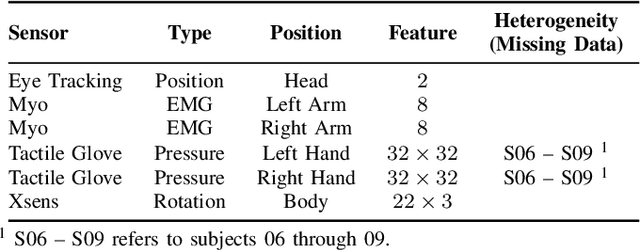

Abstract: Federated learning (FL) is a distributed machine learning (ML) paradigm that enables clients to collaborate without accessing, infringing upon, or leaking original user data by sharing only model parameters. In the Internet of Things (IoT), edge devices are increasingly leveraging multimodal data compositions and fusion paradigms to enhance model performance. However, in FL applications, two main challenges remain open: (i) addressing the issues caused by heterogeneous clients lacking specific modalities and (ii) devising an optimal modality upload strategy to minimize communication overhead while maximizing learning performance. In this paper, we propose Federated Multimodal Fusion learning with Selective modality communication (FedMFS), a new multimodal fusion FL methodology that can tackle the above-mentioned challenges. The key idea is to utilize Shapley values to quantify each modality's contribution and modality model size to gauge communication overhead, so that each client can selectively upload the modality models to the server for aggregation. This enables FedMFS to flexibly balance performance against communication costs, depending on resource constraints and applications. Experiments on real-world multimodal datasets demonstrate the effectiveness of FedMFS, achieving comparable accuracy while reducing communication overhead by one twentieth compared to baselines.
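To make the key idea concrete, the sketch below computes exact Shapley values for a small set of modalities and then picks which modality models to upload under a size budget. The abstract does not give the selection rule, so the greedy "contribution per megabyte" ranking, the function names, and the toy accuracy table are all illustrative assumptions, not the FedMFS procedure.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, List, Sequence


def shapley_values(players: Sequence[str],
                   value: Callable[[FrozenSet[str]], float]) -> Dict[str, float]:
    """Exact Shapley value of each player for a coalition value function."""
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(n):  # k = size of the coalition that p joins
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                total += weight * (value(s | {p}) - value(s))
        phi[p] = total
    return phi


def select_modalities(phi: Dict[str, float], size_mb: Dict[str, float],
                      budget_mb: float) -> List[str]:
    """Hypothetical rule: greedily keep the best contribution-per-MB models."""
    ranked = sorted(phi, key=lambda m: phi[m] / size_mb[m], reverse=True)
    chosen, used = [], 0.0
    for m in ranked:
        if used + size_mb[m] <= budget_mb:
            chosen.append(m)
            used += size_mb[m]
    return chosen


# Toy, made-up validation accuracies for each subset of two modalities.
TOY_ACCURACY = {
    frozenset(): 0.0,
    frozenset({"audio"}): 0.5,
    frozenset({"video"}): 0.6,
    frozenset({"audio", "video"}): 0.8,
}
toy_phi = shapley_values(["audio", "video"], TOY_ACCURACY.__getitem__)
```

Exact enumeration visits every subset of modalities, so it is only practical when a client has a handful of them; with many modalities a sampling-based approximation would be needed.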

Federated Learning for Connected and Automated Vehicles: A Survey of Existing Approaches and Challenges

Aug 21, 2023

Abstract: Machine learning (ML) is widely used for key tasks in Connected and Automated Vehicles (CAV), including perception, planning, and control. However, its reliance on vehicular data for model training presents significant challenges related to in-vehicle user privacy and communication overhead generated by massive data volumes. Federated learning (FL) is a decentralized ML approach that enables multiple vehicles to collaboratively develop models, broadening learning from various driving environments, enhancing overall performance, and simultaneously securing local vehicle data privacy and security. This survey paper presents a review of the advancements made in the application of FL for CAV (FL4CAV). First, centralized and decentralized frameworks of FL are analyzed, highlighting their key characteristics and methodologies. Second, diverse data sources, models, and data security techniques relevant to FL in CAVs are reviewed, emphasizing their significance in ensuring privacy and confidentiality. Third, specific and important applications of FL are explored, providing insight into the base models and datasets employed for each application. Finally, existing challenges for FL4CAV are listed and potential directions for future work are discussed to further enhance the effectiveness and efficiency of FL in the context of CAV.

A Survey of Federated Learning for Connected and Automated Vehicles

Mar 19, 2023

Abstract: Connected and Automated Vehicles (CAVs) are one of the emerging technologies in the automotive domain with the potential to alleviate the issues of accidents, traffic congestion, and pollutant emissions, leading to a safe, efficient, and sustainable transportation system. Machine learning-based methods are widely used in CAVs for crucial tasks like perception, motion planning, and motion control. However, machine learning models in CAVs are trained solely on local vehicle data, and their performance is not certain when exposed to new environments or unseen conditions. Federated learning (FL) is an effective solution for CAVs that enables collaborative model development with multiple vehicles in a distributed learning framework. FL enables CAVs to learn from a wide range of driving environments and improve their overall performance while ensuring the privacy and security of local vehicle data. In this paper, we review the progress accomplished by researchers in applying FL to CAVs. A broader view of the various data modalities and algorithms that have been implemented on CAVs is provided. Specific applications of FL are reviewed in detail, and an analysis of the challenges and future scope of research is presented.

On the Convergence of Decentralized Federated Learning Under Imperfect Information Sharing

Mar 19, 2023

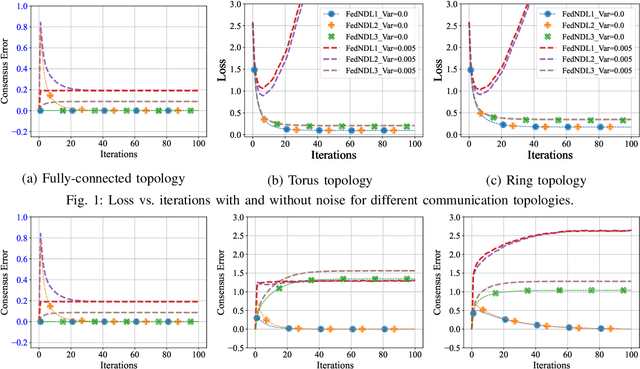

Abstract: Decentralized learning and optimization is a central problem in control that encompasses several existing and emerging applications, such as federated learning. While there exists a vast literature on this topic and most methods are centered around the celebrated average-consensus paradigm, less attention has been devoted to scenarios where the communication between the agents may be imperfect. To this end, this paper presents three different algorithms of Decentralized Federated Learning (DFL) in the presence of imperfect information sharing modeled as noisy communication channels. The first algorithm, Federated Noisy Decentralized Learning (FedNDL1), comes from the literature; noise is added to its parameters to simulate the presence of noisy communication channels. This algorithm shares parameters to form a consensus with the clients based on a communication graph topology through a noisy communication channel. The proposed second algorithm (FedNDL2) is similar to the first algorithm but with added noise to the parameters, and it performs the gossip averaging before the gradient optimization. The proposed third algorithm (FedNDL3), on the other hand, shares the gradients through noisy communication channels instead of the parameters. Theoretical and experimental results demonstrate that under imperfect information sharing, the third scheme that mixes gradients is more robust in the presence of a noisy channel compared with the algorithms from the literature that mix the parameters.
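For intuition, here is a minimal sketch of one round of parameter mixing over a noisy channel, loosely following the "gossip averaging before the gradient step" description above. It is an illustration under stated assumptions, not the authors' exact update: each client holds a single scalar parameter, the channel adds zero-mean Gaussian noise to every value received from another client, and `mixing` is a hypothetical doubly stochastic matrix encoding the communication graph.

```python
import random
from typing import Callable, List, Sequence


def noisy_gossip_round(params: Sequence[float],
                       grad_fns: Sequence[Callable[[float], float]],
                       mixing: Sequence[Sequence[float]],
                       lr: float,
                       noise_std: float,
                       rng: random.Random) -> List[float]:
    """Mix neighbours' noisy parameters, then take one local gradient step."""
    n = len(params)
    updated = []
    for i in range(n):
        mixed = 0.0
        for j in range(n):
            if mixing[i][j] == 0.0:
                continue  # clients i and j are not connected
            # A client's own parameter is exact; received ones are noisy.
            received = params[j]
            if j != i:
                received += rng.gauss(0.0, noise_std)
            mixed += mixing[i][j] * received
        # Local gradient evaluated at the mixed point (an assumed ordering).
        updated.append(mixed - lr * grad_fns[i](mixed))
    return updated
```

With `noise_std = 0.0` this reduces to ordinary gossip averaging followed by a gradient step; raising `noise_std` shows how channel noise enters the parameters directly at every round, which is the effect the gradient-mixing variant is reported to handle more robustly.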
