Antesh Upadhyay

FedSGM: A Unified Framework for Constraint Aware, Bidirectionally Compressed, Multi-Step Federated Optimization

Jan 23, 2026

Abstract: We introduce FedSGM, a unified framework for federated constrained optimization that addresses four major challenges in federated learning (FL): functional constraints, communication bottlenecks, local updates, and partial client participation. Building on the switching gradient method, FedSGM provides projection-free, primal-only updates, avoiding expensive dual-variable tuning or inner solvers. To handle communication limits, FedSGM incorporates bi-directional error feedback, correcting the bias introduced by compression while explicitly accounting for the interaction between compression noise and multi-step local updates. We derive convergence guarantees showing that the averaged iterate achieves the canonical $\mathcal{O}(1/\sqrt{T})$ rate, with additional high-probability bounds that decouple optimization progress from sampling noise due to partial participation. Additionally, we introduce a soft switching version of FedSGM to stabilize updates near the feasibility boundary. To our knowledge, FedSGM is the first framework to unify functional constraints, compression, multiple local updates, and partial client participation, establishing a theoretically grounded foundation for constrained federated learning. Finally, we validate the theoretical guarantees of FedSGM experimentally on Neyman-Pearson classification and constrained Markov decision process (CMDP) tasks.
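
The switching gradient idea that the abstract builds on can be illustrated with a minimal single-machine sketch. This is not FedSGM itself: there is no federation, compression, error feedback, or client sampling here, and the toy problem, step size `eta`, tolerance `eps`, and function names are all assumptions made for illustration. At each step the method follows the objective gradient when the constraint is (nearly) satisfied and the constraint gradient otherwise, then averages the near-feasible iterates.

```python
import numpy as np

def switching_gradient(grad_f, g, grad_g, x0, eta=0.05, eps=0.05, steps=2000):
    """Toy switching gradient method for: minimize f(x) subject to g(x) <= 0."""
    x = np.asarray(x0, dtype=float)
    feasible_sum = np.zeros_like(x)   # running sum of near-feasible iterates
    feasible_count = 0
    for _ in range(steps):
        if g(x) <= eps:
            # Constraint nearly satisfied: record the iterate, then descend on f.
            feasible_sum += x
            feasible_count += 1
            x = x - eta * grad_f(x)
        else:
            # Constraint violated: descend on g instead (no projection needed).
            x = x - eta * grad_g(x)
    if feasible_count == 0:
        raise RuntimeError("no near-feasible iterate was visited")
    return feasible_sum / feasible_count

# Hypothetical toy problem: minimize 0.5 * ||x - c||^2 subject to x[0] + x[1] <= 2.
c = np.array([2.0, 2.0])
a = np.array([1.0, 1.0])
grad_f = lambda x: x - c
g = lambda x: a @ x - 2.0
grad_g = lambda x: a

x_avg = switching_gradient(grad_f, g, grad_g, x0=np.zeros(2))
```

For this toy problem the constrained minimizer is the point (1, 1), and the averaged iterate lands close to it while keeping the constraint value within `eps`.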

Equitable Federated Learning with Activation Clustering

Oct 24, 2024

Abstract: Federated learning is a prominent distributed learning paradigm that incorporates collaboration among diverse clients, promotes data locality, and thus ensures privacy. These clients have their own technological, cultural, and other biases in the process of data generation. However, current standard practice often ignores this bias/heterogeneity, perpetuating bias against certain groups rather than mitigating it. In response to this concern, we propose an equitable clustering-based framework where the clients are clustered based on how similar they are to each other. We propose a unique way to construct the similarity matrix that uses activation vectors. Furthermore, we propose a client weighting mechanism to ensure that each cluster receives equal importance and establish an $O(1/\sqrt{K})$ rate of convergence to an $\epsilon$-stationary solution. We assess the effectiveness of our proposed strategy against common baselines, demonstrating its efficacy in reducing the bias among client clusters and consequently ameliorating algorithmic bias against specific groups.
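
As a rough illustration of the two ingredients mentioned above, the sketch below builds a client similarity matrix from activation vectors and assigns client weights so that every cluster carries equal total weight. The abstract does not specify the similarity measure or the exact weighting rule, so cosine similarity, the `1 / (num_clusters * cluster_size)` rule, and both function names are assumptions rather than the paper's method.

```python
import numpy as np

def cosine_similarity_matrix(activations):
    """Pairwise cosine similarity between per-client activation vectors (rows)."""
    a = np.asarray(activations, dtype=float)
    unit = a / np.linalg.norm(a, axis=1, keepdims=True)
    return unit @ unit.T

def equal_cluster_weights(labels):
    """Weight each client so that every cluster has the same total weight."""
    labels = np.asarray(labels)
    clusters, counts = np.unique(labels, return_counts=True)
    size_of = dict(zip(clusters.tolist(), counts.tolist()))
    num_clusters = len(clusters)
    return np.array([1.0 / (num_clusters * size_of[l]) for l in labels.tolist()])

# Hypothetical example: four clients, three in cluster 0 and one in cluster 1.
activations = np.array([[1.0, 0.0], [0.9, 0.1], [0.8, 0.2], [0.0, 1.0]])
similarity = cosine_similarity_matrix(activations)
weights = equal_cluster_weights([0, 0, 0, 1])
```

With these labels, each client in the three-member cluster gets weight 1/6 and the lone client gets 1/2, so both clusters contribute one half of the aggregate.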

FedNMUT -- Federated Noisy Model Update Tracking Convergence Analysis

Mar 24, 2024

Abstract: We propose a novel Decentralized Noisy Model Update Tracking Federated Learning algorithm (FedNMUT) tailored to function efficiently in the presence of noisy communication channels that reflect imperfect information exchange. The algorithm uses gradient tracking to reduce the impact of data heterogeneity while keeping communication overhead low. It incorporates noise into its parameters to mimic the conditions of noisy communication channels, thereby enabling consensus among clients through a communication graph topology in such challenging environments. FedNMUT prioritizes parameter sharing and noise incorporation to increase the resilience of decentralized learning systems against noisy communications. We provide theoretical results for smooth non-convex objective functions and show that our algorithm reaches an $\epsilon$-stationary solution at the rate of $\mathcal{O}\left(\frac{1}{\sqrt{T}}\right)$, where $T$ is the total number of communication rounds. Additionally, via empirical validation, we demonstrate that FedNMUT outperforms existing state-of-the-art methods and conventional parameter-mixing approaches in dealing with imperfect information sharing. This demonstrates the capability of the proposed algorithm to counteract the negative effects of communication noise in a decentralized learning framework.

Improved Convergence Analysis and SNR Control Strategies for Federated Learning in the Presence of Noise

Jul 14, 2023

Abstract: We propose an improved convergence analysis technique that characterizes the distributed learning paradigm of federated learning (FL) with imperfect/noisy uplink and downlink communications. Such imperfect communication scenarios arise in the practical deployment of FL in emerging communication systems and protocols. The analysis developed in this paper demonstrates, for the first time, that there is an asymmetry in the detrimental effects of uplink and downlink communications in FL. In particular, the adverse effect of the downlink noise is more severe on the convergence of FL algorithms. Using this insight, we propose improved signal-to-noise ratio (SNR) control strategies that, discarding the negligible higher-order terms, lead to a similar convergence rate for FL as in the case of a perfect, noise-free communication channel while consuming significantly fewer power resources than existing solutions. In particular, we establish that to maintain the $O(1/\sqrt{K})$ rate of convergence of noise-free FL, we need to scale down the uplink and downlink noise by $\Omega(\sqrt{k})$ and $\Omega(k)$ respectively, where $k$ denotes the communication round, $k=1,\dots,K$. Our theoretical result has two further benefits: first, it does not rely on the somewhat unrealistic assumption of bounded client dissimilarity, and second, it only requires smooth non-convex loss functions, a function class better suited for modern machine learning and deep learning models. We also perform extensive empirical analysis to verify the validity of our theoretical findings.

On the Convergence of Decentralized Federated Learning Under Imperfect Information Sharing

Mar 19, 2023

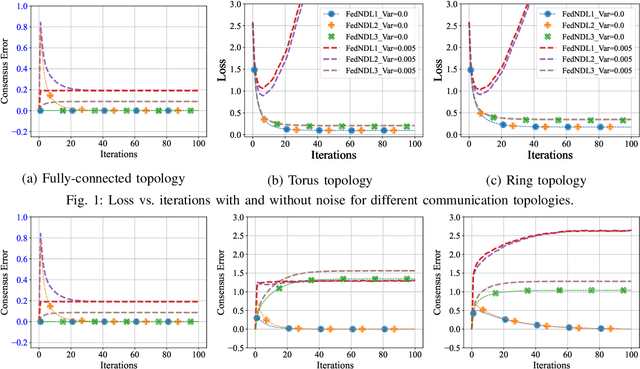

Abstract: Decentralized learning and optimization is a central problem in control that encompasses several existing and emerging applications, such as federated learning. While there is a vast literature on this topic and most methods are centered around the celebrated average-consensus paradigm, less attention has been devoted to scenarios where the communication between the agents may be imperfect. To this end, this paper presents three different algorithms for Decentralized Federated Learning (DFL) in the presence of imperfect information sharing modeled as noisy communication channels. The first algorithm, Federated Noisy Decentralized Learning (FedNDL1), comes from the literature; noise is added to the clients' parameters to simulate noisy communication channels. This algorithm shares parameters to form a consensus among the clients based on a communication graph topology through a noisy communication channel. The proposed second algorithm (FedNDL2) is similar to the first, with noise added to the parameters, but it performs the gossip averaging before the gradient optimization. The proposed third algorithm (FedNDL3), on the other hand, shares the gradients through noisy communication channels instead of the parameters. Theoretical and experimental results demonstrate that under imperfect information sharing, the third scheme, which mixes gradients, is more robust to a noisy channel than the algorithms from the literature that mix the parameters.
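
To make the parameter-mixing setting concrete, here is a minimal sketch of gossip averaging over a noisy channel followed by a local gradient step. It is a generic illustration, not the exact FedNDL1, FedNDL2, or FedNDL3 update from the paper: the ring topology, the mixing matrix `W`, the scalar quadratic losses, the Gaussian noise model, and the function name are all assumptions.

```python
import numpy as np

def noisy_gossip_descent(W, b, eta=0.1, sigma=0.0, steps=500, seed=0):
    """Each client i holds a scalar x[i] and a local loss 0.5 * (x - b[i])**2."""
    rng = np.random.default_rng(seed)
    x = np.zeros(len(b))
    for _ in range(steps):
        # Parameters pass through a noisy channel before mixing. For simplicity
        # the noise also perturbs the copy a client keeps of its own parameter.
        received = x + sigma * rng.standard_normal(len(b))
        mixed = W @ received             # gossip averaging with neighbours
        x = mixed - eta * (mixed - b)    # local gradient step at the mixed point
    return x

# Hypothetical ring of four clients with a symmetric, doubly stochastic mixing matrix.
W = np.array([
    [0.50, 0.25, 0.00, 0.25],
    [0.25, 0.50, 0.25, 0.00],
    [0.00, 0.25, 0.50, 0.25],
    [0.25, 0.00, 0.25, 0.50],
])
b = np.array([1.0, 2.0, 3.0, 4.0])

x_clean = noisy_gossip_descent(W, b)              # noise-free channel
x_noisy = noisy_gossip_descent(W, b, sigma=0.01)  # noisy channel
```

In this toy setup the network average converges to the minimizer of the summed losses (the mean of `b`) when the channel is noise-free, while individual clients settle at different values around it because the step size is constant. With channel noise the average keeps fluctuating around that point instead of converging.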
