Venkata Sriram Siddhardh Nadendla

Fairness Perceptions in Regression-based Predictive Models

May 08, 2025

Abstract:Regression-based predictive analytics used in modern kidney transplantation is known to inherit biases from training data. This leads to social discrimination and inefficient organ utilization, particularly in the context of a few social groups. Despite this concern, there is limited research on fairness in regression and its impact on organ utilization and placement. This paper introduces three novel divergence-based group fairness notions: (i) independence, (ii) separation, and (iii) sufficiency, to assess the fairness of regression-based analytics tools. In addition, fairness preferences are investigated from crowd feedback, in order to identify a socially accepted group fairness criterion for evaluating these tools. A total of 85 participants were recruited from the Prolific crowdsourcing platform, and a Mixed-Logit discrete choice model was used to model fairness feedback and estimate social fairness preferences. The findings show a strong preference for the separation and sufficiency fairness notions, and indicate that the predictive analytics is deemed fair with respect to gender and race groups, but unfair in terms of age groups.
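The independence notion mentioned in the abstract above can be illustrated with a minimal sketch. It assumes that independence is checked by comparing the distribution of predicted scores across groups, and it uses the Jensen-Shannon divergence over fixed histogram bins. The choice of divergence, the binning, and the function names (`histogram`, `js_divergence`, `independence_gap`) are illustrative assumptions, not the paper's definitions.

```python
import math

def histogram(values, edges):
    """Empirical probability of each bin; the last bin includes its right edge."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            is_last = i == len(edges) - 2
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the given bin edges")
    return [c / total for c in counts]

def kl(p, q):
    """Kullback-Leibler divergence in bits; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q):
    """Jensen-Shannon divergence in bits (0 = identical, 1 = disjoint support)."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def independence_gap(predictions, groups, edges):
    """Largest pairwise divergence between per-group prediction histograms.

    Assumption: a value near 0 is read as "predictions are roughly
    independent of group membership"; any threshold is left to the user.
    """
    by_group = {}
    for y, g in zip(predictions, groups):
        by_group.setdefault(g, []).append(y)
    names = sorted(by_group)
    hists = {g: histogram(by_group[g], edges) for g in names}
    pairs = [(a, b) for i, a in enumerate(names) for b in names[i + 1:]]
    return max((js_divergence(hists[a], hists[b]) for a, b in pairs), default=0.0)
```

Separation and sufficiency would condition the same kind of comparison on the true outcome or on the prediction, respectively; those variants are not sketched here.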

Few-Shot Transfer Learning for Individualized Braking Intent Detection on Neuromorphic Hardware

Jul 21, 2024

Abstract:Objective: This work explores the use of a few-shot transfer learning method to train and implement a convolutional spiking neural network (CSNN) on a BrainChip Akida AKD1000 neuromorphic system-on-chip for developing individual-level, instead of traditionally used group-level, models using electroencephalographic data. The efficacy of the method is studied on a task related to advanced driver-assist systems: predicting braking intention. Main Results: The methodology is shown to produce individual-specific braking intention predictive models by rapidly adapting the group-level model in as few as three training epochs while achieving at least 90% accuracy, true positive rate and true negative rate. Further, results show an energy reduction of over 97% with only a 1.3x increase in latency when using the Akida AKD1000 processor for network inference compared to an Intel Xeon CPU. Similar results were obtained in a subsequent ablation study using a subset of five out of 19 channels. Significance: Especially relevant to real-time applications, this work presents an energy-efficient, few-shot transfer learning method that is implemented on a neuromorphic processor capable of training a CSNN as new data becomes available, operating conditions change, or to customize group-level models to yield personalized models unique to each individual.

Driver Fatigue Prediction using Randomly Activated Neural Networks for Smart Ridesharing Platforms

Apr 16, 2024

Abstract:Drivers in ridesharing platforms exhibit cognitive atrophy and fatigue as they accept ride offers over the course of the day, which can have a significant impact on the overall efficiency of the ridesharing platform. In contrast to the current literature, which focuses primarily on modeling and learning drivers' preferences across different ride offers, this paper proposes a novel Dynamic Discounted Satisficing (DDS) heuristic to model and predict a driver's sequential ride decisions during a given shift. Based on the DDS heuristic, a novel stochastic neural network with random activations is proposed to model the heuristic and predict the final decision made by a given driver. The presence of random activations in the network necessitated the development of a novel training algorithm called Sampling-Based Back Propagation Through Time (SBPTT), where gradients are computed for independent instances of neural networks (obtained via sampling the distribution of the activation threshold) and aggregated to update the network parameters. Using both simulation experiments and a real Chicago taxi dataset, this paper demonstrates the improved performance of the proposed approach when compared to state-of-the-art methods.
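The sample-then-aggregate idea behind the training algorithm described above can be sketched on a toy model. This is not SBPTT itself: it omits the recurrence over time, uses a single weight with a smooth sigmoid unit, and averages the per-instance gradients. The model, the loss, the averaging rule, and the names `grad_for_instance` and `sampled_gradient_step` are all illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def grad_for_instance(w, x, y, theta):
    """Gradient of 0.5 * (sigmoid(w*x - theta) - y)**2 with respect to w.

    `theta` is the activation threshold of one sampled network instance.
    """
    out = sigmoid(w * x - theta)
    return (out - y) * out * (1.0 - out) * x

def sampled_gradient_step(w, data, sample_theta, n_samples=32, lr=0.5):
    """One update: draw thresholds, compute a gradient per instance, average.

    `sample_theta` is any zero-argument callable returning a threshold,
    e.g. `lambda: random.gauss(0.0, 1.0)` (an assumed distribution).
    """
    total = 0.0
    for _ in range(n_samples):
        theta = sample_theta()  # one independent network instance
        total += sum(grad_for_instance(w, x, y, theta) for x, y in data) / len(data)
    return w - lr * total / n_samples
```

Each sampled threshold fixes the randomness, so the per-instance gradient is an ordinary deterministic derivative; only the aggregation step sees the distribution.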

Convolutional Spiking Neural Networks for Detecting Anticipatory Brain Potentials Using Electroencephalogram

Aug 14, 2022

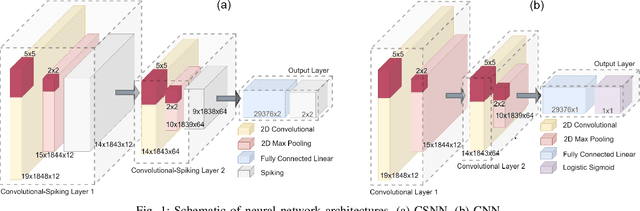

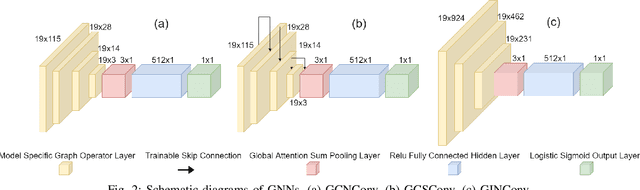

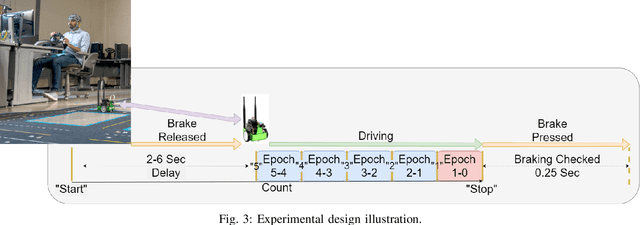

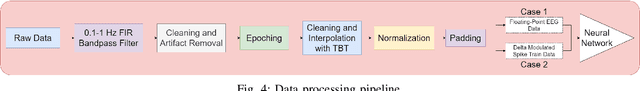

Abstract:Spiking neural networks (SNNs) are receiving increased attention as a means to develop "biologically plausible" machine learning models. These networks mimic synaptic connections in the human brain and produce spike trains, which can be approximated by binary values, precluding high computational cost with floating-point arithmetic circuits. Recently, the addition of convolutional layers to combine the feature extraction power of convolutional networks with the computational efficiency of SNNs has been introduced. In this paper, the feasibility of using a convolutional spiking neural network (CSNN) as a classifier to detect anticipatory slow cortical potentials related to braking intention in human participants using an electroencephalogram (EEG) was studied. The EEG data was collected during an experiment wherein participants operated a remote controlled vehicle on a testbed designed to simulate an urban environment. Participants were alerted to an incoming braking event via an audio countdown to elicit anticipatory potentials that were then measured using an EEG. The CSNN's performance was compared to a standard convolutional neural network (CNN) and three graph neural networks (GNNs) via 10-fold cross-validation. The results showed that the CSNN outperformed the other neural networks.

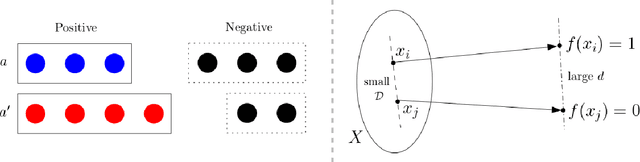

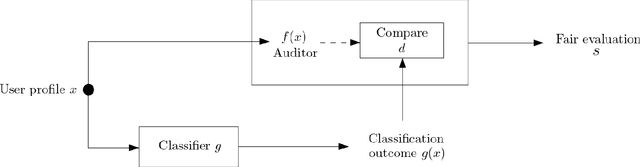

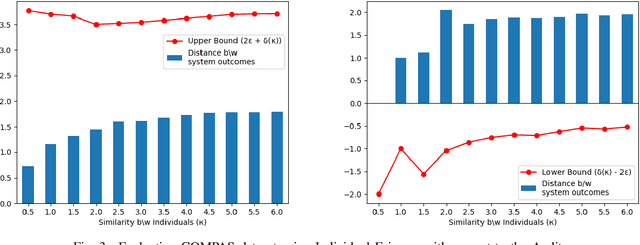

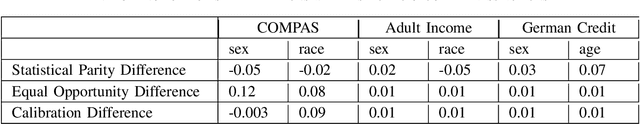

On Learning and Enforcing Latent Assessment Models using Binary Feedback from Human Auditors Regarding Black-Box Classifiers

Feb 16, 2022

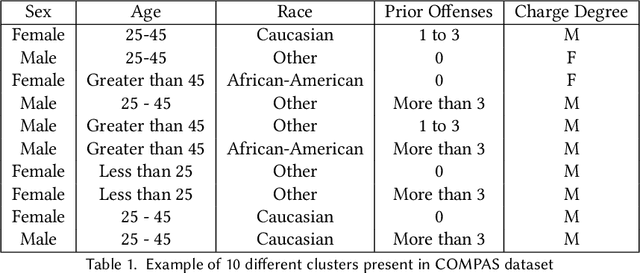

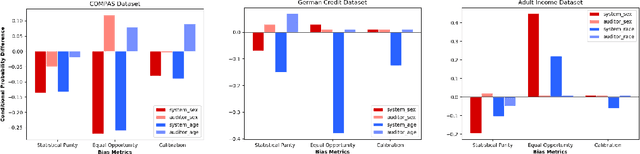

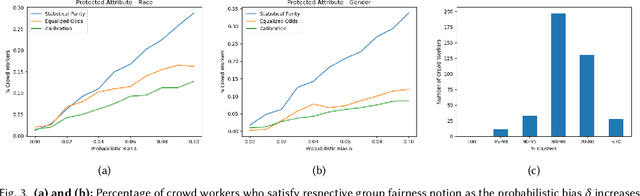

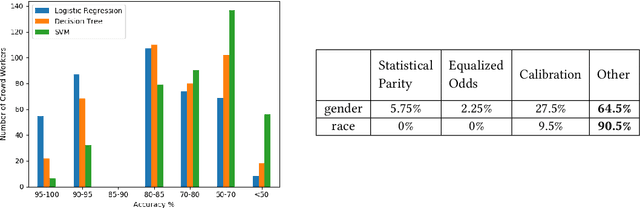

Abstract:Algorithmic fairness literature presents numerous mathematical notions and metrics, and also points to a tradeoff between them while satisficing some or all of them simultaneously. Furthermore, the contextual nature of fairness notions makes it difficult to automate bias evaluation in diverse algorithmic systems. Therefore, in this paper, we propose a novel model called latent assessment model (LAM) to characterize binary feedback provided by human auditors, by assuming that the auditor compares the classifier's output to his or her own intrinsic judgment for each input. We prove that individual and group fairness notions are guaranteed as long as the auditor's intrinsic judgments inherently satisfy the fairness notion at hand, and are relatively similar to the classifier's evaluations. We also demonstrate this relationship between LAM and traditional fairness notions on three well-known datasets, namely COMPAS, German credit and Adult Census Income datasets. Furthermore, we also derive the minimum number of feedback samples needed to obtain PAC learning guarantees to estimate LAM for black-box classifiers. These guarantees are also validated via training standard machine learning algorithms on real binary feedback elicited from 400 human auditors regarding COMPAS.

Online-Learning Deep Neuro-Adaptive Dynamic Inversion Controller for Model Free Control

Jul 21, 2021

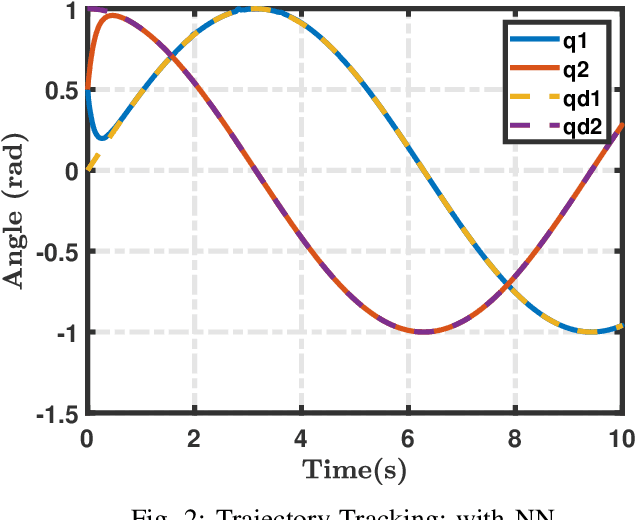

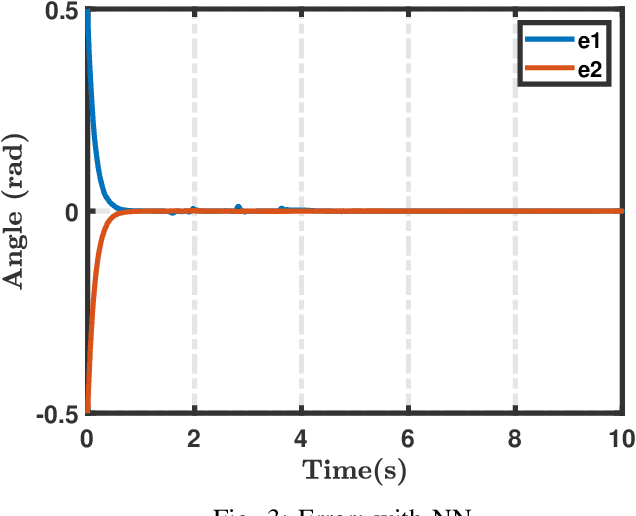

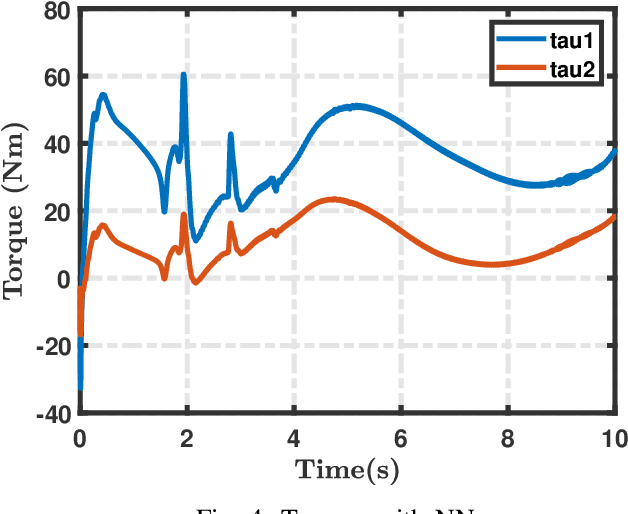

Abstract:Adaptive methods are popular within the control literature due to the flexibility and forgiveness they offer in the area of modelling. Neural network adaptive control is favorable specifically for the powerful nature of the machine learning algorithm to approximate unknown functions and for the ability to relax certain constraints within traditional adaptive control. Deep neural networks are large framework networks with approximation characteristics vastly superior to those of their shallow counterparts. However, implementing a deep neural network can be difficult due to size-specific complications such as vanishing/exploding gradients in training. In this paper, a neuro-adaptive controller is implemented featuring a deep neural network trained on a new weight update law that escapes the vanishing/exploding gradient problem by only incorporating the sign of the gradient. The type of controller designed is an adaptive dynamic inversion controller utilizing a modified state observer in a secondary estimation loop to train the network. The deep neural network learns the entire plant model on-line, creating a controller that is completely model-free. The controller design is tested in simulation on a 2-link planar robot arm. The controller is able to learn the nonlinear plant quickly and displays good performance in the tracking control problem.
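The idea of using only the sign of the gradient can be shown with a minimal sketch. This is a generic sign-based update, not the paper's weight update law, which the abstract does not spell out; the function names and the fixed step size `lr` are assumptions.

```python
def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def sign_update(weights, grads, lr=0.01):
    """Move each weight by a fixed step `lr` against the sign of its gradient.

    The step size does not depend on the gradient magnitude, so very small
    or very large gradients produce the same-sized update.
    """
    return [w - lr * sign(g) for w, g in zip(weights, grads)]

# A gradient of 1e-12 and a gradient of -1e9 both move the weight by lr.
updated = sign_update([1.0, 1.0, 1.0], [1e-12, -1e9, 0.0], lr=0.1)
```

Because the magnitude is discarded, layers whose gradients have shrunk or blown up still receive bounded, non-vanishing updates, which is the property the abstract attributes to its update law.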

Strategic Mitigation of Agent Inattention in Drivers with Open-Quantum Cognition Models

Jul 21, 2021

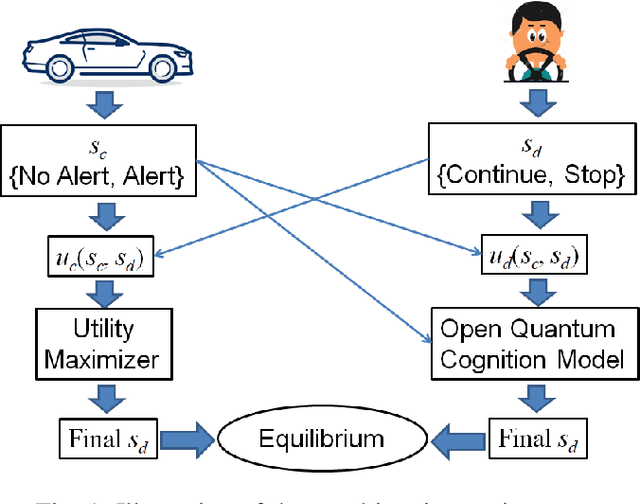

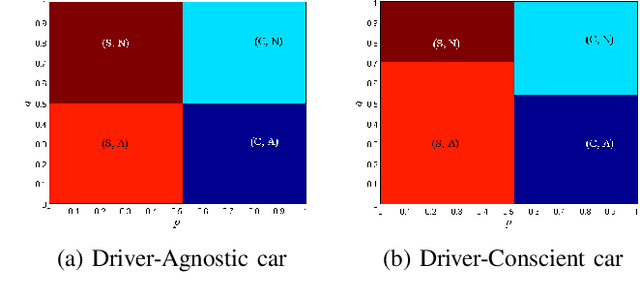

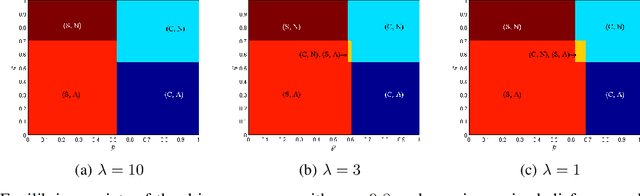

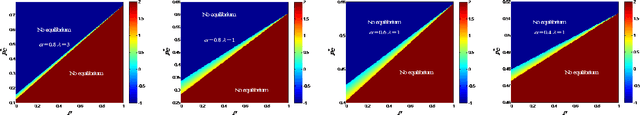

Abstract:State-of-the-art driver-assist systems have failed to effectively mitigate driver inattention and have had minimal impact on the ever-growing number of road mishaps (e.g. life loss, physical injuries due to accidents caused by various factors that lead to driver inattention). This is because traditional human-machine interaction settings are modeled in classical and behavioral game-theoretic domains which are technically appropriate to characterize strategic interaction between either two utility maximizing agents, or human decision makers. Therefore, in an attempt to improve the persuasive effectiveness of driver-assist systems, we develop a novel strategic and personalized driver-assist system which adapts to the driver's mental state and choice behavior. First, we propose a novel equilibrium notion in human-system interaction games, where the system maximizes its expected utility and human decisions can be characterized using any general decision model. Then we use this novel equilibrium notion to investigate the strategic driver-vehicle interaction game where the car presents a persuasive recommendation to steer the driver towards safer driving decisions. We assume that the driver employs an open-quantum system cognition model, which captures complex aspects of human decision making such as violations of the classical law of total probability and incompatibility of certain mental representations of information. We present closed-form expressions for players' final responses to each other's strategies so that we can numerically compute both pure and mixed equilibria. Numerical results are presented to illustrate both kinds of equilibria.

Non-Comparative Fairness for Human-Auditing and Its Relation to Traditional Fairness Notions

Jun 29, 2021

Abstract:Bias evaluation in machine-learning based services (MLS) based on traditional algorithmic fairness notions that rely on comparative principles is practically difficult, making it necessary to rely on human auditor feedback. However, despite receiving rigorous training on various comparative fairness notions, human auditors are known to disagree on various aspects of fairness notions in practice, making it difficult to collect reliable feedback. This paper offers a paradigm shift to the domain of algorithmic fairness by proposing a new fairness notion based on the principle of non-comparative justice. In contrast to traditional fairness notions where the outcomes of two individuals/groups are compared, our proposed notion compares the MLS' outcome with a desired outcome for each input. This desired outcome naturally describes a human auditor's expectation, and can be easily used to evaluate MLS on crowd-auditing platforms. We show that any MLS can be deemed fair from the perspective of comparative fairness (be it in terms of individual fairness, statistical parity, equal opportunity or calibration) if it is non-comparatively fair with respect to a fair auditor. We also show that the converse holds true in the context of individual fairness. Given that such an evaluation relies on the trustworthiness of the auditor, we also present an approach to identify fair and reliable auditors by estimating their biases with respect to a given set of sensitive attributes, as well as quantify the uncertainty in the estimation of biases within a given MLS. Furthermore, all of the above results are also validated on COMPAS, German credit and Adult Census Income datasets.
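The per-input comparison that defines the non-comparative notion above can be sketched as follows. The sketch assumes numeric outcomes, an absolute-difference distance, and a tolerance parameter; these choices and the function names are illustrative assumptions rather than the paper's formal definition.

```python
def noncomparative_violations(mls_outputs, desired_outputs, tolerance=0.1):
    """Indices where the MLS outcome differs from the auditor's desired
    outcome by more than `tolerance` (an assumed closeness threshold)."""
    return [
        i
        for i, (f, d) in enumerate(zip(mls_outputs, desired_outputs))
        if abs(f - d) > tolerance
    ]

def is_noncomparatively_fair(mls_outputs, desired_outputs, tolerance=0.1):
    """True when every input's outcome is within tolerance of the desired one."""
    return not noncomparative_violations(mls_outputs, desired_outputs, tolerance)

# Hypothetical scores for three inputs; only the second is far from the
# auditor's expectation.
violations = noncomparative_violations([0.2, 0.8, 0.5], [0.25, 0.4, 0.5])
```

Note that no pair of individuals or groups is compared anywhere in the check: each input is judged only against the auditor's expectation for that same input.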

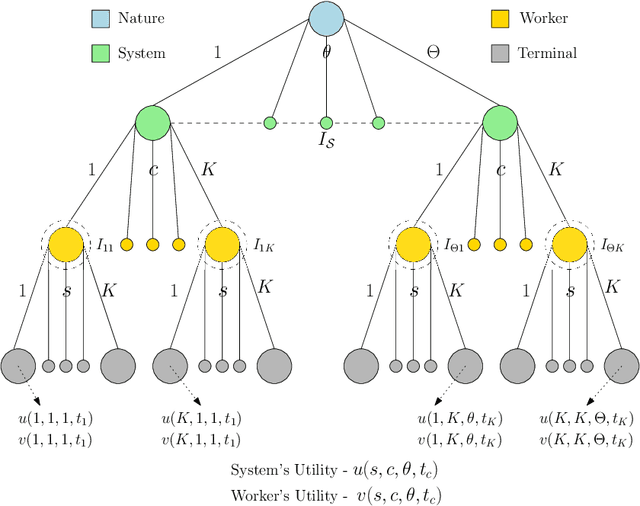

On the Design of Strategic Task Recommendations for Sustainable Crowdsourcing-Based Content Moderation

Jun 04, 2021

Abstract:A crowdsourcing-based content moderation platform hosts content moderation tasks for crowd workers to review user submissions (e.g. text, images and videos) and make decisions regarding the admissibility of the posted content, along with a gamut of other tasks such as image labeling and speech-to-text conversion. In an attempt to reduce cognitive overload among workers and improve system efficiency, these platforms offer personalized task recommendations according to each worker's preferences. However, the current state-of-the-art recommendation systems disregard the effects on workers' mental health, especially when they are repeatedly exposed to content moderation tasks with extreme content (e.g. violent images, hate-speech). In this paper, we propose a novel, strategic recommendation system for the crowdsourcing platform that recommends jobs based on the worker's mental status. Specifically, this paper models interaction between the crowdsourcing platform's recommendation system (leader) and the worker (follower) as a Bayesian Stackelberg game where the type of the follower corresponds to the worker's cognitive atrophy rate and task preferences. We discuss how rewards and costs should be designed to steer the game towards desired outcomes in terms of maximizing the platform's productivity, while simultaneously improving the working conditions of crowd workers.

On the Identification of Fair Auditors to Evaluate Recommender Systems based on a Novel Non-Comparative Fairness Notion

Sep 09, 2020

Abstract:Decision-support systems are information systems that offer support to people's decisions in various applications such as judiciary, real-estate and banking sectors. Lately, these support systems have been found to be discriminatory in the context of many practical deployments. In an attempt to evaluate and mitigate these biases, algorithmic fairness literature has been nurtured using notions of comparative justice, which relies primarily on comparing two or more individuals or groups within the society that is supported by such systems. However, such a fairness notion is not very useful in the identification of fair auditors who are hired to evaluate latent biases within decision-support systems. As a solution, we introduce a paradigm shift in algorithmic fairness by proposing a new fairness notion based on the principle of non-comparative justice. Assuming that the auditor makes fairness evaluations based on some (potentially unknown) desired properties of the decision-support system, the proposed fairness notion compares the system's outcome with the auditor's desired outcome. We show that the proposed fairness notion also provides guarantees in terms of comparative fairness notions by proving that any system can be deemed fair from the perspective of comparative fairness (e.g. individual fairness and statistical parity) if it is non-comparatively fair with respect to an auditor who has been deemed fair with respect to the same fairness notions. We also show that the converse holds true in the context of individual fairness. A brief discussion is also presented regarding how our fairness notion can be used to identify fair and reliable auditors, and how we can use them to quantify biases in decision-support systems.
