Mukund Telukunta

Fairness Perceptions in Regression-based Predictive Models

May 08, 2025

Abstract: Regression-based predictive analytics used in modern kidney transplantation is known to inherit biases from training data. This leads to social discrimination and inefficient organ utilization, particularly for certain social groups. Despite this concern, there is limited research on fairness in regression and its impact on organ utilization and placement. This paper introduces three novel divergence-based group fairness notions, namely (i) independence, (ii) separation, and (iii) sufficiency, to assess the fairness of regression-based analytics tools. In addition, fairness preferences are investigated using crowd feedback in order to identify a socially accepted group fairness criterion for evaluating these tools. A total of 85 participants were recruited from the Prolific crowdsourcing platform, and a Mixed-Logit discrete choice model was used to model the fairness feedback and estimate social fairness preferences. The findings show a strong preference for the separation and sufficiency fairness notions, and indicate that the predictive analytics is deemed fair with respect to gender and race groups but unfair with respect to age groups.
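The abstract does not specify which divergence or estimator underlies the three notions. The Python sketch below is one hypothetical reading: it bins predictions and outcomes into histograms, measures the Jensen-Shannon divergence between groups, and reports the worst pairwise gap. Independence compares prediction distributions across groups, separation does so within bins of the true outcome, and sufficiency compares outcome distributions within bins of the prediction. The binning, the choice of Jensen-Shannon divergence and all function names are assumptions, not the paper's definitions.

```python
import math
from itertools import combinations


def bin_index(v, edges):
    """Index of the bin containing v; values outside [edges[0], edges[-1]] are clamped."""
    idx = 0
    for i in range(len(edges) - 1):
        if v >= edges[i]:
            idx = i
    return idx


def histogram(values, edges):
    """Normalized histogram of values over the bins defined by edges."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        counts[bin_index(v, edges)] += 1
    total = sum(counts)
    return [c / total for c in counts]


def js_divergence(p, q):
    """Jensen-Shannon divergence with base-2 logs, so it lies in [0, 1]."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(a, b):
        # Terms with a_i = 0 contribute nothing; m_i > 0 whenever a_i > 0.
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def group_gap(values, groups, edges):
    """Largest pairwise JS divergence between per-group distributions of values."""
    by_group = {}
    for v, g in zip(values, groups):
        by_group.setdefault(g, []).append(v)
    hists = [histogram(vs, edges) for vs in by_group.values()]
    return max((js_divergence(p, q) for p, q in combinations(hists, 2)), default=0.0)


def conditional_gap(values, condition, groups, edges):
    """Worst-case group gap in `values` within each bin of the conditioning variable."""
    worst = 0.0
    for b in range(len(edges) - 1):
        sel = [i for i, c in enumerate(condition) if bin_index(c, edges) == b]
        if len({groups[i] for i in sel}) < 2:
            continue  # a bin needs at least two groups to be compared
        sub_vals = [values[i] for i in sel]
        sub_groups = [groups[i] for i in sel]
        worst = max(worst, group_gap(sub_vals, sub_groups, edges))
    return worst


def independence(y_hat, groups, edges):
    # Prediction distributions compared across groups, unconditionally.
    return group_gap(y_hat, groups, edges)


def separation(y_hat, y, groups, edges):
    # Prediction distributions compared across groups, given the true outcome.
    return conditional_gap(y_hat, y, groups, edges)


def sufficiency(y_hat, y, groups, edges):
    # True-outcome distributions compared across groups, given the prediction.
    return conditional_gap(y, y_hat, groups, edges)
```

For example, `independence([0.1, 0.2, 0.9, 0.95], ["A", "A", "B", "B"], [0.0, 0.25, 0.5, 0.75, 1.0])` returns 1.0, the maximum possible gap, because the two groups' predictions fall in disjoint bins. Bounding every score in [0, 1] keeps the three notions on a common scale.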

Driver Fatigue Prediction using Randomly Activated Neural Networks for Smart Ridesharing Platforms

Apr 16, 2024

Abstract: Drivers on ridesharing platforms exhibit cognitive atrophy and fatigue as they accept ride offers over the course of the day, which can significantly affect the overall efficiency of the platform. In contrast to the current literature, which focuses primarily on modeling and learning drivers' preferences across different ride offers, this paper proposes a novel Dynamic Discounted Satisficing (DDS) heuristic to model and predict a driver's sequential ride decisions during a given shift. Building on the DDS heuristic, a novel stochastic neural network with random activations is proposed to model the heuristic and predict the final decision made by a given driver. The random activations in the network necessitate a novel training algorithm called Sampling-Based Back Propagation Through Time (SBPTT), in which gradients are computed for independent instances of the neural network (obtained by sampling the distribution of the activation threshold) and aggregated to update the network parameters. Using both simulation experiments and a real Chicago taxi dataset, this paper demonstrates the improved performance of the proposed approach compared to state-of-the-art methods.
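The abstract does not give the exact form of the DDS heuristic. As a hypothetical illustration of discounted satisficing with a random threshold, the sketch below assumes a driver stops accepting rides once the discounted sum of fares reaches an aspiration threshold, and draws that threshold from a normal distribution to estimate how likely the driver is to have stopped after each ride. Sampling the threshold loosely mirrors the idea of sampling an activation-threshold distribution; the discount factor, the Gaussian threshold and all function names are assumptions, and the sketch implements neither the neural network nor SBPTT.

```python
import random


def discounted_earnings(fares, gamma):
    """Cumulative discounted earnings after each ride: sum over k of gamma**k * fare_k."""
    total, out = 0.0, []
    for k, fare in enumerate(fares):
        total += (gamma ** k) * fare
        out.append(total)
    return out


def stop_index(fares, gamma, threshold):
    """First ride after which discounted earnings reach the threshold (None if never)."""
    for k, s in enumerate(discounted_earnings(fares, gamma)):
        if s >= threshold:
            return k
    return None


def stop_probabilities(fares, gamma, mu, sigma, n_samples=10_000, seed=0):
    """Monte Carlo estimate of P(driver has stopped by ride k) under a random threshold.

    Each sample draws threshold ~ Normal(mu, sigma); sigma must be positive.
    """
    rng = random.Random(seed)
    counts = [0] * len(fares)
    for _ in range(n_samples):
        k = stop_index(fares, gamma, rng.gauss(mu, sigma))
        if k is not None:
            # Once the driver stops at ride k, they remain stopped afterwards.
            for j in range(k, len(fares)):
                counts[j] += 1
    return [c / n_samples for c in counts]
```

A call such as `stop_probabilities([12, 8, 15, 10], gamma=0.9, mu=30, sigma=5)` yields a non-decreasing sequence of stopping probabilities; a smaller `gamma` makes later rides count less, which is one simple way to encode fatigue.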

Learning Social Fairness Preferences from Non-Expert Stakeholder Opinions in Kidney Placement

Apr 04, 2024

Abstract: Modern kidney placement incorporates several intelligent recommendation systems that exhibit social discrimination due to biases inherited from training data. Although initial attempts have been made in the literature to study algorithmic fairness in kidney placement, these methods replace true outcomes with surgeons' decisions because of the long delays involved in recording such outcomes reliably. However, replacing true outcomes with surgeons' decisions disregards expert stakeholders' biases as well as the social opinions of other stakeholders who do not possess medical expertise. This paper addresses the latter concern by designing a novel fairness feedback survey to evaluate an acceptance rate predictor (ARP) that predicts a kidney's acceptance rate in a given kidney-match pair. The survey is launched on Prolific, a crowdsourcing platform, and public opinions are collected from 85 anonymous crowd participants. A novel social fairness preference learning algorithm is proposed based on minimizing social feedback regret, computed using a novel logit-based fairness feedback model. The proposed model and learning algorithm are both validated using simulation experiments as well as Prolific data. Public preferences towards group fairness notions in the context of kidney placement are estimated and discussed in detail. The specific ARP tested in the Prolific survey was deemed fair by the participants.
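A logit-based feedback model can be sketched as follows, under stated assumptions: each survey response is reduced to a vector of disparity scores, one per fairness notion, and the probability that a participant labels the predictor fair decreases with a weighted sum of those disparities. The weights play the role of preferences over notions. This sketch fits them by maximizing the Bernoulli log-likelihood with plain gradient ascent, which is a swapped-in technique and not the paper's regret-minimization algorithm; all names are hypothetical.

```python
import math
import random


def p_fair(disparities, weights, bias):
    """Probability that a respondent labels the predictor 'fair' under a logit model.

    z = bias - sum_j w_j * d_j, so a larger weighted disparity lowers the probability.
    """
    z = bias - sum(w * d for w, d in zip(weights, disparities))
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_preferences(samples, n_notions, lr=1.0, epochs=300):
    """Fit (weights, bias) by full-batch gradient ascent on the log-likelihood.

    samples: list of (disparities, label) pairs, label 1 = 'fair', 0 = 'unfair'.
    """
    w, b = [0.0] * n_notions, 0.0
    n = len(samples)
    for _ in range(epochs):
        gw, gb = [0.0] * n_notions, 0.0
        for d, y in samples:
            err = y - p_fair(d, w, b)  # derivative of the log-likelihood w.r.t. z
            gb += err                  # dz/db = 1
            for j in range(n_notions):
                gw[j] -= err * d[j]    # dz/dw_j = -d_j
        b += lr * gb / n
        w = [wj + lr * g / n for wj, g in zip(w, gw)]
    return w, b
```

A larger fitted weight means that disparities under that notion push responses toward "unfair" more strongly, which is how the weights can be read as social preferences over notions.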

On Learning and Enforcing Latent Assessment Models using Binary Feedback from Human Auditors Regarding Black-Box Classifiers

Feb 16, 2022

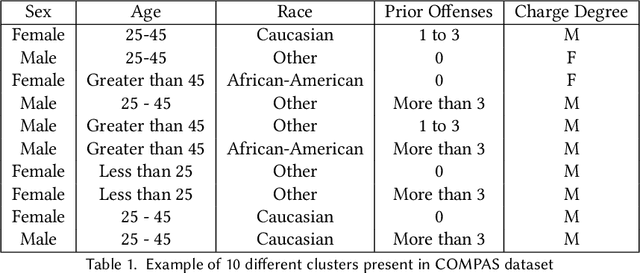

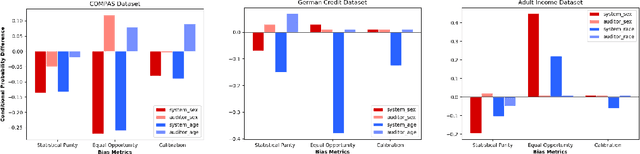

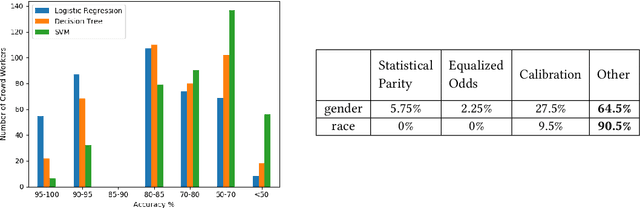

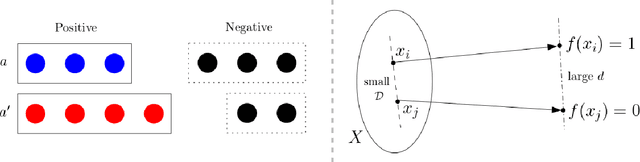

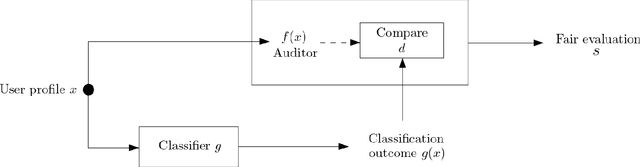

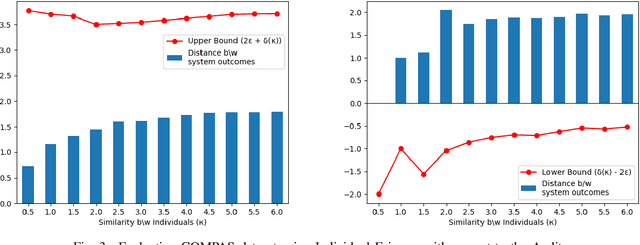

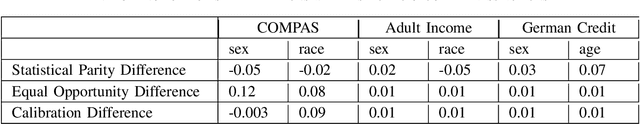

Abstract: The algorithmic fairness literature presents numerous mathematical notions and metrics, and also points to tradeoffs among them when attempting to satisfy some or all of them simultaneously. Furthermore, the contextual nature of fairness notions makes it difficult to automate bias evaluation in diverse algorithmic systems. Therefore, in this paper, we propose a novel model called the latent assessment model (LAM) to characterize binary feedback provided by human auditors, by assuming that the auditor compares the classifier's output to his or her own intrinsic judgment for each input. We prove that individual and group fairness notions are guaranteed as long as the auditor's intrinsic judgments inherently satisfy the fairness notion at hand and are relatively similar to the classifier's evaluations. We also demonstrate this relationship between LAM and traditional fairness notions on three well-known datasets, namely the COMPAS, German credit and Adult Census Income datasets. Furthermore, we derive the minimum number of feedback samples needed to obtain PAC learning guarantees when estimating LAM for black-box classifiers. These guarantees are also validated by training standard machine learning algorithms on real binary feedback elicited from 400 human auditors regarding COMPAS.
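The LAM assumption that an auditor compares the classifier's output with an intrinsic judgment for each input can be illustrated with a small Python sketch. Here the feedback is 1 when the two lie within a tolerance of each other (exact agreement for binary labels), which is an assumed concrete form. The sample-size helper is the textbook realizable-case PAC bound for a finite hypothesis class, included only for orientation; it is not the bound derived in the paper, and all names are hypothetical.

```python
import math


def lam_feedback(classifier_output, intrinsic_judgment, tolerance=0.0):
    """Binary auditor feedback under an assumed latent-assessment form.

    Returns 1 ('fair') when the classifier's output lies within `tolerance`
    of the auditor's intrinsic judgment for the same input, else 0.
    """
    return int(abs(classifier_output - intrinsic_judgment) <= tolerance)


def feedback_vector(outputs, judgments, tolerance=0.0):
    """Feedback for a batch of inputs, one bit per (output, judgment) pair."""
    return [lam_feedback(o, j, tolerance) for o, j in zip(outputs, judgments)]


def pac_sample_size(hypothesis_count, epsilon, delta):
    """Textbook realizable-case bound for a finite hypothesis class:
    m >= (ln|H| + ln(1/delta)) / epsilon. Not the bound derived in the paper."""
    return math.ceil((math.log(hypothesis_count) + math.log(1.0 / delta)) / epsilon)
```

With binary labels and `tolerance=0`, the feedback reduces to checking whether the auditor would have made the same decision as the classifier.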

Non-Comparative Fairness for Human-Auditing and Its Relation to Traditional Fairness Notions

Jun 29, 2021

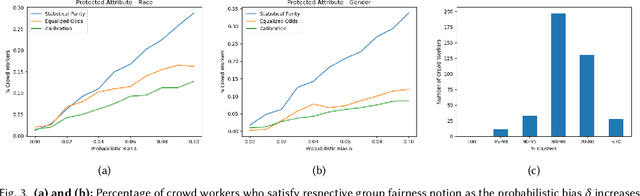

Abstract: Bias evaluation in machine-learning based services (MLS) based on traditional algorithmic fairness notions that rely on comparative principles is practically difficult, making it necessary to rely on human auditor feedback. However, despite undergoing rigorous training on various comparative fairness notions, human auditors are known to disagree on various aspects of fairness notions in practice, making it difficult to collect reliable feedback. This paper offers a paradigm shift in the domain of algorithmic fairness by proposing a new fairness notion based on the principle of non-comparative justice. In contrast to traditional fairness notions, where the outcomes of two individuals/groups are compared, our proposed notion compares the MLS' outcome with a desired outcome for each input. This desired outcome naturally describes a human auditor's expectation, and can easily be used to evaluate MLS on crowd-auditing platforms. We show that any MLS can be deemed fair from the perspective of comparative fairness (be it in terms of individual fairness, statistical parity, equal opportunity or calibration) if it is non-comparatively fair with respect to a fair auditor. We also show that the converse holds true in the context of individual fairness. Given that such an evaluation relies on the trustworthiness of the auditor, we also present an approach to identify fair and reliable auditors by estimating their biases with respect to a given set of sensitive attributes, as well as to quantify the uncertainty in the estimation of biases within a given MLS. Furthermore, all of the above results are validated on the COMPAS, German credit and Adult Census Income datasets.
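Non-comparative fairness can be checked input by input, as the abstract describes. The sketch below assumes binary outcomes; it computes per-group agreement between the MLS outcome and the auditor's desired outcome, and estimates an auditor's bias as the gap in favorable desired outcomes between two groups, with a normal-approximation standard error as a simple uncertainty measure. These statistics and all names are illustrative assumptions, not the paper's estimators.

```python
import math
from collections import defaultdict


def noncomparative_agreement(mls_outcomes, desired_outcomes, groups):
    """Per-group fraction of inputs where the MLS outcome equals the desired outcome."""
    hits, totals = defaultdict(int), defaultdict(int)
    for o, d, g in zip(mls_outcomes, desired_outcomes, groups):
        totals[g] += 1
        hits[g] += int(o == d)
    return {g: hits[g] / totals[g] for g in totals}


def auditor_parity_gap(desired_outcomes, groups, group_a, group_b):
    """Gap in the auditor's favorable-outcome rate between two groups (binary 0/1
    outcomes, both groups assumed present), plus a normal-approximation standard
    error for the difference of two proportions."""
    def rate(g):
        vals = [d for d, h in zip(desired_outcomes, groups) if h == g]
        return sum(vals) / len(vals), len(vals)

    pa, na = rate(group_a)
    pb, nb = rate(group_b)
    se = math.sqrt(pa * (1 - pa) / na + pb * (1 - pb) / nb)
    return pa - pb, se
```

An MLS that agrees with the auditor on every input scores 1.0 for every group; the parity gap then indicates whether that auditor's own expectations treat the two groups differently.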

On the Identification of Fair Auditors to Evaluate Recommender Systems based on a Novel Non-Comparative Fairness Notion

Sep 09, 2020

Abstract: Decision-support systems are information systems that support people's decisions in various applications, such as the judiciary, real-estate and banking sectors. Lately, these support systems have been found to be discriminatory in many practical deployments. In an attempt to evaluate and mitigate these biases, the algorithmic fairness literature has been built on notions of comparative justice, which rely primarily on comparing two or more individuals or groups within the society supported by such systems. However, such fairness notions are of limited use in identifying fair auditors who are hired to evaluate latent biases within decision-support systems. As a solution, we introduce a paradigm shift in algorithmic fairness by proposing a new fairness notion based on the principle of non-comparative justice. Assuming that the auditor makes fairness evaluations based on some (potentially unknown) desired properties of the decision-support system, the proposed fairness notion compares the system's outcome with the auditor's desired outcome. We show that the proposed fairness notion also provides guarantees in terms of comparative fairness notions by proving that any system can be deemed fair from the perspective of comparative fairness (e.g. individual fairness and statistical parity) if it is non-comparatively fair with respect to an auditor who has been deemed fair with respect to the same fairness notions. We also show that the converse holds true in the context of individual fairness. A brief discussion is also presented on how our fairness notion can be used to identify fair and reliable auditors, and how such auditors can be used to quantify biases in decision-support systems.
