Vanessa Gonzalez Duque

Can ultrasound confidence maps predict sonographers' labeling variability?

Aug 18, 2023

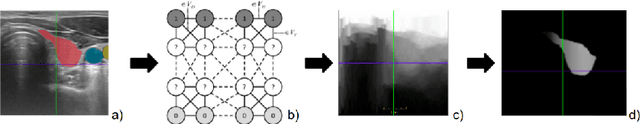

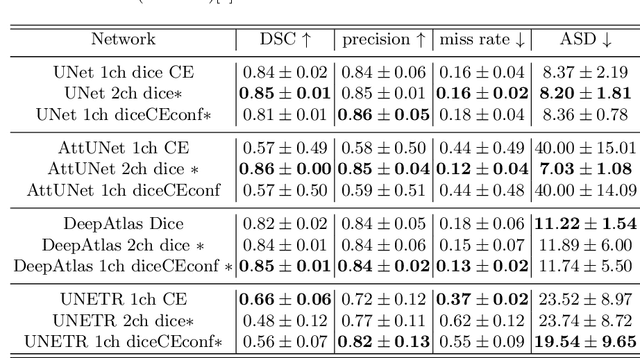

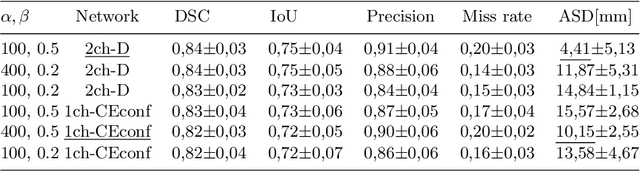

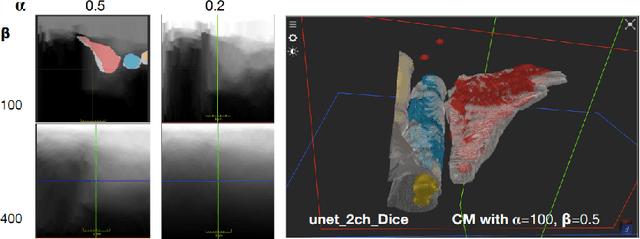

Abstract: Measuring cross-sectional areas in ultrasound images is a standard tool to evaluate disease progress or treatment response. The task is often addressed today with supervised deep-learning segmentation approaches, and existing solutions depend heavily on the quality of experts' annotations. However, the annotation quality in ultrasound is anisotropic and position-variant due to the inherent physical imaging principles, including attenuation, shadows, and missing boundaries, which are commonly exacerbated with depth. This work proposes a novel approach that guides ultrasound segmentation networks to account for sonographers' uncertainties and generate predictions with variability similar to the experts'. We claim that realistic variability can reduce overconfident predictions and improve physicians' acceptance of deep-learning cross-sectional segmentation solutions. Our method relies on ultrasound confidence maps (CMs), which provide a certainty value for each pixel at minimal computational overhead because they can be precalculated directly from the image. We show that there is a correlation between low values in the confidence maps and experts' label uncertainty. Therefore, we propose to give the confidence maps as additional information to the networks. We study the effect of the proposed use of ultrasound CMs in combination with four state-of-the-art neural networks and in two configurations: as a second input channel and as part of the loss. We evaluate our method on 3D ultrasound datasets of the thyroid and lower limb muscles. Our results show that ultrasound CMs increase the Dice score, improve the Hausdorff and Average Surface Distances, and decrease the number of isolated pixel predictions. Furthermore, our findings suggest that ultrasound CMs improve the penalization of uncertain areas in the ground truth data, thereby improving problematic interpolations. Our code and example data will be made public at https://github.com/IFL-CAMP/Confidence-segmentation.
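The two configurations named in the abstract (confidence map as a second input channel, and as part of the loss) can be illustrated with a minimal pure-Python sketch. The abstract does not specify the form of the loss term, so the per-pixel weighting below is an assumption, and the function names `stack_channels` and `confidence_weighted_bce` are hypothetical rather than taken from the authors' repository.

```python
import math

def stack_channels(image, confidence):
    """Pair each pixel intensity with its confidence value (two-channel input)."""
    return [[(px, c) for px, c in zip(img_row, cm_row)]
            for img_row, cm_row in zip(image, confidence)]

def confidence_weighted_bce(pred, target, confidence, eps=1e-7):
    """Binary cross-entropy with each pixel's term scaled by its confidence.

    Assumption: low-confidence pixels contribute less to the loss. The paper
    may combine the confidence map with the loss differently.
    """
    total, weight_sum = 0.0, 0.0
    for p_row, t_row, c_row in zip(pred, target, confidence):
        for p, t, c in zip(p_row, t_row, c_row):
            p = min(max(p, eps), 1.0 - eps)  # keep log() finite
            total += -c * (t * math.log(p) + (1 - t) * math.log(1 - p))
            weight_sum += c
    return total / max(weight_sum, eps)
```

With this weighting, a pixel whose confidence is zero has no influence on the loss, so a disagreement with the label in a shadowed region is not penalized.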

LOTUS: Learning to Optimize Task-based US representations

Jul 29, 2023

Abstract: Anatomical segmentation of organs in ultrasound images is essential to many clinical applications, particularly for diagnosis and monitoring. Existing deep neural networks require a large amount of labeled data for training in order to achieve clinically acceptable performance. Yet, in ultrasound, due to characteristic properties such as speckle and clutter, it is challenging to obtain accurate segmentation boundaries, and precise pixel-wise labeling of images is highly dependent on the expertise of physicians. In contrast, CT scans have higher resolution and improved contrast, easing organ identification. In this paper, we propose a novel approach for learning to optimize task-based ultrasound image representations. Given annotated CT segmentation maps as a simulation medium, we model acoustic propagation through tissue via ray-casting to generate ultrasound training data. Our ultrasound simulator is fully differentiable and learns to optimize the parameters for generating physics-based ultrasound images guided by the downstream segmentation task. In addition, we train an image adaptation network between real and simulated images to achieve simultaneous image synthesis and automatic segmentation on US images in an end-to-end training setting. The proposed method is evaluated on aorta and vessel segmentation tasks and shows promising quantitative results. Furthermore, we also present qualitative results of optimized image representations on other organs.
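To give a rough idea of what ray-casting through a labeled medium involves, the following is a simplified one-dimensional sketch in Python. It is not the LOTUS simulator: it is not differentiable, it only tracks one-way energy loss, and the function names and impedance values are hypothetical. The only physics used is the standard intensity reflection coefficient at normal incidence, R = ((Z2 - Z1) / (Z2 + Z1))^2.

```python
import math

def reflection_coefficient(z1, z2):
    """Intensity reflection coefficient at a flat interface, normal incidence."""
    return ((z2 - z1) / (z2 + z1)) ** 2

def cast_ray(impedances, attenuation_per_sample=0.0):
    """Return the echo intensity generated at each sample along one ray.

    impedances: acoustic impedance per sample, e.g. looked up from a
    segmentation label (illustrative values, not from the paper).
    """
    energy = 1.0
    echoes = [0.0]  # no interface before the first sample
    for z_prev, z_next in zip(impedances, impedances[1:]):
        energy *= math.exp(-attenuation_per_sample)  # loss within the tissue
        r = reflection_coefficient(z_prev, z_next)
        echoes.append(energy * r)                    # part reflected back
        energy *= (1.0 - r)                          # part transmitted onward
    return echoes
```

A strong impedance jump early on the ray leaves little energy for deeper samples, which is how this kind of model produces acoustic shadows.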

IFSS-Net: Interactive Few-Shot Siamese Network for Faster Muscles Segmentation and Propagation in 3-D Freehand Ultrasound

Nov 26, 2020

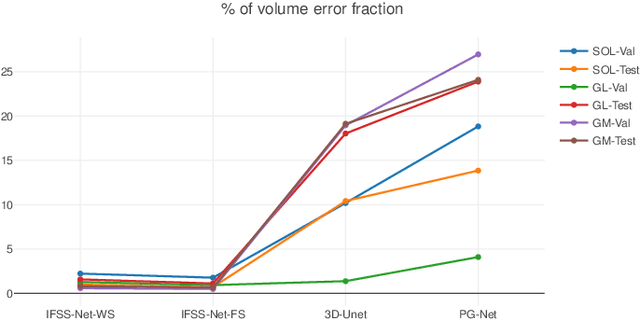

Abstract: We present an accurate, fast and efficient method for segmentation and muscle mask propagation in 3D freehand ultrasound data, towards accurate volume quantification. To this end, we propose a deep Siamese 3D Encoder-Decoder network that captures the evolution of the muscle appearance and shape for contiguous slices and uses it to propagate a reference mask annotated by a clinical expert. To handle longer changes of the muscle shape over the entire volume and to provide an accurate propagation, we devised a Bidirectional Long Short Term Memory module. To train our model with a minimal amount of training samples, we propose a strategy to combine learning from few annotated 2D ultrasound slices with sequential pseudo-labeling of the unannotated slices. To promote few-shot learning, we propose a decremental update of the objective function to guide the model convergence in the absence of large amounts of annotated data. Finally, to handle the class imbalance between foreground and background muscle pixels, we propose a parametric Tversky loss function that learns to adaptively penalize false positives and false negatives. We validate our approach for the segmentation, label propagation, and volume computation of the three lower-limb muscles on a dataset of 44 subjects. We achieve a Dice score coefficient of over $95~\%$ and a small fraction of error of $1.6035~\pm~0.587$.
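For readers unfamiliar with the Tversky loss mentioned in the abstract, here is a minimal Python sketch of the standard, fixed-weight version: 1 - TP / (TP + alpha * FP + beta * FN). In the paper the weights are learned adaptively; here `alpha` and `beta` are plain arguments, which is a simplification, and the function name is hypothetical.

```python
def tversky_loss(pred, target, alpha=0.5, beta=0.5, eps=1e-7):
    """Tversky loss over flat sequences of probabilities and binary labels.

    alpha weights false positives and beta weights false negatives;
    alpha = beta = 0.5 reduces to the Dice loss.
    """
    tp = sum(p * t for p, t in zip(pred, target))
    fp = sum(p * (1 - t) for p, t in zip(pred, target))
    fn = sum((1 - p) * t for p, t in zip(pred, target))
    return 1.0 - (tp + eps) / (tp + alpha * fp + beta * fn + eps)
```

Raising `alpha` makes an over-segmented prediction more costly, while raising `beta` penalizes missed foreground pixels, which is the trade-off a learned parametrization can adjust during training.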
