Van Minh Nguyen

Highly Efficient and Effective LLMs with Multi-Boolean Architectures

May 28, 2025

Abstract: Weight binarization has emerged as a promising strategy to drastically reduce the complexity of large language models (LLMs). It is mainly classified into two approaches: post-training binarization and finetuning with training-aware binarization methods. The first approach, while having low complexity, leads to significant loss of information from the original LLMs, resulting in poor performance. The second approach, on the other hand, relies heavily on full-precision latent weights for gradient approximation of binary weights, which not only remains suboptimal but also introduces substantial complexity. In this paper, we introduce a novel framework that effectively transforms LLMs into multi-kernel Boolean parameters and, for the first time, finetunes them directly in the Boolean domain, eliminating the need for expensive latent weights. This significantly reduces complexity during both finetuning and inference. Through extensive and insightful experiments across a wide range of LLMs, we demonstrate that our method outperforms recent ultra low-bit quantization and binarization methods.

SatSplatYOLO: 3D Gaussian Splatting-based Virtual Object Detection Ensembles for Satellite Feature Recognition

Jun 04, 2024

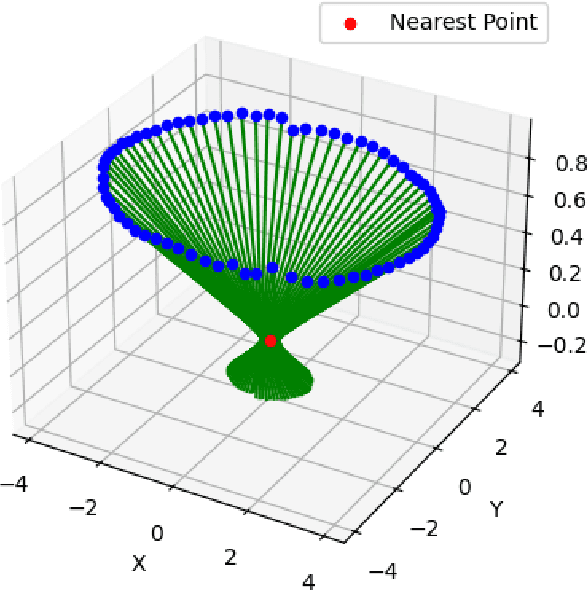

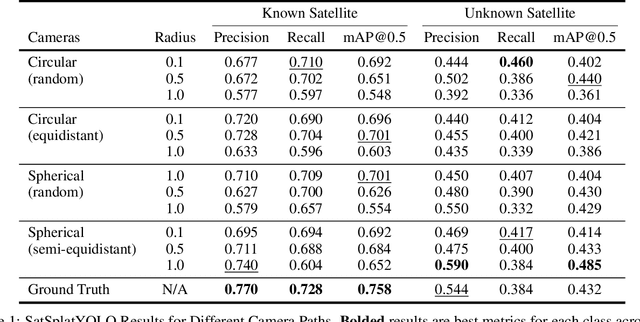

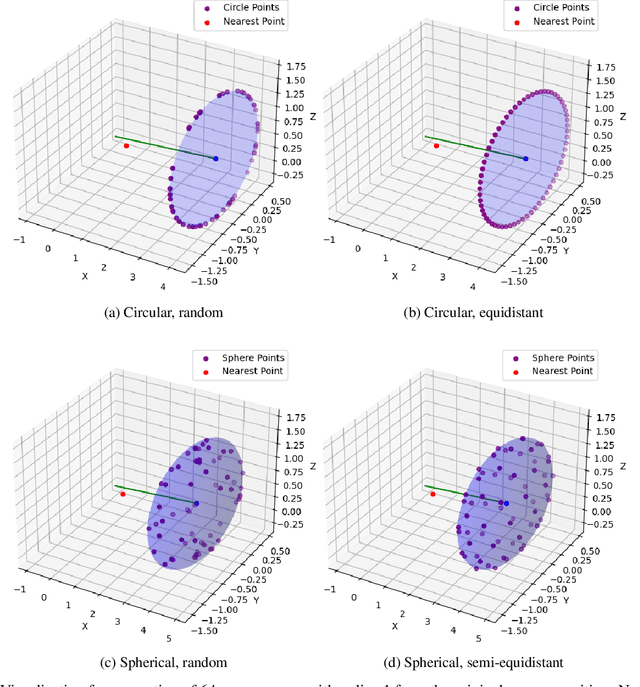

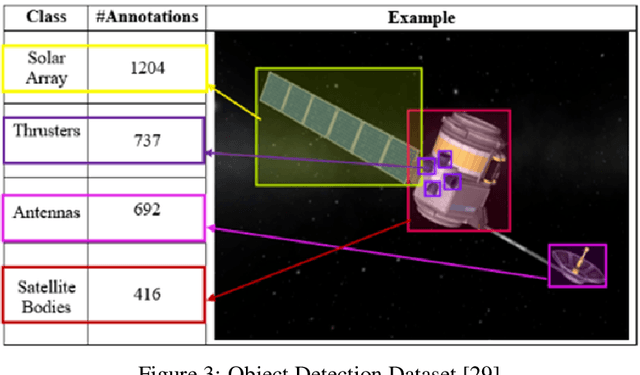

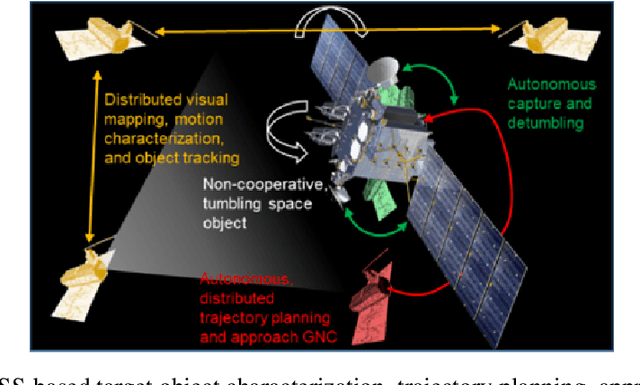

Abstract: The accelerating deployment of spacecraft in orbit has generated interest in on-orbit servicing (OOS), inspection of spacecraft, and active debris removal (ADR). Such missions require precise rendezvous and proximity operations in the vicinity of non-cooperative, possibly unknown, resident space objects. Safety concerns with manned missions and lag times with ground-based control necessitate complete autonomy. In this article, we present an approach for mapping geometries and high-confidence detection of components of unknown, non-cooperative satellites on orbit. We implement accelerated 3D Gaussian splatting to learn a 3D representation of the satellite, render virtual views of the target, and ensemble the YOLOv5 object detector over the virtual views, resulting in reliable, accurate, and precise satellite component detections. The full pipeline is capable of running on-board and stands to enable downstream machine intelligence tasks necessary for autonomous guidance, navigation, and control tasks.

BOLD: Boolean Logic Deep Learning

May 25, 2024

Abstract: Deep learning is computationally intensive, with significant efforts focused on reducing arithmetic complexity, particularly regarding energy consumption dominated by data movement. While existing literature emphasizes inference, training is considerably more resource-intensive. This paper proposes a novel mathematical principle by introducing the notion of Boolean variation such that neurons made of Boolean weights and inputs can be trained -- for the first time -- efficiently in the Boolean domain using Boolean logic instead of gradient descent and real arithmetic. We explore its convergence, conduct extensive experimental benchmarking, and provide consistent complexity evaluation by considering chip architecture, memory hierarchy, dataflow, and arithmetic precision. Our approach achieves baseline full-precision accuracy in ImageNet classification and surpasses state-of-the-art results in semantic segmentation, with notable performance in image super-resolution and in natural language understanding with transformer-based models. Moreover, it significantly reduces energy consumption during both training and inference.

ReALLM: A general framework for LLM compression and fine-tuning

May 21, 2024

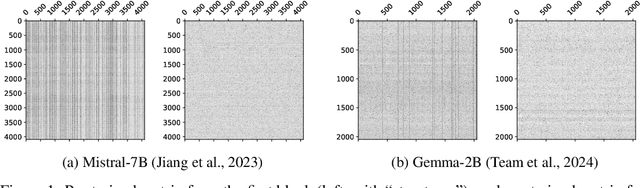

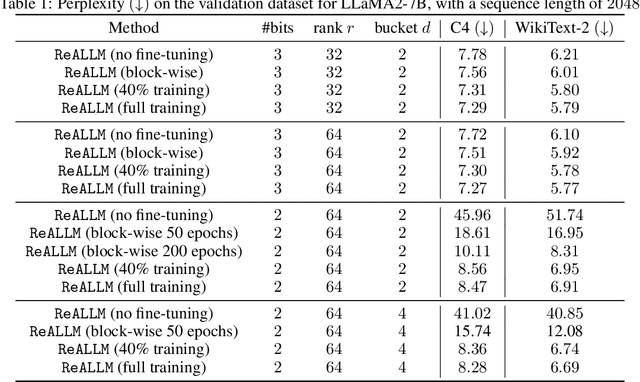

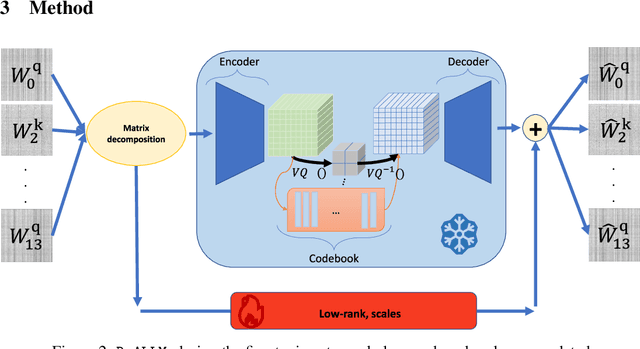

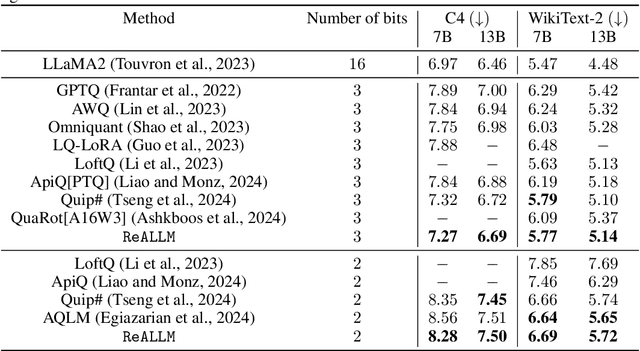

Abstract: We introduce ReALLM, a novel approach for compression and memory-efficient adaptation of pre-trained language models that encompasses most of the post-training quantization and fine-tuning methods for a budget of <4 bits. Pre-trained matrices are decomposed into a high-precision low-rank component and a vector-quantized latent representation (using an autoencoder). During the fine-tuning step, only the low-rank components are updated. Our results show that pre-trained matrices exhibit different patterns. ReALLM adapts the shape of the encoder (small/large embedding, high/low bit VQ, etc.) to each matrix. ReALLM proposes to represent each matrix with a small embedding on $b$ bits and a neural decoder model $\mathcal{D}_\phi$ with its weights on $b_\phi$ bits. The decompression of a matrix requires only one embedding and a single forward pass with the decoder. Our weight-only quantization algorithm yields the best results on language generation tasks (C4 and WikiText-2) for a budget of $3$ bits without any training. With a budget of $2$ bits, ReALLM achieves state-of-the-art performance after fine-tuning on a small calibration dataset.
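The decomposition described in this abstract can be illustrated with a rough sketch. This is not the ReALLM algorithm: a plain uniform scalar quantizer stands in for the autoencoder-based vector quantization, and the order of operations (quantize first, then fit a low-rank correction to the residual by truncated SVD) is an assumption. The names `uniform_quantize` and `decompose` are hypothetical.

```python
import numpy as np

def uniform_quantize(x, bits):
    """Round x onto 2**bits evenly spaced levels spanning [x.min(), x.max()].

    Stand-in for the paper's vector quantizer (assumption, not the real method).
    """
    levels = 2 ** bits - 1
    lo, hi = x.min(), x.max()
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = np.round((x - lo) / scale)
    return codes * scale + lo

def decompose(w, rank, bits):
    """Split w into a low-bit part q and a rank-`rank` correction a @ b."""
    q = uniform_quantize(w, bits)
    # Truncated SVD gives the best rank-`rank` fit to the quantization residual.
    u, s, vt = np.linalg.svd(w - q, full_matrices=False)
    a = u[:, :rank] * s[:rank]   # shape (rows, rank), kept in high precision
    b = vt[:rank, :]             # shape (rank, cols), kept in high precision
    return q, a, b

rng = np.random.default_rng(0)
w = rng.standard_normal((64, 32))          # toy stand-in for a pre-trained matrix
q, a, b = decompose(w, rank=4, bits=3)

err_q = np.linalg.norm(w - q)              # error of the quantized part alone
err_full = np.linalg.norm(w - (q + a @ b)) # error with the low-rank correction
```

In a fine-tuning setup of this shape, `q` would stay frozen and only `a` and `b` would receive updates, which mirrors the abstract's statement that only the low-rank components are updated.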

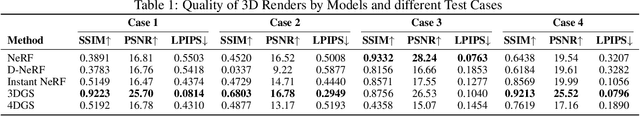

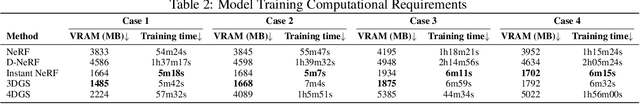

Characterizing Satellite Geometry via Accelerated 3D Gaussian Splatting

Jan 05, 2024

Abstract: The accelerating deployment of spacecraft in orbit has generated interest in on-orbit servicing (OOS), inspection of spacecraft, and active debris removal (ADR). Such missions require precise rendezvous and proximity operations in the vicinity of non-cooperative, possibly unknown, resident space objects. Safety concerns with manned missions and lag times with ground-based control necessitate complete autonomy. This requires robust characterization of the target's geometry. In this article, we present an approach for mapping geometries of satellites on orbit based on 3D Gaussian Splatting that can run on computing resources available on current spaceflight hardware. We demonstrate model training and 3D rendering performance on a hardware-in-the-loop satellite mock-up under several realistic lighting and motion conditions. Our model is shown to be capable of training on-board and rendering higher quality novel views of an unknown satellite nearly 2 orders of magnitude faster than previous NeRF-based algorithms. Such on-board capabilities are critical to enable downstream machine intelligence tasks necessary for autonomous guidance, navigation, and control tasks.

Conceptualizing Suicidal Behavior: Utilizing Explanations of Predicted Outcomes to Analyze Longitudinal Social Media Data

Dec 30, 2023

Abstract: The COVID-19 pandemic has escalated mental health crises worldwide, with social isolation and economic instability contributing to a rise in suicidal behavior. Suicide can result from social factors such as shame, abuse, abandonment, and mental health conditions like depression, Post-Traumatic Stress Disorder (PTSD), Attention-Deficit/Hyperactivity Disorder (ADHD), anxiety disorders, and bipolar disorders. As these conditions develop, signs of suicidal ideation may manifest in social media interactions. Analyzing social media data using artificial intelligence (AI) techniques can help identify patterns of suicidal behavior, providing invaluable insights for suicide prevention agencies, professionals, and broader community awareness initiatives. Machine learning algorithms for this purpose require large volumes of accurately labeled data. Previous research has not fully explored the potential of incorporating explanations in analyzing and labeling longitudinal social media data. In this study, we employed a model explanation method, Layer Integrated Gradients, on top of a fine-tuned state-of-the-art language model, to assign each token from Reddit users' posts an attribution score for predicting suicidal ideation. By extracting and analyzing attributions of tokens from the data, we propose a methodology for preliminary screening of social media posts for suicidal ideation without using large language models during inference.

Boolean Variation and Boolean Logic BackPropagation

Nov 13, 2023

Abstract: The notion of variation is introduced for the Boolean set, and based on it a Boolean logic backpropagation principle is developed. Using this concept, deep models can be built with weights and activations being Boolean numbers and operated with Boolean logic instead of real arithmetic. In particular, Boolean deep models can be trained directly in the Boolean domain without latent weights. No gradient but logic is synthesized and backpropagated through layers.
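To make the idea of a neuron with Boolean weights and activations concrete, here is a minimal forward-pass sketch. It is an illustration under stated assumptions, not the paper's construction: the choice of XNOR as the neuron logic and of a majority threshold as the activation are both assumed, the function names are hypothetical, and the Boolean variation and logic backpropagation rule used for training are not shown.

```python
def xnor(a: bool, b: bool) -> bool:
    """True when the input bit and the weight bit agree."""
    return a == b

def boolean_neuron(inputs, weights):
    """Output True when agreements outnumber disagreements (assumed activation).

    The count plays the role of a hardware popcount over the XNOR results.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    agreements = sum(xnor(x, w) for x, w in zip(inputs, weights))
    return 2 * agreements > len(inputs)

x = [True, False, True, True]
w = [True, True, True, False]
out = boolean_neuron(x, w)   # 2 of 4 positions agree, so no strict majority
```

Every stored value here is a single bit, which is the property the abstract relies on when it contrasts Boolean logic with real arithmetic.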

FAVAS: Federated AVeraging with ASynchronous clients

May 25, 2023

Abstract: In this paper, we propose a novel centralized Asynchronous Federated Learning (FL) framework, FAVAS, for training Deep Neural Networks (DNNs) in resource-constrained environments. Despite its popularity, "classical" federated learning faces the increasingly difficult task of scaling synchronous communication over large wireless networks. Moreover, clients typically have different computing resources and therefore different computing speeds, which can lead to a significant bias (in favor of "fast" clients) when the updates are asynchronous. Therefore, practical deployment of FL requires handling users with strongly varying computing speeds in communication- and resource-constrained settings. We provide convergence guarantees for FAVAS in a smooth, non-convex environment and carefully compare the obtained convergence guarantees with existing bounds, when they are available. Experimental results show that the FAVAS algorithm outperforms current methods on standard benchmarks.

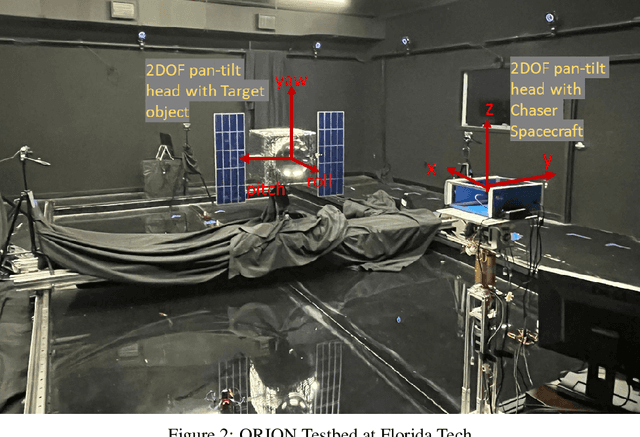

3D Reconstruction of Non-cooperative Resident Space Objects using Instant NGP-accelerated NeRF and D-NeRF

Feb 02, 2023

Abstract: The proliferation of non-cooperative resident space objects (RSOs) in orbit has spurred the demand for active space debris removal, on-orbit servicing (OOS), classification, and functionality identification of these RSOs. Recent advances in computer vision have enabled high-definition 3D modeling of objects based on a set of 2D images captured from different viewing angles. This work adapts Instant NeRF and D-NeRF, variations of the neural radiance field (NeRF) algorithm, to the problem of mapping RSOs in orbit for the purposes of functionality identification and assisting with OOS. The algorithms are evaluated for 3D reconstruction quality and hardware requirements using datasets of images of a spacecraft mock-up taken under two different lighting and motion conditions at the Orbital Robotic Interaction, On-Orbit Servicing and Navigation (ORION) Laboratory at Florida Institute of Technology. Instant NeRF is shown to learn high-fidelity 3D models at a computational cost that makes training on on-board computers feasible.
