Trupti Mahendrakar

SpY: A Context-Based Approach to Spacecraft Component Detection

Jun 26, 2024

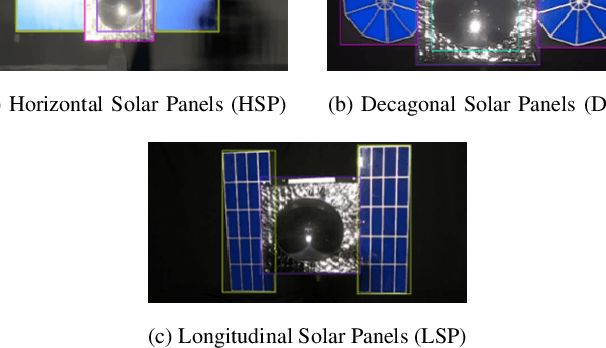

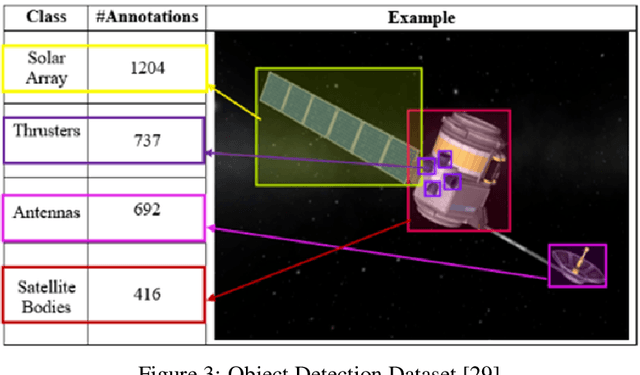

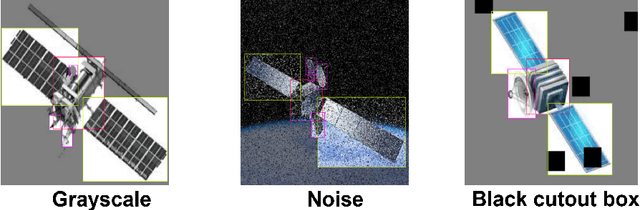

Abstract: This paper focuses on autonomously characterizing components such as solar panels, body panels, antennas, and thrusters of an unknown resident space object (RSO) using a camera feed to aid autonomous on-orbit servicing (OOS) and active debris removal. Significant research has been conducted in this area using convolutional neural networks (CNNs). While CNNs are powerful at learning patterns and performing object detection, they struggle with missed detections and misclassifications in environments different from the training data, making them unreliable for safety-critical, high-stakes missions like OOS. Additionally, failures exhibited by CNNs are often easily rectifiable by humans using commonsense reasoning and contextual knowledge. Embedding such reasoning in an object detector could improve detection accuracy. To validate this hypothesis, this paper presents an end-to-end object detector called SpaceYOLOv2 (SpY), which leverages the generalizability of CNNs while incorporating contextual knowledge using traditional computer vision techniques. SpY consists of two main components: a shape detector and the SpaceYOLO classifier (SYC). The shape detector uses CNNs to detect primitive shapes of RSOs, and SYC associates these shapes with contextual knowledge, such as color and texture, to classify them as spacecraft components, or as "unknown" if the detected shape is uncertain. SpY's modular architecture allows customizable usage of contextual knowledge to improve detection performance, or the use of SYC as a secondary fail-safe classifier alongside an existing spacecraft component detector. Performance evaluations on hardware-in-the-loop images of a mock-up spacecraft demonstrate that SpY is accurate and that an ensemble of SpY with YOLOv5 trained for satellite component detection improved recall by 23.4%, demonstrating enhanced safety for vision-based navigation tasks.

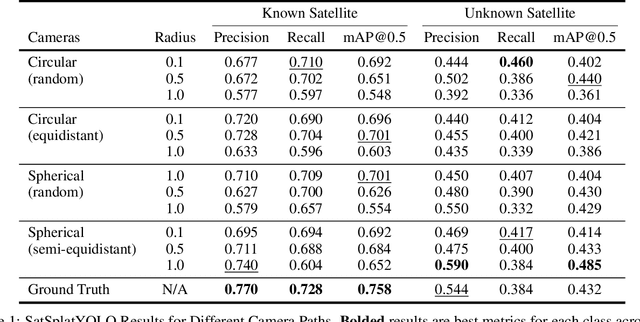

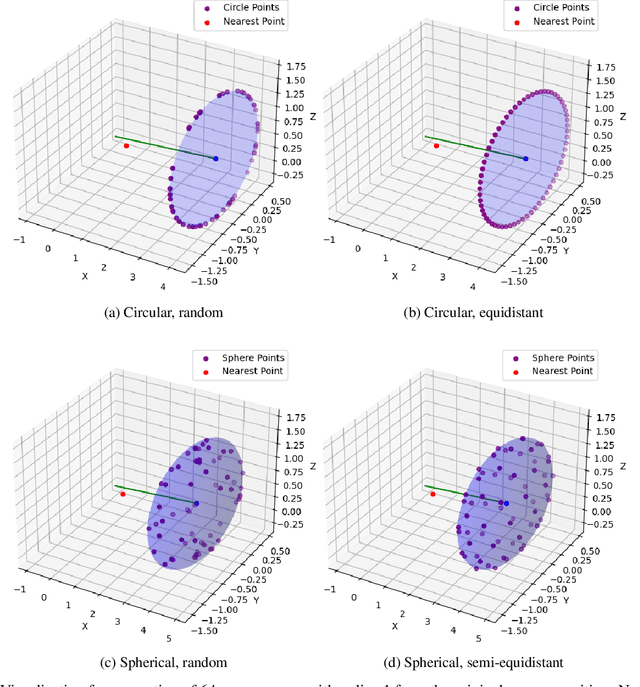

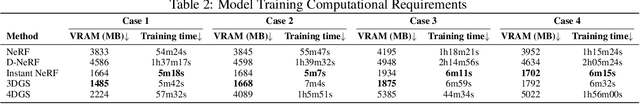

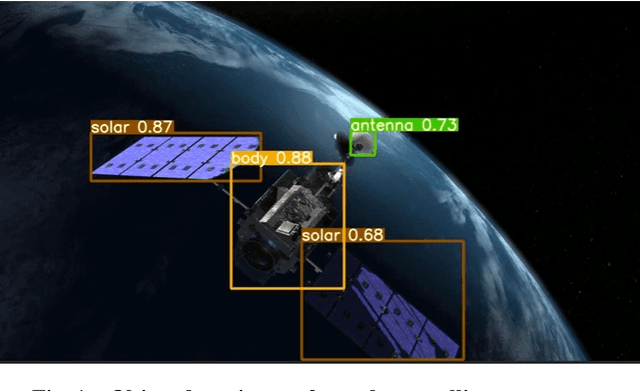

SatSplatYOLO: 3D Gaussian Splatting-based Virtual Object Detection Ensembles for Satellite Feature Recognition

Jun 04, 2024

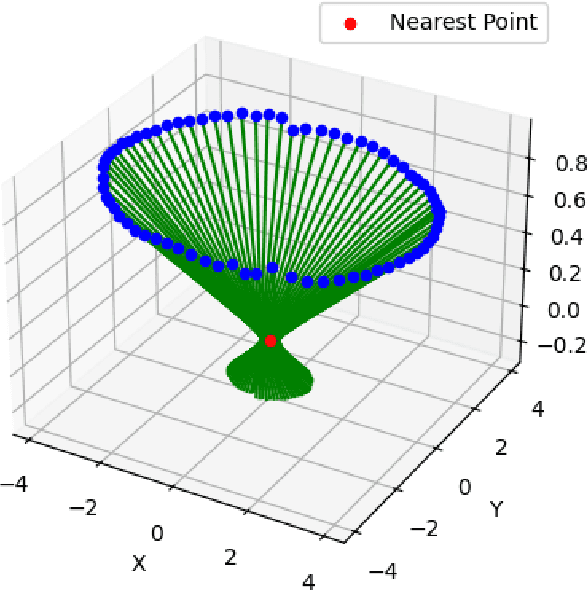

Abstract: On-orbit servicing (OOS), inspection of spacecraft, and active debris removal (ADR) missions require precise rendezvous and proximity operations in the vicinity of non-cooperative, possibly unknown, resident space objects. Safety concerns with manned missions and lag times with ground-based control necessitate complete autonomy. In this article, we present an approach for mapping geometries and high-confidence detection of components of unknown, non-cooperative satellites on orbit. We implement accelerated 3D Gaussian splatting to learn a 3D representation of the satellite, render virtual views of the target, and ensemble the YOLOv5 object detector over the virtual views, resulting in reliable, accurate, and precise satellite component detections. The full pipeline is capable of running on-board and stands to enable downstream machine intelligence tasks necessary for autonomous guidance, navigation, and control.

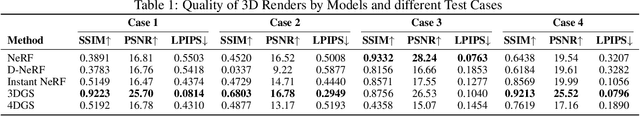

Characterizing Satellite Geometry via Accelerated 3D Gaussian Splatting

Jan 05, 2024

Abstract: The accelerating deployment of spacecraft in orbit has generated interest in on-orbit servicing (OOS), inspection of spacecraft, and active debris removal (ADR). Such missions require precise rendezvous and proximity operations in the vicinity of non-cooperative, possibly unknown, resident space objects. Safety concerns with manned missions and lag times with ground-based control necessitate complete autonomy. This requires robust characterization of the target's geometry. In this article, we present an approach for mapping geometries of satellites on orbit based on 3D Gaussian Splatting that can run on computing resources available on current spaceflight hardware. We demonstrate model training and 3D rendering performance on a hardware-in-the-loop satellite mock-up under several realistic lighting and motion conditions. Our model is shown to be capable of training on-board and rendering higher quality novel views of an unknown satellite nearly 2 orders of magnitude faster than previous NeRF-based algorithms. Such on-board capabilities are critical to enable downstream machine intelligence tasks necessary for autonomous guidance, navigation, and control.

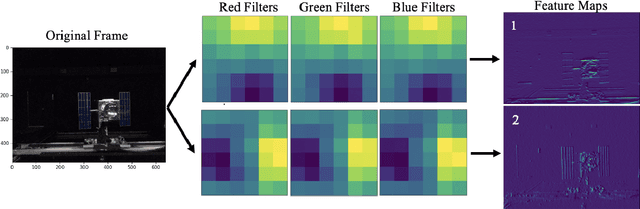

Taking a PEEK into YOLOv5 for Satellite Component Recognition via Entropy-based Visual Explanations

Nov 03, 2023

Abstract: The escalating risk of collisions and the accumulation of space debris in Low Earth Orbit (LEO) have become a critical concern due to the ever-increasing number of spacecraft. Addressing this crisis, especially in dealing with non-cooperative and unidentified space debris, is of paramount importance. This paper contributes to efforts in enabling autonomous swarms of small chaser satellites for target geometry determination and safe flight trajectory planning for proximity operations in LEO. Our research explores on-orbit use of the You Only Look Once v5 (YOLOv5) object detection model trained to detect satellite components. While this model has shown promise, its inherent lack of interpretability hinders human understanding, a critical aspect of validating algorithms for use in safety-critical missions. To analyze the decision processes, we introduce Probabilistic Explanations for Entropic Knowledge extraction (PEEK), a method that utilizes information theoretic analysis of the latent representations within the hidden layers of the model. Through both synthetic and hardware-in-the-loop experiments, PEEK illuminates the decision-making processes of the model, helping identify its strengths, limitations, and biases.
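As a rough illustration of what an information theoretic analysis of hidden-layer activations can look like, the sketch below computes the Shannon entropy of the channel activations at a single spatial location of a feature map. It is not the PEEK formulation from the paper: the softmax normalization and the function name `channel_entropy` are assumptions made for this example only.

```python
import math
from typing import Sequence


def channel_entropy(activations: Sequence[float]) -> float:
    """Shannon entropy (in bits) of one pixel's channel activations.

    Assumption for illustration: the activations are turned into a
    probability distribution with a softmax over the channel axis.
    """
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(activations)
    weights = [math.exp(a - peak) for a in activations]
    total = sum(weights)
    probs = [w / total for w in weights]
    # Skip zero-probability terms, which contribute nothing to the entropy.
    return -sum(p * math.log2(p) for p in probs if p > 0.0)


# Four equally active channels give the maximum entropy of 2 bits;
# a single dominant channel gives an entropy close to 0.
uniform = channel_entropy([0.0, 0.0, 0.0, 0.0])
peaked = channel_entropy([50.0, 0.0, 0.0, 0.0])
```

Evaluating such a function at every location of a feature map would yield a heat map that can be overlaid on the input image, which is one common way to visualize where a layer's response is concentrated or diffuse.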

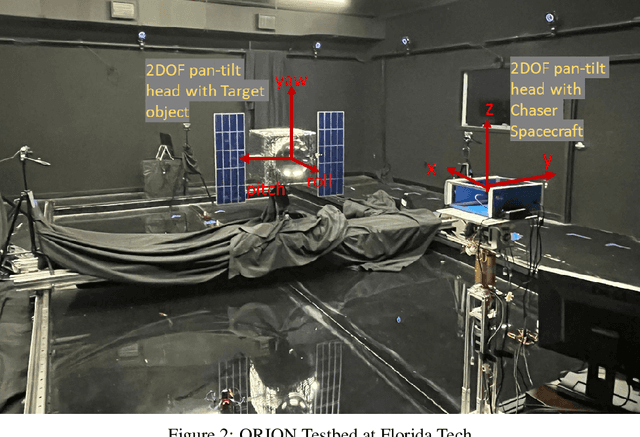

3D Reconstruction of Non-cooperative Resident Space Objects using Instant NGP-accelerated NeRF and D-NeRF

Feb 02, 2023

Abstract: The proliferation of non-cooperative resident space objects (RSOs) in orbit has spurred the demand for active space debris removal, on-orbit servicing (OOS), classification, and functionality identification of these RSOs. Recent advances in computer vision have enabled high-definition 3D modeling of objects based on a set of 2D images captured from different viewing angles. This work adapts Instant NeRF and D-NeRF, variations of the neural radiance field (NeRF) algorithm, to the problem of mapping RSOs in orbit for the purposes of functionality identification and assisting with OOS. The algorithms are evaluated for 3D reconstruction quality and hardware requirements using datasets of images of a spacecraft mock-up taken under two different lighting and motion conditions at the Orbital Robotic Interaction, On-Orbit Servicing and Navigation (ORION) Laboratory at Florida Institute of Technology. Instant NeRF is shown to learn high-fidelity 3D models at a computational cost low enough that training on on-board computers is feasible.

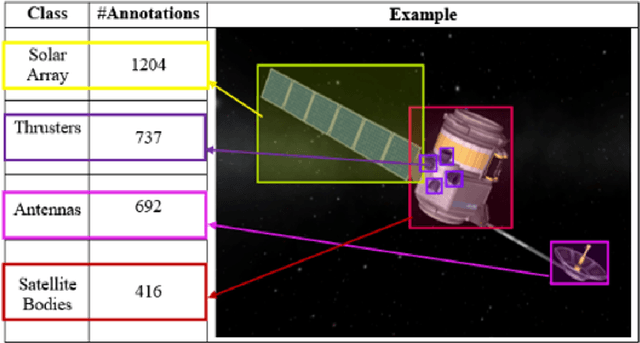

SpaceYOLO: A Human-Inspired Model for Real-time, On-board Spacecraft Feature Detection

Feb 02, 2023

Abstract: The rapid proliferation of non-cooperative spacecraft and space debris in orbit has precipitated a surging demand for on-orbit servicing and space debris removal at a scale that only autonomous missions can address, but the prerequisite autonomous navigation and flightpath planning to safely capture an unknown, non-cooperative, tumbling space object is an open problem. This requires algorithms for real-time, automated spacecraft feature recognition to pinpoint the locations of collision hazards (e.g. solar panels or antennas) and safe docking features (e.g. satellite bodies or thrusters) so safe, effective flightpaths can be planned. Prior work in this area reveals that the performance of computer vision models is highly dependent on the training dataset and its coverage of scenarios visually similar to the real scenarios that occur in deployment. Hence, the algorithm may have degraded performance under certain lighting conditions even when the rendezvous maneuver conditions of the chaser to the target spacecraft are the same. This work delves into how humans perform these tasks through a survey of how aerospace engineering students experienced with spacecraft shapes and components recognize features of three spacecraft (Landsat, Envisat, and Anik) and the orbiter Mir. The survey reveals that the most common patterns in the human detection process were to consider the shape and texture of the features: antennas, solar panels, thrusters, and satellite bodies. This work introduces a novel algorithm, SpaceYOLO, which fuses a state-of-the-art object detector, YOLOv5, with a separate neural network based on these human-inspired decision processes exploiting shape and texture. The performance of SpaceYOLO in autonomous spacecraft detection is compared to ordinary YOLOv5 in hardware-in-the-loop experiments under different lighting and chaser maneuver conditions at the ORION Laboratory at Florida Tech.

Performance Study of YOLOv5 and Faster R-CNN for Autonomous Navigation around Non-Cooperative Targets

Jan 22, 2023

Abstract: Autonomous navigation and path-planning around non-cooperative space objects is an enabling technology for on-orbit servicing and space debris removal systems. The navigation task includes the determination of target object motion, the identification of target object features suitable for grasping, and the identification of collision hazards and other keep-out zones. Given this knowledge, chaser spacecraft can be guided towards capture locations without damaging the target object or unduly disrupting the operations of a servicing target by covering up solar arrays or communication antennas. One way to autonomously achieve target identification, characterization, and feature recognition is by use of artificial intelligence algorithms. This paper discusses how the combination of cameras and machine learning algorithms can achieve the relative navigation task. The performance of two deep learning-based object detection algorithms, Faster Region-based Convolutional Neural Networks (R-CNN) and You Only Look Once (YOLOv5), is tested using experimental data obtained in formation flight simulations in the ORION Lab at Florida Institute of Technology. The simulation scenarios vary the yaw motion of the target object, the chaser approach trajectory, and the lighting conditions in order to test the algorithms in a wide range of realistic and performance-limiting situations. The data analyzed include the mean average precision metrics used to compare the performance of the object detectors. The paper discusses the path to implementing the feature recognition algorithms and integrating them into the spacecraft Guidance, Navigation, and Control system.
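Mean average precision for object detectors is built on the intersection over union (IoU) between a predicted box and a ground-truth box: a prediction counts as a true positive only when its IoU exceeds a chosen threshold. The minimal sketch below shows that building block. The `(x_min, y_min, x_max, y_max)` box layout and the function name `iou` are assumptions for this example, not details taken from the paper.

```python
from typing import Tuple

# Assumed box layout for this sketch: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned bounding boxes."""
    # Width and height of the overlap region, clamped at zero when disjoint.
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0.0 else 0.0


# Two 2x2 boxes offset by one unit overlap in a 1x1 square: IoU = 1/7.
overlap = iou((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
```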

Resource-constrained FPGA Design for Satellite Component Feature Extraction

Jan 22, 2023

Abstract: The effective use of computer vision and machine learning for on-orbit applications has been hampered by limited computing capabilities, and therefore limited performance. While embedded systems utilizing ARM processors have been shown to meet acceptable but low performance standards, the recent availability of larger space-grade field programmable gate arrays (FPGAs) shows potential to exceed the performance of microcomputer systems. This work proposes the use of a neural network-based object detection algorithm that can be deployed on a comparably resource-constrained FPGA to automatically detect components of non-cooperative satellites on orbit. Hardware-in-the-loop experiments were performed on the ORION Maneuver Kinematics Simulator at Florida Tech to compare the performance of the new model deployed on a small, resource-constrained FPGA to an equivalent algorithm on a microcomputer system. Results show the FPGA implementation increases throughput and decreases latency while maintaining comparable accuracy. These findings suggest future missions should consider deploying computer vision algorithms on space-grade FPGAs.

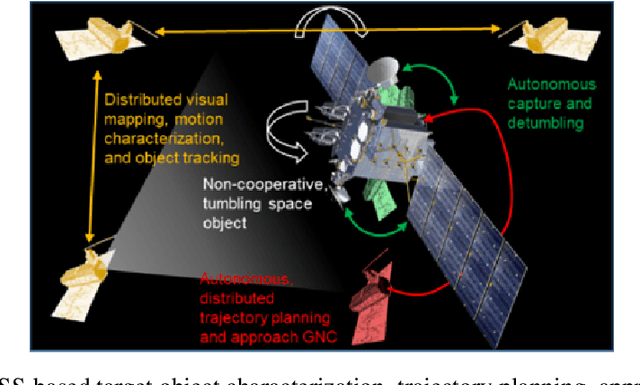

Autonomous Rendezvous with Non-cooperative Target Objects with Swarm Chasers and Observers

Jan 22, 2023

Abstract: Space debris is on the rise due to the increasing demand for spacecraft for communication, navigation, and other applications. The Space Surveillance Network (SSN) tracks over 27,000 large pieces of debris and estimates the number of small, untrackable fragments at over 1,00,000. To control the growth of debris, the formation of further debris must be reduced. Some solutions include deorbiting larger non-cooperative resident space objects (RSOs) or servicing satellites in orbit. Both require rendezvous with RSOs, and the scale of the problem calls for autonomous missions. This paper introduces the Multipurpose Autonomous Rendezvous Vision-Integrated Navigation system (MARVIN) developed and tested at the ORION Facility at Florida Institute of Technology. MARVIN consists of two sub-systems: a machine vision-aided navigation system and an artificial potential field (APF) guidance algorithm which work together to command a swarm of chasers to safely rendezvous with the RSO. We present the MARVIN architecture and hardware-in-the-loop experiments demonstrating autonomous, collaborative swarm satellite operations successfully guiding three drones to rendezvous with a physical mockup of a non-cooperative satellite in motion.
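For readers unfamiliar with artificial potential field guidance, the sketch below shows the textbook form of the idea: an attractive force pulls the chaser toward a goal, and a repulsive force pushes it away from an obstacle once it comes within an influence radius. This is a generic 2D illustration, not MARVIN's implementation; the gains `k_att` and `k_rep`, the `influence` radius, and the function name `apf_force` are all hypothetical.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]


def apf_force(pos: Vec, goal: Vec, obstacle: Vec,
              k_att: float = 1.0, k_rep: float = 1.0,
              influence: float = 2.0) -> Vec:
    """Net 2D force from a quadratic attractive potential and a classic
    inverse-distance repulsive potential (all parameters are illustrative)."""
    # Attractive term: negative gradient of 0.5 * k_att * |pos - goal|^2.
    fx = -k_att * (pos[0] - goal[0])
    fy = -k_att * (pos[1] - goal[1])

    # Repulsive term: active only inside the obstacle's influence radius.
    dx, dy = pos[0] - obstacle[0], pos[1] - obstacle[1]
    dist = math.hypot(dx, dy)
    if 0.0 < dist <= influence:
        # Negative gradient of 0.5 * k_rep * (1/dist - 1/influence)^2.
        gain = k_rep * (1.0 / dist - 1.0 / influence) / dist ** 3
        fx += gain * dx
        fy += gain * dy
    return fx, fy


# Far from the obstacle only the attraction acts; close to it the
# repulsion reduces the pull toward the goal.
free = apf_force((0.0, 0.0), (10.0, 0.0), (0.0, 100.0))
near = apf_force((0.0, 0.0), (10.0, 0.0), (1.0, 0.0))
```

In a vision-aided setting, the obstacle positions would plausibly come from detected hazards such as solar panels or antennas and the goal from a safe capture feature, but that mapping is an assumption here rather than something the abstract specifies.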
