Madhur Tiwari

Dynamic Scene 3D Reconstruction of an Uncooperative Resident Space Object

Sep 09, 2025

Abstract:Characterization of uncooperative Resident Space Objects (RSO) plays a crucial role in On-Orbit Servicing (OOS) and Active Debris Removal (ADR) missions to assess the geometry and motion properties. To address the challenges of reconstructing tumbling uncooperative targets, this study evaluates the performance of existing state-of-the-art 3D reconstruction algorithms for dynamic scenes, focusing on their ability to generate geometrically accurate, high-fidelity models. To support our evaluation, we developed a simulation environment using Isaac Sim to generate physics-accurate 2D image sequences of a tumbling satellite under realistic orbital lighting conditions. Our preliminary results on static scenes using Neuralangelo demonstrate promising reconstruction quality. The generated 3D meshes closely match the original CAD models with minimal errors and artifacts when compared using Cloud Compare (CC). The reconstructed models were able to capture critical fine details for mission planning. This provides a baseline for our ongoing evaluation of dynamic scene reconstruction.

Real-time Testing of Satellite Attitude Control With a Reaction Wheel Hardware-In-the-Loop Platform

Aug 26, 2025

Abstract:We propose the Hardware-in-the-Loop (HIL) test of an adaptive satellite attitude control system with reaction wheel health estimation capabilities. Previous simulations and Software-in-the-Loop testing have prompted further experiments to explore the validity of the controller with real momentum exchange devices in the loop. This work is a step toward a comprehensive testing framework for validation of spacecraft attitude control algorithms. The proposed HIL testbed includes brushless DC motors and drivers that communicate using a CAN bus, an embedded computer that executes control and adaptation laws, and a satellite simulator that produces simulated sensor data and estimated attitude states and responds to the actions of the external actuators. We propose methods to artificially induce failures on the reaction wheels, and present related issues and lessons learned.

End-to-End Imitation Learning for Optimal Asteroid Proximity Operations

Feb 03, 2025

Abstract:Controlling spacecraft near asteroids in deep space comes with many challenges. The delays involved necessitate heavy usage of limited onboard computation resources while fuel efficiency remains a priority to support the long loiter times needed for gathering data. Additionally, the difficulty of state determination due to the lack of traditional reference systems requires a guidance, navigation, and control (GNC) pipeline that ideally is both computationally and fuel-efficient, and that incorporates a robust state determination system. In this paper, we propose an end-to-end algorithm utilizing neural networks to generate near-optimal control commands from raw sensor data, as well as a hybrid model predictive control (MPC) guided imitation learning controller delivering improvements in computational efficiency over a traditional MPC controller.

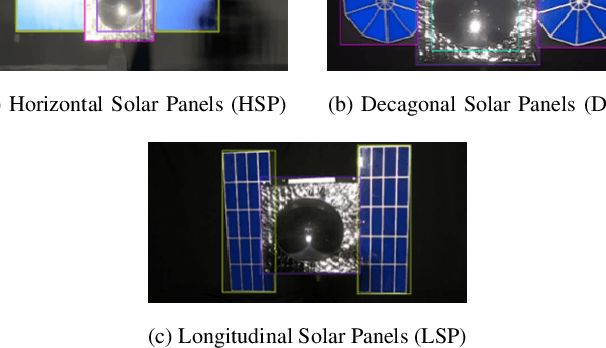

SpY: A Context-Based Approach to Spacecraft Component Detection

Jun 26, 2024

Abstract:This paper focuses on autonomously characterizing components such as solar panels, body panels, antennas, and thrusters of an unknown resident space object (RSO) using camera feed to aid autonomous on-orbit servicing (OOS) and active debris removal. Significant research has been conducted in this area using convolutional neural networks (CNNs). While CNNs are powerful at learning patterns and performing object detection, they struggle with missed detections and misclassifications in environments different from the training data, making them unreliable for safety in high-stakes missions like OOS. Additionally, failures exhibited by CNNs are often easily rectifiable by humans using commonsense reasoning and contextual knowledge. Embedding such reasoning in an object detector could improve detection accuracy. To validate this hypothesis, this paper presents an end-to-end object detector called SpaceYOLOv2 (SpY), which leverages the generalizability of CNNs while incorporating contextual knowledge using traditional computer vision techniques. SpY consists of two main components: a shape detector and the SpaceYOLO classifier (SYC). The shape detector uses CNNs to detect primitive shapes of RSOs and SYC associates these shapes with contextual knowledge, such as color and texture, to classify them as spacecraft components or "unknown" if the detected shape is uncertain. SpY's modular architecture allows customizable usage of contextual knowledge to improve detection performance, or SYC as a secondary fail-safe classifier with an existing spacecraft component detector. Performance evaluations on hardware-in-the-loop images of a mock-up spacecraft demonstrate that SpY is accurate and an ensemble of SpY with YOLOv5 trained for satellite component detection improved the performance by 23.4% in recall, demonstrating enhanced safety for vision-based navigation tasks.

Leveraging KANs For Enhanced Deep Koopman Operator Discovery

Jun 06, 2024

Abstract:Multi-layer perceptrons (MLPs) have been extensively utilized in discovering Deep Koopman operators for linearizing nonlinear dynamics. With the emergence of Kolmogorov-Arnold Networks (KANs) as a more efficient and accurate alternative to the MLP Neural Network, we propose a comparison of the performance of each network type in the context of learning Koopman operators with control. In this work, we propose a KANs-based deep Koopman framework with applications to an orbital Two-Body Problem (2BP) and the pendulum for data-driven discovery of linear system dynamics. KANs were found to be superior in nearly all aspects of training: learning 31 times faster, being 15 times more parameter-efficient, and predicting 1.25 times more accurately than the MLP Deep Neural Networks (DNNs) in the case of the 2BP. Thus, KANs show potential for being an efficient tool in the development of Deep Koopman Theory.

Deep Learning Based Dynamics Identification and Linearization of Orbital Problems using Koopman Theory

Mar 13, 2024

Abstract:The study of the Two-Body and Circular Restricted Three-Body Problems in the field of aerospace engineering and sciences is deeply important because they help describe the motion of both celestial and artificial satellites. With the growing demand for satellites and satellite formation flying, fast and efficient control of these systems is becoming ever more important. Global linearization of these systems allows engineers to employ methods of control in order to achieve these desired results. We propose a data-driven framework for simultaneous system identification and global linearization of both the Two-Body Problem and Circular Restricted Three-Body Problem via deep learning-based Koopman Theory, i.e., a framework that can identify the underlying dynamics and globally linearize it into a linear time-invariant (LTI) system. The linear Koopman operator is discovered through purely data-driven training of a Deep Neural Network with a custom architecture. This paper displays the ability of the Koopman operator to generalize to various other Two-Body systems without the need for retraining. We also demonstrate the capability of the same architecture to be utilized to accurately learn a Koopman operator that approximates the Circular Restricted Three-Body Problem.

Computationally Efficient Data-Driven Discovery and Linear Representation of Nonlinear Systems For Control

Sep 08, 2023

Abstract:This work focuses on developing a data-driven framework using Koopman operator theory for system identification and linearization of nonlinear systems for control. Our proposed method is a deep learning framework with recursive learning. The resulting linear system is controlled using linear quadratic control. An illustrative example using a pendulum system is presented with simulations on noisy data. We show that our proposed method is trained more efficiently and is more accurate than an autoencoder baseline.
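To make the idea of Koopman-style linearization concrete, the following is a minimal sketch and not the paper's method. It swaps the learned deep network for a fixed, hand-picked set of observables and fits the linear operator by ordinary least squares. The toy system, the observables in `lift`, and the parameter values `a`, `b`, `c` are all assumptions chosen so that the lifted dynamics are exactly linear; the pendulum example from the abstract is not reproduced here.

```python
import numpy as np

# Hypothetical toy system (not from the paper):
#   x1[k+1] = a * x1[k]
#   x2[k+1] = b * x2[k] + c * x1[k]**2
A_COEF, B_COEF, C_COEF = 0.9, 0.5, 0.2


def step(x):
    """Advance the nonlinear toy system by one time step."""
    x1, x2 = x
    return np.array([A_COEF * x1, B_COEF * x2 + C_COEF * x1**2])


def lift(x):
    """Map the state to hand-picked observables [x1, x2, x1^2]."""
    x1, x2 = x
    return np.array([x1, x2, x1**2])


# Collect snapshot pairs (z_k, z_{k+1}) from random initial states.
rng = np.random.default_rng(0)
states = rng.uniform(-1.0, 1.0, size=(200, 2))
Z = np.array([lift(x) for x in states])
Z_next = np.array([lift(step(x)) for x in states])

# Least-squares fit of K such that z_{k+1} ≈ K @ z_k.
# lstsq solves Z @ W ≈ Z_next, so K is the transpose of W.
K = np.linalg.lstsq(Z, Z_next, rcond=None)[0].T

# Roll out 10 steps with the linear model and with the true system.
x_true = np.array([0.8, -0.3])
z_lin = lift(x_true)
for _ in range(10):
    x_true = step(x_true)
    z_lin = K @ z_lin

rollout_error = np.abs(z_lin[:2] - x_true).max()
```

Because the chosen observables span a Koopman-invariant subspace for this particular system, the fitted `K` reproduces the nonlinear trajectory to numerical precision. For a system such as the pendulum no such finite hand-picked set is known in general, which is one motivation for learning the observables with a neural network instead.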

SpaceYOLO: A Human-Inspired Model for Real-time, On-board Spacecraft Feature Detection

Feb 02, 2023

Abstract:The rapid proliferation of non-cooperative spacecraft and space debris in orbit has precipitated a surging demand for on-orbit servicing and space debris removal at a scale that only autonomous missions can address, but the prerequisite autonomous navigation and flightpath planning to safely capture an unknown, non-cooperative, tumbling space object is an open problem. This requires algorithms for real-time, automated spacecraft feature recognition to pinpoint the locations of collision hazards (e.g. solar panels or antennas) and safe docking features (e.g. satellite bodies or thrusters) so safe, effective flightpaths can be planned. Prior work in this area reveals that the performance of computer vision models is highly dependent on the training dataset and its coverage of scenarios visually similar to the real scenarios that occur in deployment. Hence, the algorithm may have degraded performance under certain lighting conditions even when the rendezvous maneuver conditions of the chaser to the target spacecraft are the same. This work delves into how humans perform these tasks through a survey of how aerospace engineering students experienced with spacecraft shapes and components recognize features of three spacecraft (Landsat, Envisat, and Anik) and the orbiter Mir. The survey reveals that the most common patterns in the human detection process were to consider the shape and texture of the features: antennas, solar panels, thrusters, and satellite bodies. This work introduces a novel algorithm, SpaceYOLO, which fuses a state-of-the-art object detector, YOLOv5, with a separate neural network based on these human-inspired decision processes exploiting shape and texture. Performance in autonomous spacecraft detection of SpaceYOLO is compared to ordinary YOLOv5 in hardware-in-the-loop experiments under different lighting and chaser maneuver conditions at the ORION Laboratory at Florida Tech.

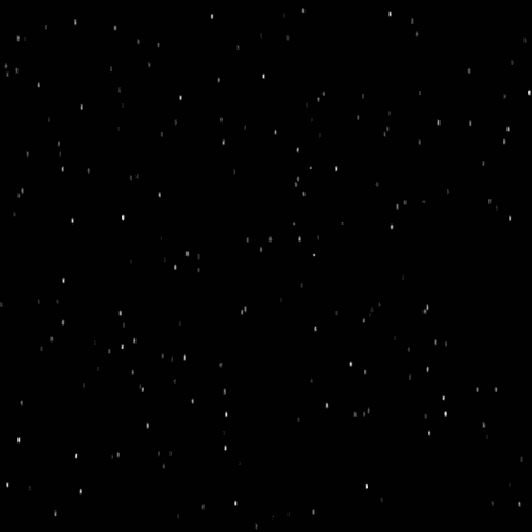

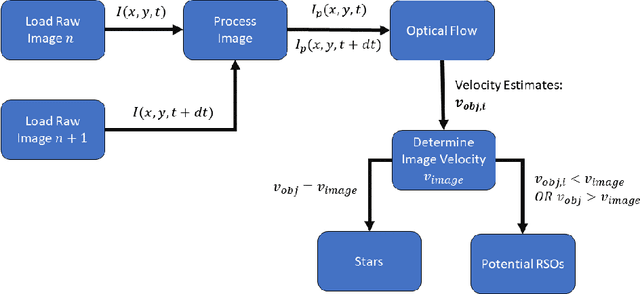

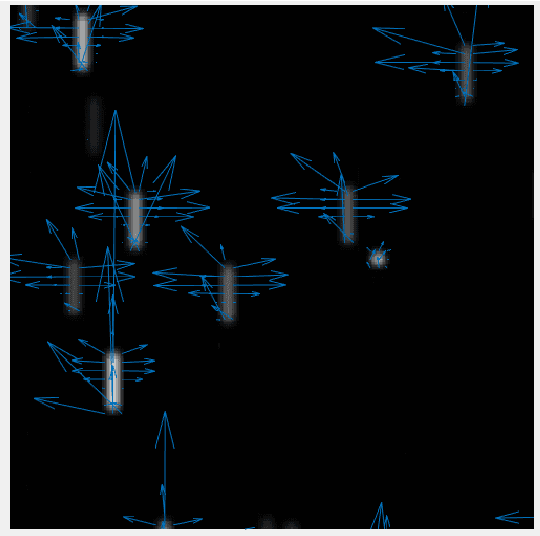

Autonomous Satellite Detection and Tracking using Optical Flow

Apr 14, 2022

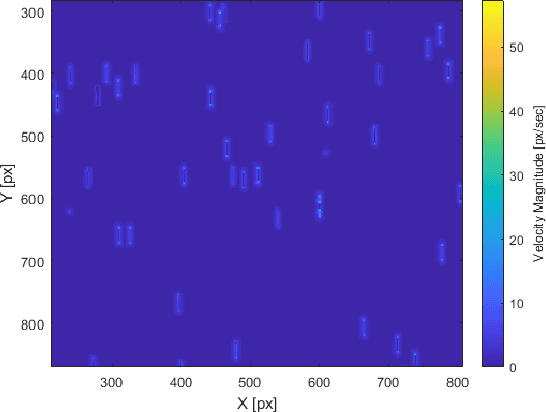

Abstract:In this paper, an autonomous method of satellite detection and tracking in images is implemented using optical flow. Optical flow is used to estimate the image velocities of detected objects in a series of space images. Given that most objects in an image will be stars, the overall image velocity from star motion is used to estimate the image's frame-to-frame motion. Objects seen to be moving with velocity profiles distinct from the overall image velocity are then classified as potential resident space objects. The detection algorithm is exercised using both simulated star images and ground-based imagery of satellites. Finally, this algorithm will be tested and compared using a commercial and an open-source software approach to provide the reader with two different options based on their need.
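The classification step described in this abstract (estimate the overall star-field motion, then flag objects that move differently) can be sketched as follows. This is an illustrative sketch under stated assumptions, not the paper's implementation: per-object image velocities are assumed to be already available from an optical flow routine, the background motion is taken as the component-wise median, and the outlier rule (median absolute deviation with a hypothetical multiplier `k`) is a choice made here for illustration. The synthetic velocities are made up.

```python
import numpy as np


def flag_moving_objects(flows, k=5.0):
    """Flag objects whose image velocity differs from the star field.

    flows: (N, 2) array of per-object image velocities in pixels/frame.
    k:     hypothetical threshold multiplier (an assumption, not from
           the paper).
    Returns a boolean mask of candidates and the background velocity.
    """
    flows = np.asarray(flows, dtype=float)
    # Most objects are stars, so the median approximates frame motion.
    background = np.median(flows, axis=0)
    residual = np.linalg.norm(flows - background, axis=1)
    # Robust spread of the residuals via median absolute deviation.
    center = np.median(residual)
    mad = np.median(np.abs(residual - center))
    scale = 1.4826 * mad + 1e-9  # small offset avoids a zero threshold
    return residual > center + k * scale, background


# Synthetic example: 50 stars sharing one velocity plus small jitter,
# and one object moving differently (all values are made up).
angles = np.linspace(0.0, 2.0 * np.pi, 50, endpoint=False)
radii = np.linspace(0.005, 0.03, 50)
jitter = radii[:, None] * np.column_stack([np.cos(angles), np.sin(angles)])
stars = np.array([1.0, -0.5]) + jitter
satellite = np.array([[4.0, 2.0]])

flows = np.vstack([stars, satellite])
mask, background = flag_moving_objects(flows)
candidate_indices = np.flatnonzero(mask)
```

The median is used rather than the mean so that a few fast-moving objects do not bias the estimate of the star-field motion; a real pipeline would also need to handle rotation of the field and noisy flow estimates, which this sketch ignores.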
