Mackenzie J. Meni

Uncertainty Makes It Stable: Curiosity-Driven Quantized Mixture-of-Experts

Nov 19, 2025

Abstract: Deploying deep neural networks on resource-constrained devices faces two critical challenges: maintaining accuracy under aggressive quantization while ensuring predictable inference latency. We present a curiosity-driven quantized Mixture-of-Experts framework that addresses both through Bayesian epistemic uncertainty-based routing across heterogeneous experts (BitNet ternary, 1-16 bit BitLinear, post-training quantization). Evaluated on audio classification benchmarks (ESC-50, Quinn, UrbanSound8K), our 4-bit quantization maintains 99.9 percent of 16-bit accuracy (0.858 vs 0.859 F1) with 4x compression and 41 percent energy savings versus 8-bit. Crucially, curiosity-driven routing reduces MoE latency variance by 82 percent (p = 0.008, Levene's test) from 230 ms to 29 ms standard deviation, enabling stable inference for battery-constrained devices. Statistical analysis confirms 4-bit/8-bit achieve practical equivalence with full precision (p > 0.05), while MoE architectures introduce 11 percent latency overhead (p < 0.001) without accuracy gains. At scale, deployment emissions dominate training by 10000x for models serving more than 1,000 inferences, making inference efficiency critical. Our information-theoretic routing demonstrates that adaptive quantization yields accurate (0.858 F1, 1.2M params), energy-efficient (3.87 F1/mJ), and predictable edge models, with simple 4-bit quantized architectures outperforming complex MoE for most deployments.

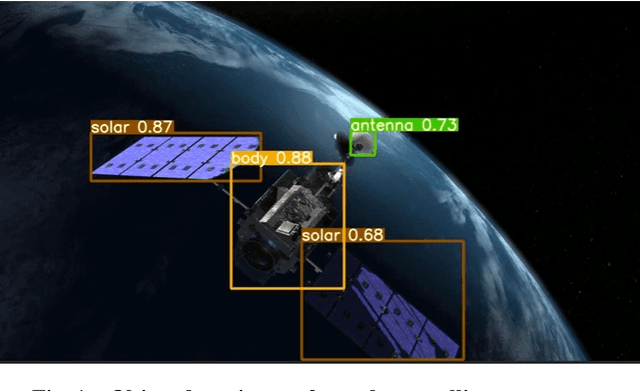

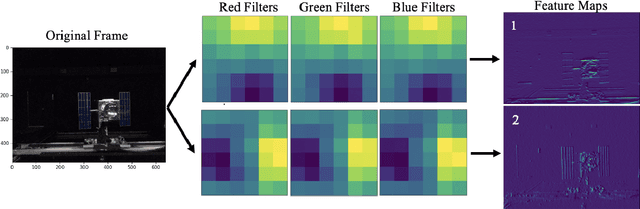

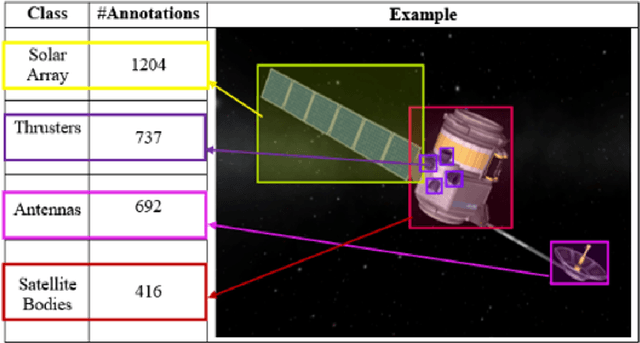

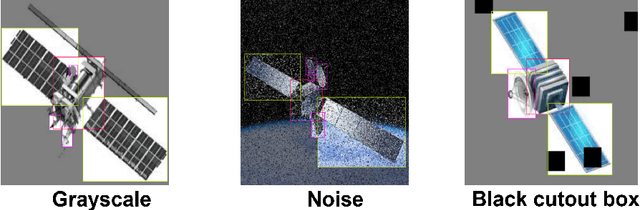

Taking a PEEK into YOLOv5 for Satellite Component Recognition via Entropy-based Visual Explanations

Nov 03, 2023

Abstract: The escalating risk of collisions and the accumulation of space debris in Low Earth Orbit (LEO) have become a critical concern due to the ever-increasing number of spacecraft. Addressing this crisis, especially in dealing with non-cooperative and unidentified space debris, is of paramount importance. This paper contributes to efforts in enabling autonomous swarms of small chaser satellites for target geometry determination and safe flight trajectory planning for proximity operations in LEO. Our research explores on-orbit use of the You Only Look Once v5 (YOLOv5) object detection model trained to detect satellite components. While this model has shown promise, its inherent lack of interpretability hinders human understanding, a critical aspect of validating algorithms for use in safety-critical missions. To analyze the decision processes, we introduce Probabilistic Explanations for Entropic Knowledge extraction (PEEK), a method that utilizes information-theoretic analysis of the latent representations within the hidden layers of the model. Through both synthetic and hardware-in-the-loop experiments, PEEK illuminates the decision-making processes of the model, helping identify its strengths, limitations and biases.

Entropy-based Guidance of Deep Neural Networks for Accelerated Convergence and Improved Performance

Aug 28, 2023

Abstract: Neural networks have dramatically increased our capacity to learn from large, high-dimensional datasets across innumerable disciplines. However, their decisions are not easily interpretable, their computational costs are high, and building and training them are uncertain processes. To add structure to these efforts, we derive new mathematical results to efficiently measure the changes in entropy as fully-connected and convolutional neural networks process data, and introduce entropy-based loss terms. Experiments in image compression and image classification on benchmark datasets demonstrate these losses guide neural networks to learn rich latent data representations in fewer dimensions, converge in fewer training epochs, and achieve better test metrics.
