Vítor Santos Costa

Program Synthesis using Inductive Logic Programming for the Abstraction and Reasoning Corpus

May 10, 2024

Abstract: The Abstraction and Reasoning Corpus (ARC) is a general artificial intelligence benchmark that is currently unsolvable by any Machine Learning method, including Large Language Models (LLMs). It demands strong generalization and reasoning capabilities, which are known to be weaknesses of Neural Network based systems. In this work, we propose a Program Synthesis system that uses Inductive Logic Programming (ILP), a branch of Symbolic AI, to solve ARC. We have manually defined a simple Domain Specific Language (DSL) that corresponds to a small set of object-centric abstractions relevant to ARC. This is the Background Knowledge used by ILP to create Logic Programs that provide reasoning capabilities to our system. The full system is capable of generalizing to unseen tasks, since ILP can create Logic Programs from few examples; in the case of ARC, these are the pairs of Input-Output grid examples for each task. These Logic Programs are able to generate Objects present in the Output grid, and the combination of these can form a complete program that transforms an Input grid into an Output grid. We randomly chose some ARC tasks that don't require more than the small number of Object primitives we implemented and show that, given only these, our system can solve tasks that each require very different reasoning.

NeuralLog: a Neural Logic Language

May 04, 2021

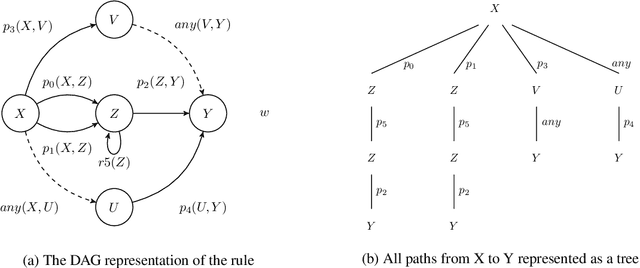

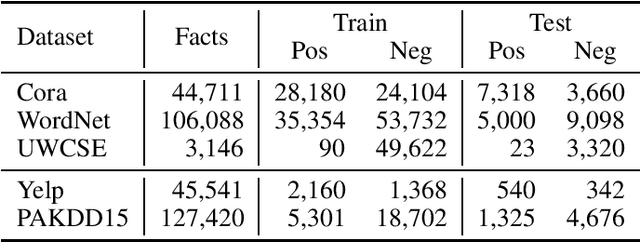

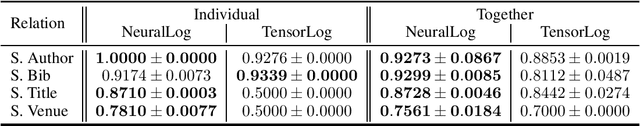

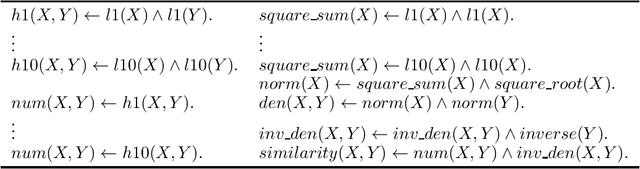

Abstract: Application domains that require considering relationships among objects which have real-valued attributes are becoming ever more important. In this paper we propose NeuralLog, a first-order logic language that is compiled to a neural network. The main goal of NeuralLog is to bridge logic programming and deep learning, allowing advances in both fields to be combined in order to obtain better machine learning models. The main advantages of NeuralLog are that it allows neural networks to be defined as logic programs and that it can handle numeric attributes and functions. We compared NeuralLog with two distinct systems that use first-order logic to build neural networks. We have also shown that NeuralLog can learn link prediction and classification tasks, using the same theory as the compared systems, achieving better results for the area under the ROC curve in four datasets: Cora and UWCSE for link prediction, and Yelp and PAKDD15 for classification; and comparable results for link prediction in the WordNet dataset.

Introduction to the 28th International Conference on Logic Programming Special Issue

Oct 15, 2012

Abstract: We are proud to introduce this special issue of the Journal of Theory and Practice of Logic Programming (TPLP), dedicated to the full papers accepted for the 28th International Conference on Logic Programming (ICLP). The ICLP meetings started in Marseille in 1982 and since then constitute the main venue for presenting and discussing work in the area of logic programming.

On the Implementation of the Probabilistic Logic Programming Language ProbLog

Jun 23, 2010

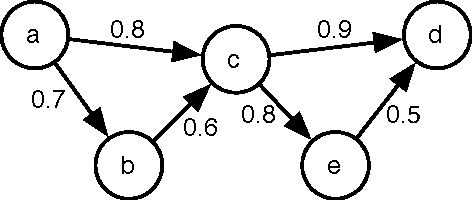

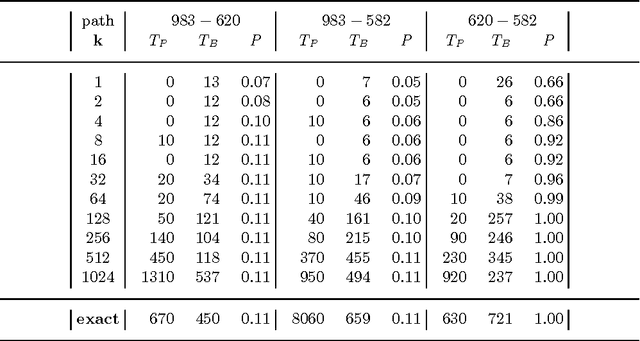

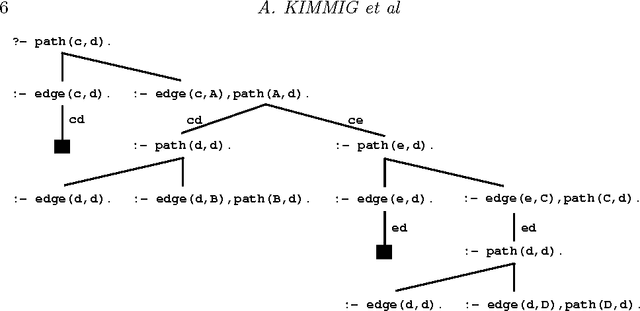

Abstract: The past few years have seen a surge of interest in the field of probabilistic logic learning and statistical relational learning. In this endeavor, many probabilistic logics have been developed. ProbLog is a recent probabilistic extension of Prolog motivated by the mining of large biological networks. In ProbLog, facts can be labeled with probabilities. These facts are treated as mutually independent random variables that indicate whether these facts belong to a randomly sampled program. Different kinds of queries can be posed to ProbLog programs. We introduce algorithms that allow the efficient execution of these queries, discuss their implementation on top of the YAP-Prolog system, and evaluate their performance in the context of large networks of biological entities.

* 28 pages; To appear in Theory and Practice of Logic Programming (TPLP)
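
The ProbLog abstract above describes probabilistic facts as mutually independent random variables that decide whether each fact belongs to a randomly sampled program. A minimal sketch of that idea in Python follows. It is a brute-force illustration only, not the efficient algorithms the paper introduces and not ProbLog syntax; the tiny edge set, its probabilities, and the names `prob_edges`, `reachable`, and `query_probability` are all assumptions made up for this example.

```python
from itertools import product

# Hypothetical probabilistic facts: each directed edge is present
# independently with the given probability (values are illustrative).
prob_edges = {
    ("a", "b"): 0.8,
    ("b", "c"): 0.5,
    ("a", "c"): 0.1,
}


def reachable(edges, source, target):
    """Depth-first search over the edges present in one sampled program."""
    stack, seen = [source], {source}
    while stack:
        node = stack.pop()
        if node == target:
            return True
        for u, v in edges:
            if u == node and v not in seen:
                seen.add(v)
                stack.append(v)
    return False


def query_probability(prob_edges, source, target):
    """Sum the probabilities of all sampled programs in which the query holds.

    Enumerates every subset of facts, so the cost is exponential in the
    number of facts; it is only meant to make the semantics concrete.
    """
    facts = list(prob_edges)
    total = 0.0
    for choice in product([True, False], repeat=len(facts)):
        weight = 1.0
        world = []
        for fact, included in zip(facts, choice):
            p = prob_edges[fact]
            # Independent facts: multiply p if included, (1 - p) otherwise.
            weight *= p if included else 1.0 - p
            if included:
                world.append(fact)
        if reachable(world, source, target):
            total += weight
    return total


if __name__ == "__main__":
    print(query_probability(prob_edges, "a", "c"))
```

Under these assumed probabilities, the query "is `c` reachable from `a`" succeeds unless both the direct edge and the two-step path are absent, so the result should agree with 1 - (1 - 0.1)(1 - 0.8 × 0.5) = 0.46 up to floating-point rounding.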
