Trushant Kalyanpur

Command A: An Enterprise-Ready Large Language Model

Apr 01, 2025

Abstract: In this report we describe the development of Command A, a powerful large language model purpose-built to excel at real-world enterprise use cases. Command A is an agent-optimised and multilingual-capable model, with support for 23 languages of global business, and a novel hybrid architecture balancing efficiency with top-of-the-range performance. It offers best-in-class Retrieval Augmented Generation (RAG) capabilities with grounding and tool use to automate sophisticated business processes. These abilities are achieved through a decentralised training approach, including self-refinement algorithms and model merging techniques. We also include results for Command R7B, which shares capability and architectural similarities with Command A. Weights for both models have been released for research purposes. This technical report details our original training pipeline and presents an extensive evaluation of our models across a suite of enterprise-relevant tasks and public benchmarks, demonstrating excellent performance and efficiency.

Probabilistic Modeling of Deep Features for Out-of-Distribution and Adversarial Detection

Sep 25, 2019

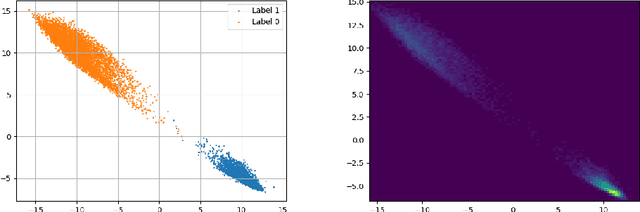

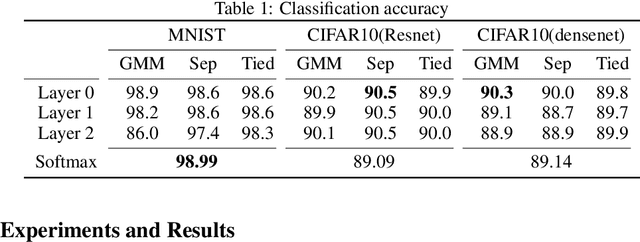

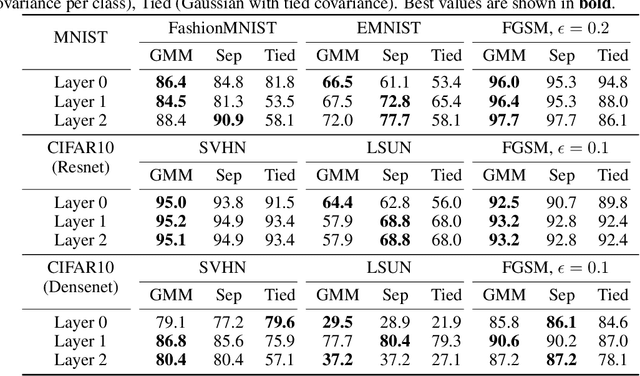

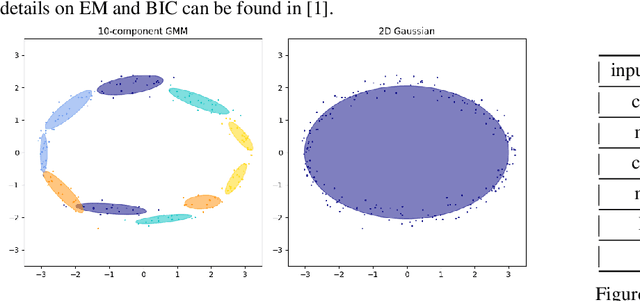

Abstract: We present a principled approach for detecting out-of-distribution (OOD) and adversarial samples in deep neural networks. Our approach consists of modeling the outputs of the various layers (deep features) with parametric probability distributions once training is completed. At inference, the likelihoods of the deep features with respect to the previously learnt distributions are calculated and used to derive uncertainty estimates that can discriminate in-distribution samples from OOD samples. We explore the use of two classes of multivariate distributions for modeling the deep features, Gaussian and Gaussian mixture, and study the trade-off between accuracy and computational complexity. We demonstrate the benefits of our approach on image features by detecting OOD images and adversarially-generated images, using popular DNN architectures on the MNIST and CIFAR10 datasets. We show that more precise modeling of the feature distributions results in significantly improved detection of OOD and adversarial samples, by up to 12 percentage points in AUPR and AUROC metrics. We further show that our approach remains extremely effective when applied to video data and associated spatio-temporal features by detecting adversarial samples on activity classification tasks using the UCF101 dataset and the C3D network. To our knowledge, our methodology is the first one reported for reliably detecting white-box adversarial framing, a state-of-the-art adversarial attack for video classifiers.
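The core idea in this abstract, fitting a parametric distribution to deep features after training and scoring new samples by likelihood, can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a single multivariate Gaussian over the features of one layer, uses synthetic arrays in place of real network activations, and the names `fit_gaussian`, `log_likelihood`, and the `ridge` regulariser are hypothetical choices made for this example.

```python
import numpy as np


def fit_gaussian(features, ridge=1e-6):
    """Fit a multivariate Gaussian to an (n_samples, dim) feature array."""
    mean = features.mean(axis=0)
    centered = features - mean
    cov = centered.T @ centered / (len(features) - 1)
    # Assumption: a small ridge term keeps the covariance invertible.
    cov += ridge * np.eye(features.shape[1])
    return mean, cov


def log_likelihood(features, mean, cov):
    """Per-sample Gaussian log-likelihood for an (n_samples, dim) array."""
    dim = mean.shape[0]
    diff = features - mean
    # Solve a linear system rather than forming the explicit inverse.
    solved = np.linalg.solve(cov, diff.T).T
    mahalanobis = np.sum(diff * solved, axis=1)
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (mahalanobis + logdet + dim * np.log(2 * np.pi))


# Synthetic stand-ins for deep features (assumption: real features would
# come from an intermediate layer of a trained network).
rng = np.random.default_rng(0)
in_dist = rng.normal(0.0, 1.0, size=(2000, 8))
ood = rng.normal(4.0, 1.0, size=(200, 8))

mean, cov = fit_gaussian(in_dist)
in_scores = log_likelihood(in_dist, mean, cov)
ood_scores = log_likelihood(ood, mean, cov)
```

Lower log-likelihood indicates a sample that is less consistent with the fitted distribution, so thresholding these scores gives a simple OOD detector. The abstract also mentions Gaussian mixtures as a richer alternative, which this sketch does not cover.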
