Ibrahima Ndiour

CONCLAD: COntinuous Novel CLAss Detector

Dec 13, 2024

Abstract: In continual learning, relying on so-called oracles for novelty detection is commonplace, albeit unrealistic. This paper introduces CONCLAD ("COntinuous Novel CLAss Detector"), a comprehensive solution to the under-explored problem of continual novel class detection in post-deployment data. At each new task, our approach employs an iterative uncertainty estimation algorithm to differentiate between samples of known and novel classes, and to further discriminate between the different novel classes themselves. Samples predicted with high confidence to be from a novel class are automatically pseudo-labeled and used to update our model. Simultaneously, a tiny supervision budget is used to iteratively query ambiguous novel class predictions, which are also used during the update. Evaluations across multiple datasets, ablations and experimental settings demonstrate our method's effectiveness at continuously separating novel and old class samples. We will release our code upon acceptance.
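The triage described in this abstract can be illustrated with a minimal sketch. It assumes each sample already has a scalar novelty score where higher means more likely novel; the function name `triage_samples`, the two thresholds, and the rule for spending the budget are hypothetical choices for illustration and are not taken from the paper.

```python
import numpy as np

def triage_samples(novelty_scores, known_thresh, confident_thresh, budget):
    """Split sample indices into known, pseudo-labeled novel, and queried sets.

    Assumed convention: a higher score means the sample is more likely novel.
    """
    scores = np.asarray(novelty_scores, dtype=float)
    idx = np.arange(scores.size)

    known = idx[scores < known_thresh]        # treated as old-class samples
    pseudo = idx[scores >= confident_thresh]  # confident novel: pseudo-label
    ambiguous = idx[(scores >= known_thresh) & (scores < confident_thresh)]

    # Spend the labeling budget on the ambiguous samples whose score lies
    # closest to the midpoint between the two thresholds (an assumption).
    mid = 0.5 * (known_thresh + confident_thresh)
    order = np.argsort(np.abs(scores[ambiguous] - mid), kind="stable")
    queried = ambiguous[order[:budget]]
    return known, pseudo, queried
```

In an iterative scheme, the pseudo-labeled and queried samples would be used to update the model before the scores are recomputed; that loop is omitted here.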

CUAL: Continual Uncertainty-aware Active Learner

Dec 12, 2024

Abstract: AI deployed in many real-world use cases should be capable of adapting to novelties encountered after deployment. Here, we consider a challenging, under-explored and realistic continual adaptation problem: a deployed AI agent is continuously provided with unlabeled data that may contain not only unseen samples of known classes but also samples from novel (unknown) classes. In this setting, the agent has only a tiny labeling budget with which to query the most informative samples to help it learn continuously. We present a comprehensive solution to this problem with our model "CUAL" (Continual Uncertainty-aware Active Learner). CUAL leverages an uncertainty estimation algorithm to prioritize active labeling of ambiguous (uncertain) predicted novel class samples while simultaneously pseudo-labeling the most certain predictions of each class. Evaluations across multiple datasets, ablations, settings and backbones (e.g. a ViT foundation model) demonstrate our method's effectiveness. We will release our code upon acceptance.
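One way to picture the two selection rules named in this abstract is the sketch below. It uses softmax entropy as a stand-in uncertainty measure, which is an assumption: the abstract does not specify the uncertainty estimate. It also ignores the known/novel distinction for brevity. The function name `select_by_entropy` and its parameters are hypothetical.

```python
import numpy as np

def select_by_entropy(probs, per_class_k, budget):
    """Pick samples to query and samples to pseudo-label from class probabilities.

    probs: (n_samples, n_classes) array of predicted class probabilities.
    Returns (query indices, {class: pseudo-labeled indices}).
    """
    probs = np.asarray(probs, dtype=float)
    entropy = -np.sum(probs * np.log(probs + 1e-12), axis=1)
    preds = probs.argmax(axis=1)

    # Active labeling: the `budget` most uncertain samples are sent for labels.
    query_idx = np.argsort(-entropy, kind="stable")[:budget]

    # Pseudo-labeling: per predicted class, keep the k most certain samples
    # that were not already selected for querying.
    pseudo = {}
    for c in np.unique(preds):
        members = np.setdiff1d(np.flatnonzero(preds == c), query_idx)
        best = members[np.argsort(entropy[members], kind="stable")[:per_class_k]]
        pseudo[int(c)] = best.tolist()
    return query_idx.tolist(), pseudo
```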

FRE: A Fast Method For Anomaly Detection And Segmentation

Nov 23, 2022

Abstract: This paper presents a fast and principled approach for solving the visual anomaly detection and segmentation problem. In this setup, we have access to only anomaly-free training data and want to detect and identify anomalies of an arbitrary nature on test data. We propose the application of linear statistical dimensionality reduction techniques on the intermediate features produced by a pretrained DNN on the training data, in order to capture the low-dimensional subspace truly spanned by said features. We show that the feature reconstruction error (FRE), which is the $\ell_2$-norm of the difference between the original feature in the high-dimensional space and the pre-image of its low-dimensional reduced embedding, is extremely effective for anomaly detection. Further, using the same feature reconstruction error concept on intermediate convolutional layers, we derive FRE maps that provide pixel-level spatial localization of the anomalies in the image (i.e. segmentation). Experiments using standard anomaly detection datasets and DNN architectures demonstrate that our method matches or exceeds best-in-class performance, but at a fraction of the computational and memory cost required by the state of the art. It can be trained and run very efficiently, even on a traditional CPU.
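The FRE definition above can be made concrete with a small sketch. It assumes PCA (computed via SVD) as the linear dimensionality reduction and treats features as plain row vectors; the helper names `fit_pca` and `fre_score` are hypothetical, and the extraction of features from a pretrained DNN is left out.

```python
import numpy as np

def fit_pca(features, n_components):
    """Fit a PCA basis on anomaly-free training features of shape (n, d)."""
    mean = features.mean(axis=0)
    _, _, vt = np.linalg.svd(features - mean, full_matrices=False)
    return mean, vt[:n_components]  # rows are orthonormal principal directions

def fre_score(features, mean, components):
    """l2-norm between each feature and the pre-image of its reduced embedding."""
    centered = features - mean
    reduced = centered @ components.T     # project into the low-dim subspace
    reconstructed = reduced @ components  # map back (pre-image), still centered
    return np.linalg.norm(centered - reconstructed, axis=1)
```

A feature lying in the learned subspace reconstructs almost exactly and scores near zero, while a feature with a large component outside it gets a large score. Applying the same computation per spatial location of a convolutional feature map would yield a map of scores rather than a single value.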

Out-Of-Distribution Detection With Subspace Techniques And Probabilistic Modeling Of Features

Dec 08, 2020

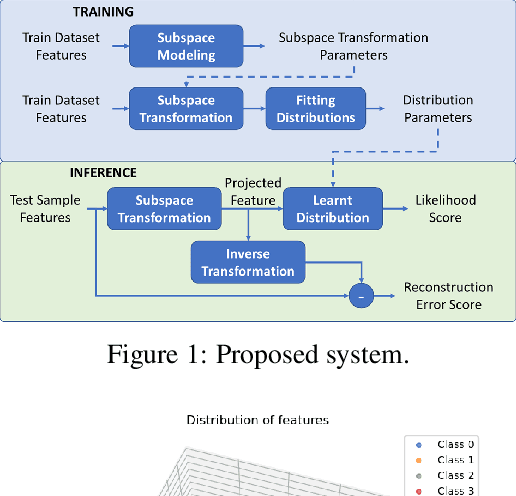

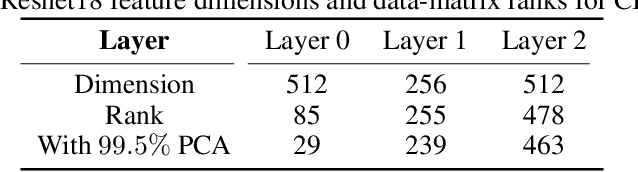

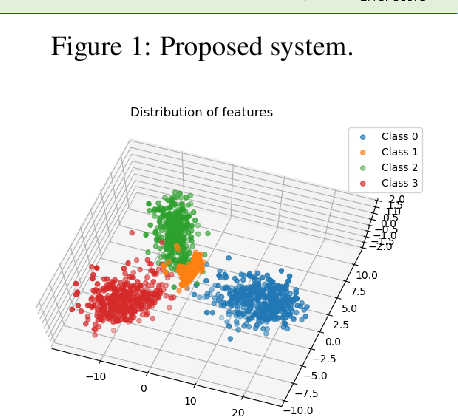

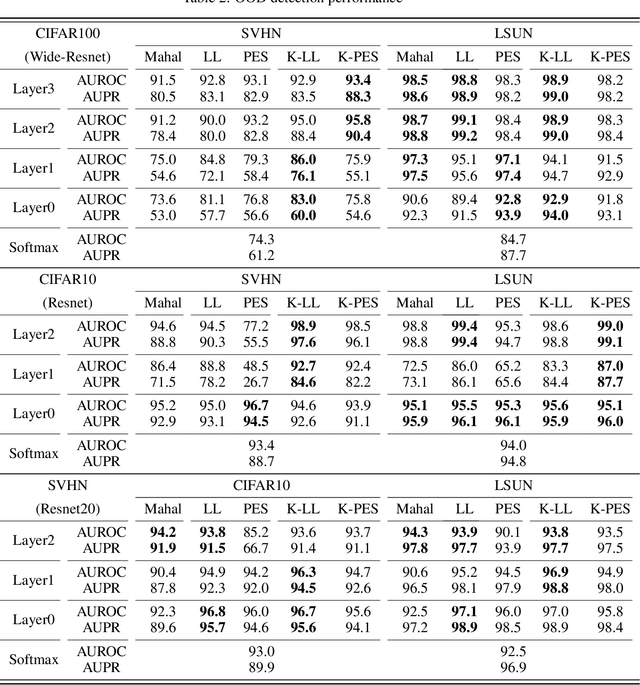

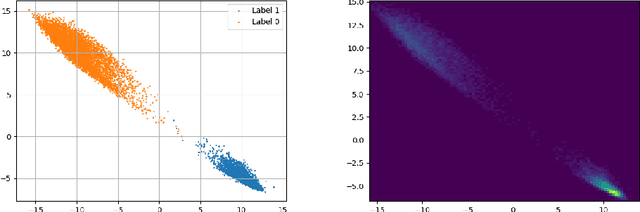

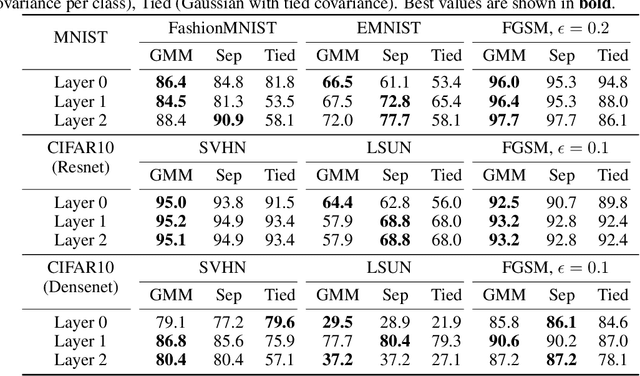

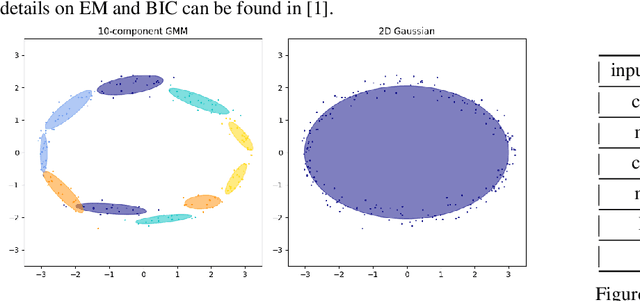

Abstract: This paper presents a principled approach for detecting out-of-distribution (OOD) samples in deep neural networks (DNNs). Modeling probability distributions on deep features has recently emerged as an effective, yet computationally cheap, method to detect OOD samples in DNNs. However, the features produced by a DNN at any given layer do not fully occupy the corresponding high-dimensional feature space. We apply linear statistical dimensionality reduction techniques and nonlinear manifold-learning techniques on the high-dimensional features in order to capture the true subspace spanned by the features. We hypothesize that such lower-dimensional feature embeddings can mitigate the curse of dimensionality and make any feature-based method more efficient and effective. In the context of uncertainty estimation and OOD detection, we show that the log-likelihood score obtained from the distributions learnt on this lower-dimensional subspace is more discriminative for OOD detection. We also show that the feature reconstruction error, which is the $L_2$-norm of the difference between the original feature and the pre-image of its embedding, is highly effective for OOD detection and in some cases superior to the log-likelihood scores. The benefits of our approach are demonstrated on image features by detecting OOD images, using popular DNN architectures on commonly used image datasets such as CIFAR10, CIFAR100, and SVHN.
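The log-likelihood score on a reduced subspace can be sketched as follows. This is a simplified illustration that assumes PCA as the reduction and a single Gaussian over all training features; the abstract does not state which distributions are fitted, and the function names are hypothetical.

```python
import numpy as np

def fit_subspace_gaussian(features, n_components, ridge=1e-6):
    """Fit PCA on (n, d) features, then a Gaussian on the reduced embeddings."""
    mean = features.mean(axis=0)
    _, _, vt = np.linalg.svd(features - mean, full_matrices=False)
    basis = vt[:n_components]
    reduced = (features - mean) @ basis.T
    cov = np.atleast_2d(np.cov(reduced, rowvar=False))
    cov = cov + ridge * np.eye(n_components)  # small ridge keeps cov invertible
    return mean, basis, cov

def log_likelihood(features, mean, basis, cov):
    """Gaussian log-likelihood of each feature's reduced embedding."""
    z = (features - mean) @ basis.T  # embeddings have zero mean by construction
    k = z.shape[1]
    _, logdet = np.linalg.slogdet(cov)
    maha = np.einsum("ij,ij->i", z @ np.linalg.inv(cov), z)
    return -0.5 * (maha + logdet + k * np.log(2.0 * np.pi))
```

A lower log-likelihood suggests an OOD sample. Note that this score only sees the retained directions: a sample that deviates purely along discarded directions looks normal here, which is one intuition for why a reconstruction-error score can complement it.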

Probabilistic Modeling of Deep Features for Out-of-Distribution and Adversarial Detection

Sep 25, 2019

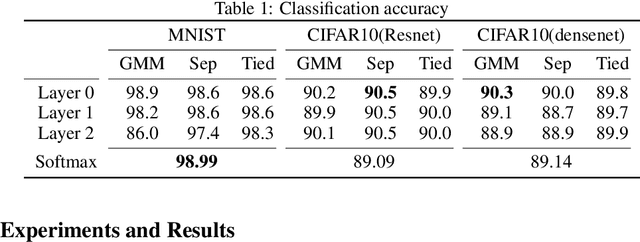

Abstract: We present a principled approach for detecting out-of-distribution (OOD) and adversarial samples in deep neural networks. Our approach consists in modeling the outputs of the various layers (deep features) with parametric probability distributions once training is completed. At inference, the likelihoods of the deep features with respect to the previously learnt distributions are calculated and used to derive uncertainty estimates that can discriminate in-distribution samples from OOD samples. We explore the use of two classes of multivariate distributions for modeling the deep features (Gaussian and Gaussian mixture) and study the trade-off between accuracy and computational complexity. We demonstrate the benefits of our approach on image features by detecting OOD images and adversarially generated images, using popular DNN architectures on the MNIST and CIFAR10 datasets. We show that more precise modeling of the feature distributions results in significantly improved detection of OOD and adversarial samples: up to 12 percentage points in AUPR and AUROC metrics. We further show that our approach remains extremely effective when applied to video data and associated spatio-temporal features, by detecting adversarial samples on activity classification tasks using the UCF101 dataset and the C3D network. To our knowledge, our methodology is the first one reported for reliably detecting white-box adversarial framing, a state-of-the-art adversarial attack for video classifiers.
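The idea of scoring deep features against learnt distributions can be sketched with the simpler of the two families mentioned, a multivariate Gaussian. The sketch assumes one Gaussian per class with its own covariance and uses the maximum class log-likelihood as the score; both choices, and the function names, are illustrative assumptions rather than details confirmed by the abstract.

```python
import numpy as np

def fit_class_gaussians(features, labels, ridge=1e-6):
    """Fit one Gaussian per class on (n, d) features taken from a trained network."""
    dim = features.shape[1]
    models = {}
    for c in np.unique(labels):
        x = features[labels == c]
        mean = x.mean(axis=0)
        cov = np.atleast_2d(np.cov(x, rowvar=False)) + ridge * np.eye(dim)
        _, logdet = np.linalg.slogdet(cov)
        models[int(c)] = (mean, np.linalg.inv(cov), logdet)
    return models

def max_log_likelihood(features, models):
    """Highest class-conditional log-likelihood per sample (low suggests OOD)."""
    dim = features.shape[1]
    scores = []
    for mean, precision, logdet in models.values():
        diff = features - mean
        maha = np.einsum("ij,jk,ik->i", diff, precision, diff)
        scores.append(-0.5 * (maha + logdet + dim * np.log(2.0 * np.pi)))
    return np.max(scores, axis=0)
```

Thresholding this score gives a simple in-distribution versus OOD decision. Replacing each class Gaussian with a mixture would model the features more precisely at a higher computational cost, which is the trade-off the abstract refers to.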
