Toshihiro Matsui

Search Space of Adversarial Perturbations against Image Filters

Mar 05, 2020

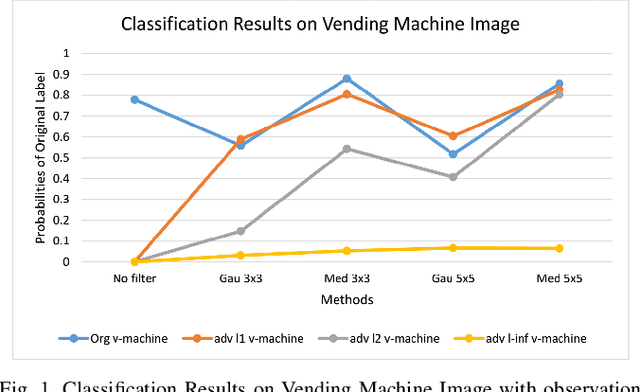

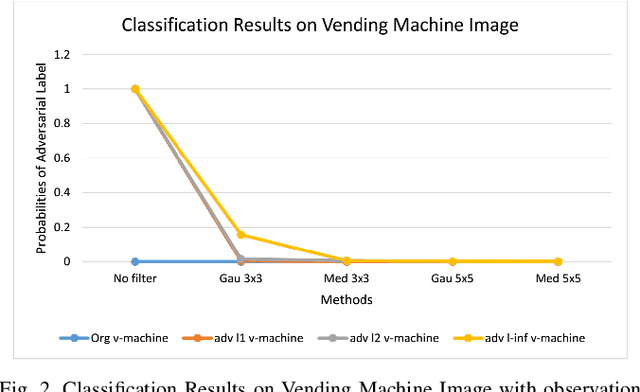

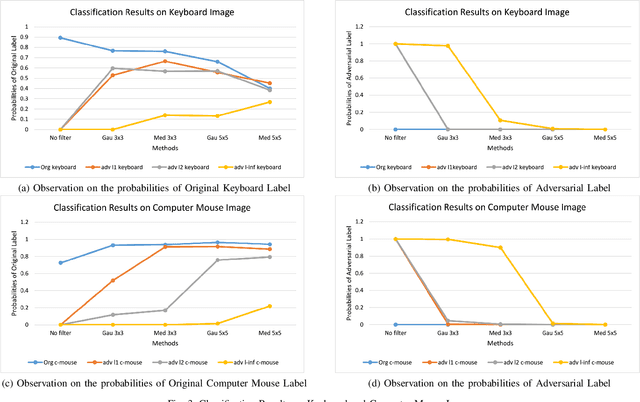

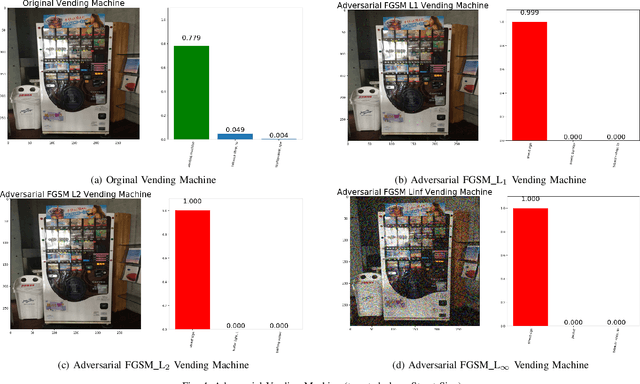

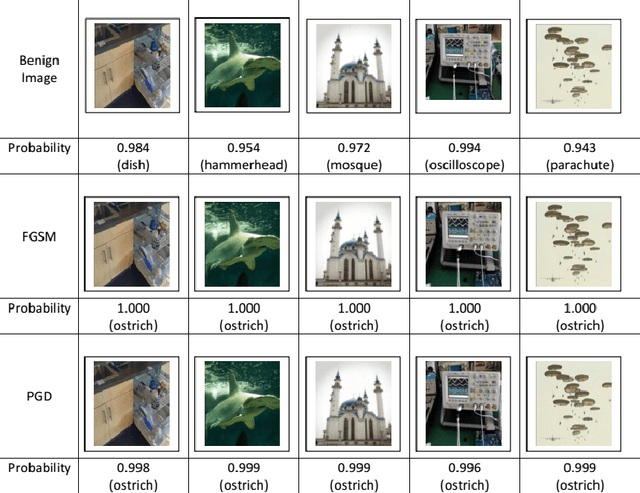

Abstract: The superior performance of deep learning is threatened by its own safety issues. Recent findings have shown that deep learning systems are highly vulnerable to adversarial examples, inputs deliberately altered by an attacker to deceive the system. Many defensive methods have been proposed to protect deep learning systems against adversarial examples. However, there is still a lack of principled strategies for deceiving those defensive methods. Each time a particular countermeasure is proposed, a new and more powerful adversarial attack is invented to defeat it. In this study, we investigate the ability to create adversarial patterns in the search space against defensive methods that use image filters. Experimental results on image classification tasks with the ImageNet dataset show a correlation between the search space of adversarial perturbations and the filters. These findings open a new direction for building stronger offensive methods against deep learning systems.

Automated Detection System for Adversarial Examples with High-Frequency Noises Sieve

Aug 05, 2019

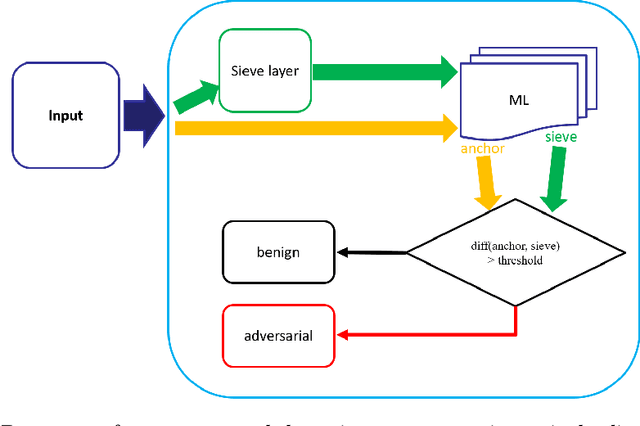

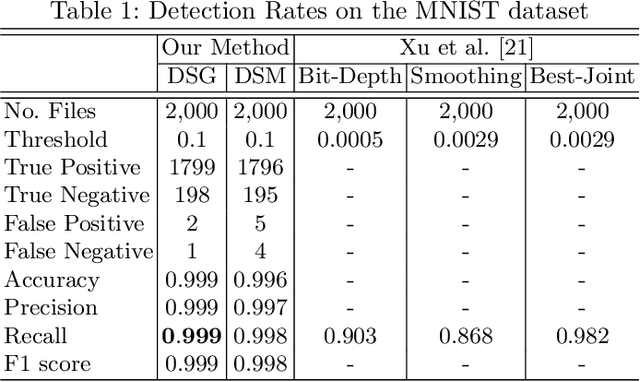

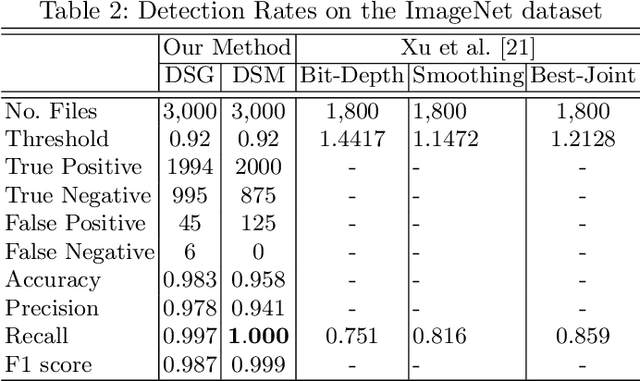

Abstract: Deep neural networks are being applied in many tasks with encouraging results, and have often reached human-level performance. However, deep neural networks are vulnerable to well-designed input samples called adversarial examples. In particular, neural networks tend to misclassify adversarial examples whose perturbations are imperceptible to humans. This paper introduces a new system that automatically detects adversarial examples for deep neural networks. Our proposed system can mostly distinguish adversarial samples from benign images in an end-to-end manner without human intervention. We exploit the important role of the frequency domain in adversarial samples and propose a method that detects malicious samples in observations. When evaluated on two standard benchmark datasets (MNIST and ImageNet), our method achieved an out-detection rate of 99.7% to 100% in many settings.
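To make the frequency-domain idea concrete, here is a minimal sketch that scores an image by how much energy remains after subtracting a low-pass (3x3 mean-filtered) copy. It is not the paper's detection system: the function names, the choice of a mean filter, and the threshold are all assumptions made for illustration.

```python
def box_blur(img):
    """3x3 mean filter with edge replication; img is a list of rows of floats."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)  # clamp to image border
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            out[y][x] = total / 9.0
    return out


def high_freq_energy(img):
    """Mean squared residual between the image and its low-pass version."""
    blurred = box_blur(img)
    h, w = len(img), len(img[0])
    residual = sum(
        (img[y][x] - blurred[y][x]) ** 2 for y in range(h) for x in range(w)
    )
    return residual / (h * w)


def looks_adversarial(img, threshold):
    """Flag an image whose high-frequency energy exceeds a hypothetical threshold."""
    return high_freq_energy(img) > threshold


# Toy data: a flat gray image, and the same image with checkerboard noise.
clean = [[0.5] * 8 for _ in range(8)]
noisy = [[0.5 + (0.1 if (x + y) % 2 == 0 else -0.1) for x in range(8)]
         for y in range(8)]
```

In practice the threshold would have to be calibrated on benign images, since natural images with fine texture also carry high-frequency content.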

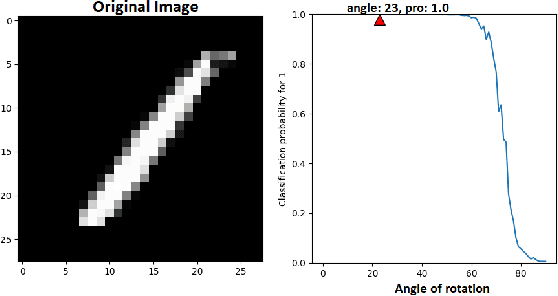

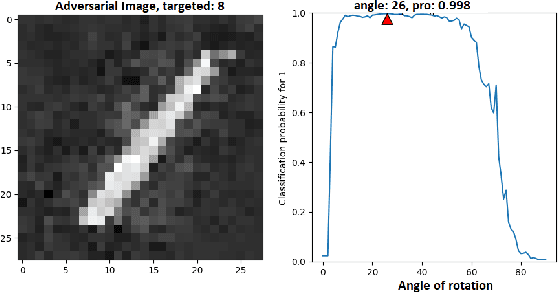

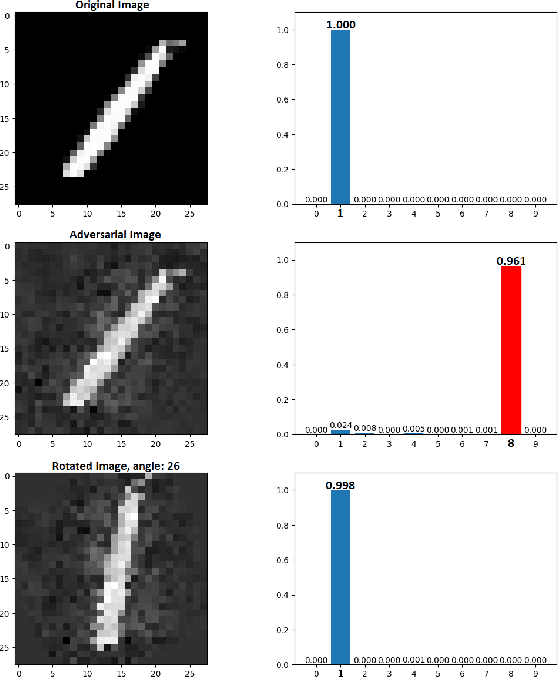

Image Transformation can make Neural Networks more robust against Adversarial Examples

Jan 10, 2019

Abstract: Neural networks are being applied in many tasks related to IoT with encouraging results. For example, neural networks can precisely detect humans, objects, and animals via surveillance cameras for security purposes. However, neural networks have recently been found to be vulnerable to well-designed input samples called adversarial examples. This issue causes neural networks to misclassify adversarial examples whose perturbations are imperceptible to humans. We found that rotating an adversarial example image can defeat the effect of the adversarial perturbation. Using MNIST digit images as the original images, we first generated adversarial examples against a neural network recognizer, which was completely fooled by the forged examples. We then rotated the adversarial images and gave them to the recognizer, which regained the correct recognition. Thus, we empirically confirmed that rotating images can protect pattern recognizers based on neural networks from adversarial example attacks.
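The rotate-then-classify step described above can be sketched as follows. This is an illustrative sketch only: `rotate` uses nearest-neighbor resampling, `predict_after_rotation` and its `classifier` argument are hypothetical names, and the abstract does not state which rotation angles or interpolation the authors used.

```python
import math


def rotate(img, angle_deg, fill=0.0):
    """Rotate a 2D image (list of rows) about its center, nearest-neighbor.

    The visual direction of rotation depends on the image coordinate
    convention; pixels that map outside the source are set to `fill`.
    """
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse mapping: find the source pixel that lands on (x, y).
            dx, dy = x - cx, y - cy
            sx = c * dx + s * dy + cx
            sy = -s * dx + c * dy + cy
            ix, iy = int(round(sx)), int(round(sy))
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = img[iy][ix]
    return out


def predict_after_rotation(classifier, img, angle_deg):
    """Apply the rotation as a preprocessing step, then call the classifier.

    `classifier` is any callable taking an image; it stands in for the
    neural network recognizer and is an assumption of this sketch.
    """
    return classifier(rotate(img, angle_deg))
```

A recognizer used this way must itself tolerate the chosen rotation on benign inputs, otherwise accuracy on clean images would drop along with the attack's success rate.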

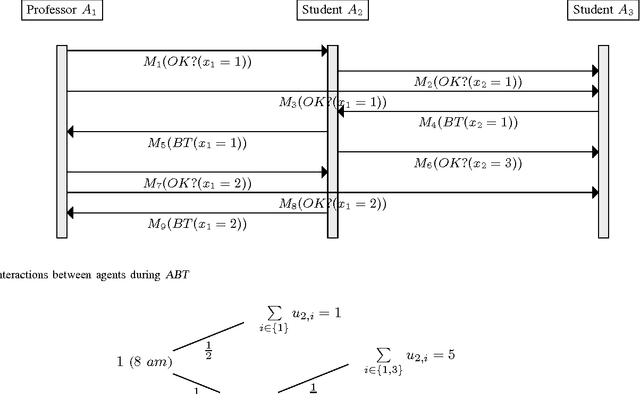

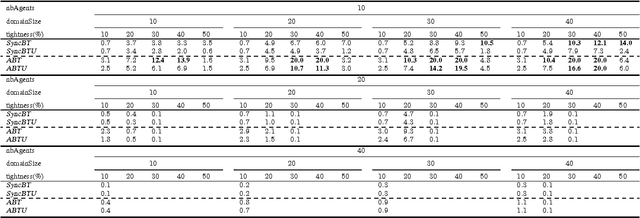

Distributed Constraint Problems for Utilitarian Agents with Privacy Concerns, Recast as POMDPs

Mar 20, 2017

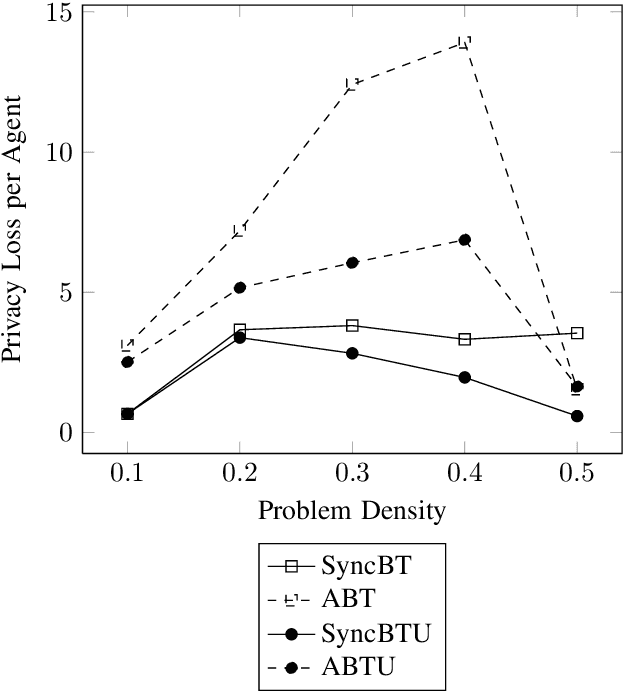

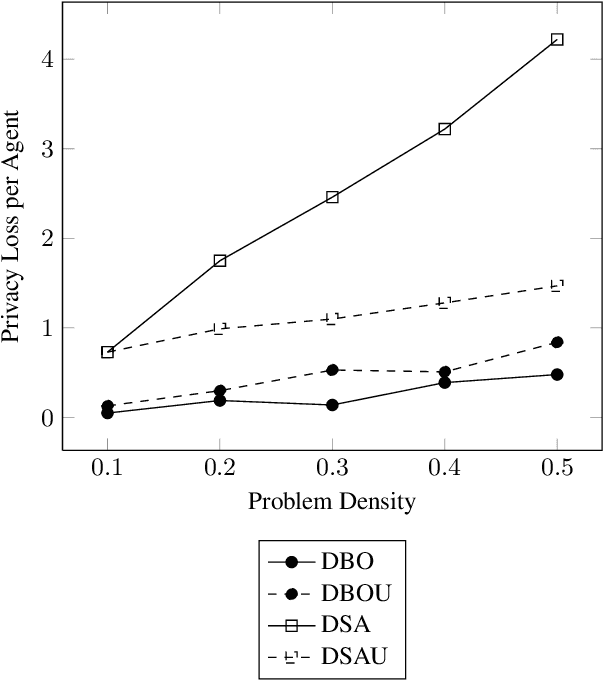

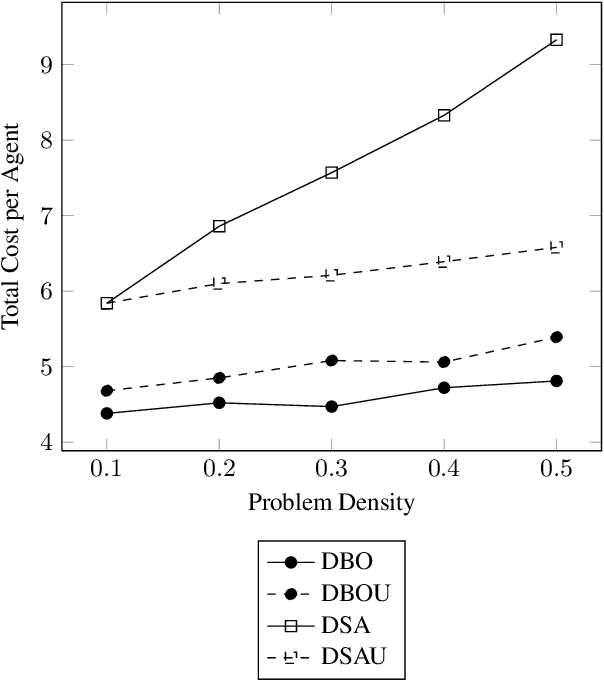

Abstract: Privacy has traditionally been a major motivation for distributed problem solving. The Distributed Constraint Satisfaction Problem (DisCSP) and the Distributed Constraint Optimization Problem (DCOP) are fundamental models used to solve various families of distributed problems. Even though several approaches have been proposed to quantify and preserve privacy in such problems, none of them is exempt from limitations. Here we approach the problem by assuming that computation is performed among utilitarian agents. We introduce a utilitarian approach where the utility of each state is estimated as the difference between the reward for reaching an agreement on assignments of shared variables and the cost of privacy loss. We investigate extensions to solvers where agents integrate the utility function to guide their search and decide which action to perform, thereby defining their policy. We show that these extended solvers succeed in significantly reducing privacy loss without significant degradation of the solution quality.

DisCSPs with Privacy Recast as Planning Problems for Utility-based Agents

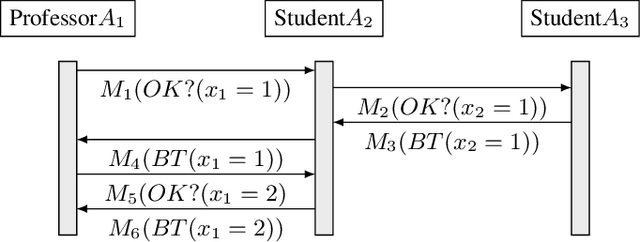

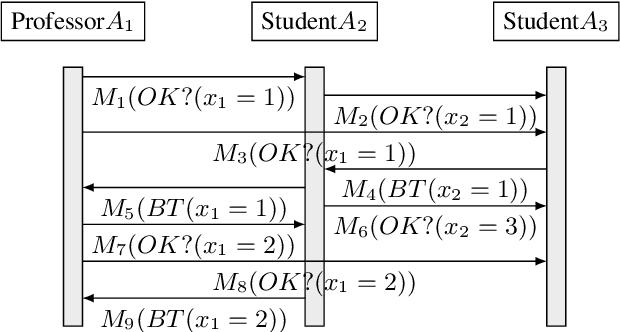

Apr 22, 2016

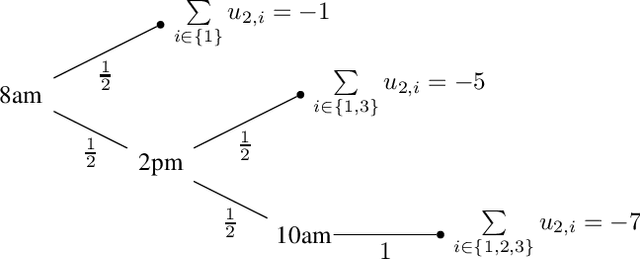

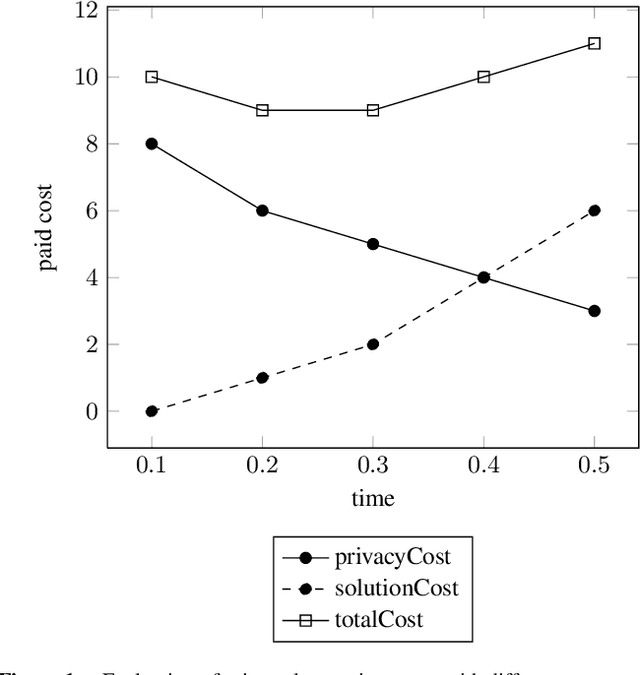

Abstract: Privacy has traditionally been a major motivation for decentralized problem solving. However, even though several metrics have been proposed to quantify it, none of them is easily integrated with common solvers. Constraint programming is a fundamental paradigm used to approach various families of problems. We introduce Utilitarian Distributed Constraint Satisfaction Problems (UDisCSP), where the utility of each state is estimated as the difference between the expected rewards for agreements on assignments for shared variables and the expected cost of privacy loss. A traditional DisCSP with privacy requirements is therefore viewed as a planning problem. The actions available to agents are communication and local inference. Common decentralized solvers are evaluated here from the point of view of their interpretation as greedy planners. Further, we investigate some simple extensions where these solvers start taking the utility function into account. In these extensions we assume that the planning problem further restricts the set of communication actions to only the communication primitives present in the corresponding solver protocols. The solvers obtained for the new type of problem propose the action (communication or inference) to be performed in each situation, thereby defining the policy.
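As a rough illustration of the utility definition above, the sketch below scores each candidate action by expected agreement reward minus the cost of the private information it would reveal, and picks the best one greedily. The function names, the probability-times-reward form of the expected reward, and all numbers are assumptions for illustration, not the UDisCSP formulation from the paper.

```python
def expected_utility(p_agreement, reward, privacy_costs):
    """Expected reward for agreement minus the summed cost of revealed values.

    p_agreement   -- assumed probability that the action leads to agreement
    reward        -- reward for reaching agreement on the shared variables
    privacy_costs -- costs of the private values the action would reveal
    """
    return p_agreement * reward - sum(privacy_costs)


def choose_action(actions):
    """Greedy policy: return the name of the action with the highest utility.

    `actions` maps an action name to (p_agreement, reward, privacy_costs).
    """
    return max(actions, key=lambda name: expected_utility(*actions[name]))


# Hypothetical situation: communicating reveals two values but makes
# agreement likely; local inference reveals nothing but helps less.
cheap_privacy = {
    "communicate": (0.9, 10.0, [2.0, 1.5]),
    "local_inference": (0.4, 10.0, []),
}
# Same situation, but the value to be revealed is much more sensitive.
costly_privacy = {
    "communicate": (0.9, 10.0, [6.0]),
    "local_inference": (0.4, 10.0, []),
}
```

With these numbers the agent communicates when the revealed values are cheap and falls back to local inference when the privacy cost outweighs the gain in expected reward.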

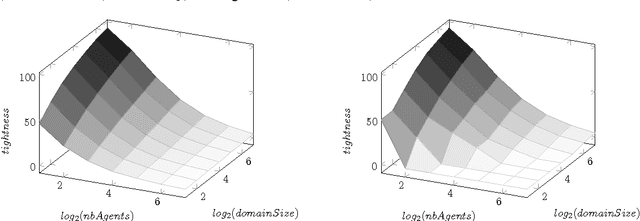

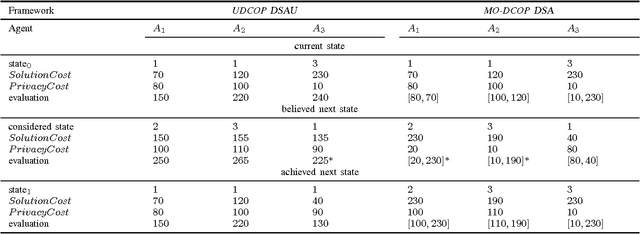

Utilitarian Distributed Constraint Optimization Problems

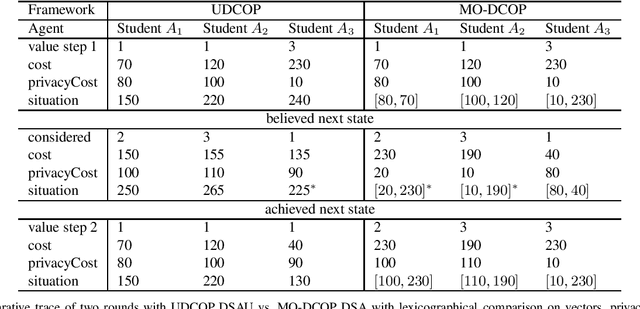

Apr 22, 2016

Abstract: Privacy has been a major motivation for distributed problem optimization. However, even though several methods have been proposed to evaluate it, none of them is widely used. The Distributed Constraint Optimization Problem (DCOP) is a fundamental model used to approach various families of distributed problems. As privacy loss does not occur when a solution is accepted, but when it is proposed, privacy requirements cannot be interpreted as a criterion of the objective function of the DCOP. Here we approach the problem by letting both the optimized costs found in DCOPs and the privacy requirements guide the agents' exploration of the search space. We introduce the Utilitarian Distributed Constraint Optimization Problem (UDCOP), where the costs and the privacy requirements are used as parameters to a heuristic that modifies the search process. Common stochastic algorithms for decentralized constraint optimization problems are evaluated here according to how well they preserve privacy. Further, we propose some extensions where these solvers modify their search process to take their privacy requirements into account, succeeding in significantly reducing their privacy loss without significant degradation of the solution quality.
