Automated Detection System for Adversarial Examples with High-Frequency Noises Sieve

Aug 05, 2019

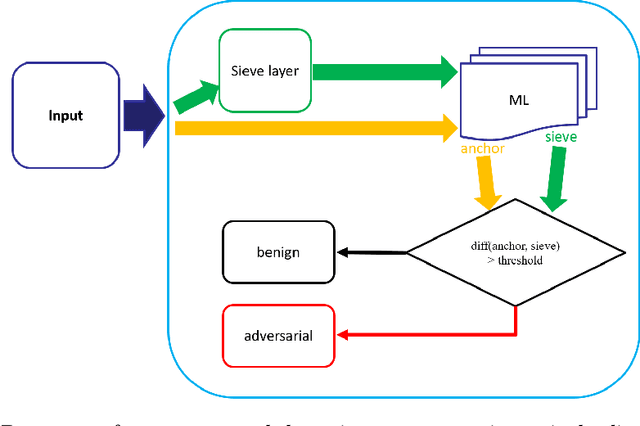

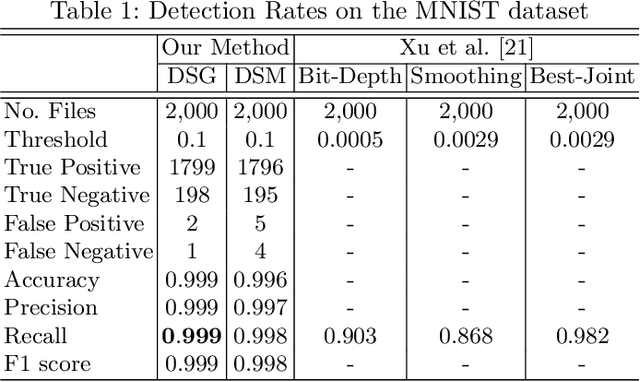

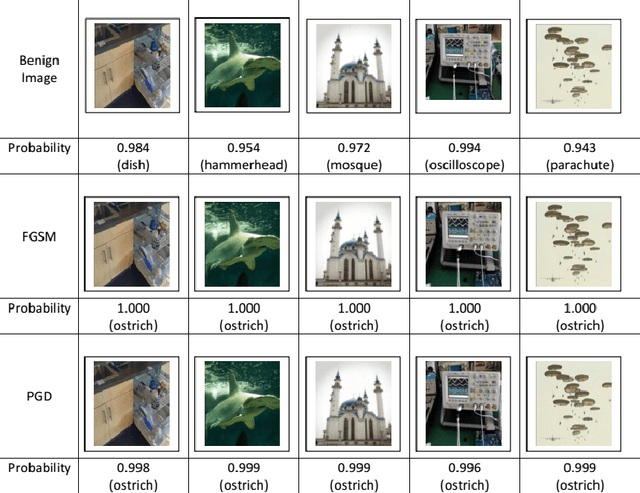

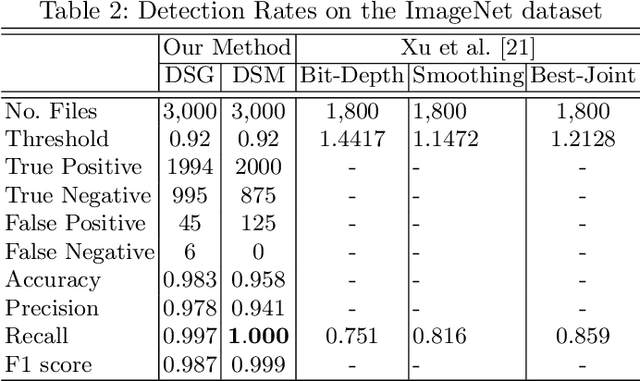

Deep neural networks are being applied to many tasks with encouraging results and have often reached human-level performance. However, they are vulnerable to carefully crafted input samples called adversarial examples. In particular, neural networks tend to misclassify adversarial examples whose perturbations are imperceptible to humans. This paper introduces a new detection system that automatically detects adversarial examples for deep neural networks. The proposed system distinguishes most adversarial samples from benign images in an end-to-end manner, without human intervention. We exploit the important role of the frequency domain in adversarial samples and propose a method that detects malicious samples among observed inputs. When evaluated on two standard benchmark datasets (MNIST and ImageNet), our method achieved a detection rate of 99.7-100% in many settings.
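The abstract does not spell out how the frequency domain is used, so the following is only a minimal sketch of the general idea, not the paper's detector. It assumes that an adversarial perturbation adds energy at high spatial frequencies and measures the share of an image's spectral energy that lies outside a low-frequency disc. The function name `high_frequency_ratio` and the `cutoff` value are hypothetical choices made for illustration.

```python
import numpy as np


def high_frequency_ratio(image, cutoff=0.25):
    """Return the fraction of spectral energy above `cutoff` cycles per pixel.

    Illustrative only: `cutoff` is an arbitrary assumption, not a value
    taken from the paper. `image` must be a 2-D grayscale array.
    """
    image = np.asarray(image, dtype=np.float64)
    if image.ndim != 2:
        raise ValueError("expected a 2-D grayscale image")

    # Energy of each 2-D Fourier coefficient.
    energy = np.abs(np.fft.fft2(image)) ** 2

    # Radial frequency (cycles per pixel) of each coefficient.
    h, w = image.shape
    fy = np.fft.fftfreq(h)[:, None]
    fx = np.fft.fftfreq(w)[None, :]
    radius = np.sqrt(fx ** 2 + fy ** 2)

    total = energy.sum()
    if total == 0:
        return 0.0
    return float(energy[radius > cutoff].sum() / total)
```

A detector built on this idea would compare the ratio against a threshold calibrated on benign images. The sketch leaves that calibration out because the abstract gives no details about it.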
