Tongxue Zhou

Prediction of brain tumor recurrence location based on multi-modal fusion and nonlinear correlation learning

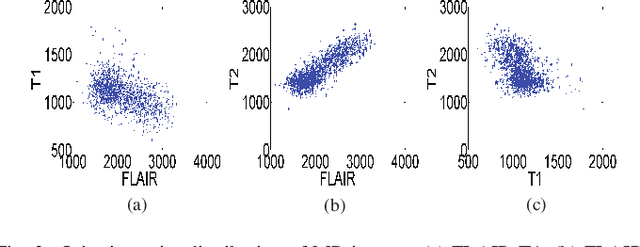

Apr 11, 2023

Abstract: Brain tumors are one of the leading causes of cancer death. High-grade brain tumors are prone to recurrence even after standard treatment. A method that predicts the location of brain tumor recurrence can therefore play an important role in treatment planning and can potentially prolong patient survival. Little work has addressed this problem so far. In this paper, we present a deep learning-based network for predicting the location of brain tumor recurrence. Since the dataset is usually small, we propose to use transfer learning to improve the prediction. We first train a multi-modal brain tumor segmentation network on the public BraTS 2021 dataset. The pre-trained encoder is then transferred to our private dataset to extract rich semantic features. Following that, a multi-scale multi-channel feature fusion model and a nonlinear correlation learning module are developed to learn effective features. The correlation between multi-channel features is modeled by a nonlinear equation. To measure the similarity between the distribution of the original features of one modality and that of the estimated correlated features of another modality, we propose to use the Kullback-Leibler divergence. Based on this divergence, a correlation loss function is designed to maximize the similarity between the two feature distributions. Finally, two decoders are constructed to jointly segment the present brain tumor and predict the location of its future recurrence. To the best of our knowledge, this is the first work that segments the present tumor and at the same time predicts the future recurrence location, making treatment planning more efficient and precise. The experimental results demonstrate the effectiveness of the proposed method in predicting brain tumor recurrence location from a limited dataset.

* 23 pages, 4 figures
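
The correlation loss described in the abstract above can be illustrated with a minimal sketch. The abstract does not give the form of the nonlinear equation, so the quadratic mapping below, the softmax normalization, and the names `correlation_loss`, `alpha`, `beta` and `gamma` are assumptions made for illustration only, not the authors' implementation.

```python
import math


def softmax(values):
    """Turn raw feature values into a discrete probability distribution."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def correlation_loss(features_a, features_b, alpha, beta, gamma):
    """Hypothetical correlation loss between two modalities.

    Assumption: the correlated features of modality b are estimated from
    modality a with a quadratic mapping alpha*x^2 + beta*x + gamma.
    The loss is the KL divergence between the original features of b and
    the estimated ones, so minimizing it makes the distributions similar.
    """
    estimated_b = [alpha * x * x + beta * x + gamma for x in features_a]
    return kl_divergence(softmax(features_b), softmax(estimated_b))
```

In a real network the mapping parameters would be learned per channel and the features would be tensors; flat Python lists are used here only to keep the sketch self-contained.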

Feature-enhanced Generation and Multi-modality Fusion based Deep Neural Network for Brain Tumor Segmentation with Missing MR Modalities

Nov 08, 2021

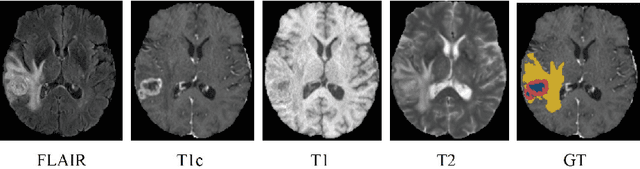

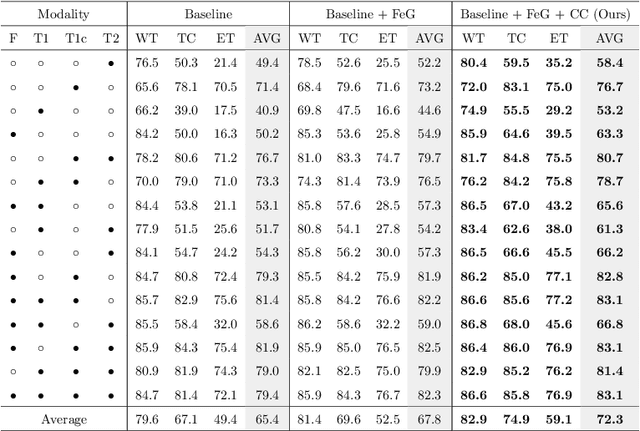

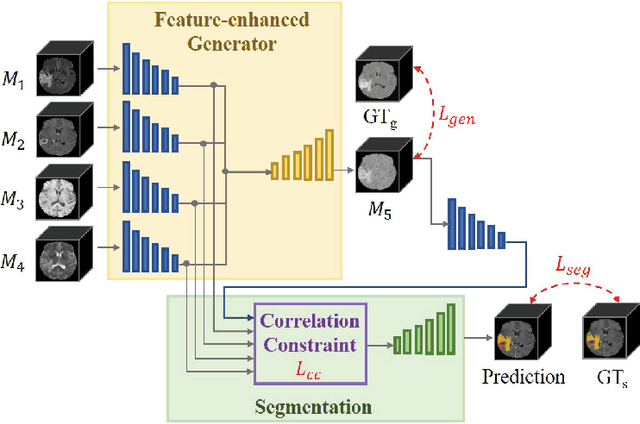

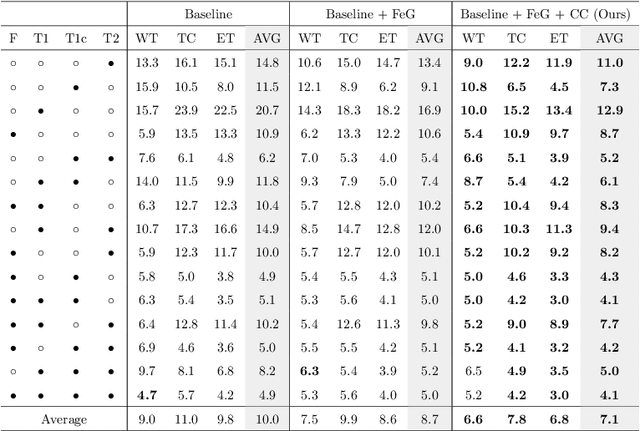

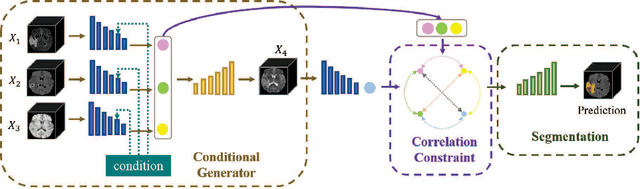

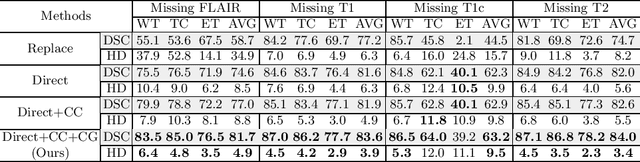

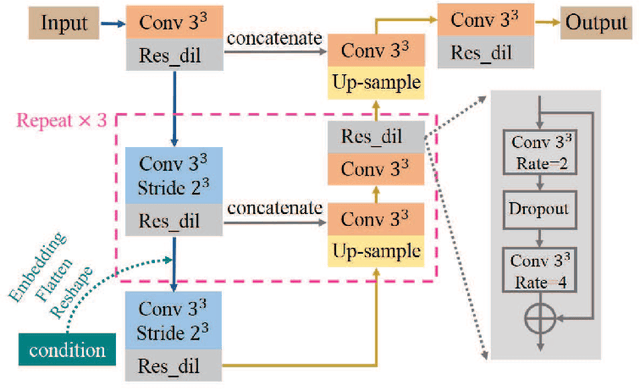

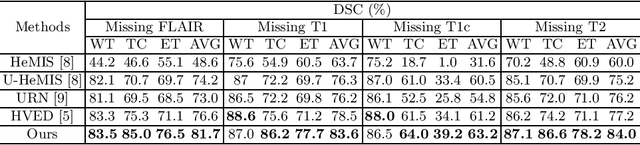

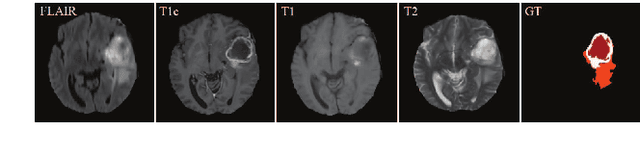

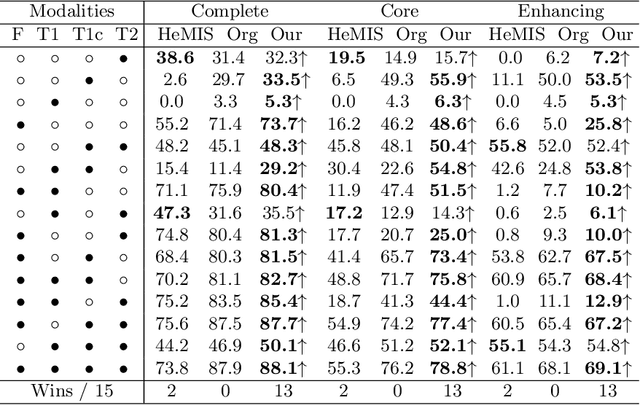

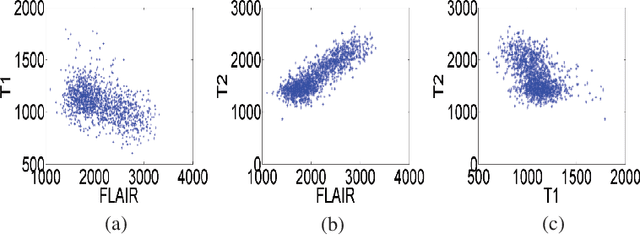

Abstract: Multimodal Magnetic Resonance Imaging (MRI) is necessary for accurate brain tumor segmentation. The main problem is that not all types of MRI are always available in clinical exams. Based on the fact that there is a strong correlation between the MR modalities of the same patient, we propose a novel brain tumor segmentation network for the case where one or more modalities are missing. The proposed network consists of three sub-networks: a feature-enhanced generator, a correlation constraint block and a segmentation network. The feature-enhanced generator uses the available modalities to generate a 3D feature-enhanced image representing the missing modality. The correlation constraint block exploits the multi-source correlation between the modalities and also constrains the generator to synthesize a feature-enhanced modality that has a coherent correlation with the available modalities. The segmentation network is a multi-encoder U-Net that produces the final brain tumor segmentation. The proposed method is evaluated on the BraTS 2018 dataset. Experimental results demonstrate the effectiveness of the proposed method, which achieves average Dice scores of 82.9, 74.9 and 59.1 on whole tumor, tumor core and enhancing tumor, respectively, across all situations, and outperforms the best method by 3.5%, 17% and 18.2%.

* 30 pages, 7 figures
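
The Dice scores reported above for whole tumor, tumor core and enhancing tumor can be computed along the following lines. This is a minimal sketch on flattened label lists; the label-to-region mapping assumes the usual BraTS convention (1 = necrotic/non-enhancing core, 2 = edema, 4 = enhancing tumor), and the names `REGIONS`, `dice_score` and `region_dice` are illustrative rather than taken from the paper.

```python
# Assumed BraTS label convention; verify against the dataset documentation.
REGIONS = {
    "whole_tumor": {1, 2, 4},
    "tumor_core": {1, 4},
    "enhancing_tumor": {4},
}


def dice_score(pred_mask, true_mask, eps=1e-7):
    """Dice = 2|P and T| / (|P| + |T|) for flat binary masks (0/1 values)."""
    intersection = sum(p * t for p, t in zip(pred_mask, true_mask))
    return (2.0 * intersection + eps) / (sum(pred_mask) + sum(true_mask) + eps)


def region_dice(pred_labels, true_labels):
    """Compute one Dice score per tumor region from flat label maps."""
    scores = {}
    for name, labels in REGIONS.items():
        pred = [1 if v in labels else 0 for v in pred_labels]
        true = [1 if v in labels else 0 for v in true_labels]
        scores[name] = dice_score(pred, true)
    return scores
```

The small `eps` term keeps the score defined when a region is absent from both the prediction and the ground truth, in which case the sketch returns 1.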

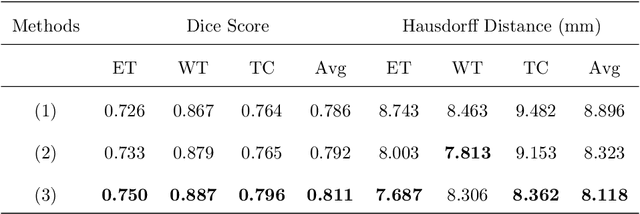

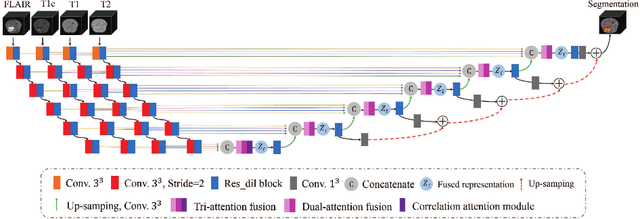

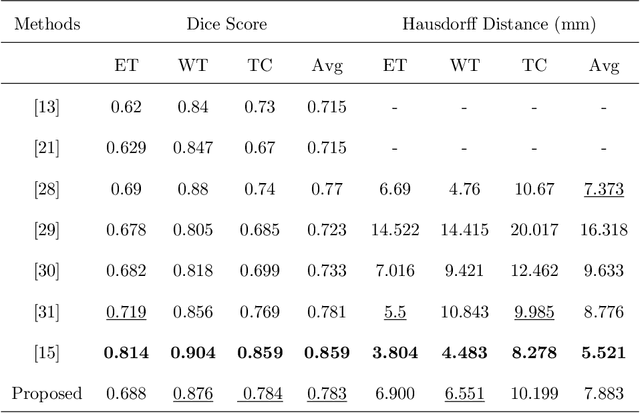

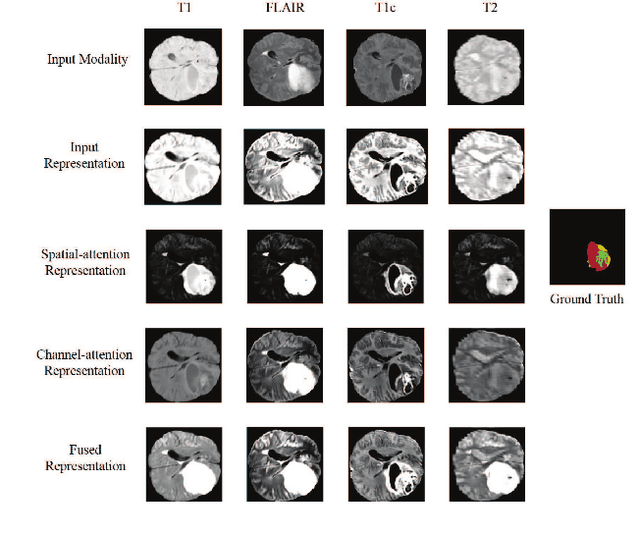

A Tri-attention Fusion Guided Multi-modal Segmentation Network

Nov 02, 2021

Abstract: In multimodal segmentation, the correlation between different modalities can be exploited to improve the segmentation results. Considering the correlation between different MR modalities, we propose a multi-modality segmentation network guided by a novel tri-attention fusion. Our network includes N model-independent encoding paths for N image sources, a tri-attention fusion block, a dual-attention fusion block, and a decoding path. The model-independent encoding paths capture modality-specific features from the N modalities. Since not all the features extracted by the encoders are useful for segmentation, we propose a dual-attention-based fusion that re-weights the features along the modality and spatial paths, suppressing less informative features and emphasizing the useful ones for each modality at different positions. Since there is a strong correlation between different modalities, we add a correlation attention module to the dual-attention fusion block to form the tri-attention fusion block. In the correlation attention module, a correlation description block first learns the correlation between modalities, and a constraint based on this correlation then guides the network to learn the latent correlated features that are more relevant for segmentation. Finally, the fused feature representation is projected by the decoder to obtain the segmentation results. Our experimental results on the BraTS 2018 dataset for brain tumor segmentation demonstrate the effectiveness of the proposed method.

Conditional generator and multi-source correlation guided brain tumor segmentation with missing MR modalities

May 27, 2021

Abstract: Brain tumors are among the highest-risk cancers, with a 5-year survival rate of only about 36%. Accurate diagnosis of brain tumors is critical for treatment planning. However, complete data are not always available in clinical scenarios. In this paper, we propose a novel brain tumor segmentation network to deal with the missing data issue. To compensate for missing data, we propose to use a conditional generator to generate the missing modality conditioned on the available modalities. As the modalities are strongly correlated in the tumor region, we design a correlation constraint network to leverage the multi-source information. On the one hand, the correlation constraint network helps the conditional generator produce a missing modality that preserves the multi-source correlation with the available modalities. On the other hand, it guides the segmentation network to learn correlated feature representations that improve the segmentation performance. The proposed network consists of a conditional generator, a correlation constraint network and a segmentation network. We carried out extensive experiments on the BraTS 2018 dataset to evaluate the proposed method. The experimental results demonstrate the importance of the proposed components and the superior performance of the proposed method compared with state-of-the-art methods.

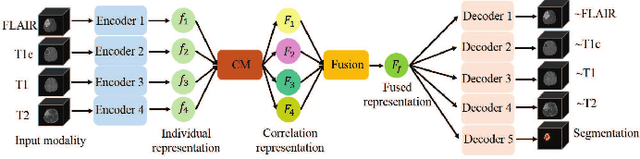

Latent Correlation Representation Learning for Brain Tumor Segmentation with Missing MRI Modalities

Apr 20, 2021

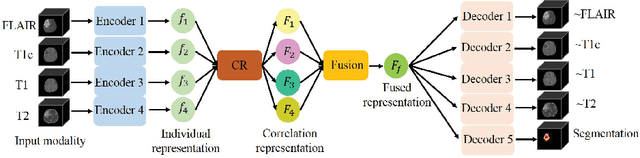

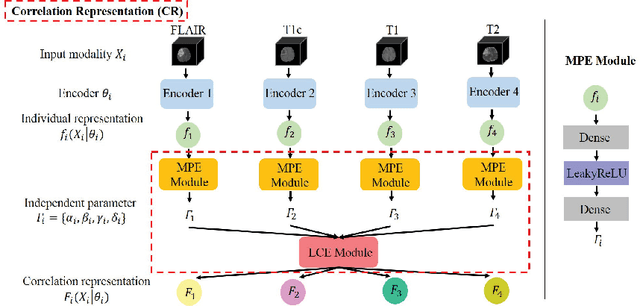

Abstract: Magnetic Resonance Imaging (MRI) is a widely used imaging technique for assessing brain tumors. Accurately segmenting brain tumors from MR images is key to clinical diagnosis and treatment planning. In addition, multi-modal MR images can provide complementary information for accurate brain tumor segmentation. However, some imaging modalities are commonly missing in clinical practice. In this paper, we present a novel brain tumor segmentation algorithm for missing modalities. Since there is a strong correlation between the modalities, a correlation model is proposed to explicitly represent the latent multi-source correlation. Thanks to the obtained correlation representation, the segmentation becomes more robust when a modality is missing. First, the individual representation produced by each encoder is used to estimate the modality-independent parameter. Then, the correlation model transforms all the individual representations into latent multi-source correlation representations. Finally, the correlation representations across modalities are fused via an attention mechanism into a shared representation to emphasize the most important features for segmentation. We evaluate our model on the BraTS 2018 and BraTS 2019 datasets; it outperforms the current state-of-the-art methods and produces robust results when one or more modalities are missing.

* 12 pages, 10 figures, accepted by IEEE Transactions on Image Processing (8 April 2021). arXiv admin note: text overlap with arXiv:2003.08870, arXiv:2102.03111

3D Medical Multi-modal Segmentation Network Guided by Multi-source Correlation Constraint

Feb 05, 2021

Abstract: In multimodal segmentation, the correlation between different modalities can be exploited to improve the segmentation results. In this paper, we propose a multi-modality segmentation network with a correlation constraint. Our network includes N model-independent encoding paths for N image sources, a correlation constraint block, a feature fusion block, and a decoding path. The model-independent encoding paths capture modality-specific features from the N modalities. Since there is a strong correlation between different modalities, we first propose a linear correlation block to learn the correlation between modalities; a loss function based on the linear correlation block then guides the network to learn the correlated features. This block forces the network to learn the latent correlated features that are more relevant for segmentation. Since not all the features extracted by the encoders are useful for segmentation, we propose a dual-attention-based fusion block that recalibrates the features along the modality and spatial paths, suppressing less informative features and emphasizing the useful ones. The fused feature representation is finally projected by the decoder to obtain the segmentation result. Our experimental results on the BraTS 2018 dataset for brain tumor segmentation demonstrate the effectiveness of the proposed method.

A review: Deep learning for medical image segmentation using multi-modality fusion

Apr 22, 2020

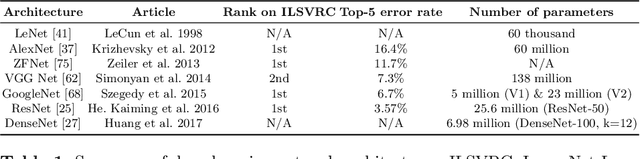

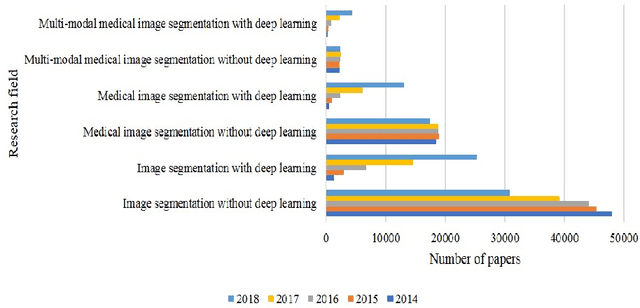

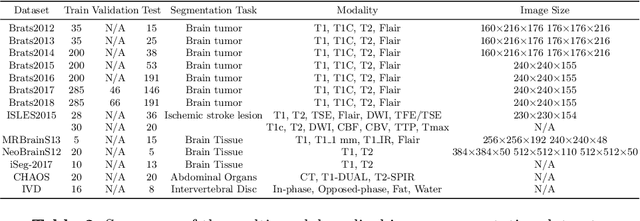

Abstract: Multi-modality is widely used in medical imaging because it can provide multiple kinds of information about a target (tumor, organ or tissue). Segmentation using multi-modality consists of fusing this information to improve the segmentation. Recently, deep learning-based approaches have achieved state-of-the-art performance in image classification, segmentation, object detection and tracking tasks. Owing to their self-learning and generalization ability over large amounts of data, deep learning methods have also gained great interest in multi-modal medical image segmentation. In this paper, we give an overview of deep learning-based approaches for multi-modal medical image segmentation. First, we introduce the general principles of deep learning and multi-modal medical image segmentation. Second, we present different deep learning network architectures, then analyze their fusion strategies and compare their results. Early fusion is commonly used, since it is simple and shifts the focus to the subsequent segmentation network architecture. Late fusion, however, pays more attention to the fusion strategy in order to learn the complex relationships between different modalities. In general, late fusion can give more accurate results than early fusion if the fusion method is effective enough. We also discuss some common problems in medical image segmentation. Finally, we summarize and provide some perspectives on future research.

* 26 pages, 8 figures

An automatic COVID-19 CT segmentation network using spatial and channel attention mechanism

Apr 21, 2020

Abstract: The coronavirus disease (COVID-19) pandemic has had a devastating effect on global public health. Computed Tomography (CT) is an effective tool for COVID-19 screening. It is of great importance to segment COVID-19 lesions from CT rapidly and accurately to support diagnosis and patient monitoring. In this paper, we propose a U-Net-based segmentation network using an attention mechanism. As not all the features extracted by the encoders are useful for segmentation, we propose to incorporate an attention mechanism, comprising a spatial and a channel attention, into a U-Net architecture to re-weight the feature representation spatially and channel-wise and thus capture rich contextual relationships for better feature representation. In addition, the focal Tversky loss is introduced to deal with small lesion segmentation. The experimental results, evaluated on a COVID-19 CT segmentation dataset with 473 CT slices, demonstrate that the proposed method achieves accurate and rapid COVID-19 segmentation. The method takes only 0.29 seconds to segment a single CT slice. The obtained Dice Score, Sensitivity and Specificity are 83.1%, 86.7% and 99.3%, respectively.
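
The focal Tversky loss mentioned above for small lesion segmentation can be sketched as follows. This follows one common formulation of the loss; the parameter values `alpha=0.7`, `beta=0.3` and `gamma=0.75` are assumptions chosen for illustration, since the abstract does not state the settings used in the paper.

```python
def focal_tversky_loss(probabilities, target, alpha=0.7, beta=0.3,
                       gamma=0.75, eps=1e-7):
    """Focal Tversky loss on flat lists of predicted probabilities and 0/1 labels.

    Tversky index: TP / (TP + alpha * FN + beta * FP).
    With alpha > beta, missed lesion pixels (false negatives) are penalized
    more than false positives. The exponent gamma reshapes the loss;
    values below 1 put more weight on hard examples with a low Tversky index.
    """
    tp = sum(p * t for p, t in zip(probabilities, target))
    fn = sum((1 - p) * t for p, t in zip(probabilities, target))
    fp = sum(p * (1 - t) for p, t in zip(probabilities, target))
    tversky = (tp + eps) / (tp + alpha * fn + beta * fp + eps)
    # Clamp at zero to guard against tiny negative values from rounding.
    return max(0.0, 1.0 - tversky) ** gamma
```

With `alpha = beta = 0.5` and `gamma = 1`, the expression reduces to one minus the soft Dice score, which shows how the loss generalizes the Dice loss.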

Brain tumor segmentation with missing modalities via latent multi-source correlation representation

Mar 19, 2020

Abstract: Multimodal MR images can provide complementary information for accurate brain tumor segmentation. However, imaging modalities are commonly missing in clinical practice. Since there is a strong correlation between the modalities, a novel correlation representation block is proposed to explicitly discover the latent multi-source correlation. Thanks to the obtained correlation representation, the segmentation becomes more robust when a modality is missing. The model parameter estimation module first maps the individual representation produced by each encoder to independent parameters; then, under these parameters, the correlation expression module transforms all the individual representations into a latent multi-source correlation representation. Finally, the correlation representations across modalities are fused via an attention mechanism into a shared representation to emphasize the most important features for segmentation. We evaluate our model on the BraTS 2018 dataset; it outperforms the current state-of-the-art method and produces robust results when one or more modalities are missing.
