Tobias Ziegler

Synaptogen: A cross-domain generative device model for large-scale neuromorphic circuit design

Apr 09, 2024

Abstract: We present a fast generative modeling approach for resistive memories that reproduces the complex statistical properties of real-world devices. To enable efficient modeling of analog circuits, the model is implemented in Verilog-A. By training on extensive measurement data of integrated 1T1R arrays (6,000 cycles of 512 devices), an autoregressive stochastic process accurately accounts for the cross-correlations between the switching parameters, while non-linear transformations ensure agreement with both cycle-to-cycle (C2C) and device-to-device (D2D) variability. Benchmarks show that this statistically comprehensive model achieves read/write throughputs exceeding those of even highly simplified and deterministic compact models.
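
As a rough illustration of the idea described in this abstract, the sketch below draws correlated switching parameters from a first-order autoregressive Gaussian process and maps them through a non-linear (exponential) transformation. It is not the paper's model: the function name `simulate_cycles`, the two parameters (`hrs`, `lrs`), the coefficients `a` and `rho`, and all numeric values are assumptions chosen only for demonstration.

```python
import math
import random


def simulate_cycles(n_cycles, a=0.8, rho=0.5, seed=0):
    """Return n_cycles (hrs, lrs) resistance pairs for one hypothetical device.

    a   : cycle-to-cycle autoregressive coefficient (assumed value)
    rho : cross-correlation between the two latent parameters (assumed value)
    """
    rng = random.Random(seed)

    # Per-device offsets stand in for device-to-device (D2D) variability.
    mu_hrs = math.log(1e5) + rng.gauss(0.0, 0.1)
    mu_lrs = math.log(1e4) + rng.gauss(0.0, 0.1)

    # Start the latent state in its stationary distribution (unit variance).
    x_hrs = rng.gauss(0.0, 1.0)
    x_lrs = rho * x_hrs + math.sqrt(1.0 - rho**2) * rng.gauss(0.0, 1.0)

    # Scaling the noise by sqrt(1 - a^2) keeps the latent variance at 1.
    scale = math.sqrt(1.0 - a**2)
    cycles = []
    for _ in range(n_cycles):
        # Correlated innovations give the cross-correlation between parameters.
        e_hrs = rng.gauss(0.0, 1.0)
        e_lrs = rho * e_hrs + math.sqrt(1.0 - rho**2) * rng.gauss(0.0, 1.0)
        # AR(1) update models cycle-to-cycle (C2C) memory.
        x_hrs = a * x_hrs + scale * e_hrs
        x_lrs = a * x_lrs + scale * e_lrs
        # Non-linear transform from Gaussian latents to positive resistances.
        cycles.append((math.exp(mu_hrs + 0.3 * x_hrs),
                       math.exp(mu_lrs + 0.2 * x_lrs)))
    return cycles
```

Calling `simulate_cycles(6000, seed=k)` for different seeds `k` would mimic a set of devices, each with its own offsets and its own cycle history.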

X-TIME: An in-memory engine for accelerating machine learning on tabular data with CAMs

Apr 05, 2023

Abstract: Structured, or tabular, data is the most common format in data science. While deep learning models have proven formidable in learning from unstructured data such as images or speech, they are less accurate than simpler approaches when learning from tabular data. In contrast, modern tree-based Machine Learning (ML) models shine in extracting relevant information from structured data. An essential requirement in data science is to reduce model inference latency in cases where, for example, models are used in a closed loop with simulation to accelerate scientific discovery. However, the hardware acceleration community has mostly focused on deep neural networks and largely ignored other forms of machine learning. Previous work has described the use of an analog content addressable memory (CAM) component for efficiently mapping random forests. In this work, we focus on an overall analog-digital architecture implementing a novel increased-precision analog CAM and a programmable network-on-chip, allowing the inference of state-of-the-art tree-based ML models such as XGBoost and CatBoost. Results evaluated in a single chip at 16 nm technology show 119x lower latency at 9740x higher throughput compared with a state-of-the-art GPU, with a 19 W peak power consumption.
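
To make the idea of mapping a tree model onto a CAM more concrete, here is a minimal software sketch. It assumes that each root-to-leaf path is stored as one row of per-feature intervals and that a query matches a row when every feature falls inside that row's interval. The tree encoding, `tree_to_rows`, and `cam_lookup` are hypothetical names for illustration and do not describe the X-TIME hardware or its programming interface.

```python
import math

# Hypothetical tree: internal nodes test x[feature] < threshold.
TREE = {
    "feature": 0, "threshold": 0.5,
    "left": {"leaf": 1.0},
    "right": {
        "feature": 1, "threshold": 2.0,
        "left": {"leaf": -1.0},
        "right": {"leaf": 3.0},
    },
}


def tree_to_rows(node, n_features, bounds=None):
    """Flatten a tree into rows of ([(lo, hi), ...], leaf_value)."""
    if bounds is None:
        # An unconstrained feature matches any value ("don't care").
        bounds = [(-math.inf, math.inf)] * n_features
    if "leaf" in node:
        return [(list(bounds), node["leaf"])]
    f, t = node["feature"], node["threshold"]
    lo, hi = bounds[f]
    left = list(bounds)
    left[f] = (lo, min(hi, t))      # left branch: x[f] < t
    right = list(bounds)
    right[f] = (max(lo, t), hi)     # right branch: x[f] >= t
    return (tree_to_rows(node["left"], n_features, left)
            + tree_to_rows(node["right"], n_features, right))


def cam_lookup(rows, x):
    """Return the leaf value of the row whose intervals all contain x.

    A CAM would compare every row at once; this loop only emulates that.
    """
    for bounds, value in rows:
        if all(lo <= xi < hi for (lo, hi), xi in zip(bounds, x)):
            return value
    raise ValueError("no row matched the query")
```

For an ensemble, one would build rows for every tree and sum the matched leaf values, one match per tree.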

Optical link acquisition for the LISA mission with in-field pointing architecture

Feb 23, 2023

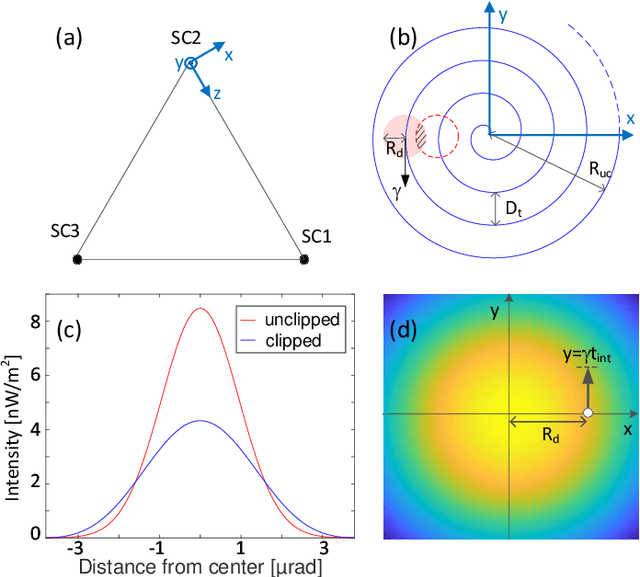

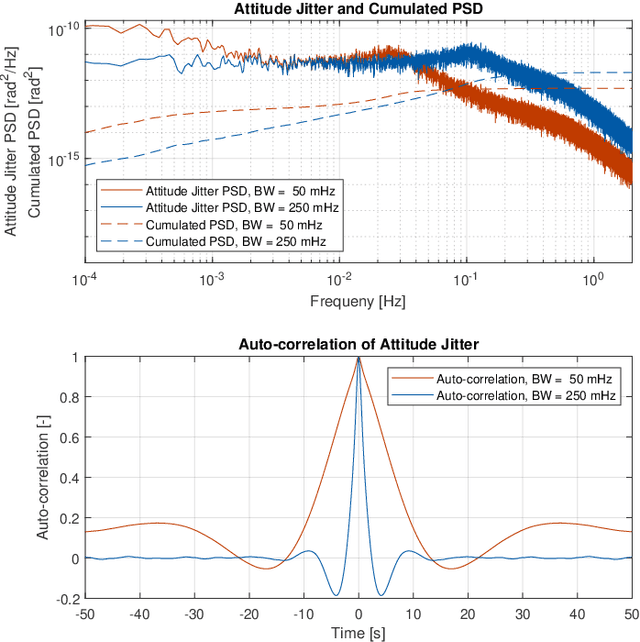

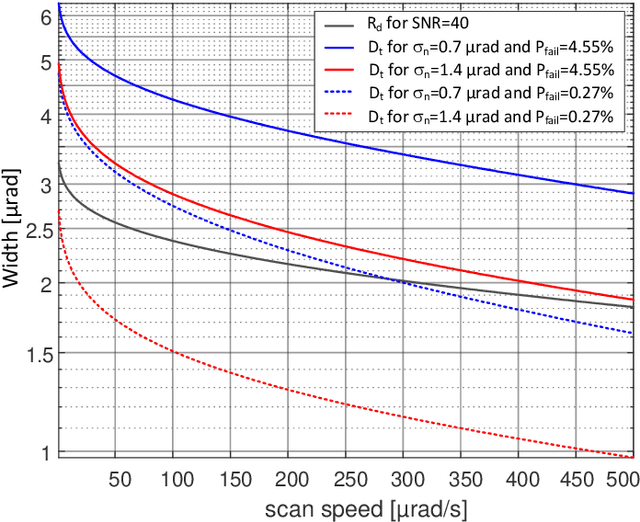

Abstract: We present a comprehensive simulation of the spatial acquisition of optical links for the LISA mission in the in-field pointing architecture, in which a fast pointing mirror moves the field of view of the optical transceiver. This architecture was studied as an alternative to the baselined telescope pointing architecture. The simulation includes a representative model of the far-field intensity distribution, a model of the beam detection process based on a realistic detector model, and a model of the expected platform jitter for two alternative control modes with different associated jitter spectra. For optimally adjusted detector settings and accounting for the actual far-field beam profile, we investigate the dependence of acquisition performance on the jitter spectrum and the track width of the search spiral, while scan speed and detector integration time are varied over several orders of magnitude. Results show a strong dependence of the probability of acquisition failure on the width of the auto-correlation function of the jitter spectrum, which we compare to predictions of analytical models. Depending on the choice of scan speed, three different regimes may be entered, which differ in failure probability by several orders of magnitude. We then use these results to optimize the acquisition architecture for the given jitter spectra with respect to failure rate and overall duration, concluding that the full constellation could be acquired on average in less than one minute. Our method and findings can be applied to any other space mission using a fine-steering mirror for link acquisition.

* 10 pages, 7 figures, two columns
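
The search spiral mentioned in the abstract can be pictured with a small sketch. It assumes an Archimedean spiral whose successive turns are separated by the track width and which is traversed at roughly constant linear scan speed; the function `spiral_points` and its parameters are hypothetical and are not taken from the paper's simulation.

```python
import math


def spiral_points(track_width, scan_speed, dt, max_radius):
    """Sample an Archimedean search spiral at approximately constant speed.

    track_width : radial distance between successive turns
    scan_speed  : linear speed along the spiral
    dt          : time between samples (e.g. a detector integration time)
    max_radius  : radius of the uncertainty region to cover
    """
    b = track_width / (2.0 * math.pi)   # r = b * theta
    theta = 0.0
    points = []
    while b * theta <= max_radius:
        r = b * theta
        points.append((r * math.cos(theta), r * math.sin(theta)))
        # Arc length per unit angle is sqrt(r^2 + b^2); advance by speed * dt.
        theta += scan_speed * dt / math.hypot(r, b)
    return points
```

Platform jitter could then be added as a random offset to each sample before checking whether the target lies within the detector's field of view.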
