Timo Ojala

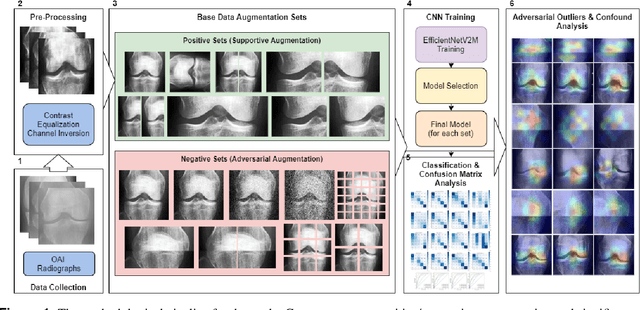

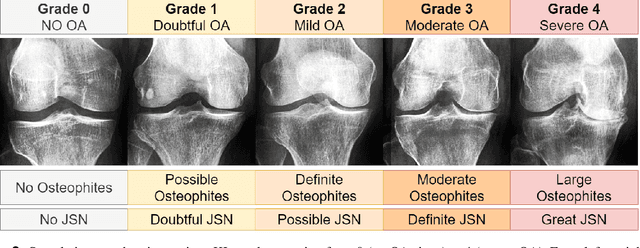

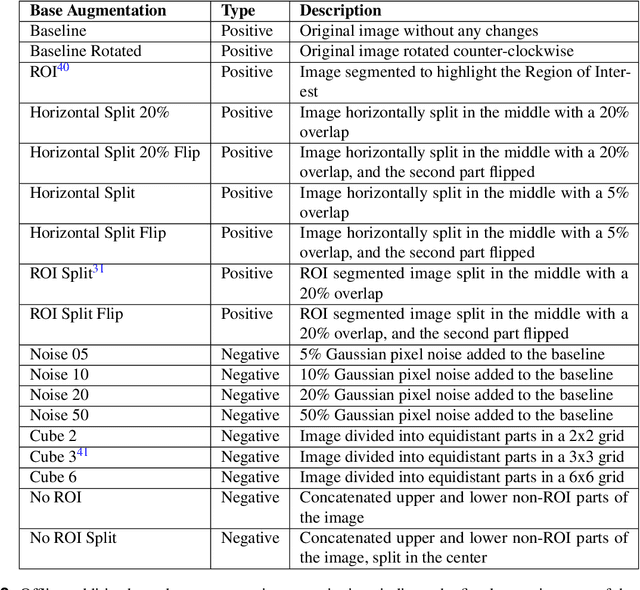

Exploring the Efficacy of Base Data Augmentation Methods in Deep Learning-Based Radiograph Classification of Knee Joint Osteoarthritis

Nov 10, 2023

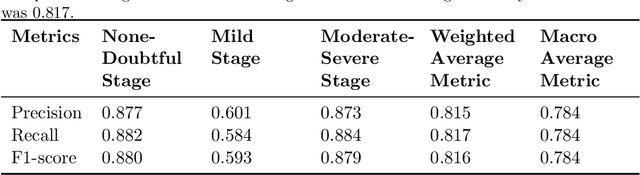

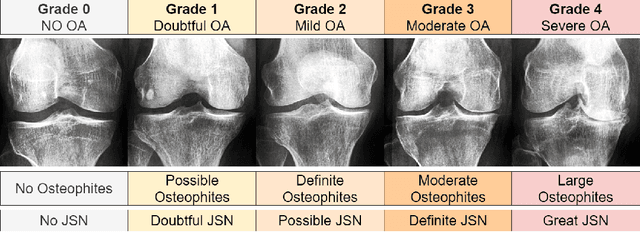

Abstract: Diagnosing knee joint osteoarthritis (KOA), a major cause of disability worldwide, is challenging due to subtle radiographic indicators and the varied progression of the disease. Using deep learning for KOA diagnosis requires broad, comprehensive datasets. However, obtaining these datasets poses significant challenges due to patient privacy concerns and data collection restrictions. Additive data augmentation, which enhances data variability, emerges as a promising solution. Yet, it is unclear which augmentation techniques are most effective for KOA. This study explored various data augmentation methods, including adversarial augmentations, and their impact on KOA classification model performance. While some techniques improved performance, other commonly used techniques underperformed. We identified potential confounding regions within the images using adversarial augmentation. This was evidenced by our models' ability to classify KL0 and KL4 grades accurately with the knee joint omitted. This observation suggested a model bias, in which the model might exploit unrelated features currently present in radiographs for classification. Interestingly, removing the knee joint also led to an unexpected improvement in KL1 classification accuracy. To better visualize these paradoxical effects, we employed Grad-CAM, highlighting the associated regions. Our study underscores the need for careful technique selection to improve model performance and for identifying and managing potential confounding regions in radiographic KOA deep learning.

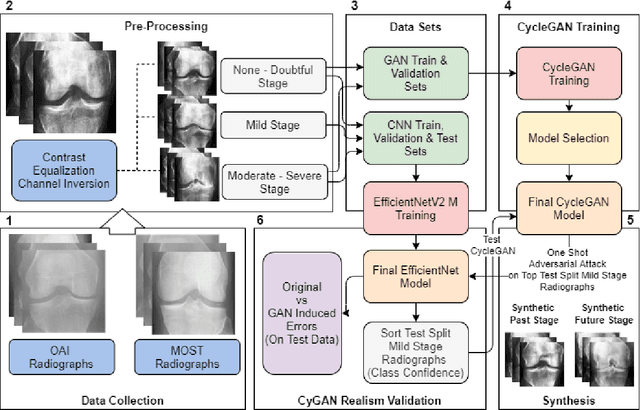

Synthesizing Bidirectional Temporal States of Knee Osteoarthritis Radiographs with Cycle-Consistent Generative Adversarial Neural Networks

Nov 10, 2023

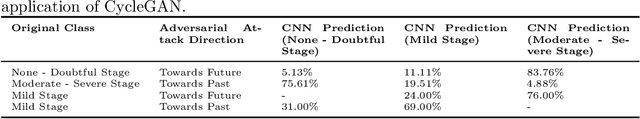

Abstract: Knee Osteoarthritis (KOA), a leading cause of disability worldwide, is challenging to detect early due to subtle radiographic indicators. Diverse, extensive datasets are needed but are challenging to compile because of privacy, data collection limitations, and the progressive nature of KOA. However, a model capable of projecting genuine radiographs into different OA stages could augment data pools, enhance algorithm training, and offer pre-emptive prognostic insights. In this study, we trained a CycleGAN model to synthesize past and future stages of KOA on any genuine radiograph. The model was validated using a Convolutional Neural Network that was deceived into misclassifying disease stages in transformed images, demonstrating the CycleGAN's ability to effectively transform disease characteristics forward or backward in time. The model was particularly effective in synthesizing future disease states and showed an exceptional ability to retroactively transition late-stage radiographs to earlier stages by eliminating osteophytes and expanding knee joint space, signature characteristics of None or Doubtful KOA. The model's results signify a promising potential for enhancing diagnostic models, data augmentation, and educational and prognostic usage in healthcare. Nevertheless, further refinement, validation, and a broader evaluation process encompassing both CNN-based assessments and expert medical feedback are emphasized for future research and development.

Adaptive Variance Thresholding: A Novel Approach to Improve Existing Deep Transfer Vision Models and Advance Automatic Knee-Joint Osteoarthritis Classification

Nov 10, 2023

Abstract: Knee-Joint Osteoarthritis (KOA) is a prevalent cause of global disability and is inherently complex to diagnose due to its subtle radiographic markers and individualized progression. One promising classification avenue involves applying deep learning methods; however, these techniques demand extensive, diversified datasets, which pose substantial challenges due to medical data collection restrictions. Existing practices typically resort to smaller datasets and transfer learning. However, this approach often inherits unnecessary pre-learned features that can clutter the classifier's vector space, potentially hampering performance. This study proposes a novel paradigm for improving post-training specialized classifiers by introducing adaptive variance thresholding (AVT) followed by Neural Architecture Search (NAS). This approach led to two key outcomes: an increase in the initial accuracy of the pre-trained KOA models and a 60-fold reduction in the NAS input vector space, thus facilitating faster inference speed and a more efficient hyperparameter search. We also applied this approach to an external model trained for KOA classification. Despite its initial performance, the application of our methodology improved its average accuracy, making it one of the top three KOA classification models.
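
The abstract does not say how AVT chooses its threshold, so the following is only a minimal sketch of plain variance-based feature selection applied to feature vectors from a pre-trained model. The function name `variance_threshold`, the `keep_fraction` parameter, the synthetic data, and the rule of keeping the highest-variance 1/60 of the features (picked to mirror the reported 60-fold reduction) are all assumptions made for illustration, not the authors' method.

```python
import numpy as np

def variance_threshold(features, keep_fraction=1 / 60):
    """Keep only the highest-variance feature columns.

    Hypothetical helper: a stand-in for a variance-based selection step,
    not the AVT procedure from the paper.
    """
    variances = features.var(axis=0)
    n_keep = max(1, int(round(features.shape[1] * keep_fraction)))
    # Indices of the n_keep largest variances, returned in ascending order.
    keep = np.sort(np.argsort(variances)[::-1][:n_keep])
    return features[:, keep], keep

# Synthetic stand-in for 600-dimensional features of 200 images
# (assumption: real features would come from a pre-trained network).
rng = np.random.default_rng(0)
scales = rng.uniform(0.0, 1.0, size=600)
features = rng.normal(size=(200, 600)) * scales

reduced, kept = variance_threshold(features)
print(features.shape, "->", reduced.shape)
```

A downstream classifier or architecture search would then operate on `reduced` instead of `features`, which is where a smaller input space could speed up the search.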

Improving Performance in Colorectal Cancer Histology Decomposition using Deep and Ensemble Machine Learning

Oct 25, 2023

Abstract: In routine colorectal cancer management, histologic samples stained with hematoxylin and eosin are commonly used. Nonetheless, their potential for defining objective biomarkers for patient stratification and treatment selection is still being explored. The current gold standard relies on expensive and time-consuming genetic tests. However, recent research highlights the potential of convolutional neural networks (CNNs) in facilitating the extraction of clinically relevant biomarkers from these readily available images. These CNN-based biomarkers can predict patient outcomes comparably to gold standards, with the added advantages of speed, automation, and minimal cost. The predictive potential of CNN-based biomarkers fundamentally relies on the ability of CNNs to accurately classify diverse tissue types from whole slide microscope images. Consequently, enhancing the accuracy of tissue class decomposition is critical to amplifying the prognostic potential of imaging-based biomarkers. This study introduces a hybrid deep and ensemble machine learning model that surpassed all preceding solutions for this classification task. Our model achieved 96.74% accuracy on the external test set and 99.89% on the internal test set. Recognizing the potential of these models in advancing the task, we have made them publicly available for further research and development.

Leaning-Based Control of an Immersive-Telepresence Robot

Aug 22, 2022

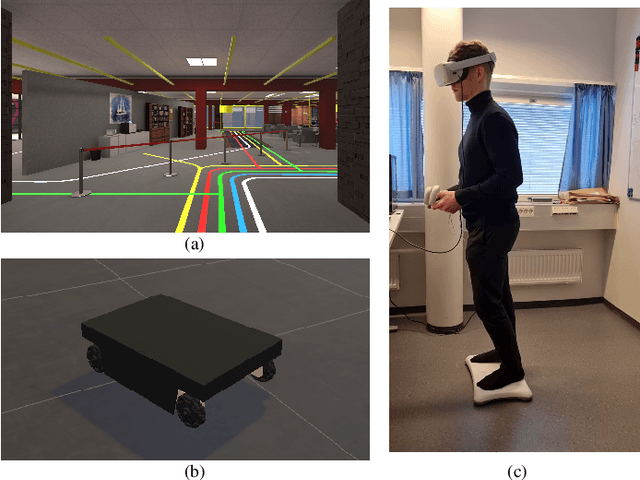

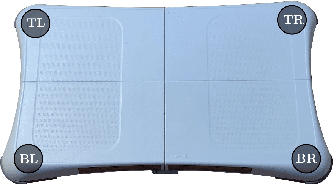

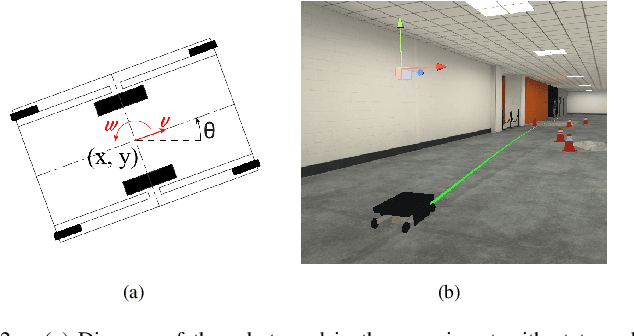

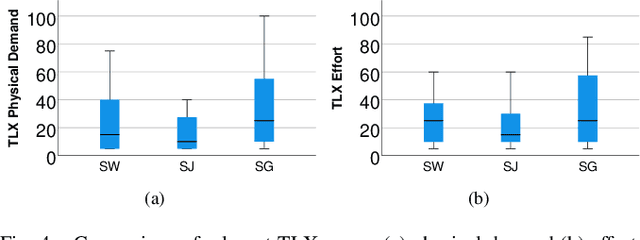

Abstract: In this paper, we present an implementation of a leaning-based control of a differential drive telepresence robot and a user study in simulation, with the goal of bringing the same functionality to a real telepresence robot. The participants used a balance board to control the robot and viewed the virtual environment through a head-mounted display. The main motivation for using a balance board as the control device stems from Virtual Reality (VR) sickness; even small movements of your own body matching the motions seen on the screen decrease the sensory conflict between vision and vestibular organs, which lies at the heart of most theories regarding the onset of VR sickness. To test the hypothesis that the balance board as a control method would be less sickening than using joysticks, we designed a user study (N=32, 15 women) in which the participants drove a simulated differential drive robot in a virtual environment with either a Nintendo Wii Balance Board or joysticks. However, our pre-registered main hypotheses were not supported; the joystick did not cause any more VR sickness in the participants than the balance board, and the board proved to be statistically significantly more difficult to use, both subjectively and objectively. Analyzing the open-ended questions revealed these results to be likely connected, meaning that the difficulty of use seemed to affect sickness; even unlimited training time before the test did not make the board as easy to use as the familiar joystick. Thus, making the board easier to use is key to realizing its potential; we present a few possibilities towards this goal.

HI-DWA: Human-Influenced Dynamic Window Approach for Shared Control of a Telepresence Robot

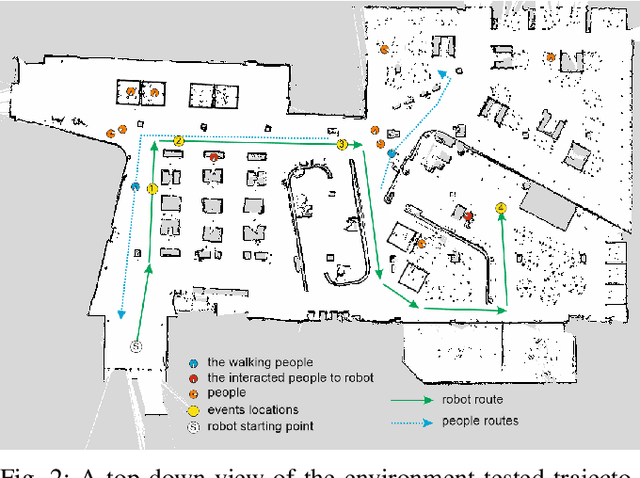

Mar 05, 2022

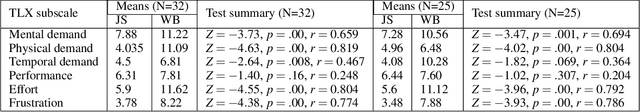

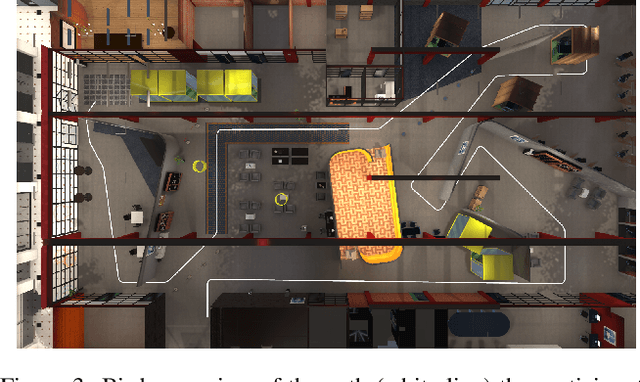

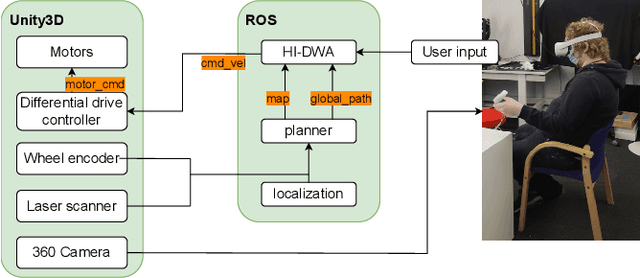

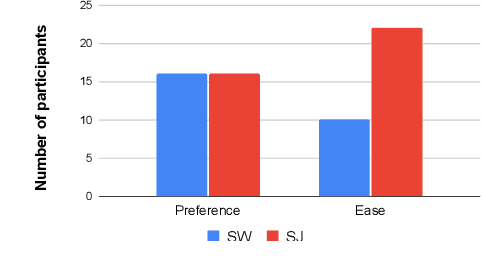

Abstract: This paper considers the problem of enabling the user to modify the path of a telepresence robot. The robot is capable of autonomously navigating to the goal indicated by the user, but the user might still want to modify the path without changing the goal, for example, to go further away from other people, or to go closer to landmarks she wants to see on the way. We propose Human-Influenced Dynamic Window Approach (HI-DWA), a shared control method aimed at telepresence robots and based on the Dynamic Window Approach (DWA), that allows the user to influence the control input given to the robot. To verify the proposed method, we performed a user study (N=32) in Virtual Reality (VR) to compare HI-DWA with switching between autonomous navigation and manual control for controlling a simulated telepresence robot moving in a virtual environment. Results showed that users reached their goal faster using the HI-DWA controller and found it easier to use. Preference between the two methods was split equally. Qualitative analysis revealed that a major reason given by the participants who preferred switching between the two modes was the feeling of control. We also analyzed the effect of different input methods, joystick and gesture, on the preference and perceived workload.
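
The abstract does not give the HI-DWA objective, so the following is only a toy sketch of the general idea: sample velocity commands inside a dynamic window and score them with a goal term plus a term that rewards agreement with the user's input. The function names, the `influence` weight, the scoring terms, and all numeric values are hypothetical, and obstacle clearance (a core part of DWA) is left out for brevity.

```python
def dynamic_window(v, w, dt, a_max, alpha_max, v_max, w_max):
    """Velocities reachable within dt given acceleration limits."""
    return (max(0.0, v - a_max * dt), min(v_max, v + a_max * dt),
            max(-w_max, w - alpha_max * dt), min(w_max, w + alpha_max * dt))

def choose_command(v, w, goal_heading_error, user_w, influence,
                   dt=0.5, horizon=1.0, n=11,
                   a_max=1.0, alpha_max=2.0, v_max=1.0, w_max=1.5):
    """Pick the (linear, angular) velocity pair with the best blended score.

    Hypothetical scoring: this is not the objective used in the paper.
    """
    v_lo, v_hi, w_lo, w_hi = dynamic_window(v, w, dt, a_max, alpha_max,
                                            v_max, w_max)
    best, best_score = None, float("-inf")
    for i in range(n):
        cv = v_lo + i * (v_hi - v_lo) / (n - 1)
        for j in range(n):
            cw = w_lo + j * (w_hi - w_lo) / (n - 1)
            # Remaining heading error if cw is held for the horizon.
            goal_score = -abs(goal_heading_error - cw * horizon)
            # Agreement with the turn rate the user is asking for.
            user_score = -abs(cw - user_w)
            score = ((1.0 - influence) * goal_score
                     + influence * user_score
                     + 0.1 * cv)  # mild preference for moving forward
            if score > best_score:
                best, best_score = (cv, cw), score
    return best

# Goal lies to the left (positive heading error); user steers right.
autonomous = choose_command(0.5, 0.0, goal_heading_error=0.8,
                            user_w=-0.6, influence=0.0)
user_led = choose_command(0.5, 0.0, goal_heading_error=0.8,
                          user_w=-0.6, influence=1.0)
print("autonomous:", autonomous, "user-led:", user_led)
```

With `influence` at 0 the sketch turns toward the goal, and with `influence` at 1 it follows the user's turn direction; intermediate values blend the two, which is the shared-control behavior the abstract describes in words.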

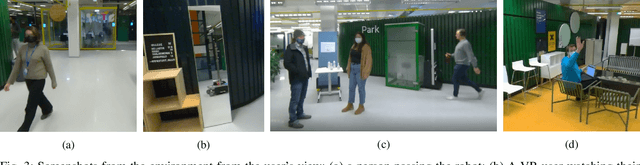

A Study of Preference and Comfort for Users Immersed in a Telepresence Robot

Mar 05, 2022

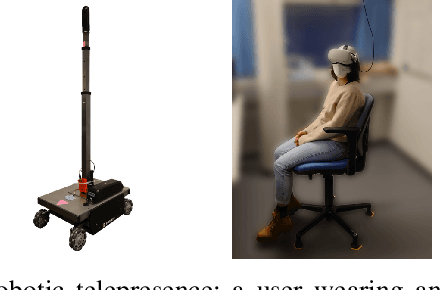

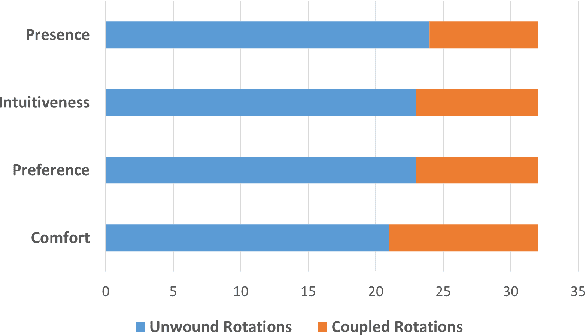

Abstract: In this paper, we show that unwinding the rotations of a user immersed in a telepresence robot is preferred and may increase the feeling of presence or "being there". By immersive telepresence, we mean a scenario where a user wearing a head-mounted display embodies a mobile robot equipped with a 360° camera in another location, such that the user can move the robot and communicate with people around it. By unwinding the rotations, the user never perceives rotational motion through the head-mounted display while staying stationary, avoiding the sensory mismatch that causes a major part of VR sickness. We performed a user study (N=32) on a Dolly mobile robot platform, mimicking an earlier similar study done in simulation. Unlike the simulated study, in this study there is no significant difference in the VR sickness suffered by the participants, or in the condition they find more comfortable (unwinding or automatic rotations). However, participants still prefer the unwinding condition, and they judge it to render a stronger feeling of presence, a major piece in natural communication. We show that participants aboard a real telepresence robot judge distances to be suitable much as they did in simulation, presenting further evidence on the applicability of VR as a research platform for robotics and human-robot interaction.

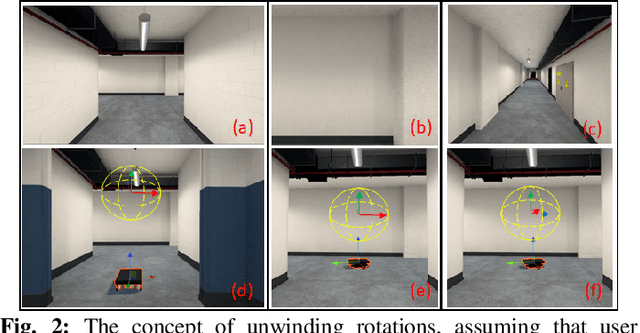

Unwinding Rotations Improves User Comfort with Immersive Telepresence Robots

Jan 07, 2022

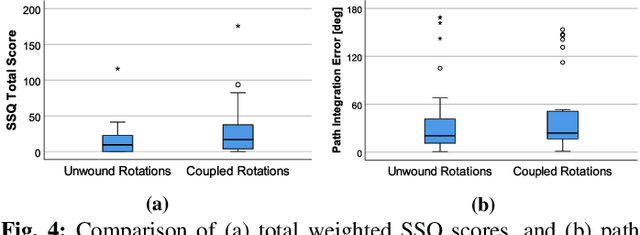

Abstract: We propose unwinding the rotations experienced by the user of an immersive telepresence robot to improve comfort and reduce VR sickness of the user. By immersive telepresence we refer to a situation where a 360° camera on top of a mobile robot is streaming video and audio into a head-mounted display worn by a remote user possibly far away. Thus, it enables the user to be present at the robot's location, look around by turning the head and communicate with people near the robot. By unwinding the rotations of the camera frame, the user's viewpoint is not changed when the robot rotates. The user can change her viewpoint only by physically rotating in her local setting; as visual rotation without the corresponding vestibular stimulation is a major source of VR sickness, physical rotation by the user is expected to reduce VR sickness. We implemented unwinding the rotations for a simulated robot traversing a virtual environment and ran a user study (N=34) comparing unwinding rotations to user's viewpoint turning when the robot turns. Our results show that the users found unwound rotations preferable and more comfortable and that it reduced their level of VR sickness. We also present further results about the users' path integration capabilities, viewing directions, and subjective observations of the robot's speed and distances to simulated people and objects.
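
The unwinding idea can be illustrated with yaw angles alone. The following is a minimal sketch, assuming a planar robot, a 360° camera fixed to the robot body, and angles in radians; the function names and the yaw-only model are assumptions for illustration and not the authors' implementation.

```python
import math

def wrap_angle(a):
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi

def view_yaw_in_camera_frame(head_yaw, robot_yaw, unwind=True):
    """Direction to sample from the 360-degree image, in the camera frame.

    With unwinding, the robot's yaw is subtracted so that the robot's
    rotation is cancelled out of what the user sees.
    """
    if unwind:
        return wrap_angle(head_yaw - robot_yaw)
    return wrap_angle(head_yaw)

def world_view_yaw(head_yaw, robot_yaw, unwind=True):
    """World-frame direction the user ends up looking at."""
    return wrap_angle(
        robot_yaw + view_yaw_in_camera_frame(head_yaw, robot_yaw, unwind))

head_yaw = 0.3  # the user keeps their head still
for robot_yaw in (0.0, 0.5, 1.0, 2.0):
    unwound = world_view_yaw(head_yaw, robot_yaw, unwind=True)
    coupled = world_view_yaw(head_yaw, robot_yaw, unwind=False)
    print(f"robot {robot_yaw:.1f}: unwound {unwound:.2f}, coupled {coupled:.2f}")
```

In the unwound case the world-frame viewing direction stays equal to the head yaw however the robot turns, so the view rotates only when the user physically rotates; in the coupled case the view turns with the robot.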
