Tilman Hinnerichs

Towards a fully declarative neuro-symbolic language

May 15, 2024

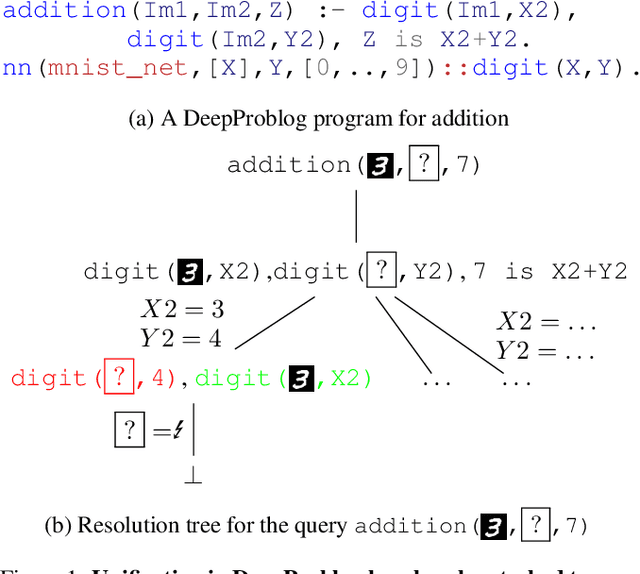

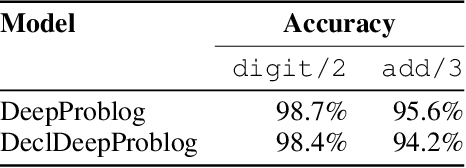

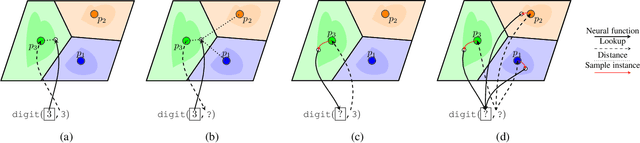

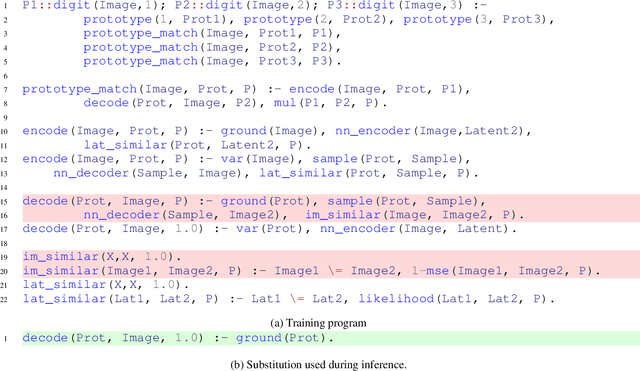

Abstract: Neuro-symbolic systems (NeSy), which claim to combine the best of both learning and reasoning capabilities of artificial intelligence, are missing a core property of reasoning systems: declarativeness. The lack of declarativeness is caused by the functional nature of neural predicates inherited from neural networks. We propose and implement a general framework for fully declarative neural predicates, which hence extends to fully declarative NeSy frameworks. We show that the declarative extension preserves the learning and reasoning capabilities and can answer arbitrary queries despite being trained on only a single query type.

FALCON: Sound and Complete Neural Semantic Entailment over ALC Ontologies

Aug 16, 2022

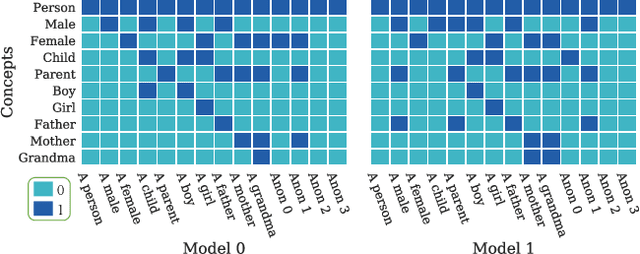

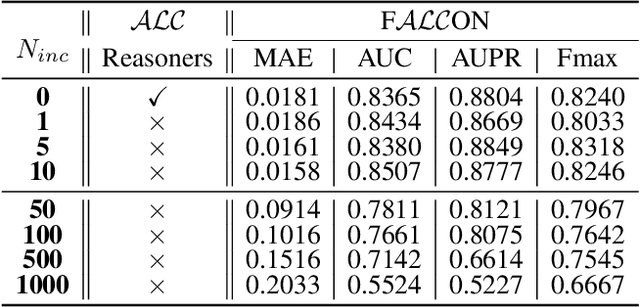

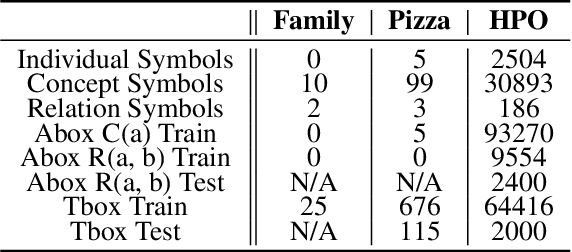

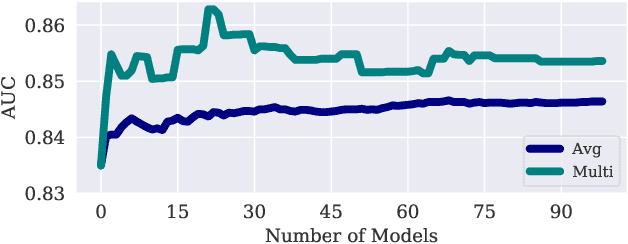

Abstract: Many ontologies, i.e., Description Logic (DL) knowledge bases, have been developed to provide rich knowledge about various domains, and many of them are based on ALC, a prototypical and expressive DL, or its extensions. The main task over ALC ontologies is computing semantic entailment. Symbolic approaches can guarantee sound and complete semantic entailment but are sensitive to inconsistency and missing information. To address this, we propose FALCON, a Fuzzy ALC Ontology Neural reasoner. FALCON uses fuzzy logic operators to generate single model structures for arbitrary ALC ontologies, and uses multiple model structures to compute semantic entailments. Theoretical results demonstrate that FALCON is guaranteed to be a sound and complete algorithm for computing semantic entailments over ALC ontologies. Experimental results show that FALCON not only enables approximate reasoning (reasoning over incomplete ontologies) and paraconsistent reasoning (reasoning over inconsistent ontologies), but also improves machine learning in the biomedical domain by incorporating background knowledge from ALC ontologies.
