Tiemin Li

Enhancing Adaptivity of Two-Fingered Object Reorientation Using Tactile-based Online Optimization of Deconstructed Actions

Mar 14, 2025

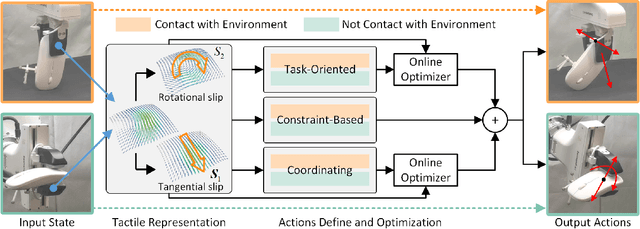

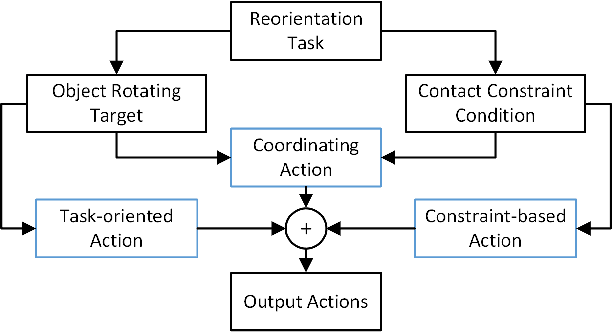

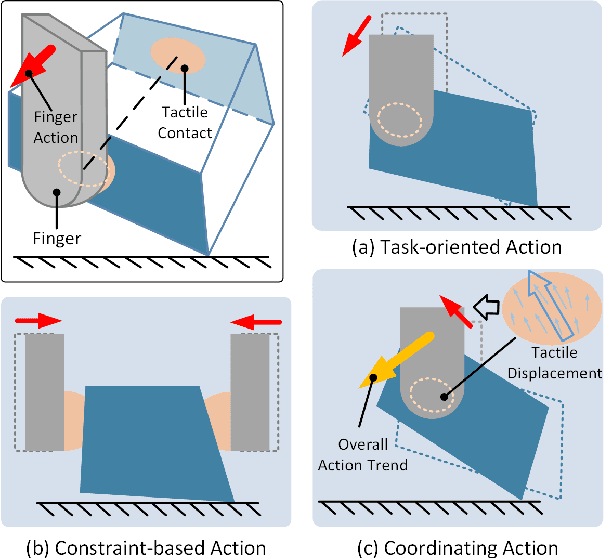

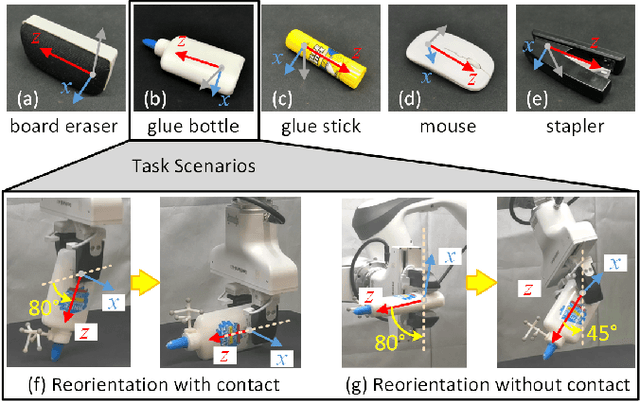

Abstract: Object reorientation is a critical task for robotic grippers, especially when manipulating objects within constrained environments. The task poses significant challenges for motion planning because of its high-dimensional action space and complex inputs, including unknown object properties and nonlinear contact forces. Traditional approaches simplify the problem by reducing degrees of freedom, limiting contact forms, or acquiring environment/object information in advance, which significantly compromises adaptability. To address these challenges, we deconstruct the complex output actions into three fundamental types based on tactile sensing: task-oriented actions, constraint-oriented actions, and coordinating actions. These actions are then optimized online using gradient optimization to enhance adaptability. Key contributions include simplifying contact state perception, decomposing complex gripper actions, and enabling online action optimization for handling unknown objects or environmental constraints. Experimental results demonstrate that the proposed method is effective across a range of everyday objects, regardless of environmental contact. Additionally, the method exhibits robust performance even in the presence of unknown contacts and nonlinear external disturbances.

Enhancing Regrasping Efficiency Using Prior Grasping Perceptions with Soft Fingertips

Mar 14, 2025

Abstract: Grasping the same object in different postures is often necessary, especially when handling tools or stacked items. Due to unknown object properties and changes in grasping posture, the required grasping force is uncertain and variable. Traditional methods rely on real-time feedback to control the grasping force cautiously, aiming to prevent slipping or damage. However, they overlook reusable information from the initial grasp, treating subsequent regrasping attempts as if they were the first, which significantly reduces efficiency. To improve this, we propose a method that utilizes perception from prior grasping attempts to predict the required grasping force, even with changes in position. We also introduce a calculation method that accounts for fingertip softness and object asymmetry. Theoretical analyses demonstrate the feasibility of predicting grasping forces across various postures after a single grasp. Experimental verifications attest to the accuracy and adaptability of our prediction method. Furthermore, results show that incorporating the predicted grasping force into feedback-based approaches significantly enhances grasping efficiency across a range of everyday objects.

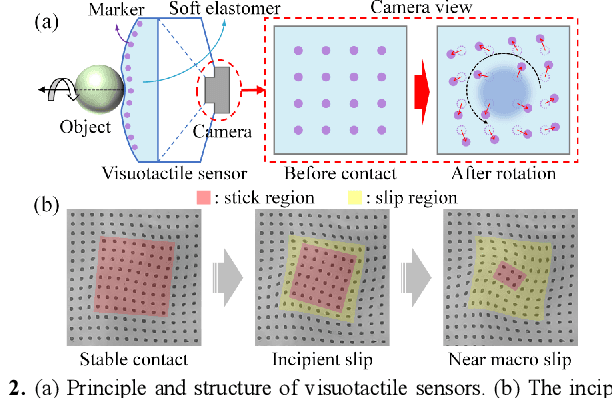

Modeling, Simulation, and Application of Spatio-Temporal Characteristics Detection in Incipient Slip

Feb 24, 2025

Abstract: Incipient slip detection provides critical feedback for robotic grasping and manipulation tasks. However, maintaining its adaptability under diverse object properties and complex working conditions remains challenging. This article highlights the importance of completely representing spatio-temporal features of slip, and proposes a novel approach for incipient slip modeling and detection. Based on the analysis of localized displacement phenomenon, we establish the relationship between the characteristic strain rate extreme events and the local slip state. This approach enables the detection of both the spatial distribution and temporal dynamics of stick-slip regions. Also, the proposed method can be applied to strain distribution sensing devices, such as vision-based tactile sensors. Simulations and prototype experiments validated the effectiveness of this approach under varying contact conditions, including different contact geometries, friction coefficients, and combined loads. Experiments demonstrated that this method not only accurately and reliably delineates incipient slip, but also facilitates friction parameter estimation and adaptive grasping control.

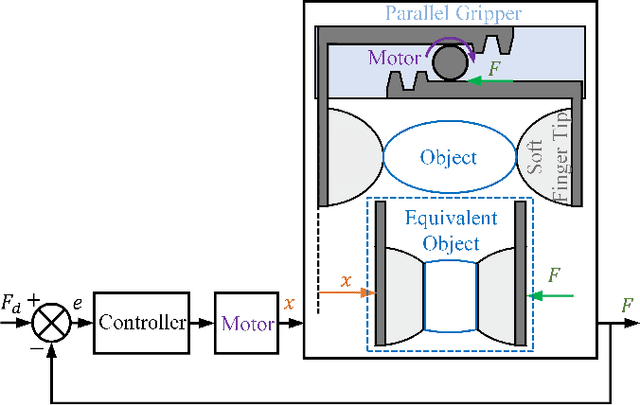

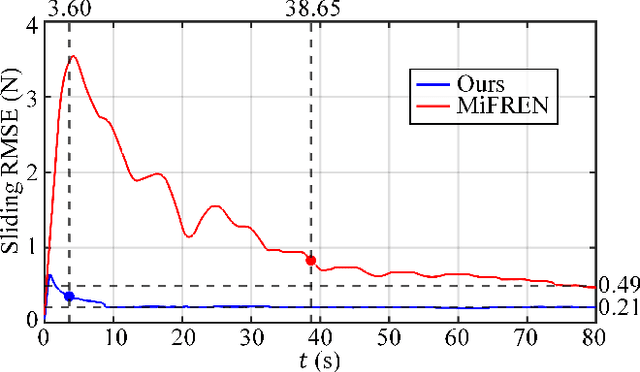

An Adaptive Grasping Force Tracking Strategy for Nonlinear and Time-Varying Object Behaviors

Dec 03, 2024

Abstract: Accurate grasp force control is one of the key skills for ensuring successful and damage-free robotic grasping of objects. Although existing methods have conducted in-depth research on slip detection and grasping force planning, they often overlook the issue of adaptive tracking of the actual force to the target force when handling objects with different material properties. The optimal parameters of a force tracking controller are significantly influenced by the object's stiffness, and many adaptive force tracking algorithms rely on stiffness estimation. However, real-world objects often exhibit viscous, plastic, or other more complex nonlinear time-varying behaviors, and existing studies provide insufficient support for these materials in terms of stiffness definition and estimation. To address this, this paper introduces the concept of generalized stiffness, extending the definition of stiffness to nonlinear time-varying grasp system models, and proposes an online generalized stiffness estimator based on Long Short-Term Memory (LSTM) networks. Based on generalized stiffness, this paper proposes an adaptive parameter adjustment strategy using a PI controller as an example, enabling dynamic force tracking for objects with varying characteristics. Experimental results demonstrate that the proposed method achieves high precision and short probing time, while showing better adaptability to non-ideal objects compared to existing methods. The method effectively solves the problem of grasp force tracking in unknown, nonlinear, and time-varying grasp systems, enhancing robotic grasping ability in unstructured environments.
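The idea of adjusting PI parameters from a stiffness estimate can be illustrated with a minimal sketch. This is not the authors' implementation: the LSTM-based generalized stiffness estimator is swapped for a plain finite-difference estimate (change in force over change in position), the grasped object is assumed to be a linear spring, and the names `ScheduledPI`, `estimate_stiffness`, `simulate_grasp`, `bandwidth`, and `ki_ratio` are hypothetical. The gains are scaled by the inverse of the estimated stiffness so that the force loop behaves similarly on soft and stiff objects.

```python
from dataclasses import dataclass


def estimate_stiffness(d_force, d_pos, k_prev, eps=1e-9, k_min=1.0):
    """Finite-difference stiffness estimate in N/m (stand-in for a learned estimator)."""
    if abs(d_pos) < eps:
        return k_prev  # too little motion to estimate; keep the previous value
    return max(d_force / d_pos, k_min)


@dataclass
class ScheduledPI:
    bandwidth: float      # desired force-loop rate in 1/s (hypothetical tuning knob)
    ki_ratio: float       # ratio ki / kp in 1/s (hypothetical tuning knob)
    integral: float = 0.0

    def step(self, f_target, f_measured, stiffness, dt):
        """Return a finger velocity command (m/s) with gains scaled by 1/stiffness."""
        error = f_target - f_measured
        self.integral += error * dt
        kp = self.bandwidth / stiffness
        ki = self.ki_ratio * kp
        return kp * error + ki * self.integral


def simulate_grasp(k_object, f_target=2.0, k_guess=1000.0, dt=1e-3, steps=3000):
    """Close the fingers on a linear-spring object (assumption) and return the final force."""
    controller = ScheduledPI(bandwidth=20.0, ki_ratio=2.0)
    x, force, k_est = 0.0, 0.0, k_guess
    for _ in range(steps):
        velocity = controller.step(f_target, force, k_est, dt)
        x_new = x + velocity * dt
        force_new = k_object * x_new  # assumed object model: F = k * x
        k_est = estimate_stiffness(force_new - force, x_new - x, k_est)
        x, force = x_new, force_new
    return force


if __name__ == "__main__":
    for k in (200.0, 5000.0):
        print(f"k = {k:7.1f} N/m -> final force {simulate_grasp(k):.4f} N")
```

Because the proportional gain is divided by the estimated stiffness, the same `bandwidth` setting yields comparable convergence on both the soft and the stiff simulated object; a fixed-gain controller would be sluggish on one or aggressive on the other.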

Learning Gentle Grasping from Human-Free Force Control Demonstration

Sep 16, 2024

Abstract: Humans can steadily and gently grasp unfamiliar objects based on tactile perception. Robots still face challenges in achieving similar performance due to the difficulty of learning accurate grasp-force predictions and force control strategies that can be generalized from limited data. In this article, we propose an approach for learning grasping from ideal force control demonstrations, to achieve performance similar to that of human hands with limited data. Our approach utilizes objects with known contact characteristics to automatically generate reference force curves without human demonstrations. In addition, we design a dual convolutional neural network (Dual-CNN) architecture that incorporates a physics-based mechanics module for learning target grasping force predictions from demonstrations. The described method can be effectively applied in vision-based tactile sensors and enables gentle and stable grasping of objects from the ground. The described prediction model and grasping strategy were validated in offline evaluations and online experiments, and the accuracy and generalizability were demonstrated.

EasyCalib: Simple and Low-Cost In-Situ Calibration for Force Reconstruction with Vision-Based Tactile Sensors

Mar 15, 2024

Abstract: For elastomer-based tactile sensors, represented by visuotactile sensors, routine calibration of mechanical parameters (Young's modulus and Poisson's ratio) has been shown to be important for force reconstruction. However, existing in-situ calibration methods rely on accurate force measurements, which limits their cost-effective and flexible application. This article proposes a new in-situ calibration scheme that relies only on comparing contact deformation. Based on the detailed derivations of the normal contact and torsional contact theories, we designed a simple and low-cost calibration device, EasyCalib, and validated its effectiveness through extensive finite element analysis. We also explored the accuracy of EasyCalib in practical application and demonstrated that accurate reconstruction of the distributed contact force can be realized based on the mechanical parameters obtained. EasyCalib balances low hardware cost, ease of operation, and low dependence on technical expertise and is expected to provide the necessary accuracy guarantees for wide applications of visuotactile sensors in the wild.

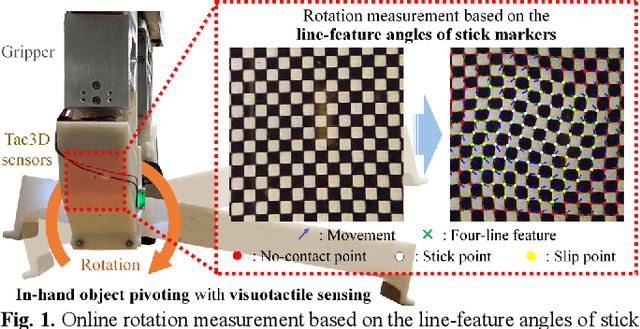

Incipient Slip-Based Rotation Measurement via Visuotactile Sensing During In-Hand Object Pivoting

Sep 14, 2023

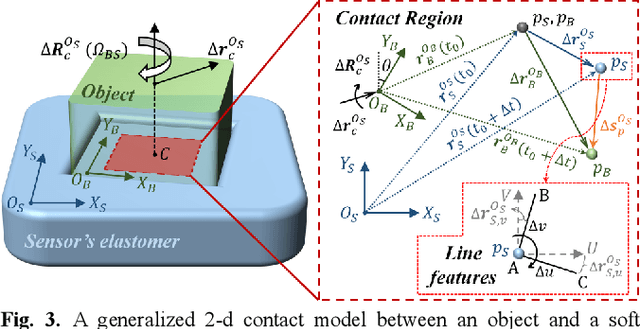

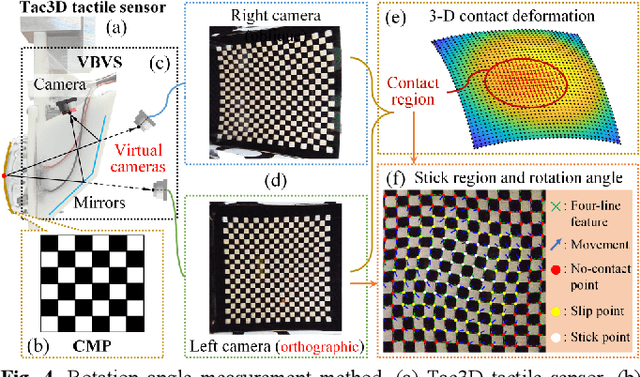

Abstract: In typical in-hand manipulation tasks represented by object pivoting, the real-time perception of rotational slippage has been proven beneficial for improving the dexterity and stability of robotic hands. An effective strategy is to obtain the contact properties for measuring rotation angle through visuotactile sensing. However, existing methods for rotation estimation do not consider the impact of incipient slip during the pivoting process, which introduces measurement errors and makes it hard to determine the boundary between stable contact and macro slip. This paper describes a generalized 2-D contact model under pivoting, and proposes a rotation measurement method based on the line features in the stick region. The proposed method was applied to the Tac3D vision-based tactile sensors using continuous marker patterns. Experiments show that the rotation measurement system could achieve an average static measurement error of 0.17 degrees and an average dynamic measurement error of 1.34 degrees. In addition, the proposed method requires no training data and can achieve real-time sensing during in-hand object pivoting.
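As a rough illustration of measuring rotation from a line feature, the sketch below compares the orientation of a set of 2-D points before and after pivoting. It is an assumption-laden toy, not the method from the paper: it presumes the points already lie in the stick region and belong to one line feature, and it fits the line orientation with the principal axis of the point covariance. The function names `line_orientation` and `rotation_between` are hypothetical.

```python
import math


def line_orientation(points):
    """Principal-axis orientation (radians) of 2-D points assumed to lie near one line."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mean_x) ** 2 for p in points)
    syy = sum((p[1] - mean_y) ** 2 for p in points)
    sxy = sum((p[0] - mean_x) * (p[1] - mean_y) for p in points)
    return 0.5 * math.atan2(2.0 * sxy, sxx - syy)


def rotation_between(points_before, points_after):
    """Signed rotation in degrees between two observations of the same line feature.

    A line has no direction, so orientations are only defined modulo 180 degrees;
    the difference is wrapped to the interval [-90, 90).
    """
    delta = line_orientation(points_after) - line_orientation(points_before)
    delta = (delta + math.pi / 2.0) % math.pi - math.pi / 2.0
    return math.degrees(delta)


def rotate(points, angle_deg):
    """Helper for the demo: rotate points about the origin."""
    c, s = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    return [(c * x - s * y, s * x + c * y) for x, y in points]


if __name__ == "__main__":
    before = [(float(i), 0.0) for i in range(-5, 6)]  # synthetic line along the x-axis
    after = rotate(before, 5.0)
    print(f"estimated rotation: {rotation_between(before, after):.3f} deg")
```

Restricting the fit to points in the stick region matters because points in the slip region move relative to the object and would bias the estimated angle.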
