Tibor Schneider

Sub-100 µW Multispectral Riemannian Classification for EEG-based Brain–Machine Interfaces

Dec 18, 2021

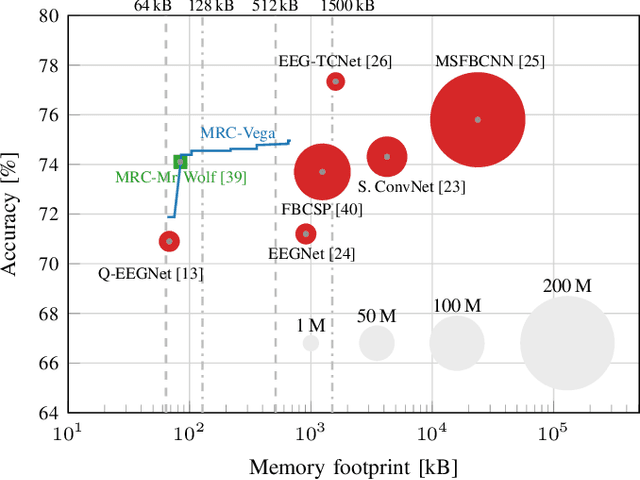

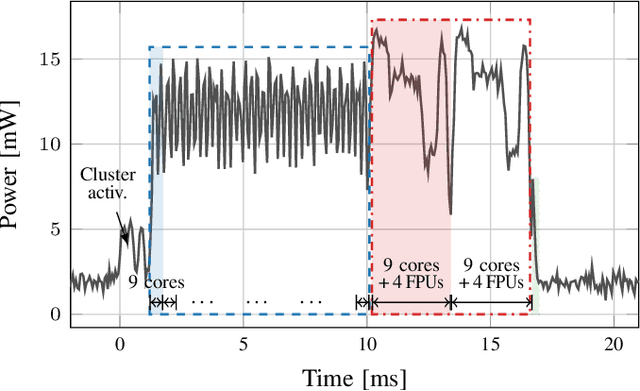

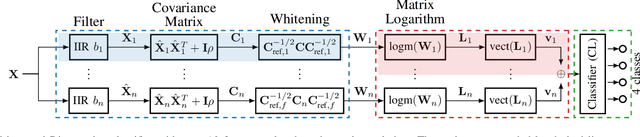

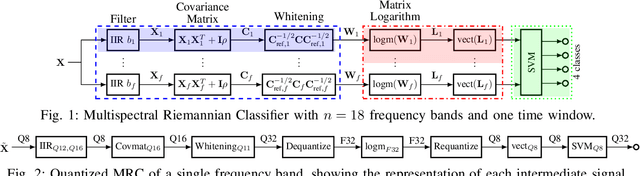

Abstract: Motor imagery brain–machine interfaces enable us to control machines by merely thinking of performing a motor action. Practical use cases require a wearable solution where the classification of the brain signals is done locally near the sensor using machine learning models embedded on energy-efficient microcontroller units, for assured privacy, user comfort, and long-term usage. In this work, we provide practical insights on the accuracy-cost trade-off for embedded BMI solutions. Our multispectral Riemannian classifier reaches 75.1% accuracy on a 4-class MI task. The accuracy is further improved by tuning different types of classifiers to each subject, achieving 76.4%. We further scale down the model by quantizing it to mixed-precision representations with a minimal accuracy loss of 1% and 1.4%, respectively, which is still up to 4.1% more accurate than the state-of-the-art embedded convolutional neural network. We implement the model on a low-power MCU within an energy budget of merely 198 µJ, taking only 16.9 ms per classification. Classifying samples continuously, with the 3.5 s samples overlapping by 50% to avoid missing user inputs, allows for operation at just 85 µW. Compared to related works in embedded MI-BMIs, our solution sets the new state of the art in terms of accuracy-energy trade-off for near-sensor classification.
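The timing figures in this abstract imply how rarely the MCU has to be active. The following is a minimal sketch of that arithmetic, using only the window length, overlap, and latency quoted above; the function names are illustrative, and the sketch does not attempt to reproduce the reported 85 µW figure, whose full accounting is not given in the abstract.

```python
# Figures quoted in the abstract above.
WINDOW_S = 3.5        # length of one EEG sample window, in seconds
OVERLAP = 0.5         # fraction shared by consecutive windows
LATENCY_S = 16.9e-3   # compute time per classification, in seconds


def hop_interval(window_s: float, overlap: float) -> float:
    """Time between the starts of two consecutive overlapping windows."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return window_s * (1.0 - overlap)


def duty_cycle(latency_s: float, interval_s: float) -> float:
    """Fraction of time spent computing when classifying once per interval."""
    return latency_s / interval_s


interval = hop_interval(WINDOW_S, OVERLAP)
active = duty_cycle(LATENCY_S, interval)

print(f"one classification every {interval:.2f} s")
print(f"compute duty cycle: {active:.2%}")
```

With these numbers a classification is due every 1.75 s and the compute duty cycle is just under 1%, so the device can spend most of its time in a low-power state.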

Mixed-Precision Quantization and Parallel Implementation of Multispectral Riemannian Classification for Brain–Machine Interfaces

Feb 22, 2021

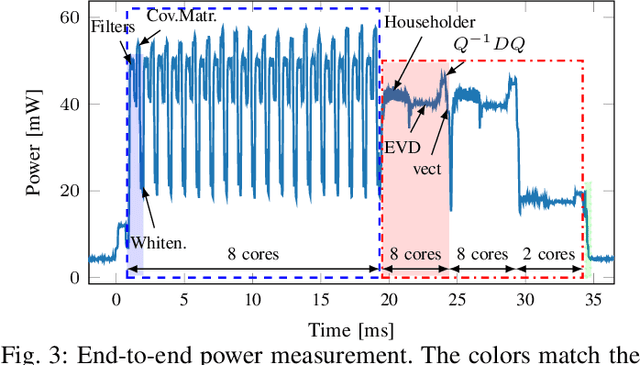

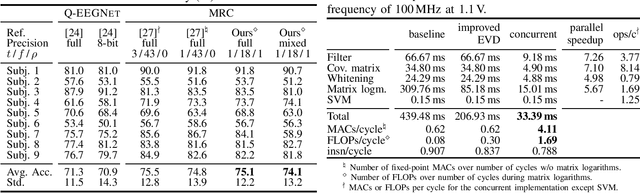

Abstract: With Motor-Imagery (MI) Brain–Machine Interfaces (BMIs), we may control machines by merely thinking of performing a motor action. Practical use cases require a wearable solution where the classification of the brain signals is done locally near the sensor using machine learning models embedded on energy-efficient microcontroller units (MCUs), for assured privacy, user comfort, and long-term usage. In this work, we provide practical insights on the accuracy-cost tradeoff for embedded BMI solutions. Our proposed Multispectral Riemannian Classifier reaches 75.1% accuracy on a 4-class MI task. We further scale down the model by quantizing it to mixed-precision representations with a minimal accuracy loss of 1%, which is still 3.2% more accurate than the state-of-the-art embedded convolutional neural network. We implement the model on a low-power MCU with parallel processing units, taking only 33.39 ms and consuming 1.304 mJ per classification.
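To illustrate why a mixed-precision representation can trade memory against accuracy, here is a generic sketch of symmetric uniform quantization at two bit widths. It is not the authors' quantization scheme: the helper names and the sample values are hypothetical and chosen only to show how the reconstruction error shrinks as the bit width grows.

```python
def quantize(values, n_bits):
    """Symmetric uniform quantization of floats to signed n_bits integers."""
    q_max = 2 ** (n_bits - 1) - 1
    peak = max(abs(v) for v in values)
    scale = peak / q_max if peak > 0 else 1.0
    # Round to the nearest integer code and clamp to the representable range.
    codes = [max(-q_max, min(q_max, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point values."""
    return [c * scale for c in codes]


def max_abs_error(values, n_bits):
    """Largest reconstruction error after a quantize/dequantize round trip."""
    codes, scale = quantize(values, n_bits)
    restored = dequantize(codes, scale)
    return max(abs(v - r) for v, r in zip(values, restored))


# Hypothetical feature values, not taken from the paper.
features = [0.82, -0.31, 0.05, -0.97, 0.44, 0.0061]

for bits in (8, 16):
    print(f"{bits:2d}-bit max abs error: {max_abs_error(features, bits):.2e}")
```

In a mixed-precision design, stages that tolerate coarse values can use the narrow format while numerically sensitive stages keep the wider one; which stage gets which width is a design decision this sketch does not model.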

Q-EEGNet: an Energy-Efficient 8-bit Quantized Parallel EEGNet Implementation for Edge Motor-Imagery Brain–Machine Interfaces

Apr 24, 2020

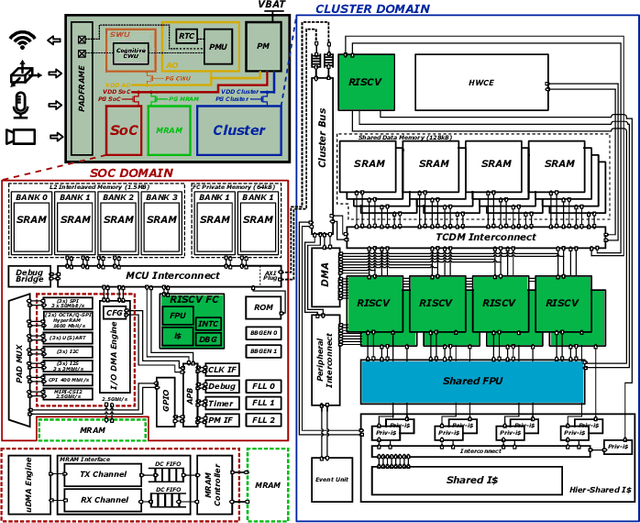

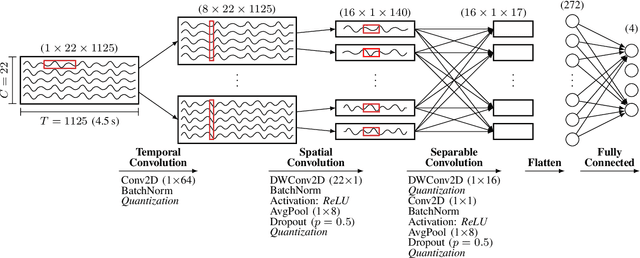

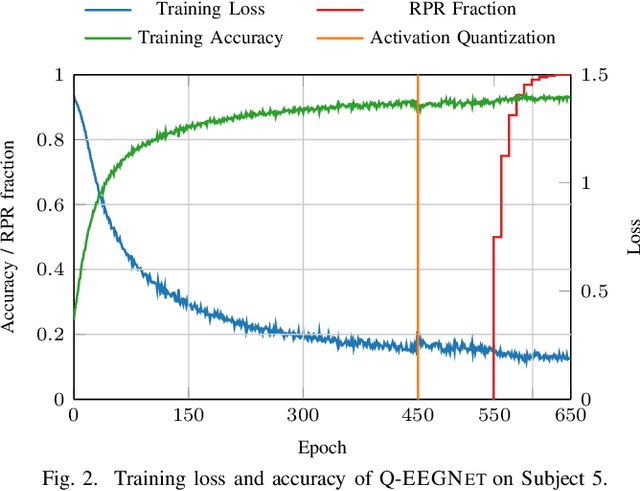

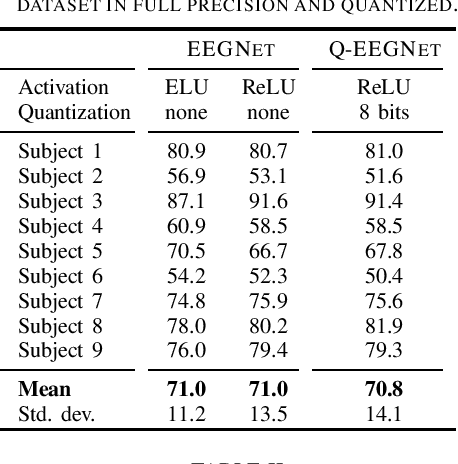

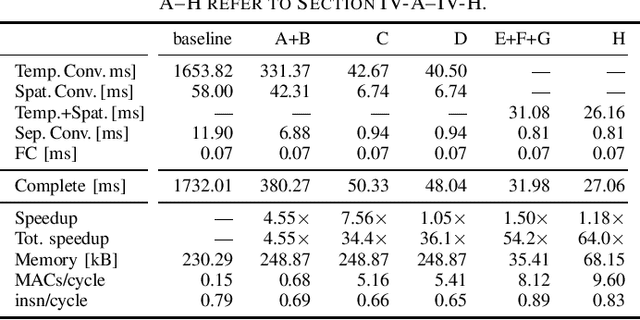

Abstract: Motor-Imagery Brain-Machine Interfaces (MI-BMIs) promise direct and accessible communication between human brains and machines by analyzing brain activities recorded with Electroencephalography (EEG). Latency, reliability, and privacy constraints make offloading the computation to the cloud unsuitable. Practical use cases demand a wearable, battery-operated device with a low average power consumption for long-term use. Recently, sophisticated algorithms, in particular deep learning models, have emerged for classifying EEG signals. While reaching outstanding accuracy, these models often exceed the limitations of edge devices due to their memory and computational requirements. In this paper, we demonstrate algorithmic and implementation optimizations for EEGNET, a compact Convolutional Neural Network (CNN) suitable for many BMI paradigms. We quantize weights and activations to 8-bit fixed-point with a negligible accuracy loss of 0.2% on 4-class MI, and present an energy-efficient hardware-aware implementation on the Mr.Wolf parallel ultra-low power (PULP) System-on-Chip (SoC) by utilizing its custom RISC-V ISA extensions and 8-core compute cluster. With our proposed optimization steps, we obtain an overall speedup of 64x and a reduction of up to 85% in memory footprint with respect to a single-core layer-wise baseline implementation. Our implementation takes only 5.82 ms and consumes 0.627 mJ per inference. With 20.692 GMAC/s/W, it is 252x more energy-efficient than an EEGNET implementation on an ARM Cortex-M7 (0.082 GMAC/s/W).
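The efficiency comparison in this abstract can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted above; the implied operation count assumes that the efficiency figure and the per-inference energy refer to the same operating point, which the abstract does not state explicitly.

```python
# Figures quoted in the abstract above.
ENERGY_J = 0.627e-3      # energy per inference, in joules
LATENCY_S = 5.82e-3      # time per inference, in seconds
EFF_MRWOLF = 20.692      # energy efficiency on Mr.Wolf, in GMAC/s/W
EFF_CORTEX_M7 = 0.082    # energy efficiency on the ARM Cortex-M7, in GMAC/s/W

# Average power drawn while an inference is running.
avg_power_w = ENERGY_J / LATENCY_S

# Ratio of the two efficiency figures.
efficiency_ratio = EFF_MRWOLF / EFF_CORTEX_M7

# GMAC/s/W is the same unit as GMAC/J, so efficiency times energy gives the
# number of multiply-accumulate operations implied per inference.
implied_macs = EFF_MRWOLF * 1e9 * ENERGY_J

print(f"average power during inference: {avg_power_w * 1e3:.1f} mW")
print(f"efficiency ratio: {efficiency_ratio:.0f}x")
print(f"implied work per inference: {implied_macs / 1e6:.1f} MMAC")
```

The ratio comes out at about 252x, matching the abstract, and the average power while computing is roughly 108 mW.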
