Sub-100 µW Multispectral Riemannian Classification for EEG-based Brain–Machine Interfaces

Dec 18, 2021

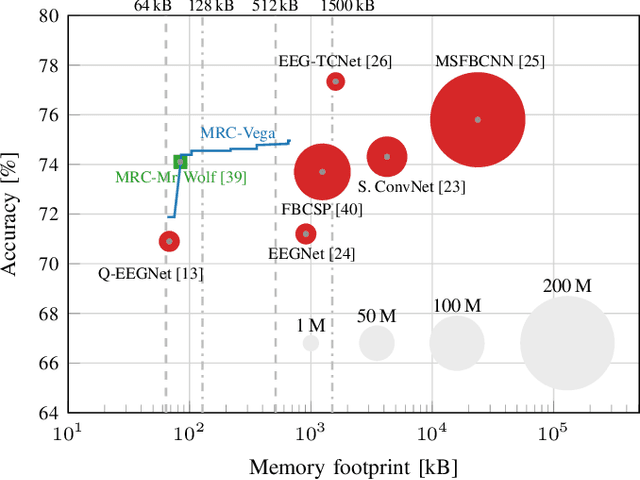

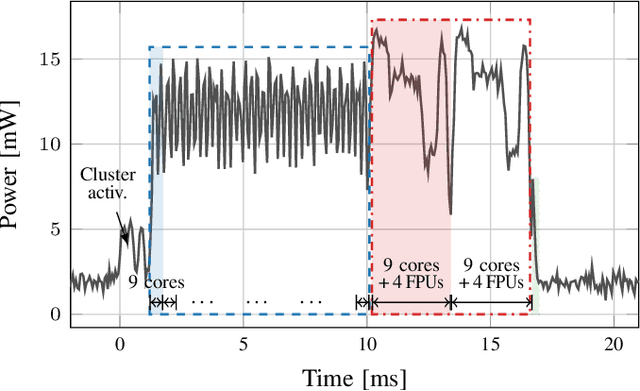

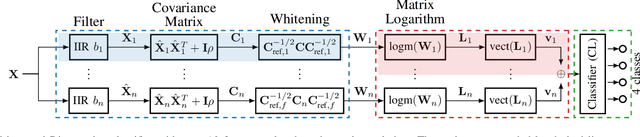

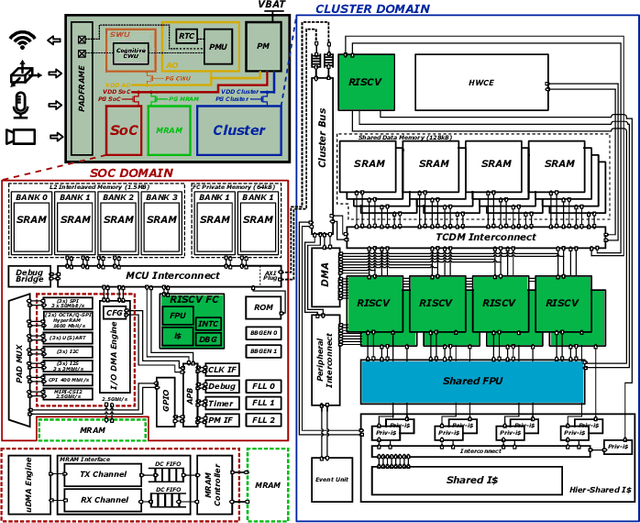

Motor imagery (MI) brain–machine interfaces (BMIs) let users control machines merely by imagining a motor action. Practical use requires a wearable solution in which brain signals are classified locally, near the sensor, by machine learning models embedded on energy-efficient microcontroller units (MCUs), ensuring privacy, user comfort, and long-term usability. In this work, we provide practical insights into the accuracy-cost trade-off for embedded BMI solutions. Our multispectral Riemannian classifier reaches 75.1% accuracy on a 4-class MI task. Tuning different types of classifiers to each subject improves accuracy further, to 76.4%. We then scale down the models by quantizing them to mixed-precision representations, with a minimal accuracy loss of 1% and 1.4%, respectively; the quantized models are still up to 4.1% more accurate than the state-of-the-art embedded convolutional neural network. We implement the model on a low-power MCU, where each classification takes only 16.9 ms and consumes merely 198 µJ. Classifying continuously, with consecutive 3.5 s samples overlapping by 50% so that no user input is missed, allows operation at just 85 µW. Compared to related work on embedded MI-BMIs, our solution sets a new state of the art in the accuracy-energy trade-off for near-sensor classification.
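To make the continuous-classification schedule concrete, here is a minimal sketch of how 3.5 s windows with 50% overlap could be laid out over a sample stream, and how small a share of each interval the 16.9 ms classification occupies. The sampling rate `FS_HZ` and the helper `window_starts` are assumptions made for illustration only. Neither is stated in the abstract, and this is not the authors' implementation.

```python
WINDOW_S = 3.5          # window length, from the abstract
OVERLAP = 0.5           # 50% overlap between windows, from the abstract
INFERENCE_S = 16.9e-3   # time per classification, from the abstract
FS_HZ = 250             # ASSUMPTION: EEG sampling rate, not given in the abstract


def window_starts(n_samples, fs_hz=FS_HZ, window_s=WINDOW_S, overlap=OVERLAP):
    """Return the start index of every full window in a stream of n_samples.

    Hypothetical helper: consecutive windows are shifted by a hop of
    (1 - overlap) * window length, truncated to whole samples.
    """
    win = round(window_s * fs_hz)        # samples per window
    hop = int(win * (1.0 - overlap))     # samples between window starts
    return list(range(0, n_samples - win + 1, hop))


# Time between two consecutive classifications.
hop_s = WINDOW_S * (1.0 - OVERLAP)

# Fraction of each hop interval the MCU spends classifying.
duty_cycle = INFERENCE_S / hop_s

# Window start indices for a 10 s stream at the assumed sampling rate.
starts = window_starts(10 * FS_HZ)

if __name__ == "__main__":
    print(f"hop between classifications: {hop_s} s")
    print(f"classification duty cycle:   {duty_cycle:.2%}")
    print(f"window starts (samples):     {starts}")
```

Under these assumptions a new classification is due every 1.75 s, so the MCU is busy classifying for under 1% of the time and can spend the rest in a low-power state.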
